
Last updated: August 22, 2020

In this tutorial, we’ll study the problem of sentiment analysis in natural language processing.

We’ll also identify some training datasets that we can use to develop prototypes of our models.

At the end of this tutorial, we’ll know where to find common datasets for sentiment analysis, and how to use them for simple natural language processing.

The problem of analyzing sentiments in human speech is studied by natural language processing, cognitive science, affective psychology, computational linguistics, and communication studies. Each of these disciplines adds its own perspective to our understanding of a phenomenon, the relationship between language and human emotions, that is as common as it is mysterious.

In our article on emotion detection in texts, we discussed how it’s unclear whether a unique mapping between language and emotions exists. If it doesn’t, as is suspected, then the problem of identifying and processing emotions through language can’t be solved algorithmically. This, in turn, means that we can’t successfully apply machine learning to it.

However, machine learning does perform sentiment analysis in many commonly used applications. Examples include the identification of negative feedback in user comments, the detection of changes in political support for candidates, and the prediction of stock market prices on the basis of social media data.

How, then, is it possible to use machine learning in practice, when we’re theoretically confident that it can’t solve the problem of sentiment analysis in general?

The theory of artificial intelligence distinguishes between narrow and general tasks. While general cognitive problems remain unsolved, machine learning tends to perform exceedingly well on narrow tasks.

We can similarly distinguish between general and narrow tasks when working on emotions. The current theoretical understanding suggests that the identification of sentiments can’t be solved in general. However, narrowly defined tasks can still be solved with great accuracy.

We also discussed how there’s generally no agreement regarding the ontology of emotions that regulate the human psyche. As a consequence of this, there can’t be any agreement on the ontology to use for the identification of emotions in texts either. However, if we arbitrarily presume that a given ontology applies to a problem, then we can solve that problem through machine learning.

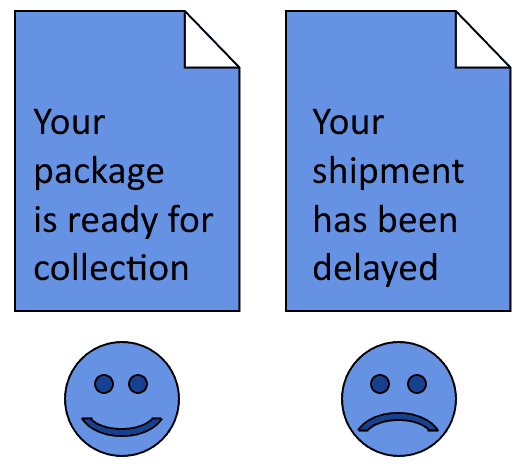

The typical ontology comprises two classes: positive and negative sentiments. The unit of analysis, whether text, word, or sentence, is then univocally assigned to one of those classes:

The definition of what “positive” and “negative” mean may, however, change greatly from one author to another. It follows that several methodologies for sentiment analysis exist, each arising from a narrow definition of what sentiments are in a given context. The better our definition frames a particular problem, the better machine learning will work on it.

This also means that not all training datasets are equal. A dataset developed under a particular methodology won’t work well if we apply it to a different context. Selecting a dataset whose theoretical assumptions match those of our problem is therefore extraordinarily important.

For this reason, we’re going to discuss here the most common datasets for sentiment analysis, along with the circumstances under which they were originally developed. We’ll also study examples of their use in the scientific literature, in order to understand what possibilities each of them opens up.

In our introductory article on emotion detection, we listed some public datasets that we can use to develop a base model. Here, we list a different set, with a more thorough description of the features and scientific uses of each.

We’ll start by listing the most common datasets for supervised learning in sentiment analysis. They’re all particularly suitable for developing machine learning models that classify texts according to a predetermined typology.

The MPQA Opinion Corpus comprises 70 annotated documents that correspond to news items published in the English-language press. It uses a specific annotation scheme that comprises the following tags or labels:

The two tags, expressive and direct subjectivity, also record the polarity assigned to the particular sentence to which they refer. This dataset is particularly suitable for training models that learn both explicit and implicit expressions of sentiment towards particular entities. It has also been used to train deep learning models for sentiment analysis and, more generally, for opinion mining.

The Sentiment 140 dataset contains an impressive 1,600,000 tweets from various English-speaking users, and it’s suitable for developing models for sentiment classification. The name comes, of course, from the 140-character limit that defined the original Twitter messages.

This dataset comprises automatically tagged messages, marked as “positive” or “negative” according to whether they contain, respectively, the emoticon :) or :(. This automatic approach to tagging, although common, has known limitations, most notably its blindness to irony.
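To make the tagging rule concrete, here’s a minimal Python sketch of emoticon-based labeling. The emoticon sets and the function names `emoticon_label` and `strip_emoticons` are hypothetical illustrations, not the exact rules used to build Sentiment 140; tweets with no emoticon, or with conflicting ones, are simply discarded.

```python
from typing import Optional

# Illustrative emoticon sets (an assumption, not the dataset's exact lists)
POSITIVE_EMOTICONS = {":)", ":-)", ":D"}
NEGATIVE_EMOTICONS = {":(", ":-("}


def emoticon_label(tweet: str) -> Optional[str]:
    """Return 'positive' or 'negative', or None if the tweet can't be labeled."""
    tokens = set(tweet.split())
    has_pos = bool(tokens & POSITIVE_EMOTICONS)
    has_neg = bool(tokens & NEGATIVE_EMOTICONS)
    if has_pos and not has_neg:
        return "positive"
    if has_neg and not has_pos:
        return "negative"
    return None  # no emoticon, or conflicting ones: discard the tweet


def strip_emoticons(tweet: str) -> str:
    """Remove the labeling emoticons so a model can't just learn the rule."""
    emoticons = POSITIVE_EMOTICONS | NEGATIVE_EMOTICONS
    return " ".join(tok for tok in tweet.split() if tok not in emoticons)


print(emoticon_label("Great service today :)"))                # positive
print(emoticon_label("Oh great, my flight is late again :)"))  # positive, despite the irony
print(strip_emoticons("Great service today :)"))               # Great service today
```

The second call shows the blindness to irony: a sarcastic complaint still receives a positive label. Stripping the emoticons before training is a sensible precaution, since otherwise a model could simply memorize the labeling rule.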

The features of the dataset are:

Sentiment 140 has proved particularly useful for training Maximum Entropy models. The scientific literature also shows its use with Naive Bayesian models. Furthermore, it has been used to analyze the population’s attitude towards pandemics, modeled by means of support vector machines.
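As an illustration of one of the model families mentioned above, here’s a minimal multinomial Naive Bayes classifier with add-one smoothing, written from scratch in Python. The class name, the whitespace tokenization, and the four toy tweets are assumptions made for the example; on the real dataset we’d train on the labeled tweets instead.

```python
import math
from collections import Counter


class NaiveBayes:
    """Multinomial Naive Bayes with add-one (Laplace) smoothing."""

    def fit(self, texts, labels):
        self.classes = sorted(set(labels))
        self.class_counts = Counter(labels)
        self.word_counts = {c: Counter() for c in self.classes}
        for text, label in zip(texts, labels):
            self.word_counts[label].update(text.lower().split())
        # Vocabulary shared by all classes, needed for the smoothing denominator
        self.vocab = set()
        for counts in self.word_counts.values():
            self.vocab.update(counts)
        self.totals = {c: sum(self.word_counts[c].values()) for c in self.classes}
        return self

    def log_posterior(self, text, c):
        """Unnormalized log P(c | text) = log P(c) + sum of log P(token | c)."""
        n_docs = sum(self.class_counts.values())
        score = math.log(self.class_counts[c] / n_docs)
        denominator = self.totals[c] + len(self.vocab)
        for token in text.lower().split():
            if token in self.vocab:  # tokens never seen in training are ignored
                score += math.log((self.word_counts[c][token] + 1) / denominator)
        return score

    def predict(self, text):
        return max(self.classes, key=lambda c: self.log_posterior(text, c))


# Toy training data standing in for the labeled tweets
texts = ["i love this phone", "what a great day", "i hate waiting", "this is awful"]
labels = ["positive", "positive", "negative", "negative"]
nb = NaiveBayes().fit(texts, labels)
print(nb.predict("love this great day"))  # positive
print(nb.predict("i hate this"))          # negative
```

Working in log space avoids numerical underflow when multiplying many small probabilities, which matters for longer texts.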

The Paper Reviews dataset contains 405 reviews, in Spanish and English, of papers submitted to an international conference on computer science. The number of papers they refer to is considerably smaller, since it’s common in scientific publishing to assign at least two reviewers to each paper. The dataset itself is in JSON format and contains the following features:

The Paper Reviews dataset has been used to train hybrid models that include swarm optimization. It’s also suitable for general classification and regression tasks, given that the evaluation score is a numerical ordinal value. It should also be useful, though it isn’t yet well exploited, for studying the relationship between emotions, objectivity, and scores in the peer-review process.

One common belief in science is that the peer-review process is generally fair and equitable. This belief is, however, questionable, especially in relation to known human biases concerning gender, institutional prestige, and, most importantly for us in natural language processing, language. This dataset is, therefore, particularly suited to analyzing human bias and its role in the publication of scientific discoveries.

Another popular dataset of reviews, in this case of movies, is the Large Movie Review Dataset. It contains 50,000 highly polarized reviews, divided into training and test sets. It’s particularly suitable for binary classification, and it comprises just two features:

This dataset has been used to train hybrid supervised-unsupervised learning models, as well as support vector classifiers, naive Bayesian classifiers, and combinations of neural networks and k-nearest neighbors. A large collection of notebooks containing classification models for this dataset is available on Kaggle.
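Of the techniques listed, k-nearest neighbors is the simplest to sketch on its own. The following Python example assumes bag-of-words vectors compared by cosine similarity and six made-up reviews; it labels a new review by majority vote among its `k` most similar training reviews. It only illustrates the idea and doesn’t reproduce the setup of any of the cited studies.

```python
import math
from collections import Counter


def bag_of_words(text):
    """Term-frequency vector of a whitespace-tokenized, lowercased text."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two sparse term-frequency vectors."""
    dot = sum(count * b[term] for term, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def knn_predict(train, text, k=3):
    """Label `text` by majority vote among its k most similar (text, label) pairs."""
    query = bag_of_words(text)
    ranked = sorted(train, key=lambda pair: cosine(query, bag_of_words(pair[0])), reverse=True)
    votes = Counter(label for _, label in ranked[:k])
    return votes.most_common(1)[0][0]


# Made-up reviews standing in for the real training split
train = [
    ("a brilliant and moving film", "pos"),
    ("brilliant acting and a great plot", "pos"),
    ("a moving story with great music", "pos"),
    ("a dull and boring film", "neg"),
    ("boring plot and terrible acting", "neg"),
    ("terrible music and a dull story", "neg"),
]
print(knn_predict(train, "a brilliant moving story"))  # pos
print(knn_predict(train, "boring and terrible film"))  # neg
```

An odd `k` avoids ties in binary classification; on 50,000 real reviews we’d also want an index structure rather than a full sort per query.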

The Stanford Sentiment Treebank is a corpus of texts used in the paper Deeply Moving: Deep Learning for Sentiment Analysis. The dataset comprises 10,605 texts extracted from Rotten Tomatoes, a website that specializes in movie reviews. It contains the following features:

The Stanford Sentiment Treebank has been used to train support vector classifiers and deep learning models. It also inspired the development of similar datasets for other languages, such as the Arabic Sentiment Treebank.

This dataset for multi-domain sentiment analysis was initially developed by the University of Pennsylvania from Amazon product reviews scraped from the website. The products belong to four categories: electronics, books, kitchen utensils, and DVDs. Each review carries a polarity label, “positive” or “negative”, corresponding respectively to more than three stars or fewer than three stars out of a maximum of five.
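The labeling rule reduces to a small function. In this Python sketch the name `stars_to_polarity` is hypothetical; since the rule only covers ratings above or below three stars, three-star reviews receive no label.

```python
from typing import Optional


def stars_to_polarity(stars: int) -> Optional[str]:
    """Map a 1-5 star rating to 'positive' (> 3) or 'negative' (< 3).

    Three-star reviews fall under neither rule, so they get no label (None).
    """
    if not 1 <= stars <= 5:
        raise ValueError(f"rating must be between 1 and 5, got {stars}")
    if stars > 3:
        return "positive"
    if stars < 3:
        return "negative"
    return None


print([stars_to_polarity(s) for s in range(1, 6)])
# ['negative', 'negative', None, 'positive', 'positive']
```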

Both an unprocessed and a preprocessed version of the reviews are available. The latter comes already tokenized into unigrams and bigrams. The features of the preprocessed version are:
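The following Python sketch shows what tokenization into unigrams and bigrams amounts to. Joining bigrams with an underscore is a formatting assumption for illustration; the exact layout of the dataset’s preprocessed files isn’t described here, and `ngram_features` is a hypothetical name.

```python
from collections import Counter


def ngram_features(text: str) -> Counter:
    """Count unigrams and bigrams of a whitespace-tokenized, lowercased text.

    Bigrams are joined with '_' (a formatting assumption for this sketch).
    """
    tokens = text.lower().split()
    features = Counter(tokens)
    features.update(f"{a}_{b}" for a, b in zip(tokens, tokens[1:]))
    return features


features = ngram_features("Not good not good at all")
print(features["not_good"])  # 2
```

Bigrams such as `not_good` let a bag-of-features model pick up short negations that unigrams alone would miss.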

The positive and negative classes contain 1,000 elements each. Unlabeled data is also present, in the form of 3,685 reviews for DVDs and 5,945 for kitchen utensils. Unlabeled data can help us compare the predictions of different models on previously unseen examples.
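Without gold labels we can’t compute accuracy on the unlabeled reviews, but we can still measure how much two models agree. The Python sketch below uses Cohen’s kappa, a standard chance-corrected agreement statistic; choosing kappa is our own assumption, not something the dataset prescribes, and the two prediction lists are made up.

```python
from collections import Counter


def cohen_kappa(preds_a, preds_b):
    """Chance-corrected agreement between two equally long label sequences."""
    if len(preds_a) != len(preds_b) or not preds_a:
        raise ValueError("need two non-empty sequences of equal length")
    n = len(preds_a)
    observed = sum(a == b for a, b in zip(preds_a, preds_b)) / n
    # Agreement expected by chance, from each model's label frequencies
    freq_a, freq_b = Counter(preds_a), Counter(preds_b)
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if expected == 1:
        return 1.0  # both models always predict the same single class
    return (observed - expected) / (1 - expected)


model_a = ["pos", "pos", "neg", "neg"]
model_b = ["pos", "neg", "neg", "neg"]
print(cohen_kappa(model_a, model_b))  # 0.5
```

A kappa close to 1 means the models behave alike on unseen data; a low value flags the reviews on which at least one of them is likely wrong.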

The dataset has found ample use in the literature on sentiment analysis. For example, a joint sentiment-topic model proved useful in learning the factors that predict the emotional connotation of a review. Naive Bayesian models and sequential minimal optimization, a training algorithm for support vector machines, have also classified the texts of this dataset successfully.

The Pros and Cons Dataset relates to the task of sentence-level opinion mining. It contains around 23,000 sentences expressing positive and negative judgments and is meant to be used together with the Comparative Sentences dataset. The dataset is suitable for two uses:

The papers in the scientific literature that leverage this dataset fall into two categories: model development, and extension of automatic polarity classification to languages other than English.

In the first category, this dataset proved effective for automated speech processing. For this task, the dataset provides the polarity labels that an audio-processing model can use to determine the sentiment of user speech. Its companion dataset, Comparative Sentences, found a similar use in attributing sentiment to YouTube videos.

In the second category, the dataset inspired the creation of a corpus of polarized sentences in Norwegian, as well as a multi-lingual corpus for deep sentiment analysis. Multi-lingual sentiment analysis is notoriously difficult because the expression of sentiment is language-dependent, and using this dataset together with datasets in other languages can help address this problem.

The Opinosis Opinion Dataset comprises user reviews of products and services, grouped by topic. It covers 51 different topics related to products and services reviewed on Amazon, TripAdvisor, and Edmunds. For each topic, there are about 100 distinct sentences, which mostly relate to electronics, hotels, or cars.

All sentences are divided into tokens, which are then augmented with parts-of-speech tags. Since it lacks polarity labels, the dataset is especially suited to text summarization. Used in conjunction with a sentiment lexicon, though, it also allows supervised sentiment analysis, as was the case for all the previous datasets.

The advantage of the Opinosis Opinion Dataset lies in its parts-of-speech tags. Studies suggest that a model using both adjectives and adverbs outperforms one that uses adjectives alone, and we need parts-of-speech tags to tell the two groups apart. This dataset, therefore, allows the construction of sentiment analysis models that use both parts-of-speech tags and lexica.
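The following Python sketch shows how parts-of-speech tags let us compare an adjectives-only model with an adjectives-and-adverbs one. It assumes tokens in the `word/TAG` form with Penn Treebank tags and uses a made-up four-word lexicon; both the input format and the scores are assumptions for illustration.

```python
# Toy polarity lexicon: words and scores are illustrative assumptions.
LEXICON = {"great": 1.0, "poor": -1.0, "quickly": 0.5, "badly": -0.5}

# Penn Treebank tags for adjectives and adverbs
ADJ_TAGS = {"JJ", "JJR", "JJS"}
ADV_TAGS = {"RB", "RBR", "RBS"}


def parse_tagged(sentence):
    """Split 'word/TAG' tokens into (word, tag) pairs."""
    pairs = []
    for token in sentence.split():
        word, _, tag = token.rpartition("/")  # rpartition keeps slashes inside words
        pairs.append((word.lower(), tag))
    return pairs


def pos_filtered_score(sentence, tags):
    """Sum the lexicon scores of the tokens whose tag belongs to `tags`."""
    return sum(LEXICON.get(word, 0.0) for word, tag in parse_tagged(sentence) if tag in tags)


sentence = "the/DT screen/NN is/VBZ great/JJ but/CC it/PRP works/VBZ badly/RB"
print(pos_filtered_score(sentence, ADJ_TAGS))             # 1.0
print(pos_filtered_score(sentence, ADJ_TAGS | ADV_TAGS))  # 0.5
```

The adverb “badly” lowers the overall score, a signal that an adjectives-only model would miss entirely.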

Another dataset originating from Twitter is the Twitter US Airlines Dataset, which comprises messages about the quality of service of American airlines. The dataset contains these features:

In the scientific literature, the dataset is used for classification tasks in general and, more specifically, for support vector machines, AdaBoost, and ensemble approaches that combine the predictions of multiple algorithms.

Interestingly, some US airlines represented in this dataset react to negative customer feedback on Twitter surprisingly quickly. This suggests that they might themselves have adopted a system for detecting negative polarity in user tweets.

One last note concerns the application of unsupervised learning to sentiment analysis. We know that if we want to assign sentiment values to a text, we are indeed performing either a classification or a regression task, depending on whether we treat the labels as unordered categorical or numeric variables. The literature, however, also discusses methods for unsupervised sentiment analysis, though it’d be more accurate to call them semi-supervised.

One such method works as follows. We first select two words present in the dataset and assign them opposite polarity scores. If we use the words selected in the paper linked above, we can express this process as assigning $\text{polarity}(\text{excellent}) = +1$ and $\text{polarity}(\text{poor}) = -1$. This process is called “seeding”, by analogy with seeding a random number generator.

We can then use some measure of association to determine the polarity value of every other word in the dataset. The most common measure is mutual information, though variations of it are also used. However, the polarity score assigned to non-seed tokens appears to change drastically depending on which words we use as seeds, which casts doubt on the reliability of this methodology.
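A minimal Python sketch of seed-based scoring follows. It uses a pointwise mutual information variant in the style of Turney’s SO-PMI, $SO(w) = PMI(w, \text{excellent}) - PMI(w, \text{poor})$ with $PMI(a, b) = \log_2 \frac{p(a, b)}{p(a)\,p(b)}$, and it estimates the probabilities from document-level co-occurrence in a five-sentence toy corpus. The smoothing constant `eps`, the function names, and the corpus are assumptions for illustration.

```python
import math


def doc_freq(docs, *words):
    """Number of documents (sets of tokens) that contain all the given words."""
    return sum(all(w in doc for w in words) for doc in docs)


def semantic_orientation(word, corpus, pos_seed="excellent", neg_seed="poor", eps=0.01):
    """SO(w) = PMI(w, pos_seed) - PMI(w, neg_seed), from document co-occurrence.

    With counts over the same N documents, the difference simplifies to
    log2[(df(w, pos) * df(neg)) / (df(w, neg) * df(pos))]; `eps` avoids
    division by zero and log of zero (a smoothing assumption).
    """
    docs = [set(text.lower().split()) for text in corpus]
    numerator = (doc_freq(docs, word, pos_seed) + eps) * (doc_freq(docs, neg_seed) + eps)
    denominator = (doc_freq(docs, word, neg_seed) + eps) * (doc_freq(docs, pos_seed) + eps)
    return math.log2(numerator / denominator)


corpus = [
    "excellent food and friendly staff",
    "friendly waiter excellent wine",
    "poor food and rude staff",
    "rude waiter poor service",
    "friendly service",
]
for w in ["friendly", "rude", "food"]:
    print(w, round(semantic_orientation(w, corpus), 2))
```

Here “friendly” gets a positive score, “rude” a negative one, and “food”, which co-occurs equally with both seeds, a score of zero. Passing different words as `pos_seed` and `neg_seed` is an easy way to observe the seed sensitivity described above.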

From the description of this methodology, it follows that a text corpus containing words that a human tagger would label as polarized, such as “excellent” and “poor”, is suitable for unsupervised sentiment analysis. As a general rule, then, a dataset prepared for supervised sentiment analysis is equally appropriate for unsupervised analysis.

There’s, however, another method for unsupervised sentiment analysis, called the lexicon-based method. This method builds upon the idea that some words have an intrinsically positive or negative meaning, such as the words “positive” and “negative” themselves. If this is true, then we can build dictionaries that associate word tokens with polarity scores.

Lexica of this type are publicly available online. One of them is the VERY NEG VERY POS lexicon, which also contains the parts-of-speech tag of each word. Another is SO-CAL, which also includes weights for the polarized words and accounts for their negation.
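The lexicon-based method can be sketched in a few lines of Python. The lexicon below is a made-up toy, not VERY NEG VERY POS or SO-CAL, and the negation rule, which flips the sign of a polarized word when a negator appears shortly before it, is a deliberate simplification; SO-CAL itself treats negation in a more refined way.

```python
# Toy lexicon: words and integer scores are illustrative assumptions.
LEXICON = {"good": 2, "great": 3, "bad": -2, "awful": -3}
NEGATORS = {"not", "never", "no"}


def lexicon_score(text, window=2):
    """Sum word polarities, flipping the sign of a polarized word when a
    negator appears among the `window` tokens before it (a simplification)."""
    tokens = text.lower().split()
    total = 0
    for i, token in enumerate(tokens):
        if token not in LEXICON:
            continue
        value = LEXICON[token]
        if any(t in NEGATORS for t in tokens[max(0, i - window):i]):
            value = -value
        total += value
    return total


print(lexicon_score("the food was good"))               # 2
print(lexicon_score("the food was not good"))           # -2
print(lexicon_score("not bad but the wine was awful"))  # -1
```

A wider `window` catches more distant negators but also flips words it shouldn’t, which is why more careful systems rely on more elaborate rules.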

Lastly, there’s also an automatic method for developing a lexicon from a dataset. We can turn to it if no lexicon is readily available for the language we’re using, or if we’re unsure about the reliability of the available ones.
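One possible way to derive a lexicon automatically, sketched in Python below, scores each word by the smoothed log-ratio of its relative frequency in positive versus negative documents. This log-odds approach is our own illustrative choice, not necessarily the method referred to above, and it assumes we have some polarity-labeled documents; `build_lexicon`, `min_count`, and `alpha` are hypothetical names.

```python
import math
from collections import Counter


def build_lexicon(texts, labels, min_count=2, alpha=1.0):
    """Map each sufficiently frequent word to the smoothed log-ratio of its
    relative frequency in positive versus negative texts.

    Scores above zero lean positive, below zero negative.
    """
    pos, neg = Counter(), Counter()
    for text, label in zip(texts, labels):
        # Any label other than "positive" is counted as negative
        (pos if label == "positive" else neg).update(text.lower().split())
    vocab = {w for w in pos.keys() | neg.keys() if pos[w] + neg[w] >= min_count}
    # Add-alpha smoothing over the retained vocabulary
    pos_total = sum(pos[w] for w in vocab) + alpha * len(vocab)
    neg_total = sum(neg[w] for w in vocab) + alpha * len(vocab)
    return {
        w: math.log((pos[w] + alpha) / pos_total) - math.log((neg[w] + alpha) / neg_total)
        for w in vocab
    }


texts = ["great phone great battery", "great camera", "awful battery", "awful screen awful phone"]
labels = ["positive", "positive", "negative", "negative"]
lexicon = build_lexicon(texts, labels)
for word in sorted(lexicon):
    print(word, round(lexicon[word], 2))
```

In this toy run “great” scores positive, “awful” negative, and “battery” and “phone”, which appear equally in both classes, score zero; `min_count` drops rare words such as “camera”, whose scores would be unreliable.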

In this article, we studied the basics of the methodology for sentiment analysis.

We’ve also listed the most common public datasets for supervised sentiment analysis.

For each of them, we discussed the features they possess and their known use cases in the scientific literature.

Lastly, we described the basics of unsupervised sentiment analysis and identified datasets and lexica that help us work with it.