1. Introduction

In this tutorial, we’ll talk about node impurity in decision trees. A decision tree is a greedy algorithm we use for supervised machine learning tasks such as classification and regression.

2. Splitting in Decision Trees

Firstly, the decision tree nodes are split based on all the variables. During the training phase, the data are passed from a root node to leaves for training. A decision tree uses different algorithms to decide whether to split a node into two or more sub-nodes. The algorithm chooses the partition maximizing the purity of the split (i.e., minimizing the impurity). Informally, impurity is a measure of homogeneity of the labels at the node at hand:

There are different ways to define impurity. In classification tasks, we frequently use the Gini impurity index and Entropy.

3. Gini Impurity

Gini Index is related to the misclassification probability of a random sample.

Let’s assume that a dataset contains examples from

classes. Its Gini Index,

, is defined as:

(1)

where is the relative frequency of class

in

, i.e., the probability that a randomly selected object belongs to class

.

As we can observe from the above equation, Gini Index may result in values inside the interval . The minimum value of zero corresponds to a node containing the elements of the same class. In case this occurs, the node is called pure. The maximum value of 0.5 corresponds to the highest impurity of a node.

3.1. Example: Calculating Gini Impurity

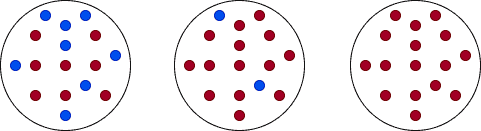

In this example, we’ll compute the Gini Indices for 3 different cases of a set with 4 balls of two different colors, red and blue:

- 4 red & 0 blue balls:

- 2 red & 2 blue balls:

- 3 red & 1 blue balls:

4. Entropy

Ιn statistics, entropy is a measure of information.

Let’s assume that a dataset associated with a node contains examples from

classes. Then, its entropy is:

(2)

where is the relative frequency of class

in

. Entropy takes values from

. As is the case with the Gini index, a node is pure when

takes its minimum value, zero, and impure when it takes its highest value, 1.

4.1. Example: Calculating Entropy

Let’s compute the entropies for the same examples as above:

- 4 red & 0 blue balls:

- 2 red & 2 blue balls:

- 3 red & 1 blue balls:

5. Splitting the Data

The quality of splitting data is very important during the training phase. When splitting, we choose to partition the data by the attribute that results in the smallest impurity of the new nodes.

We’ll show how to split the data using entropy and the Gini index.

5.1. Information Gain

The information gain is the difference between a parent node’s entropy and the weighted sum of its child node entropies.

Let’s assume a dataset with

objects is partitioned into two datasets:

and

of sizes

and

. Then, the split’s Information Gain (

) is:

(3)

In general, if splitting into

subsets

with

objects, respectively, the split’s Information Gain (

) is:

(4)

5.2. Example: Splitting by Information Gain

Let’s consider dataset , which shows if we’re going to play tennis or not based on the weather:

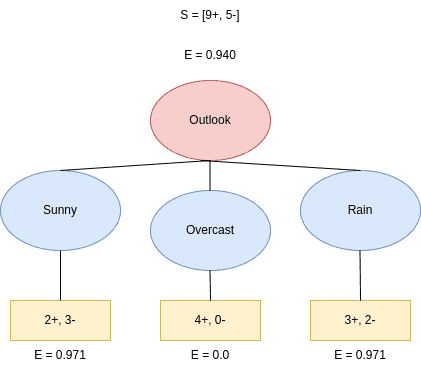

It contains 9 positive outcomes (Yes) and 5 negatives (No). So, the starting structure is . We want to determine the attribute that offers the highest Information Gain.

Let’s see what happens if we split by Outcome. Outlook has three different values: Sunny, Overcast, and Rain. As we see, the possible splits are and

(

and

stand for entropy and split):

Firstly, we calculate the entropies. They are: and

. Therefore, the Information Gain based on the Outlook,

, is:

(5)

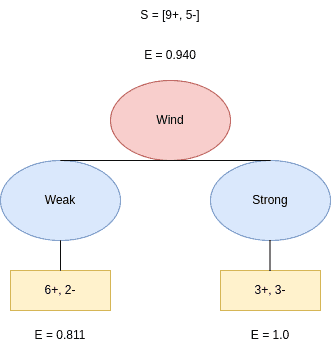

Next, we split the tree based on the Wind feature. It can take two values: Weak and Strong, with the possible splits and

:

The corresponding entropies are: and

. Therefore,

is:

(6)

Following this idea, we calculate the gains for Humidity and Temperature as well.

(7)

(8)

We see that the feature with the maximum Information Gain is Outlook. Thus, we conclude that Outlook is the best attribute to split the data at the tree’s root.

5.3. Example: Splitting by Gini Index

We can split the data by the Gini Index too. Let’s compute the required probabilities:

(9)

Out of the 14 days in the above example, Sunny, Overcast, and Rain occur 5, 4, and 5 times, respectively. Then, we compute the probabilities of a Sunny day and playing tennis or not. Out of the 5 times when Outlook=Sunny, we played tennis on 2 and didn’t play it on 3 days:

Having calculated the required probabilities, we can compute the Gini Index of Sunny:

We follow the same steps for Overcast and Rain:

Therefore, the weighted sum of the above Gini Indices is:

(10)

Similarly, we compute the Gini Index of Temperature, Humidity, and Wind:

(11)

We conclude that Outlook has the lowest Gini Index. Hence, we’ll choose it as the root node.

6. Conclusion

In this article, we talked about how we can compute the impurity of a node while training a decision tree. In particular, we talked about the Gini Index and entropy as common measures of impurity. By splitting the data to minimize the impurity scores of the resulting nodes, we get a precise tree.