1. Introduction

In this tutorial, we’ll look at CPU and I/O burst cycles. We’ll see what they are and discuss their role in performance optimization and how they impact the efficiency and responsiveness of a system.

2. CPU Bursts

At its core, a CPU burst is a period during which a process or program actively uses the Central Processing Unit (CPU) for computation. It’s when the CPU executes a sequence of instructions for a specific task. These bursts aren’t a problem in themselves. On the contrary, they’re essential for processing tasks and computations in virtually every application, from running operating systems to handling complex software.

CPU bursts have two typical characteristics – duration and variability.

CPU bursts can vary significantly in duration. Some may be short and intensive, while others may be long and less resource-demanding. Understanding the duration of CPU bursts helps in resource allocation and task scheduling.

The variability of CPU bursts is a critical factor in system performance. Burst patterns can be irregular, leading to unpredictable system behaviour. Managing this variability is essential for maintaining system stability.

Long CPU bursts are most common in CPU-intensive workloads. Applications that exhibit long CPU bursts include video rendering and transcoding, scientific simulations and modelling, compiling large software projects, and cryptographic operations.

Identifying the length and variability of CPU bursts is an important step in performance optimization. To do this effectively, we need tools and metrics. Profiling tools, such as `perf` on Linux, can help monitor CPU usage. Key metrics include CPU utilization, execution time, and context switches. Analyzing these metrics provides insights into how efficiently CPU resources are utilized.
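As a rough illustration of the execution time metric, the sketch below compares CPU time with wall-clock time for two hypothetical tasks. The helper names `measure`, `cpu_heavy`, and `io_like` are made up for this example, and `time.sleep` only stands in for a real blocking operation:

```python
import time

def measure(task):
    """Run task() and return (cpu_seconds, wall_seconds)."""
    wall_start = time.perf_counter()
    cpu_start = time.process_time()
    task()
    cpu_used = time.process_time() - cpu_start
    wall_used = time.perf_counter() - wall_start
    return cpu_used, wall_used

def cpu_heavy():
    # Pure computation: the process keeps the CPU busy the whole time.
    sum(i * i for i in range(2_000_000))

def io_like():
    # Assumption: sleeping stands in for a blocking wait; it uses almost no CPU.
    time.sleep(0.2)

if __name__ == "__main__":
    for task in (cpu_heavy, io_like):
        cpu, wall = measure(task)
        print(f"{task.__name__}: cpu={cpu:.3f}s wall={wall:.3f}s")
```

When CPU time is close to wall-clock time, the task was dominated by a CPU burst. When it is much smaller, the task spent most of its time waiting.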

3. I/O Bursts

In contrast to CPU bursts, I/O bursts involve Input/Output operations. During an I/O burst, a process or program waits for data to be read from or written to external devices, such as disks or network resources. I/O bursts are common in scenarios where data needs to be fetched from or delivered to external sources.

I/O bursts also have two typical characteristics, waiting time and I/O operation type:

- I/O bursts are characterized by the time a process waits for data to be retrieved or stored. This waiting time can vary widely, depending on the speed of the storage medium and the complexity of the I/O operation. For example, it’s much faster to bring data from a cache memory than to go all the way to a hard drive.

- I/O bursts can involve various types of operations, including disk I/O (reading/writing files), network I/O (sending/receiving data over a network), and more. Each type has its unique characteristics and challenges.

As we’d expect, some workloads are more I/O-intensive and, therefore, spend more of their time in I/O bursts. Examples include database operations, where data is retrieved from or stored on disk, web servers handling numerous client requests, and video streaming, where data is constantly read from storage (or the network).

To optimize I/O performance, it’s crucial to measure and analyze I/O bursts. Tools like `iostat` and `sar` on Linux provide insights into disk I/O. Metrics include disk throughput, I/O queue length, and response times. By monitoring these metrics, system administrators can identify and address performance bottlenecks.

4. Relationship Between CPU and I/O Bursts

In many real-world scenarios, CPU and I/O bursts are interconnected. For example, a web server may experience CPU bursts while processing requests and I/O bursts when reading or writing data to storage. Balancing the utilization of CPU and I/O resources is essential for optimizing system performance.

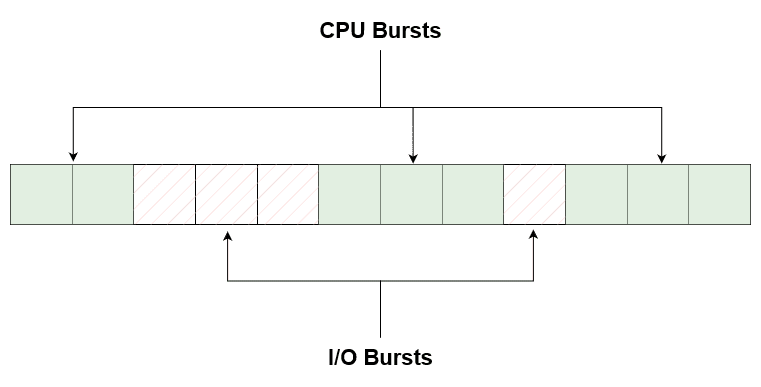

In the figure below, we can see how one process or program on a system changes between CPU and I/O utilization while running:

How many of these bursts a program exhibits and how long they last depend on the workload.

Efficiently managing both CPU and I/O-intensive workloads involves strategic resource allocation. Techniques like task scheduling and parallel processing can help balance these workloads. For example, multi-threading allows CPU-bound threads to continue executing while other threads wait for I/O operations to complete.

Real-world scenarios where this relationship plays a critical role in the performance of systems include the following:

- Database management – database systems often face a delicate balance between CPU-intensive query processing and I/O-bound data retrieval. Optimizations, such as indexing and caching, are used to minimize the impact of bursts on database performance.

- Web server performance – web servers encounter bursts of incoming requests that require CPU processing. Additionally, serving static assets like images or videos involves I/O bursts. Efficient request handling and caching strategies are crucial in maintaining responsive web services.

4.1. Strategies for Handling Bursts

Let’s take a look at strategies for handling long CPU and I/O bursts.

For CPU bursts, a big part of resource allocation is task scheduling. Operating systems use scheduling algorithms to manage CPU bursts. Prioritizing tasks based on their urgency and resource requirements ensures fair resource allocation.
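To see why the order in which bursts are scheduled matters, here is a minimal sketch that compares two textbook policies, first come, first served (FCFS) and shortest job first (SJF). The burst lengths are hypothetical, all processes are assumed to arrive at time 0, and real schedulers are considerably more involved:

```python
def average_waiting_time(bursts):
    """Average time each process waits when bursts run in the given order."""
    waited = 0
    elapsed = 0
    for burst in bursts:
        waited += elapsed   # this process waited for everything before it
        elapsed += burst
    return waited / len(bursts)

bursts_ms = [24, 3, 3]  # hypothetical CPU burst lengths in milliseconds

fcfs = average_waiting_time(bursts_ms)          # run in arrival order
sjf = average_waiting_time(sorted(bursts_ms))   # run shortest bursts first
print(fcfs, sjf)  # 17.0 3.0
```

Running the short bursts first cuts the average wait from 17 ms to 3 ms in this example, which is why schedulers care about burst length.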

Parallel processing is equally important and can greatly shorten CPU-bound work. To fully utilize multi-core processors, we can split a task into parts that run on different cores. This enables simultaneous execution of CPU-bound operations, reducing the impact of long bursts.
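As one possible sketch of this idea, we can split a CPU-bound job into chunks and hand them to a pool of worker processes. The prime-counting task and the helper names `count_primes`, `split_range`, and `parallel_count` are assumptions made for illustration, and the actual speed-up depends on the number of available cores:

```python
from concurrent.futures import ProcessPoolExecutor

def count_primes(start, stop):
    """Count primes in [start, stop) by trial division (deliberately CPU-bound)."""
    count = 0
    for n in range(max(start, 2), stop):
        if all(n % d for d in range(2, int(n ** 0.5) + 1)):
            count += 1
    return count

def split_range(stop, parts):
    """Split [0, stop) into contiguous (start, stop) chunks; assumes stop > 0."""
    step = -(-stop // parts)  # ceiling division
    return [(lo, min(lo + step, stop)) for lo in range(0, stop, step)]

def parallel_count(stop, workers=4):
    """Count primes below stop, one chunk per worker process."""
    chunks = split_range(stop, workers)
    starts, stops = zip(*chunks)
    with ProcessPoolExecutor(max_workers=workers) as pool:
        return sum(pool.map(count_primes, starts, stops))

if __name__ == "__main__":
    print(parallel_count(100_000))
```

We use processes rather than threads here because, in CPython, threads don't run Python bytecode in parallel for CPU-bound code.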

On the other hand, we have I/O bursts. Caching is probably the most effective strategy with regard to I/O bursts. Caching frequently accessed data in memory can reduce I/O bursts. This approach minimizes the need to retrieve data from slower storage devices. Caches in critical systems can have many layers to help optimize data retrieval times.
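A minimal sketch of in-memory caching, using Python's `functools.lru_cache`. The `read_from_storage` function is hypothetical, and its `time.sleep` call only stands in for disk or network latency:

```python
import time
from functools import lru_cache

calls_to_storage = 0

def read_from_storage(key):
    """Hypothetical slow read; the sleep simulates storage latency."""
    global calls_to_storage
    calls_to_storage += 1
    time.sleep(0.05)
    return f"value-for-{key}"

@lru_cache(maxsize=128)
def cached_read(key):
    # Only cache misses reach the slow storage layer.
    return read_from_storage(key)

for key in ["a", "b", "a", "a", "b"]:
    cached_read(key)

print(calls_to_storage)  # 2: only the first read of each key reached storage
print(cached_read.cache_info())
```

Out of five reads, three are served from memory, so the process goes through two I/O waits instead of five.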

Another solution is asynchronous I/O. With asynchronous I/O, a process starts an I/O operation and continues executing other work instead of blocking until the operation completes. This way, systems can maximize CPU utilization and responsiveness.
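The sketch below illustrates the idea with Python's `asyncio`. The `fetch` coroutine is hypothetical, and `asyncio.sleep` stands in for a real network or disk wait:

```python
import asyncio
import time

async def fetch(name, delay):
    """Hypothetical I/O operation; the sleep simulates waiting on a device."""
    await asyncio.sleep(delay)
    return name

async def main():
    start = time.perf_counter()
    # The three waits overlap instead of running one after another.
    results = await asyncio.gather(
        fetch("a", 0.2), fetch("b", 0.2), fetch("c", 0.2)
    )
    elapsed = time.perf_counter() - start
    return results, elapsed

if __name__ == "__main__":
    results, elapsed = asyncio.run(main())
    print(results, f"{elapsed:.2f}s")
```

Because the waits overlap, the total time stays close to the longest single wait (about 0.2 seconds) rather than the sum of all three.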

4.2. Techniques for Predicting Bursts

Since bursts have such a strong effect on system performance, engineers have developed techniques to predict them. System engineers can use these techniques to predict what kind of bursts to expect, when to expect them, and how long they’ll last.

On the one hand, we have predictive analytics. In predictive analytics, we use historical data, such as previously observed burst lengths, together with statistical models to predict bursts. This helps in proactive resource allocation and workload optimization.

On the other hand, with the recent emergence of machine learning and AI, machine learning models are increasingly used for burst prediction. Advanced machine learning algorithms can analyze system metrics in real time and predict impending bursts. These models can trigger adaptive resource allocation strategies. Using machine learning for system optimization is a recent trend in computer architecture.
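Before reaching for a learned model, a simple history-based predictor can serve as a baseline. A common textbook choice is exponential averaging, which estimates the next CPU burst as a weighted mix of the last observed burst and the previous estimate. In the sketch below, the function name, the `alpha` weight, the initial guess, and the burst history are all illustrative assumptions:

```python
def predict_next_burst(observed, alpha=0.5, initial_guess=10.0):
    """Exponential average: estimate = alpha * actual + (1 - alpha) * estimate."""
    estimate = initial_guess
    for actual in observed:
        # Recent bursts count more; older history decays geometrically.
        estimate = alpha * actual + (1 - alpha) * estimate
    return estimate

history_ms = [6, 4, 6, 4, 13, 13, 13]  # hypothetical observed CPU bursts
print(predict_next_burst(history_ms))  # 12.0
```

A larger `alpha` makes the prediction react faster to recent bursts, while a smaller one smooths out short-lived spikes.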

5. Future Trends and Challenges

We’re living through the rise of cloud computing and high-performance computing. Machine learning model training and computationally intensive AI platforms keep growing. As a result, performance optimization is becoming more and more relevant.

The field of burst management continues to evolve, and optimizing bursts – especially in large-scale systems – matters more than ever. Emerging technologies such as edge computing, serverless computing, and advanced storage solutions influence how we handle bursts in modern computing environments.

As systems become more complex and interconnected, new challenges emerge. Managing bursts in distributed systems, ensuring security during burst handling, and optimizing for energy efficiency are some of the evolving challenges in this field. Research into burst prediction, resource allocation algorithms, and efficient caching strategies remains active.

6. Conclusion

In this article, we saw why understanding CPU and I/O bursts matters. These bursts, whether they involve intensive CPU computation or waiting for I/O operations, have a profound impact on system performance.

By employing the right tools, strategies, and predictive techniques, we can optimize our systems to handle bursts efficiently. While technology continues to evolve, managing CPU and I/O bursts will remain critical to maintaining responsive and high-performance computing environments.