1. Overview

In most cases, keeping an application in a consistent state is more important than keeping it running.

Without correct handling, an application can sometimes carry on in an incorrect state after an OutOfMemoryError. In this tutorial, we’ll learn how to explicitly stop the application when this error occurs.

2. OutOfMemoryError

OutOfMemoryError is external to an application and is unrecoverable, at least in most cases. The name of the error suggests that the application doesn’t have enough RAM, which isn’t entirely correct. More precisely, it means the application cannot allocate the requested amount of memory.

In a single-threaded application, the situation is quite simple. If we follow the guidelines and don’t catch OutOfMemoryError, the application will terminate. This is the expected way of dealing with this error.

There might be some specific cases when it’s reasonable to catch OutOfMemoryError. Also, we can have some even more specific ones where it might be reasonable to proceed after it. However, in most situations, OutOfMemoryError means the application should be stopped.
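As an illustration of one such specific case, here is a minimal sketch in which catching the error may be reasonable: a single large allocation fails before any shared state is touched, so retrying with a smaller size is safe. The class BufferAllocation and the method allocateWithFallback are hypothetical names introduced for this example, and the allocator is passed in as a parameter only to keep the sketch easy to exercise:

```java
import java.util.function.IntFunction;

class BufferAllocation {

    // Hypothetical helper: try the preferred size first and, if that single
    // allocation fails, retry once with a smaller size.
    static byte[] allocateWithFallback(IntFunction<byte[]> allocator, int preferredSize, int fallbackSize) {
        try {
            return allocator.apply(preferredSize);
        } catch (OutOfMemoryError e) {
            // The failed allocation didn't modify any state, so a smaller
            // request is a safe retry. A second failure propagates as usual.
            return allocator.apply(fallbackSize);
        }
    }
}
```

A call could look like BufferAllocation.allocateWithFallback(byte[]::new, 64 * 1024 * 1024, 1024 * 1024). This only makes sense when the failed operation is isolated; it isn’t a general recovery strategy.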

3. Multithreading

Multithreading is an integral part of most modern applications. Threads follow a Las Vegas rule regarding exceptions: what happens in threads stays in threads. This isn’t always true, but we can consider it the general behavior.

Thus, even the most severe errors in a thread won’t propagate to the main application unless we handle them explicitly. Let’s consider the following example of a memory leak:

    // Keeps every allocated array reachable, so the heap eventually fills up
    public static final Runnable MEMORY_LEAK = () -> {
        List<byte[]> list = new ArrayList<>();
        while (true) {
            list.add(tenMegabytes());
        }
    };

    private static byte[] tenMegabytes() {
        return new byte[1024 * 1024 * 10];
    }

If we run this code in a separate thread, the application won’t fail:

    @Test
    void givenMemoryLeakCode_whenRunInsideThread_thenMainAppDoesntFail() throws InterruptedException {
        Thread memoryLeakThread = new Thread(MEMORY_LEAK);
        memoryLeakThread.start();
        memoryLeakThread.join();
    }

This happens because all the data that causes OutOfMemoryError is connected to the thread. When the thread dies, the List loses its garbage collection root and can be collected. Thus, the data that caused OutOfMemoryError in the first place is removed with the thread’s death.

If we run this code several times, the application doesn’t fail:

    @Test
    void givenMemoryLeakCode_whenRunSeveralTimesInsideThread_thenMainAppDoesntFail() throws InterruptedException {
        for (int i = 0; i < 5; i++) {
            Thread memoryLeakThread = new Thread(MEMORY_LEAK);
            memoryLeakThread.start();
            memoryLeakThread.join();
        }
    }

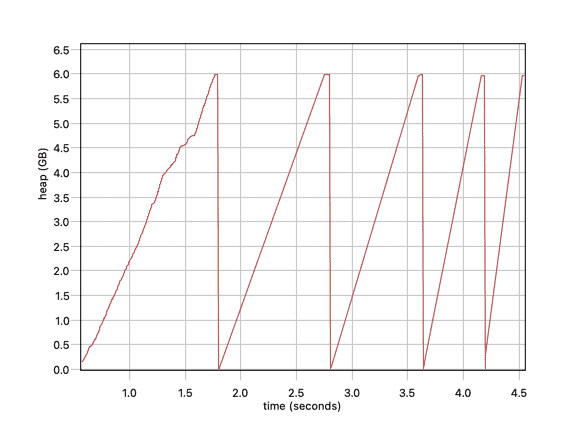

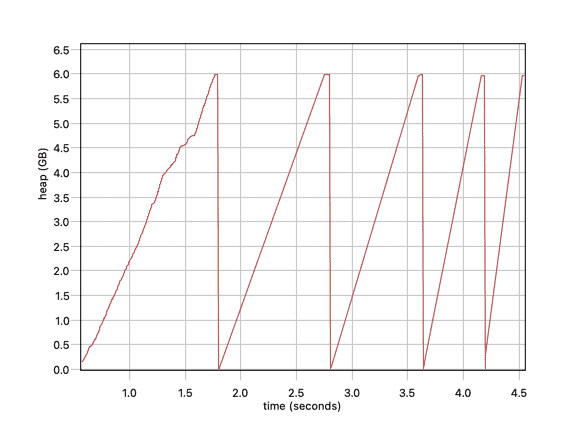

At the same time, garbage collection logs show the following situation:

In each iteration, we deplete 6 GB of available RAM, kill the thread, run garbage collection, remove the data, and proceed. We get a heap rollercoaster that doesn’t do any useful work, but the application won’t fail.

At the same time, we can see the error in the logs. In some cases, ignoring OutOfMemoryError is reasonable. We don’t want to kill an entire web server because of a bug or a user exploit.

Also, the behavior in an actual application might differ. Threads may be interconnected and share additional resources, so any thread can throw OutOfMemoryError. It’s an asynchronous exception, which means it isn’t tied to a specific line of code. However, the application will still run if OutOfMemoryError doesn’t happen in the main application thread.
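One way to handle a thread’s failure explicitly is an uncaught exception handler. The following is a minimal sketch rather than a definitive implementation: the class WorkerFailureTracking and the method runAndCaptureFailure are hypothetical names introduced for this example, while Thread.setUncaughtExceptionHandler() is the standard API. It reports whatever Throwable escaped the worker back to the calling thread:

```java
import java.util.concurrent.atomic.AtomicReference;

class WorkerFailureTracking {

    // Runs the task in a new thread and returns the Throwable that escaped it,
    // or null if the task finished normally.
    static Throwable runAndCaptureFailure(Runnable task) {
        AtomicReference<Throwable> failure = new AtomicReference<>();
        Thread worker = new Thread(task);
        // The handler runs in the dying worker thread, before join() returns
        worker.setUncaughtExceptionHandler((thread, error) -> failure.set(error));
        worker.start();
        try {
            worker.join();
        } catch (InterruptedException e) {
            // Restore the interrupt flag and return what we have so far
            Thread.currentThread().interrupt();
        }
        return failure.get();
    }
}
```

The caller can then decide what to do with the result, for example, stop the application when the returned value is an OutOfMemoryError.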

4. Killing the JVM

In some applications, the threads perform crucial work and must do it reliably. In such cases, it’s better to stop everything, then investigate and resolve the problem.

Imagine that we’re processing a huge XML file with historical banking data. We load chunks into memory, compute, and write results to disk. The example can be more sophisticated, but the main idea is that sometimes we rely heavily on the transactionality and correctness of the processes in the threads.

Luckily, the JVM treats OutOfMemoryError as a special case, and we can exit or crash the JVM on OutOfMemoryError using the following parameters:

-XX:+ExitOnOutOfMemoryError

-XX:+CrashOnOutOfMemoryError

The application will be stopped if we run our examples with any of these arguments. This would allow us to investigate the problem and check what’s happening.
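Since forgetting these arguments silently changes how the application behaves, a startup check can be useful. Below is a minimal sketch, assuming the flags are passed directly on the command line; OomFlagCheck and its methods are hypothetical names, while RuntimeMXBean.getInputArguments() is the standard API. Flags supplied in other ways, or disabled again later in the argument list, may not be detected correctly by this simple check:

```java
import java.lang.management.ManagementFactory;
import java.util.List;

class OomFlagCheck {

    // True if the given JVM arguments contain one of the two flags
    static boolean stopsOnOutOfMemory(List<String> jvmArguments) {
        return jvmArguments.contains("-XX:+ExitOnOutOfMemoryError")
          || jvmArguments.contains("-XX:+CrashOnOutOfMemoryError");
    }

    // Applies the same check to the arguments of the running JVM
    static boolean currentJvmStopsOnOutOfMemory() {
        return stopsOnOutOfMemory(ManagementFactory.getRuntimeMXBean().getInputArguments());
    }
}
```

An application could log a warning at startup when currentJvmStopsOnOutOfMemory() returns false.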

The difference between these options is that -XX:+CrashOnOutOfMemoryError produces a crash dump:

    #
    # A fatal error has been detected by the Java Runtime Environment:
    #
    # Internal Error (debug.cpp:368), pid=69477, tid=39939
    # fatal error: OutOfMemory encountered: Java heap space
    #
    ...

It contains information that we can use for analysis. To make this process easier, we can also create a heap dump and investigate it further. The -XX:+HeapDumpOnOutOfMemoryError option does this automatically on OutOfMemoryError.

We can also create a thread dump for multithreaded applications. There’s no dedicated argument for it. However, we can write a script that captures the dump, for example with jstack, and use the -XX:OnOutOfMemoryError option to run it when the error occurs.

If we want to treat other exceptions similarly, we must use Futures to ensure that the threads finish their work as intended. Wrapping an exception into OutOfMemoryError to avoid implementing correct inter-thread communication is a terrible idea:

    @Test
    void givenBadExample_whenUseItInProductionCode_thenQuestionedByEmployerAndProbablyFired()
      throws InterruptedException {
        Thread npeThread = new Thread(() -> {
            String nullString = null;
            try {
                nullString.isEmpty();
            } catch (NullPointerException e) {
                throw new OutOfMemoryError(e.getMessage());
            }
        });
        npeThread.start();
        npeThread.join();
    }
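For comparison, the Future-based approach mentioned above could look like the following minimal sketch. FutureFailurePropagation and runAndPropagate are hypothetical names introduced for this example, and wrapping the cause in IllegalStateException is an assumption made only to keep the sketch short. The point is that Future.get() surfaces the worker’s failure in the calling thread:

```java
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

class FutureFailurePropagation {

    // Runs the task on a worker thread and rethrows its failure in the caller
    static <T> T runAndPropagate(Callable<T> task) {
        ExecutorService executor = Executors.newSingleThreadExecutor();
        try {
            Future<T> future = executor.submit(task);
            // get() blocks until the task completes and reports its failure
            return future.get();
        } catch (ExecutionException e) {
            // The original exception from the worker is available as the cause
            throw new IllegalStateException("Worker failed", e.getCause());
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("Interrupted while waiting for worker", e);
        } finally {
            executor.shutdown();
        }
    }
}
```

With this in place, a NullPointerException thrown inside the worker reaches the caller as the cause of the rethrown exception, and no error type needs to be misused.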

5. Conclusion

In this article, we discussed how OutOfMemoryError often puts an application in an incorrect state. Although we can recover from it in some cases, in general we should consider killing and restarting the application.

Single-threaded applications don’t require any additional handling of OutOfMemoryError. Multithreaded code, however, needs additional analysis and configuration to ensure the application will exit or crash.

As usual, all the code is available over on GitHub.