
Random Variables

Last updated: June 28, 2024

1. Introduction

In this tutorial, we’ll explain random variables.

2. Background

Let’s say that is the set of all possible outcomes of a random process we’re analyzing. We call

the sample space. For instance, when tossing a coin, there are two outcomes: head (

) and tail (

), so

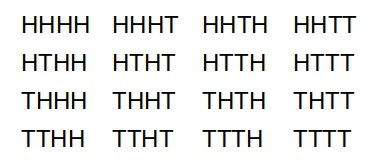

. Similarly, when flipping a coin 4 times in a row, there are

outcomes in the sample space:

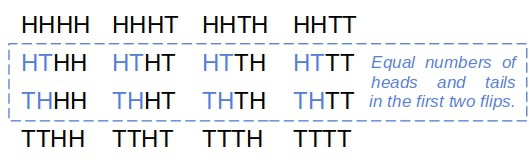

An event is any subset of $\Omega$. For example, getting exactly one head in four flips is the event $\{HTTT, THTT, TTHT, TTTH\}$.

If we define a probability $P$ telling us how likely each event is, we get a probability space. More precisely, $P$ maps the events defined over $\Omega$ to $[0, 1]$. That’s where random variables come into play.

2.1. Random Variables

Usually, we’re interested in the numerical values the events represent or can be assigned. For example, if we toss a coin 100 times, we may be interested only in the number of heads and not the exact sequence of $H$s and $T$s.

Intuitively speaking, random variables are numerical interpretations of events. Those numerical values aren’t arbitrary. They represent precisely those quantities we’re interested in.

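So, a random variable $X$ maps outcomes to numbers: $X \colon \Omega \to \mathbb{R}$. Using the events’ probability $P$, we derive the probability $P_X$ with which $X$ takes values from $\mathbb{R}$. For a set of values $A \subseteq \mathbb{R}$:

$$P_X(A) = P(\{\omega \in \Omega : X(\omega) \in A\})$$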

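For example, if our coin is fair, each outcome in $\Omega$ is equally likely (with probability $1/16$). If we define $X$ as the number of heads in four flips, we get this $P_X$:

| $x$ | 0 | 1 | 2 | 3 | 4 |
|---|---|---|---|---|---|
| $P(X = x)$ | 1/16 | 4/16 | 6/16 | 4/16 | 1/16 |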
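The table can be checked by brute force. The following Python sketch, assuming a fair coin, enumerates the 16 outcomes, applies $X$ (the number of heads) to each one, and adds up the probabilities of the outcomes that land on the same value. The names `omega`, `X`, and `pmf` are illustrative choices, not part of the original text.

```python
from collections import Counter
from fractions import Fraction
from itertools import product

# Sample space: all 2**4 = 16 sequences of four coin flips.
omega = ["".join(flips) for flips in product("HT", repeat=4)]

# Assumption: a fair coin, so every outcome is equally likely.
p_outcome = Fraction(1, len(omega))

def X(outcome):
    """Random variable: maps an outcome (a sequence of flips) to its number of heads."""
    return outcome.count("H")

# P(X = x) is the total probability of all outcomes that X maps to x.
pmf = Counter()
for outcome in omega:
    pmf[X(outcome)] += p_outcome

for x in sorted(pmf):
    print(x, pmf[x])
```

`Fraction` reduces automatically, so 4/16 prints as 1/4 and 6/16 as 3/8.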

There are two main types of random variables: discrete and continuous.

3. Discrete Variables

We say that $X$ is discrete if it takes values from a countable set with probability 1, that is, if the non-zero probabilities of its individual values add up to 1.

For instance, if $\Omega$ is finite, $X$ is a discrete random variable. But a variable can take infinitely many values and still be discrete.

3.1. Countability

Let’s say we’re flipping a coin until we get two heads in a row. We can get $HH$ in the first two flips, but there may be a sequence of 100 $T$s before we get the first $H$. In fact, there’s no upper bound on how long we may have to wait, since there’s always a non-zero chance to get a $T$ after an $H$.

So, if our random variable $X$ represents the number of tosses until we get two $H$s in a row, the set of its values, $\mathcal{R}_X$, will be infinite:

$$\mathcal{R}_X = \{2, 3, 4, \ldots\}$$

However, it’s still countable! That means we can list its elements in a sequence, pairing each one with a natural number. Since each value has a non-zero probability and these probabilities add up to 1, we say that $X$ is discrete.
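A brute-force Python sketch can make this countable support tangible. Assuming a fair coin, it enumerates all $2^n$ sequences of length $n$ and computes $P(X = n)$ exactly as the share of sequences whose first $HH$ is completed at toss $n$. The helper names are hypothetical.

```python
from fractions import Fraction
from itertools import product

def first_hh_toss(seq):
    """Return the 1-based toss index that completes the first HH, or None if there is none."""
    for i in range(1, len(seq)):
        if seq[i - 1] == "H" and seq[i] == "H":
            return i + 1
    return None

def pmf_tosses_until_hh(n):
    """P(X = n) for a fair coin: the share of length-n sequences whose first HH ends at toss n."""
    hits = sum(1 for seq in product("HT", repeat=n) if first_hh_toss(seq) == n)
    return Fraction(hits, 2 ** n)

# Every n >= 2 gets a positive probability, so the support {2, 3, 4, ...} is infinite.
for n in range(2, 9):
    print(n, pmf_tosses_until_hh(n))

# The partial sums approach 1 as we include more values of n.
print(float(sum(pmf_tosses_until_hh(n) for n in range(2, 16))))
```

Enumeration costs $2^n$ steps per value, so this only works for small $n$. The counts of qualifying sequences it finds (1, 1, 2, 3, 5, ...) are Fibonacci numbers.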

3.2. The Probability Mass Function

Mathematically speaking, the probability $P_X$ is defined over subsets of $\mathbb{R}$, just as the probability $P$ is defined over subsets of $\Omega$. The function mapping individual values $x$ of $X$ to their probabilities is known as the probability mass function (PMF), $p_X$.

The distinction is mostly technical since we can define one using the other. Here’s how we get the PMF from $P_X$:

$$p_X(x) = P_X(\{x\}) = P(X = x)$$

and vice versa:

$$P_X(A) = \sum_{x \in A} p_X(x)$$
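The two directions of the conversion translate directly into code. This Python sketch uses the PMF of the number of heads in four fair flips, $p_X(x) = \binom{4}{x}/2^4$, and two hypothetical helpers: one sums the PMF over a set of values, the other evaluates a set-based probability on a single value.

```python
from fractions import Fraction
from math import comb

# PMF of X = number of heads in four fair flips: p_X(x) = C(4, x) / 2**4.
pmf = {x: Fraction(comb(4, x), 2 ** 4) for x in range(5)}

def prob_of_set(pmf, values):
    """P_X(A): add up the PMF over the values in A (values outside the support contribute 0)."""
    return sum((pmf.get(x, Fraction(0)) for x in values), Fraction(0))

def pmf_from_prob(prob, x):
    """p_X(x) = P_X({x}): the PMF is P_X evaluated on a single-value set."""
    return prob({x})

print(prob_of_set(pmf, {0, 2, 4}))                        # P(X is even): 1/2
print(pmf_from_prob(lambda A: prob_of_set(pmf, A), 3))    # p_X(3): 1/4
```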

3.3. The Cumulative Distribution Function

Let $x$ be any real number. The cumulative distribution function (CDF) of $X$ is defined as:

$$F_X(x) = P(X \le x)$$

For discrete variables, we calculate the CDF by summing individual probabilities:

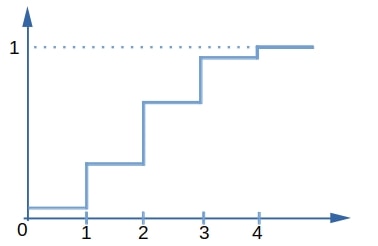

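In our example with 4 tosses and $X$ denoting the number of heads, $F_X(x)$ shows us the probability of getting $x$ or fewer heads:

| $x$ | 0 | 1 | 2 | 3 | 4 |
|---|---|---|---|---|---|
| $F_X(x)$ | 1/16 | 5/16 | 11/16 | 15/16 | 1 |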

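As we see, plotting $F_X(x)$ against sorted values $x$ reveals a non-decreasing staircase function. The probability that $X$ takes a value greater than $a$ and at most $b$ isn’t an area under the CDF but the amount by which the CDF rises between $a$ and $b$. We calculate it as follows:

$$P(a < X \le b) = F_X(b) - F_X(a)$$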
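Here’s a small Python sketch of both steps for the four-flip example, assuming a fair coin: the CDF is a running sum of the PMF, and an interval probability is a difference of two CDF values. The function names are illustrative.

```python
from fractions import Fraction
from math import comb

# PMF of X = number of heads in four fair flips.
pmf = {x: Fraction(comb(4, x), 16) for x in range(5)}

def cdf(x):
    """F_X(x) = P(X <= x): sum the PMF over all support values not exceeding x."""
    return sum((p for v, p in pmf.items() if v <= x), Fraction(0))

def prob_between(a, b):
    """P(a < X <= b) = F_X(b) - F_X(a)."""
    return cdf(b) - cdf(a)

for x in range(5):
    print(x, cdf(x))

print(cdf(2.5))            # 11/16: between jumps the staircase stays flat, same as cdf(2)
print(prob_between(1, 3))  # 5/8: equals P(X = 2) + P(X = 3)
```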

3.4. Examples

We differentiate between various types of variables depending on the shapes of their CDFs.

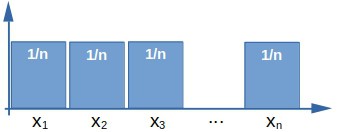

For instance, a uniform discrete variable assigns equal probabilities to each value in $\mathcal{R}_X$:

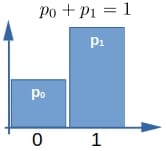

If there are only two values $X$ can take, which we usually denote as 0 and 1, we have a Bernoulli random variable with a parameter $p \in [0, 1]$:

$$p_X(1) = p, \quad p_X(0) = 1 - p$$
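Both examples are short to write down as PMFs. The Python sketch below builds them as dictionaries from values to probabilities; the fair six-sided die and the fair coin are hypothetical illustrations, and the helper names are not from any library.

```python
from fractions import Fraction

def uniform_discrete_pmf(values):
    """Uniform discrete PMF: each of the n distinct values gets probability 1/n."""
    support = set(values)
    return {v: Fraction(1, len(support)) for v in support}

def bernoulli_pmf(p):
    """Bernoulli PMF with parameter p: P(X = 1) = p and P(X = 0) = 1 - p."""
    if not 0 <= p <= 1:
        raise ValueError("p must lie in [0, 1]")
    return {1: p, 0: 1 - p}

die = uniform_discrete_pmf(range(1, 7))   # a fair six-sided die
coin = bernoulli_pmf(Fraction(1, 2))      # a fair coin, with heads encoded as 1

print(die[3], sum(die.values()))
print(coin[1], coin[0])
```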

4. Continuous Variables

Let $X$ model the time (in minutes) we spend waiting for an order in a restaurant. Let’s also say that the restaurant guarantees the waiting time is 15 minutes at most. So, $\mathcal{R}_X = [0, 15]$. In what ways is this $X$ different from the count of $H$s discussed above?

First, there are uncountably many different values it can take: 10, 11, 10.5, 10.55, 10.555 minutes, and so on. But that’s not the most important difference.

The probability is spread over the uncountably many values in the range $[0, 15]$. Since all those values are possible, we might want to allocate some probability to each one. However, there are infinitely many of them, and the total probability is finite (it’s 1), so the amounts allocated to individual values become infinitesimally small. In fact, they’re exactly zero: if we single out an individual value $x$, the probability of its realization, $P(X = x)$, is zero.

That’s the defining property of continuous variables. A random variable $X$ is continuous if $P(X = x) = 0$ for every $x \in \mathbb{R}$.
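To see why a single value gets zero probability, we can shrink an interval around it. The Python sketch below assumes, purely for illustration, that the waiting time is uniform on $[0, 15]$ (the text doesn’t specify its distribution) and computes $P(x - h < X \le x)$ exactly for ever smaller $h$.

```python
from fractions import Fraction

# Hypothetical assumption: the waiting time X is uniform on [A, B] = [0, 15] minutes.
A, B = 0, 15

def cdf(x):
    """CDF of a continuous uniform variable on [A, B], computed with exact fractions."""
    x = Fraction(x)
    if x <= A:
        return Fraction(0)
    if x >= B:
        return Fraction(1)
    return (x - A) / (B - A)

x = Fraction(21, 2)  # 10.5 minutes
for k in range(1, 7):
    h = Fraction(1, 10 ** k)
    # P(x - h < X <= x) = h / 15 here, which goes to 0 as h shrinks, so P(X = x) = 0.
    print(h, cdf(x) - cdf(x - h))
```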

4.1. Continuous CDF

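The CDF of a continuous random variable is continuous everywhere:

$$\lim_{t \to x} F_X(t) = F_X(x) \quad \text{for every } x \in \mathbb{R}$$

The jumps that give a discrete variable’s CDF its staircase shape happen at the points at which $P(X = x) > 0$, and the height of each jump equals that probability:

$$P(X = x) = F_X(x) - \lim_{t \to x^-} F_X(t)$$

Since $P(X = x) = 0$ for each $x$ if $X$ is continuous, there can be no jumps in the CDF plot. Because every CDF is right-continuous, having no jumps means the CDF is continuous.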

4.2. Probability Density Function

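If $F_X$ has a derivative $f_X = F_X'$, it holds that:

$$P(a \le X \le b) = F_X(b) - F_X(a) = \int_a^b f_X(x)\,dx$$

We call such a function $f_X$ the probability density function (PDF) of $X$. It’s zero outside the variable’s support, and its integral over the whole real line must be equal to 1:

$$\int_{-\infty}^{\infty} f_X(x)\,dx = 1$$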

Otherwise, it isn’t a proper density, since we’d get a total probability greater or less than 100%, which doesn’t make sense.
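Both properties can be checked numerically. This Python sketch approximates integrals with the midpoint rule (a simple numerical method chosen here for illustration, not something the text prescribes) and applies it to the density of a uniform variable on $[0, 15]$, an assumed example.

```python
def uniform_pdf(x, a=0.0, b=15.0):
    """PDF of a continuous uniform variable on [a, b]: constant inside, zero outside."""
    return 1.0 / (b - a) if a <= x <= b else 0.0

def integrate(f, lo, hi, n=100_000):
    """Approximate the integral of f over [lo, hi] with the midpoint rule on n subintervals."""
    width = (hi - lo) / n
    return sum(f(lo + (i + 0.5) * width) for i in range(n)) * width

# Integrating beyond the support adds nothing because the PDF is zero there.
print(integrate(uniform_pdf, -5.0, 20.0))   # total probability, approximately 1
print(integrate(uniform_pdf, 5.0, 10.0))    # P(5 <= X <= 10), approximately 1/3
```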

The PDF of a continuous variable is analogous to the PMF of a discrete one. Both functions take the variable’s individual values as arguments. However, while the PMF gives their probabilities, the PDF only shows how likely the values are relative to one another. A density value isn’t a probability and can even exceed 1: the uniform density on $[0, 0.5]$ equals 2.

4.3. Examples

A continuous uniform variable’s PDF is constant over its support $[a, b]$:

$$f_X(x) = \begin{cases} \dfrac{1}{b - a}, & a \le x \le b \\ 0, & \text{otherwise} \end{cases}$$

So the corresponding CDF is:

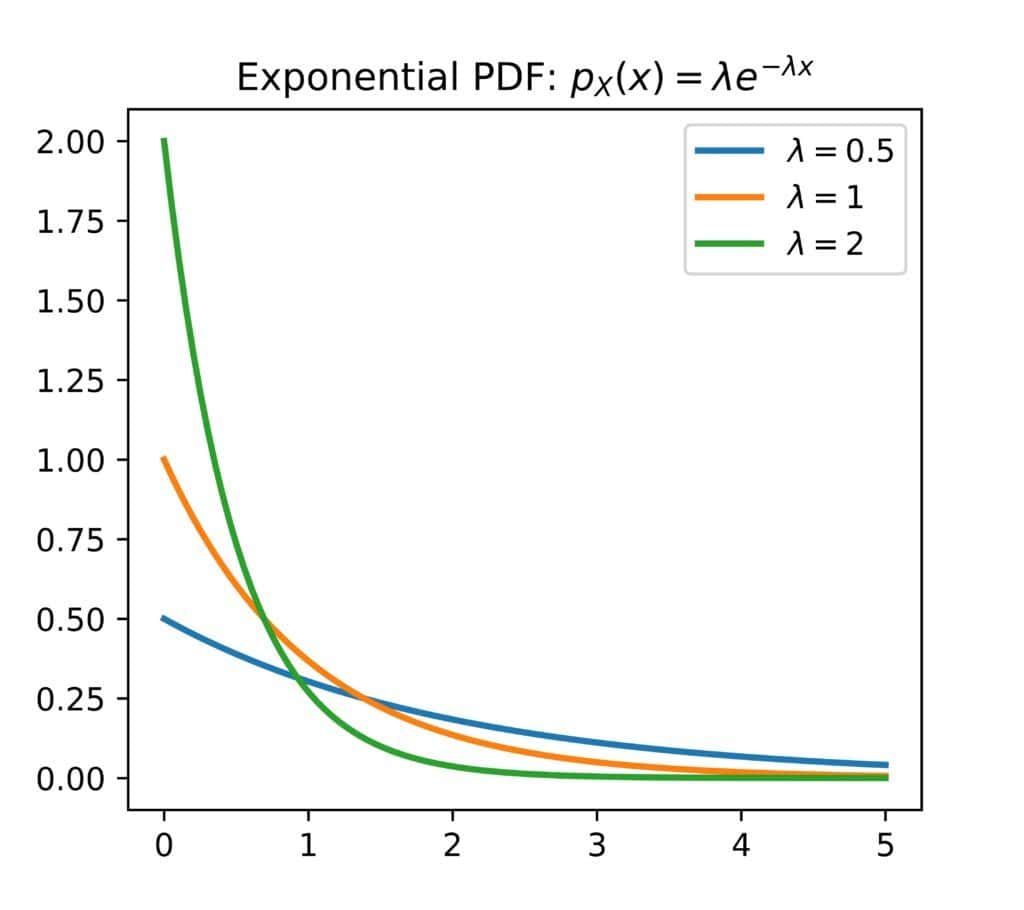

Another example is the class of exponential variables. Their densities decay exponentially, so smaller values are more likely than larger ones. The rate at which the density decreases is controlled by a parameter we usually denote as $\lambda > 0$:

$$f_X(x) = \begin{cases} \lambda e^{-\lambda x}, & x \ge 0 \\ 0, & x < 0 \end{cases}$$
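The following Python sketch evaluates the exponential density and its CDF, $F_X(x) = 1 - e^{-\lambda x}$ for $x \ge 0$, and draws samples by inverse-transform sampling: it solves $u = F_X(x)$ for $x$ with $u$ uniform on $[0, 1)$. The choice $\lambda = 2$, the seed, and the helper names are assumptions for illustration. Python’s standard library also offers `random.expovariate` for the sampling step.

```python
import math
import random

def exp_pdf(x, lam):
    """Exponential density: lam * exp(-lam * x) for x >= 0, zero for negative x."""
    return lam * math.exp(-lam * x) if x >= 0 else 0.0

def exp_cdf(x, lam):
    """Exponential CDF: 1 - exp(-lam * x) for x >= 0, zero for negative x."""
    return 1.0 - math.exp(-lam * x) if x >= 0 else 0.0

def exp_sample(lam, rng):
    """Inverse-transform sampling: x = -ln(1 - u) / lam solves u = F(x)."""
    u = rng.random()  # uniform on [0, 1), so 1 - u is in (0, 1] and the log is defined
    return -math.log(1.0 - u) / lam

lam = 2.0
rng = random.Random(42)  # fixed seed so the run is reproducible
samples = [exp_sample(lam, rng) for _ in range(100_000)]

# The density starts at lam (here 2, above 1) and halves every ln(2) / lam units.
print(exp_pdf(0.0, lam), exp_pdf(math.log(2) / lam, lam))
print(exp_cdf(math.log(2) / lam, lam))          # the median: 0.5 up to rounding
print(sum(samples) / len(samples), 1 / lam)     # empirical mean vs. theoretical mean 1 / lam
```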

5. Discrete vs. Continuous Variables

Here’s a summary of the differences between discrete and continuous random variables:

| Discrete | Continuous |
|---|---|
| Countably many values with positive probabilities that add up to 1 | Every individual value has zero probability |
| Step-like (discontinuous) CDF | Continuous CDF |
| Usually represent counts | Usually represent measurements |

6. Determinism and Randomness

In a non-probabilistic context, a math variable holds an unknown but fixed value. So, chance plays no part in calculating it.

In programming, we can update a variable by assigning it a new value, for example, `x = x + 1`.

But at every point during our code’s execution, `x` holds one and only one value. So, each time we use a deterministic `x`, it evaluates to a single value. Unless we update it, that value stays the same.

In contrast, a random variable models a random process or an event. It doesn’t hold values but samples them according to the underlying probability. Each time we “use” a random variable, it can generate a different value due to randomness in the process or phenomenon.
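The contrast can be sketched in Python. Here a deterministic variable is an ordinary assignment, while the random variable (the number of heads in four fair flips, reused from earlier) is modeled as a function that samples a fresh value each time it’s called. Representing a random variable as a sampler is an illustrative choice, and the seed is arbitrary.

```python
import random

# A deterministic variable: every read returns the value last assigned to it.
x = 5
first_read, second_read = x, x   # two reads of the same deterministic variable
x = x + 1                        # only an explicit update changes its value

def X(rng):
    """A random variable as a sampler: number of heads in four fair coin flips."""
    return sum(rng.random() < 0.5 for _ in range(4))

rng = random.Random(0)  # seeded so the draws below are reproducible
draws = [X(rng) for _ in range(10)]
print(x, draws)          # x is 6; the draws can differ from one "use" to the next
```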

6.1. The Nature of Randomness

There are two main interpretations of randomness.

In the frequentist school of thought, randomness is a property of physical reality. From this viewpoint, some natural (and even human-driven) processes are governed by inherently random laws. Those laws determine the long-term frequencies of the processes’ possible outcomes through probability functions. In other words, a random law doesn’t define the outcomes but the chances they’ll materialize. The laws’ true analytical forms are unknown, and the goal of statistics and science is to uncover or approximate them.

In the subjectivist (or Bayesian) tradition, probabilities quantify and represent our uncertainty about the world. They aren’t laws of nature or human society but mathematical tools we use to formalize our belief states. Hence, probabilities don’t exist independently of us and aren’t unique. Each conscious being can develop its own beliefs about a process or an event and express them using a functional form different from the ones others choose. Therefore, randomness originates from our inability to understand the world completely and reflects the limits of our knowledge.

7. Mixed and Multivariate Variables

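Apart from continuous and discrete variables, there are also mixed ones. A mixed variable’s CDF consists of a step-like part and a continuous part:

$$F_X(x) = \sum_{x_i \le x} P(X = x_i) + \int_{-\infty}^{x} f(t)\,dt$$

where the $x_i$ are those values at which $P(X = x_i)$ is positive, and $f$ is a non-negative function whose integral over $\mathbb{R}$ equals $1 - \sum_i P(X = x_i)$.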
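As a hypothetical example, suppose that with probability 0.3 our order is already waiting for us (a wait of exactly 0 minutes), and otherwise the wait is uniform on $[0, 15]$. The Python sketch below implements the resulting CDF: the point mass at 0 is the step-like part, and the spread-out remaining probability of 0.7 is the continuous part, with $f(t) = 0.7/15$ on $[0, 15]$.

```python
def mixed_cdf(x, p0=0.3, upper=15.0):
    """CDF of a hypothetical mixed waiting time.

    With probability p0 the wait is exactly 0 (a point mass, i.e. a jump in the CDF);
    otherwise it is uniform on [0, upper] (the continuous part).
    """
    if x < 0:
        return 0.0
    continuous_part = (1 - p0) * min(x, upper) / upper
    return p0 + continuous_part

# The jump at 0 equals the point mass P(X = 0).
eps = 1e-12
print(mixed_cdf(0.0) - mixed_cdf(-eps))   # approximately 0.3
print(mixed_cdf(7.5))                     # approximately 0.3 + 0.7 * 0.5 = 0.65
print(mixed_cdf(15.0), mixed_cdf(100.0))  # approximately 1.0 once the support is covered
```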

All the variables we’ve discussed so far were univariate (one-dimensional). However, a random variable can have more than one dimension. In that case, we call it multivariate. We consider each dimension a univariate variable, so a multivariate variable is a vector of one-dimensional ones.
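For a concrete bivariate case, we can split the four fair flips into two halves. In this Python sketch, the hypothetical variable $X = (X_1, X_2)$ counts the heads in flips 1–2 and in flips 3–4; the code computes the joint PMF by enumeration and recovers the PMF of the univariate component $X_1$ as a marginal.

```python
from collections import Counter
from fractions import Fraction
from itertools import product

# Sample space of four fair flips; every outcome has probability 1/16.
omega = ["".join(flips) for flips in product("HT", repeat=4)]
p_outcome = Fraction(1, len(omega))

def X(outcome):
    """Bivariate random variable: (heads in flips 1-2, heads in flips 3-4)."""
    return (outcome[:2].count("H"), outcome[2:].count("H"))

# Joint PMF: probability of each pair of values.
joint = Counter()
for outcome in omega:
    joint[X(outcome)] += p_outcome

# Each component is a univariate variable; its PMF is a marginal of the joint PMF.
marginal_first = Counter()
for (x1, _), p in joint.items():
    marginal_first[x1] += p

print(joint[(1, 1)])          # 1/4
print(dict(marginal_first))
```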

8. Conclusion

In this tutorial, we explained random variables. We use them to quantify our belief states or the outcomes of random processes and events.