Last updated: February 28, 2025

Neural networks are machine learning models capable of learning complex patterns from data. To learn these patterns, neural networks rely on the interactions between layers of neurons. Two concepts that play a crucial role in these interactions are dense and sparse connectivity.

In this tutorial, we’ll explore the concepts of dense and sparse layers, their differences, and their applications.

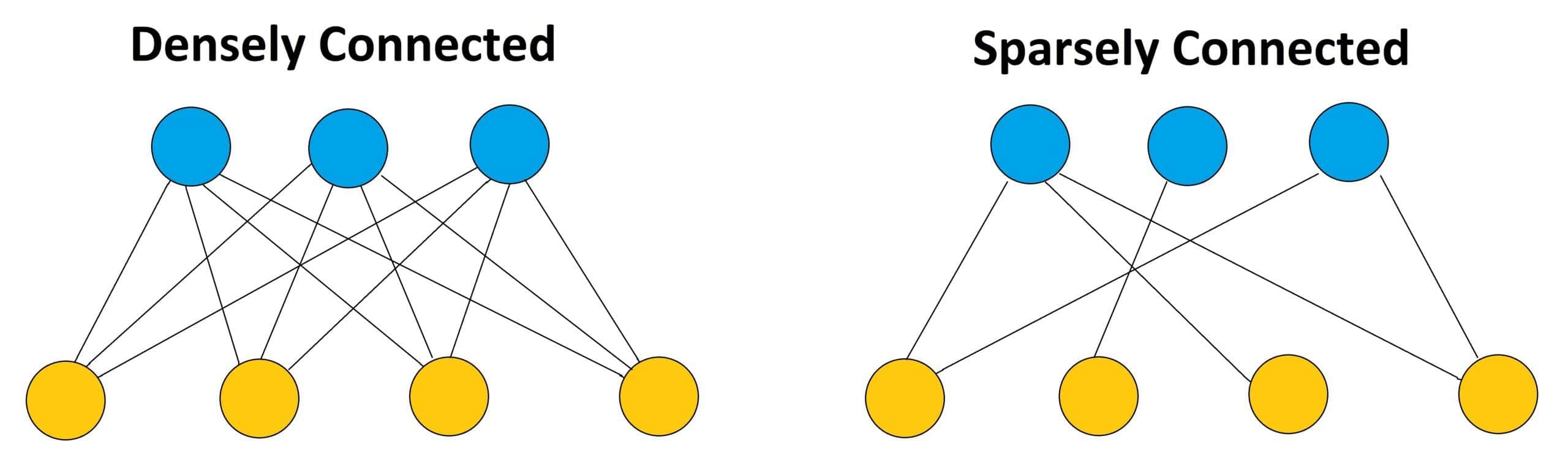

In the context of neural networks, dense and sparse refer to the connectivity between layers of neurons. A dense layer is a layer where each neuron is connected to every neuron in the previous layer. In other words, the output of each neuron in a dense layer is computed as a weighted sum of the inputs from all the neurons in the previous layer.
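The weighted sum described above can be sketched in a few lines of NumPy. This is a minimal illustration, not a framework implementation: the function name `dense_forward` and the example values are assumptions made here, and the activation function is left out for brevity.

```python
import numpy as np

def dense_forward(x, weights, bias):
    """Dense layer: every output is a weighted sum over ALL inputs, plus a bias."""
    return weights @ x + bias

# Hypothetical example values: 3 neurons in the previous layer, 2 in this one.
x = np.array([1.0, 2.0, 3.0])
weights = np.array([[0.5, -1.0, 0.0],
                    [1.0,  1.0, 1.0]])   # shape (2, 3): one row of weights per output neuron
bias = np.array([0.0, -1.0])

dense_out = dense_forward(x, weights, bias)
print(dense_out)
```

Each row of `weights` holds one weight per input, which is what makes the layer fully connected.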

On the other hand, a sparse layer is a layer where each neuron is only connected to a subset of the neurons in the previous layer. That is, the output of each neuron in a sparse layer is computed as a weighted sum of the inputs from only that subset.
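One simple way to picture a sparse layer is as a weight matrix combined with a binary connectivity mask, where a zero in the mask means "no connection". The sketch below assumes this masking formulation; the names `sparse_forward` and `mask` and the example values are illustrative, and real libraries may store only the non-zero weights instead.

```python
import numpy as np

def sparse_forward(x, weights, mask, bias):
    """Sparse layer: masked-out connections contribute nothing to the weighted sum."""
    return (weights * mask) @ x + bias

# Hypothetical example values: 3 inputs, 2 output neurons.
x = np.array([1.0, 2.0, 3.0])
weights = np.array([[0.5, -1.0, 2.0],
                    [1.0,  1.0, 1.0]])
mask = np.array([[1, 1, 0],    # neuron 0 only sees inputs 0 and 1
                 [0, 0, 1]])   # neuron 1 only sees input 2
bias = np.zeros(2)

sparse_out = sparse_forward(x, weights, mask, bias)
print(sparse_out)
```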

Dense layers are the most common type of layer in neural networks. They are used in many different types of neural networks, including feedforward neural networks, Convolutional Neural Networks (CNNs), and Recurrent Neural Networks (RNNs).

The primary advantage of dense layers is that they are able to capture complex patterns in data by allowing each neuron to interact with all the neurons in the previous layer. This makes dense layers well-suited for tasks such as image classification, where the input is a high-dimensional image, and the output is a prediction of the class of the object in the image.

However, the downside of dense layers is that they require many parameters, making them computationally expensive to train. In addition, dense layers can suffer from overfitting, where the model memorizes the training data instead of learning generalizable patterns.

Sparse layers, on the other hand, have a smaller number of parameters than dense layers. This is because each neuron only interacts with a subset of the neurons in the previous layer, which reduces the number of connections between neurons.
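To make the difference in parameter count concrete, here is a small back-of-the-envelope calculation. The layer sizes and the fixed number of incoming connections per neuron (`fan_in`) are assumptions chosen purely for illustration.

```python
def dense_param_count(n_in, n_out):
    """Weights for every input-output pair, plus one bias per output neuron."""
    return n_in * n_out + n_out

def sparse_param_count(n_out, fan_in):
    """Each output neuron keeps only `fan_in` incoming weights, plus one bias."""
    return fan_in * n_out + n_out

# Hypothetical sizes: 784 inputs (e.g. a flattened 28x28 image), 128 outputs.
dense_params = dense_param_count(784, 128)
sparse_params = sparse_param_count(128, fan_in=16)

print(dense_params, sparse_params)
```

With these assumed sizes, the sparse layer needs roughly 2% of the parameters of the dense one.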

The primary advantage of sparse layers is that they can be more computationally efficient than dense layers, particularly when dealing with high-dimensional data. In addition, sparse layers can help prevent overfitting, as the reduced connectivity between neurons can help the model generalize better to new data.

Sparse layers are particularly well-suited for tasks such as Natural Language Processing (NLP), where the input is a sequence of words, and the output is a prediction of the next word in the sequence. In these types of tasks, the relationships between words are often sparse, as each word is only related to a small subset of the other words in the sequence.

While dense and sparse layers have different strengths and weaknesses, they are not mutually exclusive. In fact, many neural networks use a combination of dense and sparse layers to achieve the best of both worlds.

One common approach is to use sparse layers early in the network to reduce the dimensionality of the input data and then use dense layers later in the network to capture more complex patterns. For example, in a CNN for image classification, the early layers might use sparse connections to reduce the dimensionality of the image. Then the later layers might use dense connections to classify the object in the image.
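The following sketch illustrates why a convolution can be viewed as a sparsely connected layer: a 1D convolution (computed here as a cross-correlation, as is usual in deep learning) is equivalent to multiplying by a banded weight matrix in which most entries are zero. The helper `conv1d_as_sparse_matrix`, the kernel, and the final dense weights are all assumptions made for this example.

```python
import numpy as np

def conv1d_as_sparse_matrix(kernel, n_in):
    """Build the banded weight matrix equivalent to a 'valid' 1D convolution."""
    k = len(kernel)
    n_out = n_in - k + 1
    weights = np.zeros((n_out, n_in))
    for row in range(n_out):
        # Each output neuron is connected to only k neighbouring inputs.
        weights[row, row:row + k] = kernel
    return weights

x = np.array([1.0, 2.0, 3.0, 4.0, 5.0, 6.0])
kernel = np.array([1.0, 2.0, 1.0])

# Early, sparsely connected layer: 6 inputs reduced to 4 features.
sparse_weights = conv1d_as_sparse_matrix(kernel, len(x))
features = sparse_weights @ x

# Later, dense layer: a single output connected to all 4 features.
dense_weights = np.full((1, 4), 0.25)
score = dense_weights @ features

print(features, score)
```

Only 12 of the 24 entries in `sparse_weights` are non-zero, and those 12 entries share just 3 distinct values, which is the weight sharing that makes convolutional layers so compact.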

Another approach is to use dense layers to learn representations of the input data and then use sparse layers to make predictions based on those representations. For example, in an RNN for NLP, the dense layers might be used to learn representations of the words in the sequence, and then the sparse layers might be used to predict the next word in the sequence based on those representations.

The choice between dense and sparse layers in a neural network depends on the nature of the data and the specific task being performed. Dense layers are generally more appropriate for data with a lot of structure and dependencies, such as images or audio signals. This is because dense layers are able to capture complex patterns in the data and learn the relationships between different parts of the input.

Sparse layers, on the other hand, are more appropriate for data that is sparse or has a lot of noise. For example, in text classification tasks, sparse layers have been found to be effective in representing the input data as a bag of words, where each word is represented by a binary value indicating its presence or absence in the input.
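A binary bag-of-words encoding like the one described above can be sketched as follows. The vocabulary, the whitespace tokenization, and the function name `binary_bag_of_words` are simplifying assumptions; practical pipelines typically use a proper tokenizer and a much larger vocabulary, which is why most entries of the resulting vector are zero.

```python
def binary_bag_of_words(text, vocabulary):
    """Return 1 for each vocabulary word present in the text, 0 otherwise."""
    tokens = set(text.lower().split())
    return [1 if word in tokens else 0 for word in vocabulary]

# Hypothetical toy vocabulary and input sentence.
vocabulary = ["dense", "sparse", "layer", "neuron", "image"]
bow_vector = binary_bag_of_words("A sparse layer connects each neuron", vocabulary)

print(bow_vector)
```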

The task itself also matters. For example, in tasks that require learning long-term dependencies, such as language translation or speech recognition, RNNs with sparse connections have been found to be effective.

Let’s explain how dense and sparse layers can behave differently for different types of data.

For text data, dense layers are often used in NLP tasks, such as text classification, sentiment analysis, and language translation. This is because text data often has a complex structure with long-term dependencies that can be better captured by a dense layer with a high degree of connectivity.

For example, in a neural network for language translation, the input sentence is fed into a dense layer to capture the sentence’s meaning and then fed into a sequence-to-sequence layer to generate the output translation.

Dense layers can help the network capture the relationships between words and phrases in the input text and generate more accurate translations.

On the other hand, sparse layers can also be used for text data in certain situations, such as in topic modeling, where the goal is to identify the most important words or phrases in a document. In this case, a sparse layer can help enforce sparsity in the network and highlight the most salient features in the text.
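The text does not say how sparsity is enforced, so the sketch below shows just one possible mechanism, chosen here as an assumption: soft-thresholding, the shrinkage step associated with an L1 penalty. Small weights are set exactly to zero, while larger ones are kept (slightly shrunk), so the surviving weights point to the most salient features. The weight values and the threshold are illustrative.

```python
import numpy as np

def soft_threshold(weights, threshold):
    """Shrink every weight toward zero; weights below the threshold become zero."""
    return np.sign(weights) * np.maximum(np.abs(weights) - threshold, 0.0)

# Hypothetical weights, one per word feature in a document.
weights = np.array([0.8, -0.05, 0.3, -0.6, 0.02])
sparse_weights = soft_threshold(weights, threshold=0.1)

# Indices of the features that survive the thresholding.
salient = np.flatnonzero(sparse_weights)

print(sparse_weights, salient)
```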

For image data, sparse CNNs are often used because they can efficiently process noisy spatial data. In a sparse CNN, each neuron is connected to only a subset of the pixels, or of the neurons in the previous layer, which reduces the computational complexity of the network. This is particularly important for large image datasets, where the use of dense layers can result in prohibitively large networks that are difficult to train. Sparse CNNs are widely used in object detection, image segmentation, and facial recognition tasks.

However, dense layers can also be used in certain image processing tasks, such as image captioning or style transfer, where the goal is to capture fine-grained details in the image. In these cases, a dense layer can help the network capture the complex relationships between the different pixels in the image and generate more accurate captions or stylized images.

For audio data, dense layers are often used in speech recognition tasks, such as automatic speech recognition and speaker identification. This is because audio data often has a complex temporal structure with long-term dependencies that can be better captured by a dense layer with a high degree of connectivity.

For example, in a neural network for speaker identification, the input audio signal is fed into a dense layer to capture the unique features of the speaker’s voice and then fed into a softmax layer to identify the speaker. Dense layers can help the network capture the complex relationships between different parts of the audio signal and generate more accurate speaker identifications.
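The dense-then-softmax pipeline described above can be sketched roughly as follows. The three-element feature vector, the weights, and the three candidate speakers are made-up values for illustration only; a real system would first extract features from the raw audio and learn the weights during training.

```python
import numpy as np

def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    shifted = logits - np.max(logits)   # subtract the max for numerical stability
    exps = np.exp(shifted)
    return exps / exps.sum()

# Hypothetical audio features and dense-layer weights (one row per speaker).
audio_features = np.array([1.0, 0.0, 2.0])
weights = np.array([[0.2, 0.1, 0.0],
                    [0.0, 0.3, 0.5],
                    [0.1, 0.1, 0.1]])
bias = np.zeros(3)

logits = weights @ audio_features + bias   # dense layer
probabilities = softmax(logits)            # softmax layer
predicted_speaker = int(np.argmax(probabilities))

print(probabilities, predicted_speaker)
```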

However, sparse layers can also be used in audio data processing, such as in music recommendation systems, where the goal is to identify the most important features in a music track. In this case, a sparse layer can help enforce sparsity in the network and highlight the most salient features in the audio signal.

In this article, we studied dense and sparse layers in the context of neural networks. Dense layers allow each neuron to interact with all neurons in the previous layer. In contrast, sparse layers only allow each neuron to interact with a subset of the neurons in the previous layer.

Both dense and sparse layers have their own strengths and weaknesses, and the choice between them depends on the nature of the data and the specific task being performed.