1. Introduction

In this tutorial, we’ll show several ways to find the smallest numbers in an array. We’ll also discuss those methods’ complexity and relative performance to one another.

All those techniques can be adapted to the converse problem of finding the largest numbers in an array.

2. Problem Description

Given an array of

numbers, our goal is to find the

smallest elements and return them in the non-decreasing order.

For example, if and

, the solution is the array

because those are the three smallest numbers in

.

When , the problem reduces to finding the minimum of an array.

3. Naïve Solutions

We can loop over the array times and find and remove the smallest number in each pass. This solution’s time complexity is

. It’s inefficient because it traverses the whole array repeatedly.

Another naïve solution is to sort the whole non-decreasingly and then take the first

elements of the sorted array. The problem with this approach is that it sorts all the

elements even though we need only the

smallest. This method’s complexity depends on the sorting algorithm but will be inefficient whenever

is much smaller than

. For instance, it doesn’t make sense to sort the whole array of

numbers if we need only the five smallest.

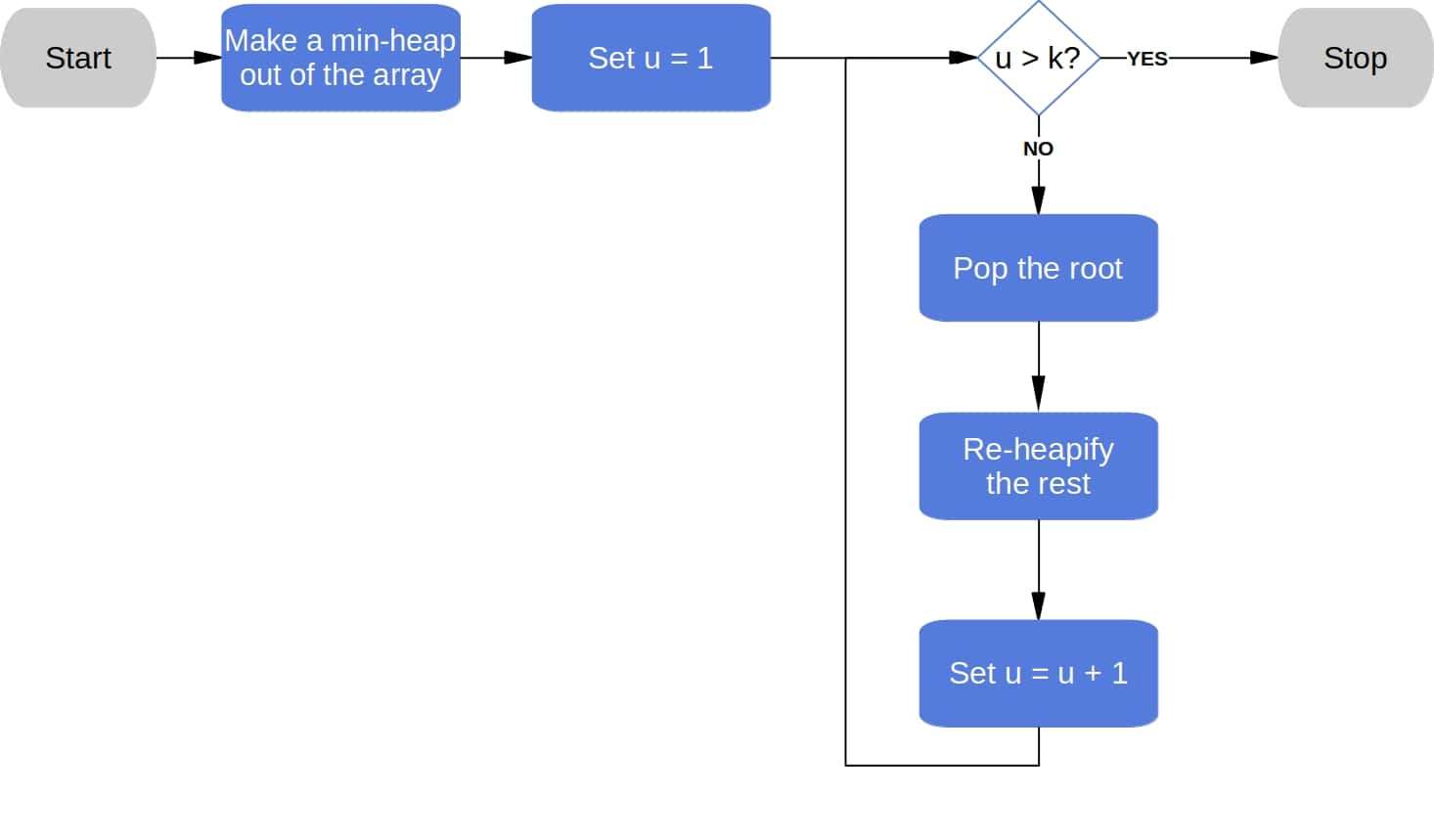

4. The Solution Based on a Min-Heap

We can do better with a min-heap. Thought of as a binary tree, a min-heap has the property that each node is less than or equal to its children. So, the root node is the minimum of a min-heap.

Once we build a min-heap out of the array, we can pop its root to get the smallest element in it. Then, we process the rest of the heap to preserve the heap property and pop the new root to get the second smallest number.

Repeating the process times gives us the desired output:

Using Floyd’s algorithm, we can build the heap in time. Popping the root and heapifying the rest takes

time, so

such steps can be done in

time in total. Hence, the total complexity is:

If is a linear function of

(for example,

when we look for the bottom

), then the complexity becomes

which is the lower bound of the complexity of sorting algorithms that use binary comparisons only.

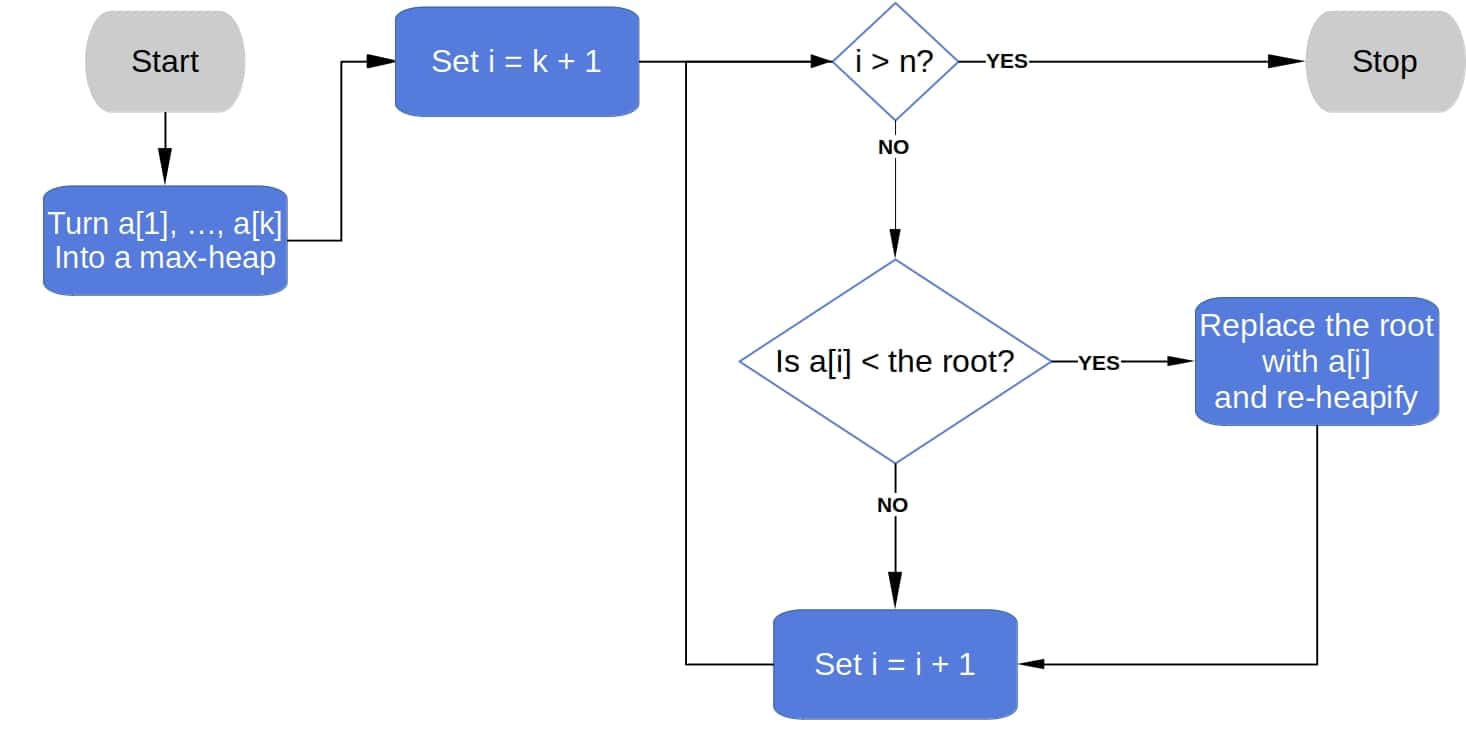

5. The Solution Based on a Max-Heap

We can use a max-heap to solve the problem. A max-heap is like a min-heap, the only difference being that each max-heap’s node is greater than or equal to its children. So, the root of a max-heap contains its maximum.

In the beginning, we take the first elements of the unsorted array and put them into a max-heap. Then, we iterate over the remaining

elements and compare them to the root.

If the current element () is lower than the root, then we know that there are at least

elements not greater than the root (

currently in our heap and the one with which we’ve just compared), so the root can’t be among the

smallest numbers. Hence, we replace the root with

, process the heap to preserve its property, and move to the next element in the array.

Otherwise, if is not lower than the root, we know that it’s greater than or equal to at least

other numbers in the array, so it can’t be one of the

smallest, and we can discard it:

Using Floyd’s algorithm, we can construct the heap in time. In the worst case, each of the

elements initially out of the heap will be lower than the root, so we’ll perform the

root replacement operation

times. As a result, the worst-case complexity is

If is a linear function of

, then the complexity is

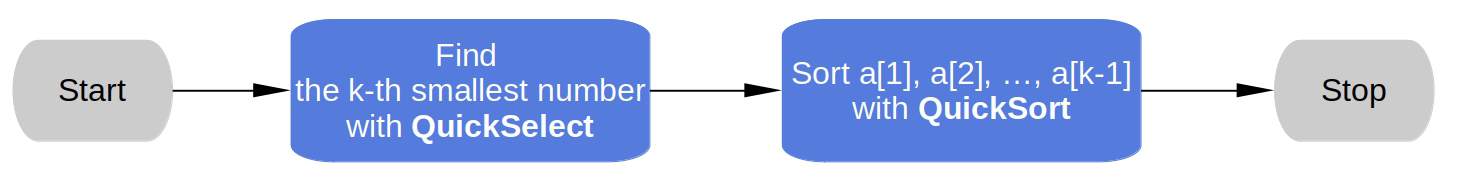

6. QuickSelSort

This approach combines QuickSelect with QuickSort.

First, we use QuickSelect to find the -th smallest number. It will place it at the

-th position in the input array

and put the

smaller numbers on positions

through

but won’t sort them.

To sort those numbers from the smallest to the largest, we apply QuickSort to . We don’t need to process

with QuickSort because we already know it’s the

-th smallest:

Although the worst-case complexity of the approach is

its average complexity is

which is rather good. However, if is a linear function of

, then the average complexity turns out to be

.

A notable advantage of QuickSelSort is that it uses two algorithms that are available in many libraries and tuned for performance, so we don’t have to implement it from scratch.

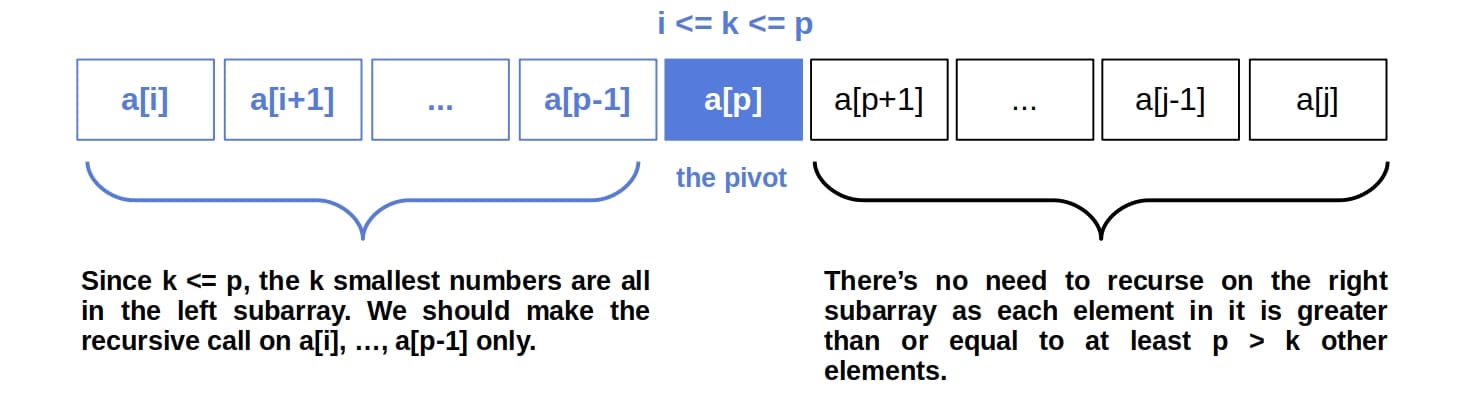

7. Partial QuickSort

Partial QuickSort is a modification of the usual QuickSort. The difference is that the former makes recursive calls only on the parts of the array that provably contain the smallest elements.

In the usual QuickSort, we get the subarray , select a pivot and partition

into two smaller subarrays

and

. Since the goal of QuickSort is to sort the whole array, we must make two recursive calls: one for the left and the other for the right subarrays.

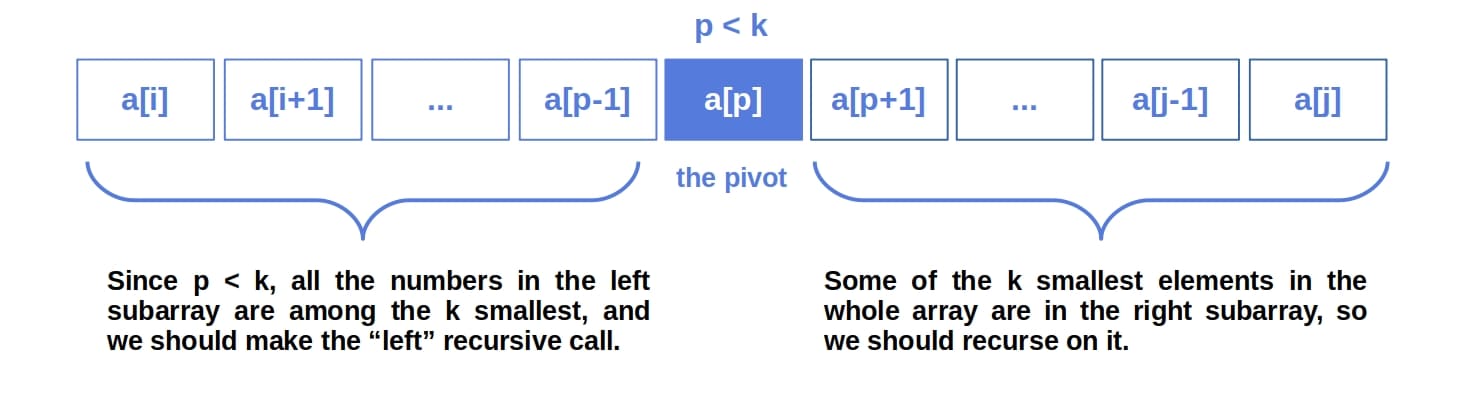

In contrast, Partial QuickSort doesn’t always sort the right subarray.

If , then Partial QuickSort completely ignores the right subarray since none of the

smallest numbers is in it. All the numbers we’re after are in the left subarray, so we make the recursive call only on

.

If , then some of the sought numbers are in

, so we have to make a recursive call on it as well:

If we select the pivot randomly, then the worst-case complexity of Partial QuickSort is , and the expected complexity is

, just as it’s the case with QuickSelSort. However, partial QuickSort makes fewer comparisons on average.

8. Partial QuickSort with Median of Medians

There’s a way to reduce the worst-case time complexity of Partial QuickSort to . We can achieve that by choosing the pivot element with an algorithm known as Median of Medians.

8.1. Median of Medians

The QuickSort and Partial QuickSelect algorithms show the best asymptotic performance if we reduce the array size by a constant factor before the recursive calls, as the size of the array to process will drop exponentially with the recursion depth.

The Median of Medians (MoM) is a pivot selection algorithm that does precisely so.

MoM returns an element between the bottom and the top

of the input array. Therefore, the returned pivot splits the input array in a ratio between

and

. In the worst case, we reduce the size of the input by a factor of

. Since that’s a reduction by a known constant, the input size drops exponentially.

8.2. Complexity Analysis

MoM runs in time. As Partial QuickSort does

comparisons in each call, the overhead caused by MoM doesn’t change the asymptotic complexity of the call. However, the worst-case time complexity reduces to

That’s the average-case complexity of the generic version of Partial QuickSort.

9. Hybrid Partial QuickSort

Even though Partial QuickSort with MoM has an worst-case time complexity, it runs slower than the asymptotically inferior version of Partial QuickSort on most arrays in practice. MoM improves the worst-case complexity but makes the algorithm run slower on most inputs due to the additional overhead.

There’s a way to keep the practical efficiency and reduce the worst-case complexity at the same time. We draw inspiration from IntroSelect.

The idea is to start with the usual Partial QuickSort, which chooses pivots at random. We assume that the input at hand won’t cause the algorithm’s worst-case behavior and that it’s safe to use random selection. A good deal of time, we’ll be right. The average–case model will apply to most input arrays. However, if we notice that random selection results in inefficient partitioning, we fall back on MoM to avoid the worst-case and have it

instead.

We can switch to MoM with two simple rules:

- We track the input array sizes in all the recursive calls. If any

consecutive calls do not halve the input’s length, where

is a constant set in advance, then we switch to MoM.

- We track the sum of the input array sizes in recursive calls. If we notice that the sum in a call exceeds

, where

, then we switch to MoM.

These rules limit the recursion depth to ensuring that the worst-case complexity is

. In addition, they’ll keep the algorithm efficient on most inputs. Let’s see how the second strategy works:

10. Complexity Comparison

We see that all the methods based on QuickSort and QuickSelect have the same average–case time complexity of . However, QuickSelSort and Partial QuickSort have an

worst-case time complexity. Though asymptotically equivalent, the latter is more efficient in practice.

If we use MoM to choose the pivot in Partial QuickSort, we reduce the worst-case complexity to , but end up with an inefficient algorithm because of the overhead caused by MoM. Hybrid Partial QuickSort has the same asymptotic complexity as Partial QuickSort with MoM. But, it’s faster in practice because it uses MoM only when random selection results in inefficient partitioning.

Heaps offer interesting alternatives. However, their performance in practice depends on how much is larger than

.

If is a linear function of

, all the approaches become log-linear in

in the average case. Partial QuickSort with MoM, Hybrid Partial QuickSort, and the heap-based solutions become

in the worst case as well.

11. Other Approaches

Instead of QuickSort, we can adapt other sorting algorithms, such as MergeSort.

We can also base our solutions on the Floyd–Rivest selection algorithm. It finds the -th smallest element in an array just as QuickSelect but is faster on average.

12. Conclusion

In this tutorial, we showed several ways to find the smallest numbers in an array. We based most of our solutions on QuickSort but also presented two heap-inspired approaches and mentioned other algorithms that we can adapt to solve the problem.