1. Overview

In this tutorial, we’ll talk about image stitching, a fundamental technique in computer vision that aims to combine multiple images into a seamless panorama. We’ll go through the key steps involved in image stitching and explain the underlying algorithms. Finally, we’ll discuss the applications of image stitching in different fields.

2. What Is Image Stitching?

Image stitching is the process of combining multiple images to create a single larger image, often referred to as a panorama. The images depict the same three-dimensional scene, and they must overlap in some regions. Image stitching aims to create a seamless transition between adjacent images while preserving the geometry and visual quality.

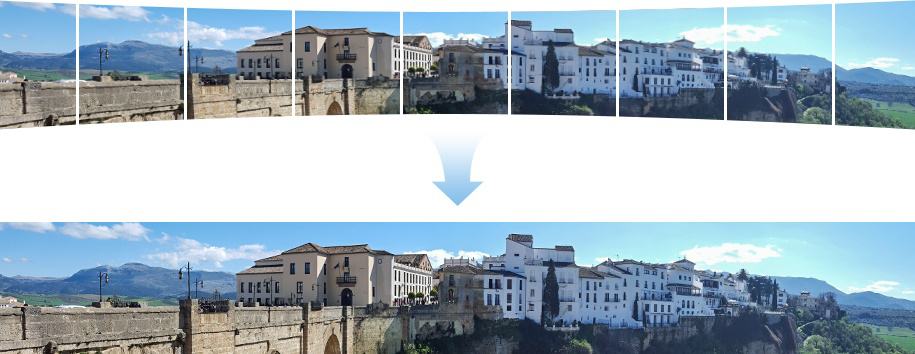

The following image shows some input photographic images and the corresponding output of the image stitching process.

3. Image Stitching Pipeline

Image stitching is a multi-step technique that involves the following image-processing operations:

- Feature detection

- Feature matching

- Transformation estimation

- Image warping

- Blending

Here, we discuss in detail the methods for each of these steps.

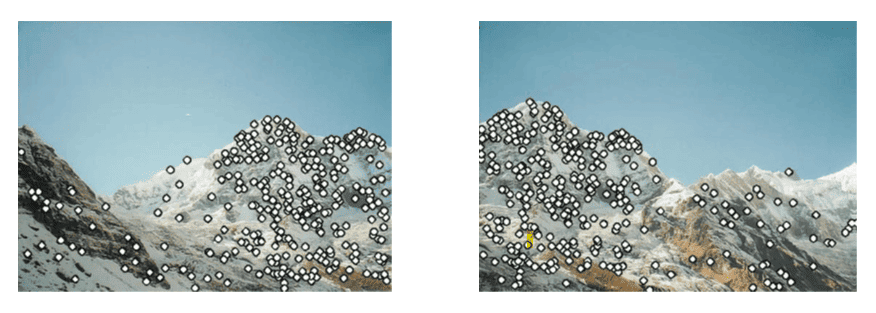

3.1. Feature Detection

Feature detection is the task of identifying distinctive and salient points in an image. These points, generally called keypoints, serve as landmarks for aligning the acquired images.

Keypoints should be distinctive and remain relatively invariant under changes in scale, rotation, lighting conditions, and viewpoint. The most frequently used algorithms for feature detection are SIFT (Scale-Invariant Feature Transform), SURF (Speeded-Up Robust Features), FAST (Features from Accelerated Segment Test), and ORB (Oriented FAST and Rotated BRIEF).
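The sketch below makes the idea of a keypoint concrete. It implements, in pure Python, the segment test at the heart of FAST. It assumes a grayscale image stored as a list of rows. The intensity threshold `t=20`, the run length `n=9` (the FAST-9 variant) and the function names are our own illustrative choices. A pixel is reported as a corner when at least `n` contiguous pixels on a 16-pixel circle of radius 3 around it meet one of two conditions: all are brighter than the center by more than `t`, or all are darker than the center by more than `t`.

```python
# Offsets (dx, dy) of the 16 pixels on a Bresenham circle of radius 3,
# listed in order around the circle, as used by the FAST segment test.
CIRCLE = [(0, -3), (1, -3), (2, -2), (3, -1), (3, 0), (3, 1), (2, 2), (1, 3),
          (0, 3), (-1, 3), (-2, 2), (-3, 1), (-3, 0), (-3, -1), (-2, -2), (-1, -3)]


def longest_circular_run(flags):
    """Length of the longest run of True values, allowing wrap-around."""
    if all(flags):
        return len(flags)
    best = run = 0
    for f in flags + flags:  # scanning the list twice handles wrap-around
        run = run + 1 if f else 0
        best = max(best, run)
    return best


def fast_corners(img, t=20, n=9):
    """Return the (x, y) pixels of a grayscale image (list of rows) that pass
    the FAST segment test with intensity threshold t and run length n."""
    h, w = len(img), len(img[0])
    corners = []
    for y in range(3, h - 3):  # skip a 3-pixel border so the circle fits
        for x in range(3, w - 3):
            p = img[y][x]
            ring = [img[y + dy][x + dx] for dx, dy in CIRCLE]
            brighter = [v > p + t for v in ring]
            darker = [v < p - t for v in ring]
            if max(longest_circular_run(brighter), longest_circular_run(darker)) >= n:
                corners.append((x, y))
    return corners
```

Take a bright square on a dark background as an example. This sketch reports a few pixels around each of the square's corners and none along its straight edges, because an edge pixel sees fewer than nine contiguous darker pixels on the circle. The full detector adds a high-speed pre-test and non-maximum suppression, which are omitted here. In practice, a library implementation such as OpenCV's `FastFeatureDetector` would be used instead.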

The following figure shows the SIFT features extracted from two images depicting the same scene from different viewpoints:

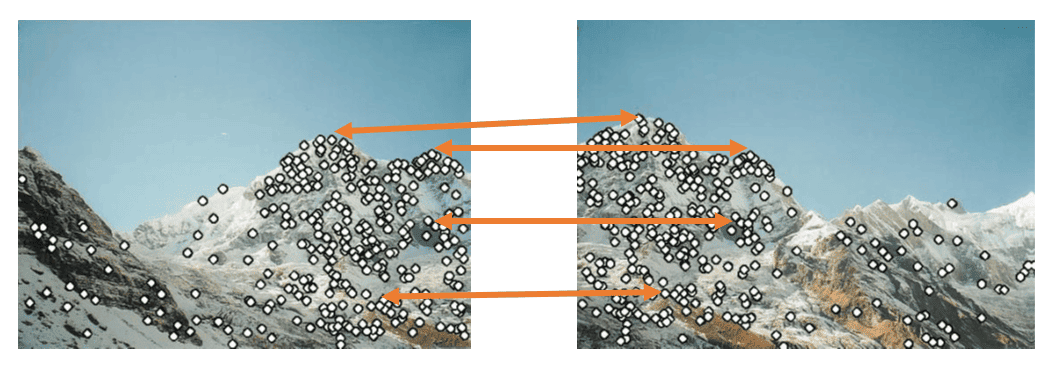

3.2. Feature Matching

Once features are detected, the next step is to find corresponding features between the images. The goal is to identify points in one image that correspond to the same real-world location in another image.

For each detected feature, a descriptor is computed. The descriptor is a compact numerical representation of the local image information around the feature point. It captures the key characteristics of the feature and is used for matching.
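The sketch below is a toy illustration only; it is not how SIFT or ORB build their descriptors. It describes a keypoint by the square patch of intensities around it, mean-subtracted and scaled to unit length. The function name and the patch radius `r=2` are hypothetical choices. The normalization leaves the descriptor unchanged under a uniform brightness offset or contrast gain, a small taste of the invariance that real descriptors aim for.

```python
import math


def patch_descriptor(img, x, y, r=2):
    """Toy descriptor: the (2r+1) x (2r+1) patch around (x, y), mean-subtracted
    and scaled to unit length so that brightness and contrast changes cancel out."""
    patch = [img[y + dy][x + dx] for dy in range(-r, r + 1) for dx in range(-r, r + 1)]
    mean = sum(patch) / len(patch)
    centered = [v - mean for v in patch]
    norm = math.sqrt(sum(v * v for v in centered))
    if norm == 0:
        return [0.0] * len(patch)  # flat patch: nothing distinctive to describe
    return [v / norm for v in centered]
```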

The descriptors from one image are compared to the descriptors from the other image to identify pairs of similar descriptors. Two approaches are commonly used for this task: brute-force matching and FLANN (Fast Library for Approximate Nearest Neighbors).

In Brute Force Matching, each feature descriptor in one image is compared to every feature descriptor in the other image. It involves an exhaustive search, checking all possible pairs of descriptors. This method is straightforward but can be computationally expensive, especially when dealing with many features.
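A minimal brute-force matcher can be sketched in a few lines of Python. It compares every descriptor of the first image with every descriptor of the second image using the Euclidean distance. As an extra filter, it also applies the ratio test from Lowe's SIFT work: a match is kept only if the nearest neighbor is clearly closer than the second-nearest one. The threshold `ratio=0.75` and the function name are our own assumptions.

```python
import math


def brute_force_match(desc1, desc2, ratio=0.75):
    """Match each descriptor in desc1 to its nearest neighbor in desc2.

    Returns (i, j, distance) tuples that pass the ratio test.
    """
    matches = []
    for i, d1 in enumerate(desc1):
        # Exhaustive search: distance from d1 to every descriptor in desc2.
        dists = sorted((math.dist(d1, d2), j) for j, d2 in enumerate(desc2))
        if len(dists) < 2:
            continue  # the ratio test needs a second-nearest neighbor
        (best, j), (second, _) = dists[0], dists[1]
        if best < ratio * second:
            matches.append((i, j, best))
    return matches
```

Every pair of descriptors is examined, so the cost grows with the product of the number of descriptors in the two images.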

On the other hand, FLANN uses tree-based indexes for efficient nearest-neighbor search. For example, it can build a set of randomized k-d trees from the feature descriptors of one image. During matching, it traverses these trees to find approximate nearest neighbors. This is much faster than the exhaustive brute-force search, at the cost of occasionally missing the true nearest neighbor. FLANN is especially useful when dealing with a large number of high-dimensional feature descriptors.
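A plain k-d tree illustrates the core idea behind tree-based search. The sketch below builds the tree by splitting on one coordinate per level at the median. It answers a query by descending towards the query point, and it revisits a sibling branch only when the splitting plane is closer than the best match found so far. Unlike FLANN, this version is exact. FLANN's randomized k-d trees gain their extra speed by limiting how many leaves are visited, which is what makes the search approximate. The dictionary-based node layout and the function names are illustrative assumptions.

```python
import math


def build_kdtree(points, depth=0):
    """Build a k-d tree from a list of (vector, index) pairs."""
    if not points:
        return None
    axis = depth % len(points[0][0])  # cycle through the coordinates
    points = sorted(points, key=lambda p: p[0][axis])
    mid = len(points) // 2  # the median becomes the splitting node
    return {
        "point": points[mid],
        "axis": axis,
        "left": build_kdtree(points[:mid], depth + 1),
        "right": build_kdtree(points[mid + 1:], depth + 1),
    }


def nearest(node, query, best=None):
    """Exact nearest-neighbor search; returns (distance, index)."""
    if node is None:
        return best
    vec, idx = node["point"]
    d = math.dist(query, vec)
    if best is None or d < best[0]:
        best = (d, idx)
    axis = node["axis"]
    diff = query[axis] - vec[axis]
    near, far = (node["left"], node["right"]) if diff < 0 else (node["right"], node["left"])
    best = nearest(near, query, best)
    # The far side can only hold a closer point if the splitting plane is closer than the best match.
    if abs(diff) < best[0]:
        best = nearest(far, query, best)
    return best
```

In very high dimensions, such as 128-dimensional SIFT descriptors, the pruning test rarely succeeds. An exact k-d tree then ends up visiting most nodes, which is why approximate search pays off.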

The following figure shows some of the matching features between two images of the same scene:

3.3. Transformation Estimation

Once the pairs of matching features are identified, we estimate the transformations that align each image with a reference image. In image stitching, such a transformation is usually modeled as a homography. It is represented by a $3 \times 3$ matrix, generally denoted as $H$, and can describe several geometric distortions, including rotations, translations, scaling, skewing, and perspective distortions.
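In homogeneous coordinates, a $3 \times 3$ homography matrix $H$ maps a point $(x, y)$ of one image to the point $(x', y')$ of the reference image as follows:

$$
\begin{bmatrix} \tilde{x} \\ \tilde{y} \\ \tilde{w} \end{bmatrix}
=
\begin{bmatrix} h_{11} & h_{12} & h_{13} \\ h_{21} & h_{22} & h_{23} \\ h_{31} & h_{32} & h_{33} \end{bmatrix}
\begin{bmatrix} x \\ y \\ 1 \end{bmatrix},
\qquad
x' = \frac{\tilde{x}}{\tilde{w}}, \quad y' = \frac{\tilde{y}}{\tilde{w}}
$$

Since $H$ is only defined up to a scale factor, it has eight degrees of freedom. Each point correspondence provides two equations. Therefore, at least four correspondences, no three of them collinear, are needed to determine $H$.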

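Not all pairs of keypoints found by the feature-matching procedure are accurate. Outliers and mismatches may occur due to noise, occlusions, or changes in the scene. The Random Sample Consensus (RANSAC) algorithm allows us to robustly estimate the best homography for the retrieved matches while disregarding outliers. RANSAC is an iterative algorithm commonly used in computer vision to estimate the parameters of a mathematical model from a set of observed data points containing outliers. For a homography, each iteration fits a candidate model to a random sample of four matches, the minimum needed to determine a homography. It then counts the inliers, that is, the matches that the candidate maps to within a small distance of their counterparts. The candidate with the most inliers is kept.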
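The following pure-Python sketch applies RANSAC to homography estimation. For each random sample of four matches, it computes the exact homography by solving an $8 \times 8$ linear system. This assumes that the bottom-right entry of the matrix can be fixed to 1, which fails only in the rare case where that entry is zero. It then counts the matches whose reprojection error is below a pixel threshold. The iteration count, the 2-pixel threshold and all function names are illustrative choices, not values taken from a specific library.

```python
import math
import random


def solve(A, b):
    """Solve the square system A x = b by Gaussian elimination with partial pivoting."""
    n = len(A)
    M = [row[:] + [rhs] for row, rhs in zip(A, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        if abs(M[pivot][col]) < 1e-12:
            raise ValueError("singular system")
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        x[r] = (M[r][n] - sum(M[r][c] * x[c] for c in range(r + 1, n))) / M[r][r]
    return x


def homography_from_4(src, dst):
    """Homography (bottom-right entry fixed to 1) mapping 4 src points onto 4 dst points."""
    A, b = [], []
    for (x, y), (u, v) in zip(src, dst):
        # u = (h11 x + h12 y + h13) / (h31 x + h32 y + 1), rearranged to be linear in h.
        A.append([x, y, 1, 0, 0, 0, -u * x, -u * y])
        b.append(u)
        A.append([0, 0, 0, x, y, 1, -v * x, -v * y])
        b.append(v)
    h = solve(A, b)
    return [h[0:3], h[3:6], h[6:8] + [1.0]]


def project(H, pt):
    """Apply H to a 2D point; returns None if the point maps to infinity."""
    x, y = pt
    w = H[2][0] * x + H[2][1] * y + H[2][2]
    if abs(w) < 1e-12:
        return None
    return ((H[0][0] * x + H[0][1] * y + H[0][2]) / w,
            (H[1][0] * x + H[1][1] * y + H[1][2]) / w)


def ransac_homography(src, dst, iters=500, thresh=2.0, seed=0):
    """Return the homography with the most inliers and the list of inlier indices."""
    rng = random.Random(seed)
    best_H, best_inliers = None, []
    for _ in range(iters):
        sample = rng.sample(range(len(src)), 4)  # minimal sample for a homography
        try:
            H = homography_from_4([src[i] for i in sample], [dst[i] for i in sample])
        except ValueError:
            continue  # degenerate sample, e.g. collinear points
        inliers = []
        for i in range(len(src)):
            p = project(H, src[i])
            if p is not None and math.dist(p, dst[i]) < thresh:
                inliers.append(i)
        if len(inliers) > len(best_inliers):
            best_H, best_inliers = H, inliers
    return best_H, best_inliers
```

Library implementations usually go further. For example, they refine the final model using all inliers and adapt the number of iterations to the observed inlier ratio. In OpenCV, this step is available through `cv2.findHomography` with the `cv2.RANSAC` method.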

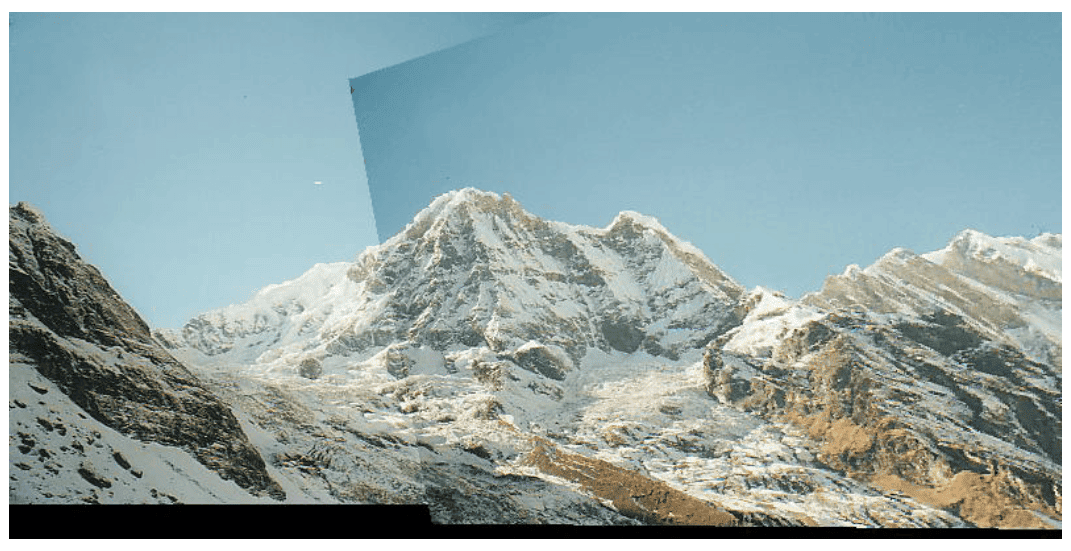

3.4. Image Warping

This step applies the transformations found in the previous step to all the images except the reference image. In this way, the images are aligned in the same reference system. Conceptually, warping applies the transformation to each pixel’s coordinates in the original image to determine its location in the transformed image. In practice, it is usually implemented by inverse mapping: for each pixel of the output image, the inverse transformation gives the corresponding location in the original image. This location generally falls between pixel centers, so its value must be interpolated from the neighboring pixels. The interpolation method influences the visual quality of the warped image. Common interpolation methods include nearest-neighbor, bilinear, and bicubic interpolation.
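The sketch below illustrates inverse mapping with bilinear interpolation in pure Python. It assumes a grayscale image stored as a list of rows. It takes the inverse homography `H_inv` as an input, so computing it (for example, by inverting the estimated matrix) is left out. Output pixels whose source location falls outside the original image keep a fill value. The function names and the `fill` parameter are hypothetical.

```python
import math


def bilinear(img, x, y):
    """Sample img at a real-valued location (x, y); returns None outside the image."""
    h, w = len(img), len(img[0])
    if not (0 <= x <= w - 1 and 0 <= y <= h - 1):
        return None
    x0, y0 = int(math.floor(x)), int(math.floor(y))
    x1, y1 = min(x0 + 1, w - 1), min(y0 + 1, h - 1)  # clamp at the last row/column
    fx, fy = x - x0, y - y0
    top = (1 - fx) * img[y0][x0] + fx * img[y0][x1]
    bottom = (1 - fx) * img[y1][x0] + fx * img[y1][x1]
    return (1 - fy) * top + fy * bottom


def warp_inverse(img, H_inv, out_w, out_h, fill=0.0):
    """Inverse-mapping warp: each output pixel looks up its source location."""
    out = [[fill] * out_w for _ in range(out_h)]
    for yo in range(out_h):
        for xo in range(out_w):
            # Map the output pixel back into the source image with the inverse homography.
            w = H_inv[2][0] * xo + H_inv[2][1] * yo + H_inv[2][2]
            if abs(w) < 1e-12:
                continue
            xs = (H_inv[0][0] * xo + H_inv[0][1] * yo + H_inv[0][2]) / w
            ys = (H_inv[1][0] * xo + H_inv[1][1] * yo + H_inv[1][2]) / w
            v = bilinear(img, xs, ys)
            if v is not None:
                out[yo][xo] = v
    return out
```

For instance, suppose `H_inv` shifts locations by half a pixel horizontally. Then each output pixel becomes the average of two horizontally adjacent input pixels.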

The following image shows the image warping and alignment of two images of the same scene:

3.5. Blending

Uneven lighting conditions and exposure differences between the acquired images can lead to visible seams in the final panorama. Image blending techniques allow us to mitigate this problem.

For simplicity, consider two aligned images $I_1$ and $I_2$. The blended image can be expressed as:

$$
I(x, y) = \frac{w_1(x, y)\, I_1(x, y) + w_2(x, y)\, I_2(x, y)}{w_1(x, y) + w_2(x, y)} \qquad (1)
$$

where $w_1$ and $w_2$ are weights that control the contribution of each image. Each weight is set to $0$ at one edge of the overlap and smoothly increases to $1$ towards the center of its image. This gradient produces a smooth transition between the two images. The weights are often computed from the distance transform, which assigns to each pixel its distance to the nearest image boundary.

4. Applications of Image Stitching

Image stitching finds diverse applications across various fields. In photography, image stitching allows the creation of expansive and high-resolution panoramas, enhancing the field of view and providing immersive experiences for viewers.

In the realm of augmented reality (AR), image stitching is crucial for seamlessly integrating virtual content with the real world, ensuring coherent and realistic overlays.

Remote sensing benefits from image stitching in satellite and aerial imagery, enabling the construction of detailed and comprehensive mosaics for applications in agriculture, disaster management, and urban planning.

Medical imaging utilizes image stitching for combining scans from different modalities or capturing large anatomical structures, aiding in accurate diagnosis and treatment planning.

5. Conclusion

In this article, we reviewed image stitching, a computer vision technique to combine multiple images into a single larger image. We discussed the different steps and algorithms involved in image stitching. Finally, we illustrated the applications of image stitching in different fields.