
Last updated: February 28, 2025

In this tutorial, we’ll talk about the Hopfield network and its features. We’ll also look at its architecture and how it’s trained.

John Hopfield introduced the Hopfield network, a form of recurrent artificial neural network (ANN), in 1982. It’s a simple associative memory that can store and recall patterns. Typical applications include pattern recognition, content-addressable memory, and optimization problems.

Hopfield networks are predominantly used as content-addressable memory, where a stored pattern can be retrieved by presenting a distorted or noisy version of it. They’re also applied to associative memory, image recognition, error correction, and optimization problems. However, they’re fairly basic compared to contemporary neural networks such as deep learning models, and their architectural limitations constrain where they can be applied.

A Hopfield network consists of a set of interconnected neurons, or nodes. The neurons are binary, taking the states +1 and -1 (or, in an equivalent formulation, 1 and 0), and each neuron represents one component of a stored pattern. Every neuron is connected to every other neuron in the network, but not to itself: the self-connections on the diagonal of the weight matrix are set to zero ($w_{ii} = 0$). Each connection carries a real-valued weight that represents its strength, and the weights are symmetric ($w_{ij} = w_{ji}$), so the connection from neuron $i$ to neuron $j$ is as strong as the one from $j$ to $i$.
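To make this structure concrete, here’s a minimal Python sketch, assuming bipolar states and real-valued weights stored as a nested list. It checks the structural constraints of a standard Hopfield network (a square, symmetric weight matrix with a zero diagonal) and converts 0/1 states to -1/+1. The helper names `to_bipolar` and `is_valid_hopfield_weights` and the three-neuron weight values are hypothetical, chosen only for illustration.

```python
from typing import List

Matrix = List[List[float]]


def to_bipolar(bits: List[int]) -> List[int]:
    """Map binary states {0, 1} to bipolar states {-1, +1} using s = 2x - 1."""
    return [2 * b - 1 for b in bits]


def is_valid_hopfield_weights(w: Matrix) -> bool:
    """Check that w is square, symmetric, and has a zero diagonal."""
    n = len(w)
    if any(len(row) != n for row in w):
        return False
    for i in range(n):
        if w[i][i] != 0:
            return False  # self-connections are not allowed
        for j in range(i + 1, n):
            if w[i][j] != w[j][i]:
                return False  # connections must be symmetric
    return True


# Hypothetical three-neuron network: each neuron is connected to the two others.
weights = [
    [0.0, 0.5, -0.5],
    [0.5, 0.0, 0.2],
    [-0.5, 0.2, 0.0],
]
state = to_bipolar([1, 0, 1])

print(is_valid_hopfield_weights(weights))  # True
print(state)                               # [1, -1, 1]
```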

Another essential characteristic of a Hopfield network is its energy function, a single value computed from the neurons’ current states and the connection weights. The network evolves in discrete time steps: a neuron computes the weighted sum of the states of the neurons connected to it and then sets its own state with a simple threshold rule in the style of the McCulloch-Pitts neuron, becoming +1 if the sum exceeds its threshold and -1 if it falls below. With symmetric weights and asynchronous updates, where neurons change state one at a time, each update either lowers the energy or leaves it unchanged. As a result, the network settles into a local minimum of the energy, which is a stable state; ideally, each stored pattern is such a minimum.
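The update rule and the energy can be sketched in plain Python. This sketch assumes zero thresholds, so the energy takes the standard form $E = -\frac{1}{2}\sum_{i,j} w_{ij} s_i s_j$, and it assumes that a neuron whose weighted input is exactly zero keeps its current state. The function names `energy` and `update_async` and the example weights are hypothetical. The function records the energy after every single-neuron update, so we can check that it never increases.

```python
import random
from typing import List, Tuple

Matrix = List[List[float]]


def energy(w: Matrix, s: List[int]) -> float:
    """Hopfield energy with zero thresholds: E = -1/2 * sum_ij w_ij * s_i * s_j."""
    n = len(s)
    return -0.5 * sum(w[i][j] * s[i] * s[j] for i in range(n) for j in range(n))


def update_async(w: Matrix, s: List[int], rng: random.Random,
                 max_sweeps: int = 100) -> Tuple[List[int], List[float]]:
    """Update neurons one at a time, in random order, until none of them changes.

    Returns the final state and the energy measured after each single-neuron update.
    """
    s = list(s)  # work on a copy of the input state
    n = len(s)
    energies = [energy(w, s)]
    for _ in range(max_sweeps):
        changed = False
        order = list(range(n))
        rng.shuffle(order)
        for i in order:
            h = sum(w[i][j] * s[j] for j in range(n))  # weighted input to neuron i
            # Threshold rule with threshold 0; a neuron with zero input keeps its state.
            new_state = 1 if h > 0 else -1 if h < 0 else s[i]
            if new_state != s[i]:
                s[i] = new_state
                changed = True
            energies.append(energy(w, s))
        if not changed:
            break  # stable state: a local minimum of the energy
    return s, energies


# Hypothetical example: weights that store a single pattern (outer product, zero diagonal).
pattern = [1, -1, 1, -1]
n = len(pattern)
w = [[0 if i == j else pattern[i] * pattern[j] for j in range(n)] for i in range(n)]

start = [1, 1, 1, -1]  # the stored pattern with neuron 1 flipped
final, energies = update_async(w, start, random.Random(0))
print(final)          # [1, -1, 1, -1]
print(energies[-1])   # -6.0
```

With symmetric weights and a zero diagonal, changing neuron $i$ by $\Delta s_i$ changes the energy by $-\Delta s_i \sum_j w_{ij} s_j$, which is never positive under this rule. That’s why asynchronous updating always settles in a stable state, whereas updating all neurons at once can make the network oscillate between two states.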

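Hopfield networks also have drawbacks. They can converge to spurious states, that is, stable states that don’t correspond to any stored pattern, and they can reliably store only a limited number of patterns: with the standard Hebbian rule, roughly 0.14N random patterns for a network of N neurons. In addition, complex, high-dimensional data can be challenging for the network to handle.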

The following components make up the Hopfield network’s architecture:

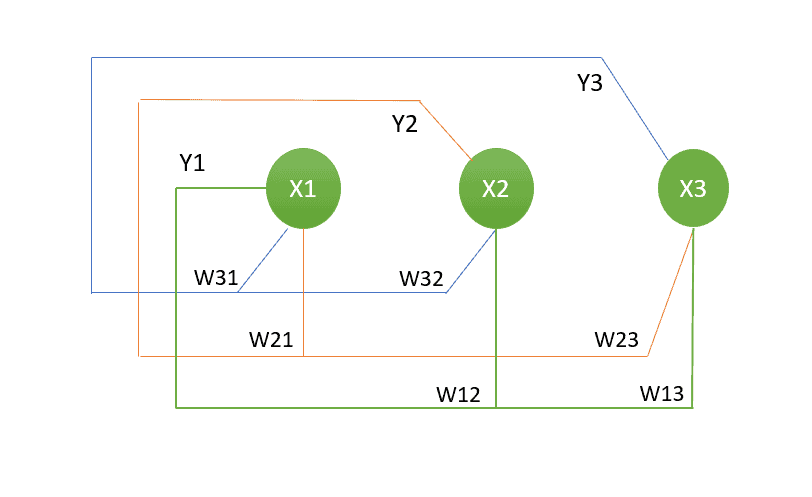

Here’s an example architecture of a three-node Hopfield network:

Each symbol in the diagram above denotes:

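In most cases, the connection weights are set with the Hebbian learning rule, which stores $P$ bipolar patterns in a network of $N$ neurons as follows:

$$w_{ij} = \frac{1}{N} \sum_{\mu=1}^{P} x_i^{\mu} x_j^{\mu} \quad (i \neq j), \qquad w_{ii} = 0$$

In this formula:

- $w_{ij}$ is the weight of the connection between neurons $i$ and $j$
- $x_i^{\mu} \in \{-1, +1\}$ is the state of neuron $i$ in the $\mu$-th training pattern
- $N$ is the number of neurons, and $P$ is the number of training patterns

This formula calculates each weight from the co-occurrence of the states of neurons $i$ and $j$ across all the training patterns: the weight increases when the two neurons tend to share the same state and decreases when they tend to have opposite states. Setting the weights this way allows the network to later retrieve these patterns when presented with noisy or partial inputs.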
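Here’s a minimal Python sketch of training and recall, assuming bipolar patterns, the Hebbian rule with the $1/N$ factor, zero thresholds, and asynchronous updates in random order. The function names `train_hebbian` and `recall` and the two 8-neuron patterns are hypothetical. The probe is the first pattern with two of its eight bits flipped, and the network restores it.

```python
import random
from typing import List

Matrix = List[List[float]]


def train_hebbian(patterns: List[List[int]]) -> Matrix:
    """Hebbian rule: w_ij = (1/N) * sum over patterns of x_i * x_j, with w_ii = 0."""
    n = len(patterns[0])
    w = [[0.0] * n for _ in range(n)]
    for x in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:
                    w[i][j] += x[i] * x[j] / n
    return w


def recall(w: Matrix, probe: List[int], rng: random.Random,
           max_sweeps: int = 100) -> List[int]:
    """Run asynchronous threshold updates from the probe until the state is stable."""
    s = list(probe)
    n = len(s)
    for _ in range(max_sweeps):
        changed = False
        order = list(range(n))
        rng.shuffle(order)
        for i in order:
            h = sum(w[i][j] * s[j] for j in range(n))
            new_state = 1 if h > 0 else -1 if h < 0 else s[i]  # zero input: keep state
            if new_state != s[i]:
                s[i] = new_state
                changed = True
        if not changed:
            break
    return s


# Two hypothetical 8-neuron patterns to store.
a = [1, 1, 1, 1, -1, -1, -1, -1]
b = [1, -1, 1, -1, 1, -1, 1, -1]
w = train_hebbian([a, b])

noisy = list(a)
noisy[0], noisy[4] = -noisy[0], -noisy[4]  # corrupt two of the eight bits
rng = random.Random(42)

print(recall(w, noisy, rng) == a)                          # True
print(recall(w, [-x for x in a], rng) == [-x for x in a])  # True: the inverse is stable too
```

The last line illustrates the spurious states mentioned earlier: with zero thresholds, flipping every neuron leaves the energy unchanged, so the inverse of a stored pattern is also a stable state, and a probe closer to the inverse converges to it rather than to the stored pattern. For larger networks, the nested loops would typically be replaced by matrix operations.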

In this article, we introduced the Hopfield network and its characteristics. We also went through its basic architecture and training.