1. Intro

In this article, we’ll describe one sampling technique called Gibbs sampling. In statistics, sampling is a technique for selecting a subset of individuals from a statistical population to estimate the characteristics of the whole population. By sampling, it’s possible to speed up data collection to provide insights in cases where it’s infeasible to measure the whole population.

Gibbs sampling is used to obtain samples from a multivariate probability distribution, especially when sampling from that distribution directly is difficult.

2. What Is Sampling?

As we mentioned above, sampling is a way of selecting a subset of elements from a given population. By using a proper way of sampling, we can select a smaller subset representing the whole population.

After that, we can work with that subset, and conclusions drawn from the subset will approximately hold for the whole population.

Some of the sampling methods are:

- Random sampling

- Interval sampling

- Stratified sampling

- Cluster sampling

Sampling has many different applications. Whenever we need to approximate something using only a subset of the data, we need some kind of sampling.

For example, when surveying the residents of a city, we need to sample a group of people who will represent the whole population. In machine learning, when we need to split the data into training and validation sets, one way of doing so is stratified cross-validation, which is based on stratified sampling.

In a food factory, a technologist can sample several products and, based on their quality, estimate the quality of the whole stock.
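Stratified sampling, mentioned above for cross-validation, splits the population into groups (strata) and, in its proportional variant, draws the same fraction from each group, so the subset keeps the group proportions of the whole population. The following is a minimal Python sketch under that assumption; the function `stratified_sample` and the toy labels are hypothetical and not part of any library.

```python
import random
from collections import defaultdict


def stratified_sample(items, labels, fraction, seed=0):
    """Hypothetical helper: draw the same fraction of items from every stratum."""
    rng = random.Random(seed)
    strata = defaultdict(list)  # label -> items that share this label
    for item, label in zip(items, labels):
        strata[label].append(item)
    sample = []
    for members in strata.values():
        k = round(fraction * len(members))  # this stratum's share of the sample
        sample.extend(rng.sample(members, k))  # sample without replacement
    return sample


# Toy population: 80 items in group "a" and 20 items in group "b".
items = list(range(100))
labels = ["a"] * 80 + ["b"] * 20
subset = stratified_sample(items, labels, fraction=0.1)
print(sorted(subset))
```

With a 10% fraction, the subset contains 8 items from group "a" and 2 from group "b", preserving the 80/20 split of the population.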

3. Gibbs Sampling

Gibbs sampling is a way of sampling from a probability distribution of two or more dimensions, that is, a multivariate distribution. It's a Markov chain Monte Carlo (MCMC) method, which means that each sample depends on the previous one, so consecutive samples are correlated rather than independent. Because of that, samples from the beginning of the chain (the burn-in period) are usually discarded, since the chain may not yet have reached the desired distribution and those samples may not represent it accurately.

The main point of Gibbs sampling is that, given a multivariate distribution, it's simpler to sample from a conditional distribution than from the joint distribution. For instance, instead of sampling directly from a joint distribution $P(x, y)$, Gibbs sampling proposes sampling from the two conditional distributions $P(x \mid y)$ and $P(y \mid x)$.

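For a joint distribution $P(x, y)$, we start with a random sample $(x^{(0)}, y^{(0)})$. Then we sample $x^{(1)}$ from the conditional distribution $P(x \mid y^{(0)})$ and $y^{(1)}$ from the conditional distribution $P(y \mid x^{(1)})$. Analogously, we sample $x^{(2)}$ from $P(x \mid y^{(1)})$ and $y^{(2)}$ from $P(y \mid x^{(2)})$.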
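The two-variable scheme above can be sketched for a distribution whose conditionals are easy to sample: a standard bivariate normal with correlation $\rho$, for which $x \mid y \sim \mathcal{N}(\rho y, 1 - \rho^2)$ and $y \mid x \sim \mathcal{N}(\rho x, 1 - \rho^2)$. The target distribution, the starting point $(0, 0)$, the burn-in length and the function name `gibbs_bivariate_normal` are illustrative assumptions, not part of the original method description.

```python
import math
import random


def gibbs_bivariate_normal(rho, n_samples, burn_in=1000, seed=0):
    """Illustrative two-variable Gibbs sampler for a standard bivariate normal.

    Both conditionals are one-dimensional normals:
    x | y ~ N(rho * y, 1 - rho**2) and y | x ~ N(rho * x, 1 - rho**2).
    """
    rng = random.Random(seed)
    cond_sd = math.sqrt(1.0 - rho ** 2)  # standard deviation of each conditional
    x, y = 0.0, 0.0  # arbitrary starting point (x^(0), y^(0))
    samples = []
    for i in range(burn_in + n_samples):
        x = rng.gauss(rho * y, cond_sd)  # x^(i+1) ~ P(x | y^(i))
        y = rng.gauss(rho * x, cond_sd)  # y^(i+1) ~ P(y | x^(i+1))
        if i >= burn_in:  # discard the burn-in period
            samples.append((x, y))
    return samples


samples = gibbs_bivariate_normal(rho=0.8, n_samples=50_000)
n = len(samples)
mean_xy = sum(x * y for x, y in samples) / n
print(f"E[xy] ~ {mean_xy:.3f}")  # should be close to rho = 0.8
```

Because both variables have zero mean and unit variance, the average of $xy$ estimates the correlation, so it should come out close to $\rho$.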

4. Gibbs Sampling Definition

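Suppose we want to obtain samples of $X = (x_1, x_2, \ldots, x_n)$ from a joint distribution $P(x_1, x_2, \ldots, x_n)$. Let's denote the $i$-th sample as $X^{(i)} = (x_1^{(i)}, x_2^{(i)}, \ldots, x_n^{(i)})$. The algorithm for Gibbs sampling is:

- Start with an initial sample $X^{(0)}$.

- For $i = 0, 1, 2, \ldots$, until we have enough samples, obtain $X^{(i+1)}$ from $X^{(i)}$ by sampling each component in turn, always conditioning on the most recent values of the other components:

$$x_j^{(i+1)} \sim P\left(x_j \mid x_1^{(i+1)}, \ldots, x_{j-1}^{(i+1)}, x_{j+1}^{(i)}, \ldots, x_n^{(i)}\right), \qquad j = 1, \ldots, n$$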
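A minimal, generic sketch of this definition in Python, assuming we can write a function that draws one component from its full conditional distribution. Each sweep updates the components one at a time, so every draw conditions on the newest values of the other components. The names `gibbs_sampler` and `gaussian_conditional` are hypothetical, and the three-dimensional Gaussian target (given by its precision matrix $Q$, for which $x_j$ given the rest is normal with mean $-\frac{1}{Q_{jj}} \sum_{i \ne j} Q_{ji} x_i$ and variance $\frac{1}{Q_{jj}}$) is only a demonstration.

```python
import math
import random
from typing import Callable, List

# A conditional sampler receives the index j, the current state x and an RNG,
# and returns a draw of x[j] from P(x_j | all other components of x).
ConditionalSampler = Callable[[int, List[float], random.Random], float]


def gibbs_sampler(x0: List[float], sample_conditional: ConditionalSampler,
                  n_samples: int, burn_in: int = 0, seed: int = 0) -> List[List[float]]:
    """Systematic-scan Gibbs sampler: one sweep updates every component once, in order."""
    rng = random.Random(seed)
    x = list(x0)  # current state, starting from X^(0)
    samples = []
    for i in range(burn_in + n_samples):
        for j in range(len(x)):
            # x[:j] already hold this sweep's values, x[j+1:] still hold the previous sweep's
            x[j] = sample_conditional(j, x, rng)
        if i >= burn_in:
            samples.append(list(x))  # store a copy of the completed sample
    return samples


# Demonstration target (an assumption): zero-mean Gaussian with covariance
# [[1, 0.5, 0], [0.5, 1, 0], [0, 0, 1]]; Q is its inverse (precision matrix).
Q = [[4 / 3, -2 / 3, 0.0],
     [-2 / 3, 4 / 3, 0.0],
     [0.0, 0.0, 1.0]]


def gaussian_conditional(j: int, x: List[float], rng: random.Random) -> float:
    # x_j | rest ~ N(-(1 / Q[j][j]) * sum_{i != j} Q[j][i] * x[i], 1 / Q[j][j])
    mean = -sum(Q[j][i] * x[i] for i in range(len(x)) if i != j) / Q[j][j]
    return rng.gauss(mean, math.sqrt(1.0 / Q[j][j]))


samples = gibbs_sampler([0.0, 0.0, 0.0], gaussian_conditional, n_samples=50_000, burn_in=1_000)
cov01 = sum(s[0] * s[1] for s in samples) / len(samples)
print(f"Cov(x0, x1) ~ {cov01:.3f}")  # should be close to 0.5
```

Updating `x` in place is what makes each draw use the current sweep's values for the earlier components and the previous sweep's values for the later ones, matching the conditioning in the definition.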

5. Example of Gibbs Sampling

To better explain this method, we'll present a simple example. Let's assume that we have two events $A$ and $B$, where $A = 1$ means that event A happens and $A = 0$ means that it doesn't (and similarly for $B$). Assume that their joint probabilities are given as:

$P(A=1, B=1) = 0.2$ (both events will happen)

$P(A=1, B=0) = 0.4$ (only event A will happen)

$P(A=0, B=1) = 0.3$ (only event B will happen)

$P(A=0, B=0) = 0.1$ (neither A nor B will happen)

Suppose that we want to sample from the joint distribution above. We need to generate a sequence of pairs $(A, B)$ where $A, B \in \{0, 1\}$. For example, $(1, 0)$ indicates that only event A has happened.

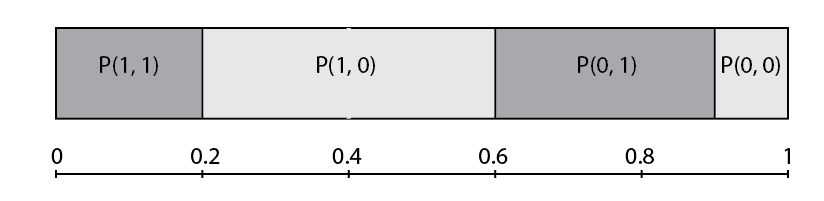

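Of course, there is a simple way of sampling from the joint distribution above: we take the unit interval and divide it into four parts, where the length of each part is equal to one of the probabilities above.

For example, we can generate a random number $u$ between $0$ and $1$. If $u$ is between $0$ and $0.2$, then our sample is $(1, 1)$; if $u$ is between $0.2$ and $0.6$, our sample is $(1, 0)$, and analogously for the others:

$$
(A, B) =
\begin{cases}
(1, 1), & 0 \le u < 0.2 \\
(1, 0), & 0.2 \le u < 0.6 \\
(0, 1), & 0.6 \le u < 0.9 \\
(0, 0), & 0.9 \le u \le 1
\end{cases}
$$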
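The unit-interval method takes only a few lines of code. This sketch assumes the parts are laid out in the order $(1, 1)$, $(1, 0)$, $(0, 1)$, $(0, 0)$ and that $P(A=1, B=1) = 0.2$, the value that makes the four probabilities sum to 1; `sample_joint` is a hypothetical helper name.

```python
import random

# Joint distribution of (A, B) from the example, in the order the parts are laid out.
JOINT = [((1, 1), 0.2), ((1, 0), 0.4), ((0, 1), 0.3), ((0, 0), 0.1)]


def sample_joint(u):
    """Map a number u in [0, 1) to a pair (A, B) by splitting [0, 1) into consecutive parts."""
    upper = 0.0
    for pair, p in JOINT:
        upper += p  # right end of the part that belongs to this pair
        if u < upper:
            return pair
    return JOINT[-1][0]  # guard against floating-point round-off for u close to 1


rng = random.Random(42)
draws = [sample_joint(rng.random()) for _ in range(100_000)]
print(draws.count((1, 0)) / len(draws))  # should be close to 0.4
```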

For this simple example, the unit-interval method is more convenient than Gibbs sampling. For more complicated distributions, especially multivariate continuous ones, splitting an interval like this quickly becomes impractical.

5.1. Conditional Distribution in Gibbs Sampling

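In the Gibbs sampling method, we need to calculate the conditional distributions $P(A \mid B)$ and $P(B \mid A)$. For example, to calculate $P(A \mid B = 0)$, we normalize the values $P(A=1, B=0) = 0.4$ and $P(A=0, B=0) = 0.1$ by their sum $P(B=0) = 0.5$ and get:

$$P(A=1 \mid B=0) = \frac{0.4}{0.5} = 0.8, \qquad P(A=0 \mid B=0) = \frac{0.1}{0.5} = 0.2$$

Similarly, for $P(A \mid B = 1)$, we have:

$$P(A=1 \mid B=1) = \frac{0.2}{0.5} = 0.4, \qquad P(A=0 \mid B=1) = \frac{0.3}{0.5} = 0.6$$

For $P(B \mid A)$, the values are:

$$P(B=1 \mid A=1) = \frac{0.2}{0.6} = \frac{1}{3}, \qquad P(B=0 \mid A=1) = \frac{0.4}{0.6} = \frac{2}{3}$$

$$P(B=1 \mid A=0) = \frac{0.3}{0.4} = 0.75, \qquad P(B=0 \mid A=0) = \frac{0.1}{0.4} = 0.25$$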
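The normalization step can be checked with exact fractions. This is a small sketch assuming the joint probabilities of the example, including $P(A=1, B=1) = 0.2$, which makes the four values sum to 1; the helper names `conditional_a_given_b` and `conditional_b_given_a` are hypothetical.

```python
from fractions import Fraction

# Joint probabilities P(A=a, B=b) of the example, as exact fractions.
joint = {
    (1, 1): Fraction(2, 10),
    (1, 0): Fraction(4, 10),
    (0, 1): Fraction(3, 10),
    (0, 0): Fraction(1, 10),
}


def conditional_a_given_b(b):
    """P(A | B=b): normalize the entries with B=b by their sum P(B=b)."""
    p_b = joint[(1, b)] + joint[(0, b)]
    return {a: joint[(a, b)] / p_b for a in (0, 1)}


def conditional_b_given_a(a):
    """P(B | A=a): normalize the entries with A=a by their sum P(A=a)."""
    p_a = joint[(a, 1)] + joint[(a, 0)]
    return {b: joint[(a, b)] / p_a for b in (0, 1)}


for b in (0, 1):
    print(f"P(A | B={b}) =", {a: str(p) for a, p in conditional_a_given_b(b).items()})
for a in (0, 1):
    print(f"P(B | A={a}) =", {b: str(p) for b, p in conditional_b_given_a(a).items()})
# Prints:
# P(A | B=0) = {0: '1/5', 1: '4/5'}
# P(A | B=1) = {0: '3/5', 1: '2/5'}
# P(B | A=0) = {0: '1/4', 1: '3/4'}
# P(B | A=1) = {0: '2/3', 1: '1/3'}
```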

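Once we know all conditional distributions, we can start with the Gibbs sampling algorithm. Assume that the initial random sample $(A^{(0)}, B^{(0)})$ has $B^{(0)} = 0$; the value of $A^{(0)}$ doesn't affect the first step, because $A^{(1)}$ is drawn conditioned only on $B^{(0)}$. Now we need to sample $A^{(1)}$ from the conditional distribution $P(A \mid B = 0)$. From the above, the probability for $A = 1$ is $0.8$ and for $A = 0$ is $0.2$. Let's assume that we sample $A^{(1)} = 1$.

Now we need to sample $B^{(1)}$ from the conditional distribution $P(B \mid A = 1)$. From the above, the probability for $B = 1$ is $\frac{1}{3}$ and for $B = 0$ is $\frac{2}{3}$. Let's assume that we sample $B^{(1)} = 0$. It means that our second sample is equal to $(1, 0)$ and our sample set is currently $\{(A^{(0)}, 0), (1, 0)\}$.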

After this, we repeat the two steps, alternately sampling $A$ given the latest $B$ and $B$ given the latest $A$, until we have generated the desired number of samples.
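Putting the steps together gives a minimal sketch of the whole sampler for this example. It assumes the conditional probabilities derived from the example's joint distribution ($P(A=1 \mid B=0) = 0.8$, $P(A=1 \mid B=1) = 0.4$, $P(B=1 \mid A=0) = 0.75$, $P(B=1 \mid A=1) = \frac{1}{3}$), an initial sample $(0, 0)$ and a burn-in of 1,000 iterations; the function name `gibbs_two_events` and these settings are illustrative choices, not part of the method.

```python
import random
from collections import Counter

# Conditional distributions of the example, stored as the probability of drawing 1.
P_A1_GIVEN_B = {0: 0.8, 1: 0.4}     # P(A=1 | B=b)
P_B1_GIVEN_A = {0: 0.75, 1: 1 / 3}  # P(B=1 | A=a)


def gibbs_two_events(n_samples, burn_in=1_000, a0=0, b0=0, seed=0):
    rng = random.Random(seed)
    a, b = a0, b0  # initial sample (A^(0), B^(0))
    samples = []
    for i in range(burn_in + n_samples):
        a = 1 if rng.random() < P_A1_GIVEN_B[b] else 0  # A^(i+1) ~ P(A | B^(i))
        b = 1 if rng.random() < P_B1_GIVEN_A[a] else 0  # B^(i+1) ~ P(B | A^(i+1))
        if i >= burn_in:  # keep only samples after the burn-in period
            samples.append((a, b))
    return samples


samples = gibbs_two_events(n_samples=200_000)
counts = Counter(samples)
freqs = {pair: counts[pair] / len(samples) for pair in [(1, 1), (1, 0), (0, 1), (0, 0)]}
print(freqs)  # frequencies should be close to 0.2, 0.4, 0.3 and 0.1
```

With enough samples, the relative frequency of each pair approaches the joint probabilities, even though the sampler never draws from the joint distribution directly.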

6. Conclusion

In this article, we explained what the term sampling means. Our main goal was to explain Gibbs sampling using a formal definition, a simple explanation, and a simple example. Gibbs sampling is very useful when the joint distribution is not known explicitly or is difficult to sample from directly, but the conditional distribution of each variable is known and easier to sample from.