
Last updated: September 16, 2024

In this tutorial, we’ll discuss the binomial distribution and its application.

Probability measures how likely an event is to occur. We use it in everyday conversations, like saying, “There’s a good chance it will rain today”, or “The odds of winning the football game are very low”.

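In mathematical terms, when all outcomes are equally likely, probability is the ratio of the number of favorable outcomes to the total number of possible outcomes. For example, when we flip a fair coin, there are two possible outcomes: heads or tails. The probability of getting heads, denoted as $P(H)$, is:

$$P(H) = \frac{\text{number of favorable outcomes}}{\text{total number of outcomes}} = \frac{1}{2} = 0.5$$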

In probability, we often deal with two types of random variables: discrete and continuous. Discrete random variables take countable values, and their probabilities are described using the Probability Mass Function (PMF). This tells us the likelihood of specific outcomes, like getting a certain number of heads when flipping a coin. Continuous random variables, which can take any value within a range, use the Probability Density Function (PDF) to describe how likely the variable falls within a particular interval.

Finally, the Cumulative Distribution Function (CDF), $F(x) = P(X \le x)$, gives us the probability that a variable is less than or equal to a certain value. It applies to both discrete and continuous distributions.

Regarding standard distributions, discrete distributions like the Binomial, Poisson, and Geometric distributions help us understand events that we can count, while continuous ones like the Normal, Exponential, and Uniform distributions handle measurements. Since our main focus here is the Binomial distribution, we’ll dive into that next.

Before diving into the binomial distribution, let’s quickly touch on Bernoulli trials. A Bernoulli trial is a basic random experiment with only two possible outcomes: success or failure. Think of flipping a coin: heads might be a success, tails a failure. The probability of success is $p$, and the probability of failure is $q = 1 - p$, with both summing to $1$. When we repeat these trials independently a fixed number of times, we get the binomial distribution.

Imagine we flip a fair coin 3 times and want to calculate the probability of getting exactly 2 heads.

Let’s derive the binomial distribution formula from scratch.

For 3 coin flips, the possible outcomes (sequences) are:

HHH, HHT, HTH, HTT, THH, THT, TTH, TTT

There are $2^3 = 8$ possible outcomes in total.

We’re interested in the outcomes with exactly 2 heads. These are:

HHT, HTH, THH

There are 3 outcomes with exactly 2 heads.
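We can reproduce this enumeration with a short Python sketch: `itertools.product` generates every sequence of 3 flips, and a list comprehension keeps only the ones with exactly 2 heads:

```python
from itertools import product

# Every sequence of 3 flips, joined into strings such as "HHT"
outcomes = ["".join(seq) for seq in product("HT", repeat=3)]

# Keep only the sequences that contain exactly 2 heads
two_heads = [s for s in outcomes if s.count("H") == 2]

print(len(outcomes), two_heads)  # 8 ['HHT', 'HTH', 'THH']
```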

The flips are independent, meaning that the outcome of one coin flip doesn’t affect the outcome of any other flip. As a result, the probability of any particular sequence is the product of the probabilities of its individual flips.

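For example, the probability of the sequence HHT is:

$$P(HHT) = P(H) \times P(H) \times P(T) = 0.5 \times 0.5 \times 0.5 = 0.125$$

Since the coin is fair, all sequences have the same probability:

$$P(\text{any sequence}) = (0.5)^3 = 0.125$$

Since there are 3 successful outcomes (HHT, HTH, and THH), and each has a probability of 0.125, the probability of getting exactly 2 heads, where $X$ denotes the number of heads, is:

$$P(X = 2) = 3 \times 0.125 = 0.375$$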
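The same calculation can be written with exact fractions. This sketch assumes a fair coin and uses Python’s `fractions.Fraction` so that $1/8$ and $3/8$ come out exactly; `sequence_probability` is a hypothetical helper name:

```python
from fractions import Fraction
from itertools import product

p_heads = Fraction(1, 2)  # fair coin (assumption of this example)
p_tails = 1 - p_heads


def sequence_probability(seq: str) -> Fraction:
    """Multiply the per-flip probabilities; valid because the flips are independent."""
    prob = Fraction(1)
    for flip in seq:
        prob *= p_heads if flip == "H" else p_tails
    return prob


print(sequence_probability("HHT"))  # 1/8

# Add up the probabilities of every sequence with exactly 2 heads
p_two_heads = sum(
    sequence_probability("".join(seq))
    for seq in product("HT", repeat=3)
    if seq.count("H") == 2
)
print(p_two_heads, float(p_two_heads))  # 3/8 0.375
```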

Now, let’s generalize this approach to any number of trials $n$ and any number of successes $k$.

The number of different sequences (combinations) that result in exactly $k$ successes (heads) out of $n$ trials (flips) is given by the binomial coefficient:

$$\binom{n}{k} = \frac{n!}{k!\,(n-k)!}$$

For our example, this is:

$$\binom{3}{2} = \frac{3!}{2!\,1!} = \frac{6}{2} = 3$$

This matches the successful outcomes we identified earlier.
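In code, the binomial coefficient can be computed directly from the factorial definition or with `math.comb` (available since Python 3.8). The helper `binomial_coefficient` below is just an illustrative name:

```python
import math


def binomial_coefficient(n: int, k: int) -> int:
    """n! / (k! * (n - k)!), computed straight from the factorial definition."""
    return math.factorial(n) // (math.factorial(k) * math.factorial(n - k))


print(binomial_coefficient(3, 2))  # 3

# math.comb computes the same value without building large factorials
print(math.comb(3, 2))  # 3
print(binomial_coefficient(15, 12), math.comb(15, 12))  # 455 455
```

The factorial version mirrors the formula, but `math.comb` is the better choice in practice because it avoids computing huge intermediate factorials.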

The probability of any one specific sequence with $k$ successes and $n - k$ failures is:

$$p^k (1-p)^{n-k}$$

For our example, with $n = 3$, $k = 2$, and $p = 0.5$, this is:

$$(0.5)^2 (1 - 0.5)^{1} = 0.25 \times 0.5 = 0.125$$

Finally, we multiply the probability of one sequence by the number of such sequences to get the total probability:

For our example:

$$P(X = 2) = \binom{3}{2} (0.5)^2 (0.5)^1 = 3 \times 0.125 = 0.375$$

This gives us the binomial formula:

$$P(X = k) = \binom{n}{k} p^k (1-p)^{n-k}$$

This formula allows us to calculate the probability of exactly $k$ successes in $n$ independent trials, each with a success probability of $p$.
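Here’s a minimal sketch of the formula as a Python function; `binomial_pmf` is our own illustrative name. Returning 0 for values of `k` outside `0..n` is a convenience choice of this sketch:

```python
from math import comb


def binomial_pmf(k: int, n: int, p: float) -> float:
    """P(X = k) = C(n, k) * p**k * (1 - p)**(n - k)."""
    if not 0 <= k <= n:
        return 0.0  # impossible number of successes
    return comb(n, k) * p**k * (1 - p) ** (n - k)


print(binomial_pmf(2, 3, 0.5))  # 0.375
```

If SciPy is available, `scipy.stats.binom.pmf(k, n, p)` should return the same values.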

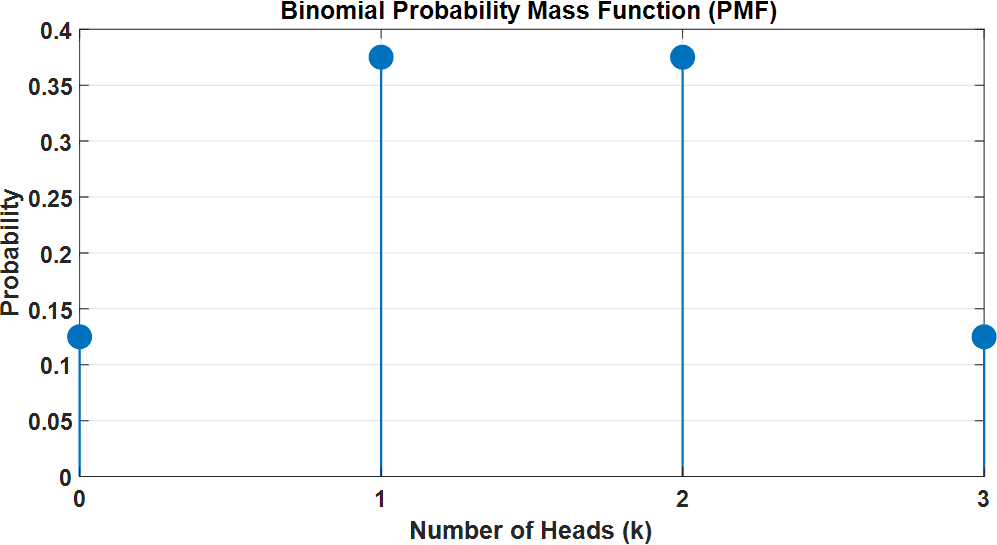

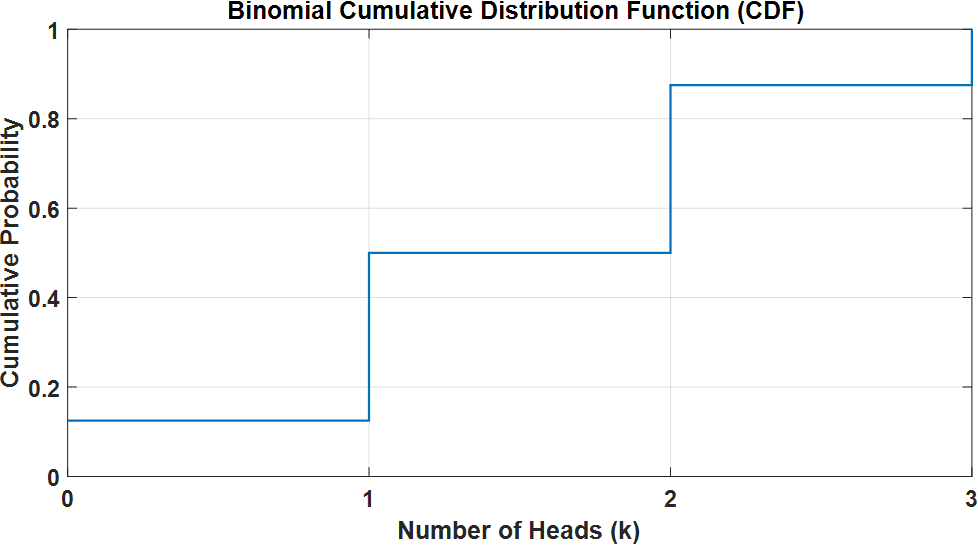

We’ll plot the PMF and the CDF of the number of heads for our example of flipping a fair coin 3 times:

The PMF plot visualizes the probability of each possible number of heads. In this example, it shows that getting exactly 2 heads has a probability of 0.375, which matches our earlier calculation.

The CDF plot represents the cumulative probability of getting up to a certain number of heads. For instance, the CDF value at 2 heads tells us the probability of getting 0, 1, or 2 heads combined.
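The values behind these two plots can be computed with a few lines of Python. This sketch builds the PMF with the binomial formula and obtains the CDF as a running sum via `itertools.accumulate`; plotting is left out to keep it dependency-free:

```python
from itertools import accumulate
from math import comb

n, p = 3, 0.5  # 3 flips of a fair coin

# PMF: probability of exactly k heads, for k = 0..n
pmf = [comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(n + 1)]

# CDF: running sum of the PMF, i.e. P(X <= k)
cdf = list(accumulate(pmf))

for k in range(n + 1):
    print(f"k={k}  PMF={pmf[k]:.3f}  CDF={cdf[k]:.3f}")
```

For example, the CDF at 2 heads comes out as $0.125 + 0.375 + 0.375 = 0.875$.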

Let’s discuss some of the important properties of the binomial distribution.

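When we want to determine if a scenario follows a binomial distribution, we should check if it meets these conditions:

- There’s a fixed number of trials, $n$
- Each trial has only two possible outcomes: success or failure
- The probability of success, $p$, is the same for every trial
- The trials are independent of each other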

If all these hold, we’re dealing with a binomial distribution.

The mean, or expected value, tells us the average number of successes we can expect. The formula is simple:

$$\mu = E[X] = np$$

This helps us figure out what to expect in the long run.

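Variance shows us how spread out the results are. For a binomial distribution, it’s given by:

$$\sigma^2 = np(1-p)$$

It measures the average squared distance of the outcomes from the mean, so a larger variance means the number of successes varies more from one experiment to the next.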

The standard deviation is just the square root of the variance:

$$\sigma = \sqrt{np(1-p)}$$

It gives a more intuitive sense of how far outcomes typically are from the mean.

Skewness tells us if the distribution leans to one side. The formula is:

$$\gamma_1 = \frac{1-2p}{\sqrt{np(1-p)}}$$

When $p = 0.5$, the distribution is symmetric. If $p < 0.5$, it’s skewed to the right, and if $p > 0.5$, it’s skewed to the left.

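Kurtosis measures how heavy the tails of the distribution are (and, informally, how peaked it is) compared with a normal distribution. The formula for the excess kurtosis, which is 0 for a normal distribution, is:

$$\gamma_2 = \frac{1 - 6p(1-p)}{np(1-p)}$$

A positive excess kurtosis means heavier tails and a sharper peak, while a negative one suggests lighter tails and a flatter shape.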
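These closed-form properties translate directly into a small Python helper. It’s a sketch: `binomial_properties` is a hypothetical name, it assumes $0 < p < 1$ (otherwise the variance is 0 and skewness and kurtosis are undefined), and it reports the *excess* kurtosis, which is 0 for a normal distribution:

```python
import math


def binomial_properties(n: int, p: float) -> dict:
    """Closed-form summary statistics of Binomial(n, p); assumes 0 < p < 1."""
    q = 1 - p
    variance = n * p * q
    return {
        "mean": n * p,
        "variance": variance,
        "std_dev": math.sqrt(variance),
        "skewness": (q - p) / math.sqrt(variance),  # same as (1 - 2p) / sqrt(npq)
        "excess_kurtosis": (1 - 6 * p * q) / variance,  # 0 for a normal distribution
    }


for name, value in binomial_properties(3, 0.5).items():
    print(f"{name}: {value:.4f}")
```

With SciPy installed, `scipy.stats.binom.stats(n, p, moments='mvsk')` returns the mean, variance, skewness, and excess kurtosis, which makes for a handy cross-check.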

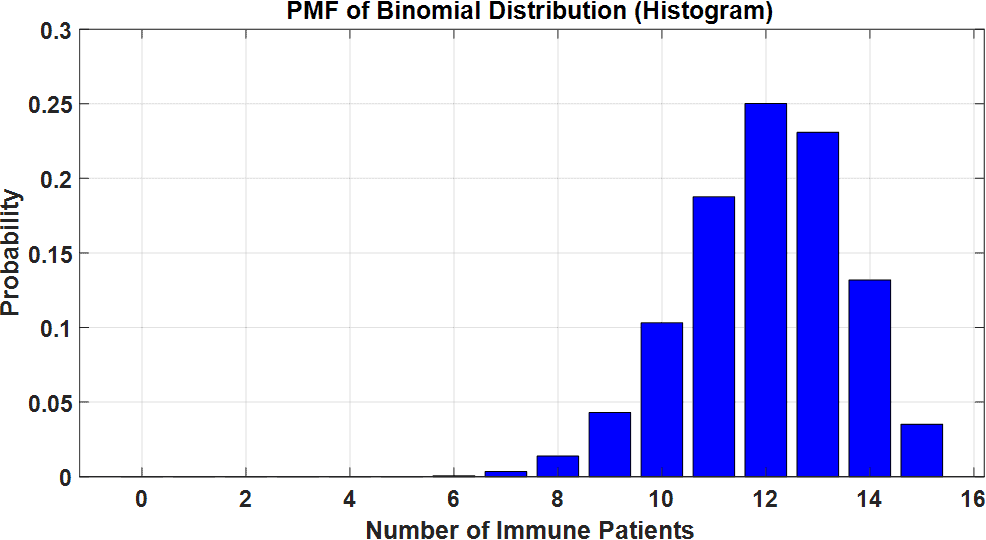

Let’s take a real-world scenario in the health sector: a vaccine trial. Suppose a vaccine is being tested and has an 80% success rate (i.e., it works for 80% of the patients). We run the trial on 15 patients and want to know how many will likely develop immunity.

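This scenario meets the binomial distribution criteria:

- A fixed number of trials: $n = 15$ patients
- Two possible outcomes per patient: immunity (success) or no immunity (failure)
- A constant probability of success: $p = 0.8$ for every patient
- Independence: one patient’s response doesn’t affect another’s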

The mean, or expected number of immune patients, is:

$$\mu = np = 15 \times 0.8 = 12$$

We expect 12 out of 15 patients to develop immunity.
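To see what this expected value means in practice, we can simulate the trial many times. This Monte Carlo sketch assumes each of the 15 patients independently develops immunity with probability 0.8; `simulate_trial` is a hypothetical helper, and the seed is fixed only for reproducibility:

```python
import random


def simulate_trial(n: int, p: float, rng: random.Random) -> int:
    """Hypothetical helper: count how many of n patients develop immunity."""
    return sum(rng.random() < p for _ in range(n))


rng = random.Random(7)  # fixed seed so that runs are reproducible
results = [simulate_trial(15, 0.8, rng) for _ in range(50_000)]

# The average over many simulated trials should be close to n * p = 12
print(sum(results) / len(results))
```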

Let’s break down the vaccine trial scenario using the binomial distribution formula step by step:

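Let’s calculate the probability of exactly 12 out of 15 patients developing immunity (i.e., $X = 12$):

$$P(X = 12) = \binom{15}{12} (0.8)^{12} (0.2)^{3}$$

First, calculate the binomial coefficient:

$$\binom{15}{12} = \frac{15!}{12!\,3!} = \frac{15 \times 14 \times 13}{3 \times 2 \times 1} = 455$$

Now, calculate the powers:

$$(0.8)^{12} \approx 0.0687, \qquad (0.2)^{3} = 0.008$$

Finally, putting everything together:

$$P(X = 12) = 455 \times 0.0687 \times 0.008 \approx 0.250$$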

Thus, the probability of exactly 12 patients developing immunity is approximately 0.250, or 25%.
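The same three steps can be checked in Python with `math.comb`. This is a sketch with the scenario’s values hard-coded:

```python
from math import comb

n, k, p = 15, 12, 0.8  # 15 patients, 12 immune, 80% success rate

coefficient = comb(n, k)            # binomial coefficient: 455
success_part = p**k                 # (0.8)^12
failure_part = (1 - p) ** (n - k)   # (0.2)^3
probability = coefficient * success_part * failure_part

print(coefficient, round(success_part, 4), round(failure_part, 3), round(probability, 4))
```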

Let’s now plot the PMF and CDF to visualize the distribution:

The PMF plot shows how likely each number of immune patients is, with the highest probability at 12 patients.

The CDF plot accumulates the probabilities, giving us a sense of how likely it is to have a certain number or fewer immune patients. For example, by looking at the CDF, we can easily estimate the probability of having fewer than 10 immune patients.
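For instance, the probability of fewer than 10 immune patients is $P(X \le 9) = F(9)$. Here’s a minimal sketch that computes it by summing the PMF; `binomial_cdf` is our own illustrative name, and with SciPy, `scipy.stats.binom.cdf(9, 15, 0.8)` should agree:

```python
from math import comb


def binomial_cdf(k: int, n: int, p: float) -> float:
    """P(X <= k): sum of the binomial PMF from 0 up to k."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k + 1))


n, p = 15, 0.8
# "Fewer than 10" immune patients means X <= 9
print(f"P(X < 10) = {binomial_cdf(9, n, p):.4f}")
```

The result is roughly 0.061, so ending up with fewer than 10 immune patients is fairly unlikely.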

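The variance, which shows the spread of the number of immune patients, is:

$$\sigma^2 = np(1-p) = 15 \times 0.8 \times 0.2 = 2.4$$

The standard deviation is:

$$\sigma = \sqrt{2.4} \approx 1.55$$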

Let’s illustrate this with a plot:

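We fill the region on the plot from $\mu - \sigma \approx 10.45$ to $\mu + \sigma \approx 13.55$ in red, showing one standard deviation (1.55) on either side of the mean. This highlights that the number of immune patients will most likely fall between about 10.45 and 13.55, that is, 11, 12, or 13 patients, which together have a probability of about 0.67. This gives us a sense of the typical spread in the data.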
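Because the number of immune patients is an integer, we can compute exactly how much probability falls inside the one-standard-deviation band. This sketch hard-codes $n = 15$ and $p = 0.8$:

```python
import math
from math import comb

n, p = 15, 0.8
mean = n * p
std_dev = math.sqrt(n * p * (1 - p))
low, high = mean - std_dev, mean + std_dev  # about 10.45 and 13.55

# X only takes integer values, so collect the integers inside [low, high]
ks = [k for k in range(n + 1) if low <= k <= high]
within = sum(comb(n, k) * p**k * (1 - p) ** (n - k) for k in ks)

print(ks, round(within, 3))  # [11, 12, 13] 0.669
```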

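For our scenario, the skewness is:

$$\text{skewness} = \frac{1 - 2 \times 0.8}{\sqrt{15 \times 0.8 \times 0.2}} = \frac{-0.6}{\sqrt{2.4}} \approx -0.39$$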

Since it’s negative, the distribution is slightly skewed to the left.

Let’s show the skewness of the distribution:

The left tail of the distribution is longer and thinner, which reflects the negative skewness of about -0.39. This means that although the number of immune patients will mostly be close to the mean of 12, there is a small chance that noticeably fewer patients develop immunity.

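The excess kurtosis is:

$$\frac{1 - 6p(1-p)}{np(1-p)} = \frac{1 - 6 \times 0.8 \times 0.2}{15 \times 0.8 \times 0.2} = \frac{0.04}{2.4} \approx 0.017$$

This value is very close to zero, so the distribution’s peak and tails are almost the same as those of a normal distribution, as illustrated in the next plot:

An excess kurtosis this close to zero implies that the distribution is neither noticeably more sharply peaked nor noticeably flatter than a normal curve, and its tails are about as heavy. In other words, for this vaccine trial, skewness is the more telling shape property: the results are asymmetric, but not unusually peaked or heavy-tailed.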
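We can double-check the skewness and kurtosis by computing the central moments directly from the PMF instead of using the closed-form formulas. This sketch hard-codes $n = 15$ and $p = 0.8$ and assumes the excess-kurtosis convention (a normal distribution scores 0):

```python
from math import comb

n, p = 15, 0.8
pmf = [comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(n + 1)]

# Mean and central moments computed directly from the PMF
mean = sum(k * pk for k, pk in enumerate(pmf))
m2 = sum((k - mean) ** 2 * pk for k, pk in enumerate(pmf))  # variance
m3 = sum((k - mean) ** 3 * pk for k, pk in enumerate(pmf))
m4 = sum((k - mean) ** 4 * pk for k, pk in enumerate(pmf))

skewness = m3 / m2**1.5
excess_kurtosis = m4 / m2**2 - 3  # subtract 3 so that a normal distribution scores 0

print(round(mean, 3), round(m2, 3), round(skewness, 3), round(excess_kurtosis, 3))
```

The output should agree with $(1-2p)/\sqrt{np(1-p)}$ and $(1-6p(1-p))/(np(1-p))$ up to floating-point rounding.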

This simple scenario and its corresponding plots tie together all the properties of the binomial distribution and provide insight into how we can predict outcomes in a medical trial setting.

In this article, we provided a solid background for understanding the binomial distribution and its properties, and showed how to quantify probabilities in scenarios with binary outcomes.