
Last updated: February 12, 2025

In this tutorial, we take a closer look at the 0-1 loss function. It is an important metric for the quality of binary and multiclass classification algorithms.

Generally, the loss function plays a key role in deciding whether a machine learning algorithm is actually useful for a given dataset.

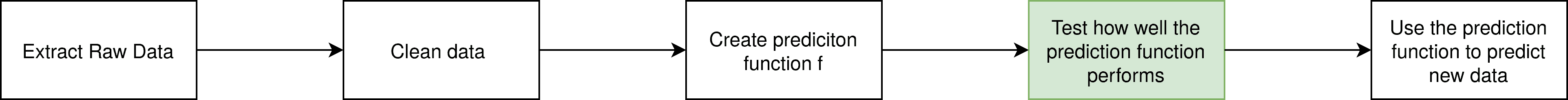

In the machine learning process, we have various steps including cleaning the data and creating a prediction function:

The step we want to take a closer look at is highlighted in green: measuring the quality of our prediction function. But how can we know whether the model we created actually represents the underlying structure of our data?

To measure the quality of a model, we focus on three metrics: loss, accuracy, and precision. There are many more, such as the F1 score, recall, and AUC, but we will stick to these three.

The loss function takes the actual values and compares them with the predicted ones. There are several ways to compare them. A loss of 0 signifies a perfect prediction. How to interpret a given amount of loss depends on the dataset and model. A popular loss function used in machine learning is the squared loss:

$$L = \frac{1}{n}\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^2$$

In this formula, $y_i$ is the correct result and $\hat{y}_i$ the predicted outcome for the $i$-th of our $n$ examples. Squaring the differences ensures that every term is non-negative and magnifies large errors. As we can see, we also average the sum by dividing it by $n$ to compensate for the size of our dataset.
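As a minimal sketch, the squared loss translates directly into plain Python. The function name `squared_loss` is our own choice, and we assume the true and predicted values come as equally long lists of numbers:

```python
def squared_loss(y_true, y_pred):
    """Mean squared loss: the average of (y_i - y_hat_i)^2 over all n examples."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    n = len(y_true)
    # Squaring makes every term non-negative and penalizes large errors more strongly
    return sum((y - y_hat) ** 2 for y, y_hat in zip(y_true, y_pred)) / n


# A perfect prediction gives a loss of 0
print(squared_loss([1.0, 0.0, 1.0], [1.0, 0.0, 1.0]))  # 0.0
# A single error of 2 contributes 4 to the sum, which is then divided by n = 2
print(squared_loss([3.0, 5.0], [1.0, 5.0]))  # 2.0
```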

Another statistical metric is accuracy, which directly measures how many of our predictions are right. Here, the size of an error does not matter, only whether a prediction is correct or not. Accuracy can be calculated with the formula:

$$\text{Accuracy} = \frac{\text{number of correct predictions}}{\text{total number of predictions}}$$
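A plain-Python sketch of accuracy could look like this. `accuracy` is a hypothetical helper name, and the labels can be any values that support equality comparison:

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that exactly match the true labels."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    # Only correctness counts, not how far off a wrong prediction is
    correct = sum(1 for y, y_hat in zip(y_true, y_pred) if y == y_hat)
    return correct / len(y_true)


# Two of the four predictions are right
print(accuracy(["dog", "cat", "dog", "dog"], ["dog", "dog", "dog", "cat"]))  # 0.5
```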

With our last metric, precision, we calculate how many of the examples we predicted as positive are actually positive. The formula for precision is given by:

$$\text{Precision} = \frac{TP}{TP + FP}$$

TP means true positives and FP false positives.
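The precision formula can be sketched the same way. This assumes binary labels with `1` as the positive class. Returning `0.0` when there are no positive predictions at all is our own convention here, since the formula is undefined in that case:

```python
def precision(y_true, y_pred, positive=1):
    """Precision = TP / (TP + FP): the share of positive predictions that are correct."""
    pairs = list(zip(y_true, y_pred))
    # True positives: predicted positive and actually positive
    tp = sum(1 for y, y_hat in pairs if y_hat == positive and y == positive)
    # False positives: predicted positive but actually negative
    fp = sum(1 for y, y_hat in pairs if y_hat == positive and y != positive)
    if tp + fp == 0:
        # No positive predictions: precision is undefined, we return 0.0 by convention
        return 0.0
    return tp / (tp + fp)


# Positive predictions at indices 0, 1, and 3; only 0 and 3 are truly positive, so 2 / 3
print(precision([1, 0, 0, 1], [1, 1, 0, 1]))
```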

Let’s have a look at an example to get a better understanding of this process. Suppose we are working with a dataset of three pictures, and we want to detect whether they show dogs or not. Our machine learning model gives us the probability that a dog is displayed in each picture. In this example, it gives us 80% for the first picture, 70% for the second, and 20% for the third. Our threshold for recognizing a picture as a dog picture is 70%, so any probability of at least 70% counts as a dog, and all of the pictures actually show dogs.

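Encoding a dog as 1 and comparing it with the predicted probabilities, we thus have a squared loss of $\frac{(1 - 0.8)^2 + (1 - 0.7)^2 + (1 - 0.2)^2}{3} = \frac{0.77}{3} \approx 0.26$, an accuracy of $\frac{2}{3}$, and a precision of $\frac{2}{2 + 0} = 1$, since we have 2 true positives and no false positives. The third picture, a dog that we failed to recognize, is a false negative: it lowers the accuracy but not the precision.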
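To double-check these numbers, here is a small Python sketch of the dog example. It assumes that a dog is encoded as `1`, that a probability of exactly 70% counts as a dog, and that the squared loss is computed on the raw probabilities:

```python
probs = [0.8, 0.7, 0.2]  # predicted probability of "dog" for each picture
labels = [1, 1, 1]       # ground truth: all three pictures show dogs (1 = dog)
threshold = 0.7

# Assumption: a probability of at least 70% counts as a dog prediction
preds = [1 if p >= threshold else 0 for p in probs]

n = len(labels)
# Squared loss computed on the raw probabilities
sq_loss = sum((y - p) ** 2 for y, p in zip(labels, probs)) / n
# Accuracy: share of thresholded predictions that match the labels
acc = sum(1 for y, y_hat in zip(labels, preds) if y == y_hat) / n

tp = sum(1 for y, y_hat in zip(labels, preds) if y_hat == 1 and y == 1)
fp = sum(1 for y, y_hat in zip(labels, preds) if y_hat == 1 and y == 0)
fn = sum(1 for y, y_hat in zip(labels, preds) if y_hat == 0 and y == 1)
prec = tp / (tp + fp)

print(preds)                                   # [1, 1, 0]
print(tp, fp, fn)                              # 2 0 1
print(round(sq_loss, 2), round(acc, 2), prec)  # 0.26 0.67 1.0
```

Since every picture really is a dog, no false positive can occur, which is why precision stays at 1 while the missed third picture shows up only in the accuracy and the loss.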

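The 0-1 loss function is closely tied to the accuracy we presented earlier: averaged over a dataset, it equals 1 minus the accuracy, i.e., the fraction of misclassified examples. Its formula is often presented quite differently, though:

$$L(y_i, \hat{y}_i) = \begin{cases} 0 & \text{if } y_i = \hat{y}_i \\ 1 & \text{if } y_i \neq \hat{y}_i \end{cases}$$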
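A minimal Python sketch of the 0-1 loss, applied to the thresholded predictions from the dog example; the helper names are hypothetical:

```python
def zero_one_loss(y, y_hat):
    # 0 for a correct prediction, 1 for a wrong one; the size of the error is ignored
    return 0 if y == y_hat else 1


def mean_zero_one_loss(y_true, y_pred):
    # Average 0-1 loss over the dataset, i.e. the fraction of misclassified examples
    return sum(zero_one_loss(y, y_hat) for y, y_hat in zip(y_true, y_pred)) / len(y_true)


y_true = [1, 1, 1]  # all pictures show dogs
y_pred = [1, 1, 0]  # thresholded predictions from the dog example
loss = mean_zero_one_loss(y_true, y_pred)
print(loss, 1 - loss)  # misclassification rate of 1/3 and accuracy of 2/3
```

Averaging the per-example losses yields the misclassification rate, and subtracting it from 1 recovers the accuracy of 2/3 from the example.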

This formulation also allows us to weigh outcomes differently. For example, when working with disease recognition, we might want as few false negatives as possible, so we could weigh them more heavily with the help of a loss matrix:

This loss matrix amplifies false negatives and weighs just half the loss of true positives.

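The general loss matrix for $K$ classes has the form:

$$L = \begin{pmatrix} L_{11} & \cdots & L_{1K} \\ \vdots & \ddots & \vdots \\ L_{K1} & \cdots & L_{KK} \end{pmatrix}$$

where $L_{jk}$ is the loss incurred when the true class is $j$ and we predict class $k$. The 0-1 loss is the special case with $L_{jk} = 0$ for $j = k$ and $L_{jk} = 1$ for $j \neq k$.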
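The following sketch shows how a loss matrix turns into an average loss. The matrix values are hypothetical and not the article's own matrix: we simply assume that a missed disease (false negative) costs five times as much as a false alarm (false positive):

```python
# Rows: true class, columns: predicted class (0 = healthy, 1 = diseased).
# HYPOTHETICAL costs for illustration: a false negative costs 5, a false positive costs 1.
LOSS_MATRIX = [
    [0.0, 1.0],  # true 0: predicted 0 (TN), predicted 1 (FP)
    [5.0, 0.0],  # true 1: predicted 0 (FN), predicted 1 (TP)
]
# The plain 0-1 loss as a loss matrix: every mistake costs 1
ZERO_ONE_MATRIX = [
    [0.0, 1.0],
    [1.0, 0.0],
]


def matrix_loss(y_true, y_pred, loss_matrix):
    """Average loss, where loss_matrix[j][k] is the cost of predicting k when the truth is j."""
    return sum(loss_matrix[y][y_hat] for y, y_hat in zip(y_true, y_pred)) / len(y_true)


y_true = [1, 0, 1, 0]
y_pred = [0, 1, 1, 0]  # one false negative, one false positive, two correct predictions
print(matrix_loss(y_true, y_pred, ZERO_ONE_MATRIX))  # 0.5
print(matrix_loss(y_true, y_pred, LOSS_MATRIX))      # 1.5
```

With the 0-1 matrix, both mistakes count equally; with the weighted matrix, the false negative dominates the average loss.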

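One problem with the 0-1 loss function remains: it is not differentiable. The loss is piecewise constant, so its gradient is zero wherever it exists and undefined at the jumps. Therefore, we cannot minimize it directly with gradient-based methods such as gradient descent. Fortunately, we can still use a broad palette of other classification algorithms that do not rely on gradient descent, such as k-nearest neighbors or Naive Bayes.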
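To see why gradient descent gets stuck, consider a hypothetical one-dimensional classifier that predicts 1 whenever `w * x >= 0.5`, trained on made-up data. The sketch below estimates the derivative of the average 0-1 loss with respect to the weight `w` by central finite differences:

```python
def classify(x, w):
    # Hypothetical 1-D classifier: predict class 1 if w * x reaches 0.5, else class 0
    return 1 if w * x >= 0.5 else 0


def mean_zero_one_loss(w, xs, ys):
    # Fraction of examples that the classifier with weight w gets wrong
    return sum(0 if classify(x, w) == y else 1 for x, y in zip(xs, ys)) / len(xs)


xs = [0.2, 0.6, 1.0, 1.5]  # made-up inputs
ys = [0, 0, 1, 1]          # made-up labels

h = 1e-4
grads = []
for w in [0.4, 0.8, 1.2]:
    # Central finite-difference estimate of d(loss)/dw at this weight
    grad = (mean_zero_one_loss(w + h, xs, ys) - mean_zero_one_loss(w - h, xs, ys)) / (2 * h)
    grads.append(grad)
    print(f"w={w}: loss={mean_zero_one_loss(w, xs, ys)}, gradient estimate={grad}")

# The loss does not change smoothly, it jumps between neighboring weights
print(mean_zero_one_loss(0.49, xs, ys), mean_zero_one_loss(0.51, xs, ys))
```

At every weight we try, the estimated derivative is 0, so a gradient step would not move `w` at all, even though the loss jumps from 0.25 to 0 between `w = 0.49` and `w = 0.51`.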

In this article, we covered the properties of the 0-1 loss function in the context of machine learning metrics.