
Last updated: July 26, 2023

The free command is a common utility of Linux-based operating systems that provides information about the system’s memory usage. When using the free command, we may come across two terms: “buffer” and “cache”. These terms refer to different types of memory management used by the operating system to optimize performance. In this tutorial, we’ll see the difference between buffers and caches, and how they can help us gain insights into our system’s memory utilization using the free command.

When we run the free command in a terminal, it displays several values for both physical memory and swap: the total, used, and free amounts, along with the memory that is shared or held in buffers and caches.

It’s important to note that the values reported by the free command are dynamic and change as processes and applications allocate or release memory. The values are presented in kibibytes by default, but we can specify different units, such as mebibytes or gibibytes, by using the appropriate options with the free command (e.g., free -m for mebibytes).
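To make the unit options concrete, here's a minimal sketch of the conversion from the default kibibyte values to larger units. The helper name kib_to is hypothetical, and the sketch uses plain integer division, which truncates. The free command itself may round differently, so we shouldn't expect an exact match in every case:

```shell
#!/bin/sh
# kib_to is a hypothetical helper: convert a KiB value (the default unit of
# free) into KiB, MiB, or GiB. Integer division truncates the result.
kib_to() {
    unit=$1
    kib=$2
    case "$unit" in
        k) echo "$kib" ;;
        m) echo $((kib / 1024)) ;;
        g) echo $((kib / 1024 / 1024)) ;;
        *) echo "unknown unit: $unit" >&2; return 1 ;;
    esac
}

# Illustrative value: 2966528 KiB is exactly 2897 MiB.
kib_to m 2966528
```

In practice, we'd simply pass -k, -m, -g, or -h to free and let it do the conversion for us.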

To know what the numbers mean, we must understand the virtual memory (VM) subsystem in Linux. Linux, like most modern operating systems, uses otherwise idle RAM for caching, so the free value on the Mem: line is almost always very low. The kernel releases caches automatically when memory gets scarce, so cached memory doesn't reduce what applications can use. Therefore, the -/+ buffers/cache line shows how much memory is used and free when buffers and cache are ignored. Newer versions of free no longer print this line and report an available column instead. A Linux system is really low on memory only if the free value in the -/+ buffers/cache line gets low:

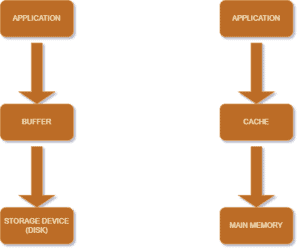
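The arithmetic behind the -/+ buffers/cache line can be sketched as follows. The helper name buffers_cache_line and the sample numbers are our own, chosen only for illustration: the adjusted used value subtracts buffers and cache from used, and the adjusted free value adds them to free:

```shell
#!/bin/sh
# buffers_cache_line is a hypothetical helper: given the four "Mem:" values
# (used, free, buffers, cached), print the adjusted "used" and "free" values
# as they would appear on the "-/+ buffers/cache" line.
buffers_cache_line() {
    used=$1
    free_mem=$2
    buffers=$3
    cached=$4
    echo "$((used - buffers - cached)) $((free_mem + buffers + cached))"
}

# Illustrative values in KiB, not taken from a real system.
buffers_cache_line 476160 2489344 30720 235520
```

When free prints values in mebibytes, the result can differ by one from what we compute from the displayed columns, because each column is converted from kibibytes separately.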

In the context of the free command, a buffer represents a portion of the system’s memory that temporarily stores data. When we access files on our computer’s hard drive or any other storage device, the operating system utilizes buffers to improve efficiency.

Buffers act as an intermediary between the storage device and the applications or processes that access it. On a read, data from the storage device is placed in the buffer before it reaches the application. Similarly, on a write, data is first placed in the buffer and written to the storage device later. This reduces the number of direct read or write operations on the storage device, which tends to be much slower than accessing data in the buffer.

The purpose of buffers is to optimize disk I/O operations by aggregating data and reducing the overhead associated with frequent disk access. Buffers can hold both read and write data, making them essential for enhancing the overall performance of disk operations.
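As a quick check, the buffers value that free reports comes from the Buffers field of /proc/meminfo, so we can read it directly. Here's a minimal sketch; the helper name meminfo_kib is hypothetical, and it accepts an optional file argument so it can be tried on saved copies of /proc/meminfo as well:

```shell
#!/bin/sh
# meminfo_kib is a hypothetical helper: print the value (in kB) of one field
# from a meminfo-style file, defaulting to /proc/meminfo.
meminfo_kib() {
    field=$1
    file=${2:-/proc/meminfo}
    # Match the field name exactly, so "Cached:" doesn't match "SwapCached:".
    awk -v f="$field:" '$1 == f { print $2 }' "$file"
}

# Only query the live system when /proc/meminfo is readable.
if [ -r /proc/meminfo ]; then
    echo "Buffers: $(meminfo_kib Buffers) kB"
fi
```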

Cache, on the other hand, is another memory management mechanism of the operating system. In the output of free, the cached value refers to the page cache: a portion of the main memory (RAM) in which the kernel keeps the contents of files that were recently read from or written to disk. Serving this data from RAM is much faster than retrieving it from the storage device again.

When an application or process requests file data, the operating system first checks whether the data is already in the cache. If it is, the data is returned from RAM, and no disk access is needed. The cache operates on the principle of locality: it keeps data that has been accessed recently or is likely to be accessed in the near future.

Caches therefore reduce I/O latency and improve overall system performance by keeping frequently used data in memory. This minimizes the time spent waiting for data to be fetched from the disk, which is comparatively slow.

SysV shared memory segments are also counted as cache. We can check the size of the shared memory segments using the ipcs -m command.
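To get a single number for the shared memory segments, we can sum the bytes column of the ipcs -m output. This is a sketch under an assumption: it expects the util-linux layout, where each segment row starts with a hexadecimal key and bytes is the fifth column. The helper name shm_total_bytes is hypothetical:

```shell
#!/bin/sh
# shm_total_bytes is a hypothetical helper: sum the "bytes" column of
# `ipcs -m` output read from stdin. It assumes segment rows start with a
# hexadecimal key (0x...) and that "bytes" is the fifth column.
shm_total_bytes() {
    awk '$1 ~ /^0x/ { sum += $5 } END { print sum + 0 }'
}

# Only query the live system when ipcs is installed.
if command -v ipcs >/dev/null 2>&1; then
    ipcs -m | shm_total_bytes
fi
```

If our version of ipcs prints a different layout, the column number in the awk program needs adjusting.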

The main distinction between buffer and cache, as reported by the free command, lies in their purpose and the type of data they manage. Buffers temporarily hold data on its way to or from the storage device, thereby optimizing disk I/O operations. Caches, on the other hand, keep copies of frequently accessed file data in the main memory so that later reads don't have to go to the disk.

While both buffer and cache aim to improve overall system performance, they operate at different levels. Buffers work at the level of the storage device and its raw blocks, whereas caches work at the level of file contents. Buffers help batch disk operations, while caches help avoid repeated disk reads.

When we run the free command, the reported values for buffers and caches represent the amount of memory allocated for these purposes. Monitoring these values can provide insights into the usage of memory resources in our system. It also helps identify potential bottlenecks or areas of improvement.

To understand the concept of buffers, let’s try a small experiment and watch the output of the free command:

$ free -m

total used free shared buffers cached

Mem: 2897 465 2431 0 30 230

-/+ buffers/cache: 204 2692

Swap: 4000 0 4000

$ sync

$ free -m

total used free shared buffers cached

Mem: 2897 466 2431 0 30 230

-/+ buffers/cache: 205 2691

Swap: 4000 0 4000

We know that the data gets flushed to the disk by running the sync command. However, we don’t see the buffer getting reduced even after executing the sync command.
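To see how much data sync actually has to flush, we can look at the Dirty field of /proc/meminfo, which counts memory waiting to be written back to disk. Here's a small sketch; the helper name dirty_kib is hypothetical, and the numbers we get depend entirely on what the system is doing at the moment:

```shell
#!/bin/sh
# dirty_kib is a hypothetical helper: print the Dirty value (in kB) from a
# meminfo-style file, defaulting to /proc/meminfo.
dirty_kib() {
    awk '$1 == "Dirty:" { print $2 }' "${1:-/proc/meminfo}"
}

# Compare the amount of dirty memory before and after a sync.
if [ -r /proc/meminfo ]; then
    echo "Dirty before sync: $(dirty_kib) kB"
    sync
    echo "Dirty after sync:  $(dirty_kib) kB"
fi
```

On an idle system, the value is usually small before the sync, which fits the unchanged output above.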

Now, let’s try writing a large file of about 1 GB to the disk:

$ dd if=/dev/zero of=test bs=1024k count=1000

As expected, the cached value increases, and free confirms this:

$ free -m

total used free shared buffers cached

Mem: 2897 1466 1430 0 32 1127

-/+ buffers/cache: 306 2590

Swap: 4000 0 4000

Let’s execute the sync command again and then check using free. We can see that the buffers value decreases, while the cached value isn’t reduced. This means that the dirty pages left in RAM by the dd command have been flushed to disk:

$ sync

$ free -m

total used free shared buffers cached

Mem: 2897 1466 1430 0 10 1127

-/+ buffers/cache: 306 2590

Swap: 4000 0 4000

Let’s now write to the drop_caches kernel parameter, which requires root privileges, in order to drop the cache:

$ cat /proc/sys/vm/drop_caches

0

$ echo "1" > /proc/sys/vm/drop_caches

$ cat /proc/sys/vm/drop_caches

1

The free command now confirms that there is a drop in both buffer and cache values:

$ free -m

total used free shared buffers cached

Mem: 2897 299 2597 0 1 74

-/+ buffers/cache: 224 2672

Swap: 4000 0 4000

So, the conclusion that a buffer holds data in RAM that is yet to be flushed to disk seems right.
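The drop_caches step in the experiment used the value 1, but the kernel accepts other values too. As a reference, here's a small sketch that maps each value to what the kernel documentation says it frees. The helper name describe_drop_caches is hypothetical, and the actual write is left as a comment because it needs root and affects the whole system:

```shell
#!/bin/sh
# describe_drop_caches is a hypothetical helper: map a value written to
# /proc/sys/vm/drop_caches to what the kernel frees for that value.
describe_drop_caches() {
    case "$1" in
        1) echo "page cache" ;;
        2) echo "reclaimable slab objects (dentries and inodes)" ;;
        3) echo "page cache and reclaimable slab objects" ;;
        *) echo "unsupported value: $1" >&2; return 1 ;;
    esac
}

mode=1
echo "drop_caches=$mode frees: $(describe_drop_caches "$mode")"

# To apply it as root (dirty pages can't be dropped, so we sync first):
#   sync
#   echo 1 > /proc/sys/vm/drop_caches
```

Dropping caches is non-destructive, but it can slow the system down for a while, since the dropped data has to be read from disk again.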

In this tutorial, we discussed buffers and caches, as reported by the free command. We learned that they are two distinct memory management mechanisms employed by the operating system. Buffers optimize disk I/O operations by temporarily storing data being read from or written to a storage device, while caches improve performance by storing frequently accessed data in the main memory. Understanding the difference between these two concepts helps us assess how our system is actually using its memory.