1. Overview

The volatile keyword in Java can help make code thread-safe, but it doesn’t guarantee thread safety in every case.

In this tutorial, we’ll look at a scenario in which a shared volatile variable can lead to a race condition.

2. What Is a volatile Variable?

Unlike regular variables, volatile variables behave as if every read and write goes directly to the main memory. A thread never works with a stale, cached copy of a volatile variable’s value.

Let’s see how to declare a volatile variable:

```java
static volatile int count = 0;
```

3. Properties of volatile Variables

In this section, we’ll look at some important features of volatile variables.

3.1. Visibility Guarantee

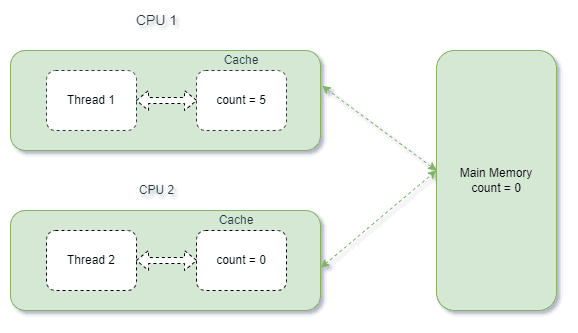

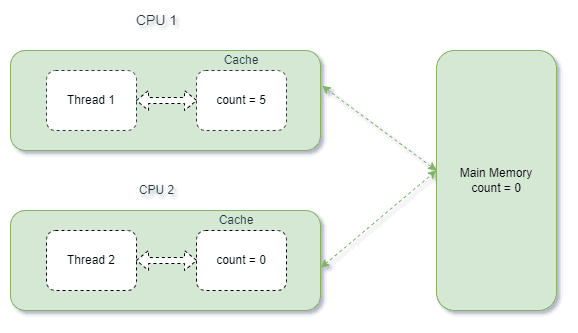

Suppose we have two threads, running on different CPUs, accessing a shared, non-volatile variable. Let’s further suppose that the first thread is writing to a variable while the second thread is reading the same variable.

Each thread copies the value of the variable from the main memory into its respective CPU cache for performance reasons.

In the case of non-volatile variables, the JVM does not guarantee when the value will be written back to the main memory from the cache.

If the updated value from the first thread is not immediately flushed back to the main memory, there’s a possibility that the second thread may end up reading the older value.

The diagram below depicts the above scenario:

Here, the first thread has updated the value of the variable count to 5. But, the flushing back of the updated value to the main memory does not happen instantly. Therefore, the second thread reads the older value. This can lead to wrong results in a multi-threaded environment.

On the other hand, if we declare count as volatile, every write to count becomes visible to the other threads, and each read sees the most recently written value.

This is called the visibility guarantee of the volatile keyword. It helps avoid the data inconsistency issue described above.
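To make the visibility guarantee concrete, here is a minimal sketch, assuming Java 9 or later for `Thread.onSpinWait()`. The class `StopFlagDemo` and the method `runAndStop()` are hypothetical names. A worker thread spins on a volatile flag until the main thread clears it. Without volatile, the JIT compiler may hoist the read of `running` out of the loop, and the worker could spin forever.

```java
// A minimal sketch, assuming Java 9+ for Thread.onSpinWait().
// StopFlagDemo and runAndStop() are hypothetical names used for illustration.
class StopFlagDemo {

    // volatile: the worker sees the main thread's write to this flag
    private static volatile boolean running = true;

    // Starts a spinning worker, clears the flag, and reports whether the worker exited
    static boolean runAndStop() {
        running = true;
        Thread worker = new Thread(() -> {
            while (running) {          // a volatile read on every iteration
                Thread.onSpinWait();   // hint to the runtime that this is a busy-wait loop
            }
        });
        worker.setDaemon(true);        // don't keep the JVM alive if the worker never stops
        worker.start();
        try {
            Thread.sleep(100);         // let the worker spin for a moment
            running = false;           // volatile write, observed by the worker's next read
            worker.join(5_000);        // wait up to five seconds for the worker to exit
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return !worker.isAlive();
    }

    public static void main(String[] args) {
        System.out.println("worker stopped: " + runAndStop()); // prints true
    }
}
```

Marking the worker as a daemon thread is only a safety net for the demo, so that a broken variant (for example, with volatile removed) doesn’t prevent the JVM from exiting.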

3.2. Happens-Before Guarantee

The JVM and the CPU sometimes reorder independent instructions and execute them in parallel to improve performance.

For example, let’s look at two instructions that are independent and can run simultaneously:

```java
a = b + c;
d = d + 1;
```

However, some instructions can’t execute in parallel because a later instruction depends on the result of an earlier one:

```java
a = b + c;
d = a + e;
```

Besides executing independent instructions in parallel, the JVM and the CPU may also reorder them. This can cause incorrect behavior in a multi-threaded application.

Suppose two threads share two variables:

```java
int num = 10;
boolean flag = false;
```

Further, let’s assume that the first thread increments num and then sets flag to true, while the second thread waits until flag becomes true and then reads the value of num.

Therefore, the first thread is expected to execute its instructions in the following order:

```java
num = num + 10;
flag = true;
```

But suppose the JVM or the CPU reorders the instructions as follows:

```java
flag = true;
num = num + 10;
```

In this case, as soon as flag is set to true, the second thread starts executing. Because num hasn’t been updated yet, the second thread may read its old value, 10, instead of 20. This leads to incorrect results.

However, if we declare flag as volatile, this reordering isn’t allowed.

Declaring a variable as volatile prevents such instruction reordering by providing the happens-before guarantee: a write to a volatile variable happens-before every subsequent read of that same variable.

This means that instructions before a write to a volatile variable can’t be reordered to occur after it. Similarly, instructions after a read of a volatile variable can’t be reordered to occur before it. As a result, once the second thread sees flag set to true, it’s also guaranteed to see the updated value of num.
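The num/flag scenario can be written as a runnable sketch. `HappensBeforeDemo` and `runOnce()` are hypothetical names, and `Thread.onSpinWait()` assumes Java 9 or later. Only flag is volatile; num is a plain field that is safely published through the volatile write and read.

```java
// A minimal sketch of the num/flag scenario; HappensBeforeDemo and runOnce() are hypothetical.
class HappensBeforeDemo {

    private static int num = 10;                  // plain (non-volatile) field
    private static volatile boolean flag = false; // volatile guard

    // Runs one writer and one reader and returns the value of num the reader observed
    static int runOnce() {
        num = 10;
        flag = false;
        int[] observed = new int[1];

        Thread reader = new Thread(() -> {
            while (!flag) {
                Thread.onSpinWait(); // wait until the writer sets flag
            }
            // This read of flag saw the writer's volatile write, so the writer's
            // earlier plain write to num is visible here as well.
            observed[0] = num;
        });
        Thread writer = new Thread(() -> {
            num = num + 10; // can't be moved after the volatile write below
            flag = true;    // volatile write publishes the new value of num
        });

        reader.start();
        writer.start();
        try {
            reader.join(); // join() also makes observed[0] visible to this thread
            writer.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return observed[0];
    }

    public static void main(String[] args) {
        System.out.println("reader saw num = " + runOnce()); // prints 20
    }
}
```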

4. When Does the volatile Keyword Provide Thread Safety?

The volatile keyword is useful in two multi-threading scenarios:

- When only one thread writes to the volatile variable and other threads only read it. The reading threads then always see the latest value of the variable.

- When multiple threads write to a shared variable, but each write is atomic on its own, meaning the new value written doesn’t depend on the previous value.
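The second scenario can be sketched as follows, assuming each thread simply stores its own ID without reading the field first. `IndependentWritesDemo` and `lastWriterId` are hypothetical names. Because no thread derives its value from the old one, there’s no read-update-write gap to race on; the last write simply wins.

```java
import java.util.ArrayList;
import java.util.List;

// Sketch of the "independent writes" scenario; all names here are hypothetical.
class IndependentWritesDemo {

    private static volatile int lastWriterId = -1;

    // Starts the given number of writer threads and returns the value left in the field
    static int run(int writers) {
        List<Thread> threads = new ArrayList<>();
        for (int id = 0; id < writers; id++) {
            final int myId = id;
            // A plain store: no thread reads lastWriterId before writing it
            Thread t = new Thread(() -> lastWriterId = myId);
            threads.add(t);
            t.start();
        }
        for (Thread t : threads) {
            try {
                t.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        }
        // Whichever thread wrote last wins; no write is based on a stale read
        return lastWriterId;
    }

    public static void main(String[] args) {
        System.out.println("last writer: " + run(4)); // some ID from 0 to 3
    }
}
```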

5. When Does volatile Not Provide Thread Safety?

The volatile keyword is a lightweight synchronization mechanism.

Unlike synchronized methods or blocks, it doesn’t make other threads wait while one thread is executing a critical section. Therefore, the volatile keyword doesn’t provide thread safety when non-atomic or composite operations are performed on shared variables.

Operations like increment and decrement are composite operations. Internally, they involve three steps: reading the value of the variable, updating it, and then writing the updated value back to memory.
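Writing the three steps out explicitly makes the gap visible. For a static field, javac compiles count++ into separate getstatic, iconst_1, iadd, and putstatic bytecode instructions, so the sketch below is behaviorally equivalent. `IncrementSteps` and `incrementLikeCountPlusPlus()` are hypothetical names.

```java
// Sketch: count++ spelled out as its three separate steps; names are hypothetical.
class IncrementSteps {

    static volatile int count = 0;

    static void incrementLikeCountPlusPlus() {
        int current = count;    // 1. read the current value (a volatile read)
        int next = current + 1; // 2. compute the updated value locally
        count = next;           // 3. write the new value back (a volatile write)
        // Another thread can run between steps 1 and 3; volatile doesn't prevent that.
    }

    public static void main(String[] args) {
        incrementLikeCountPlusPlus();
        incrementLikeCountPlusPlus();
        System.out.println(count); // prints 2 when called from a single thread
    }
}
```

Each individual read and write is still visible to other threads, but volatile makes no promise that nothing happens between them.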

The short time gap in between reading the value and writing the new value back to the memory can create a race condition. Other threads working on the same variable may read and operate on the older value during that time gap.

Moreover, if multiple threads are performing non-atomic operations on the same shared variable, they may overwrite each other’s results.

Thus, in situations where threads need to read the current value of a shared variable to compute its next value, declaring the variable as volatile isn’t enough.

6. Example

Now, let’s use an example to see a case where declaring a variable as volatile isn’t enough to make the code thread-safe.

For this, we’ll declare a shared volatile variable named count and initialize it to zero. We’ll also define a method to increment this variable:

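```java
static volatile int count = 0;

// static so that it can be called from the static main() method below
static void increment() {
    count++; // read, update, write: not atomic, even on a volatile field
}
```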

Next, we’ll create two threads, t1 and t2. Each thread calls the increment() method a thousand times:

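```java
// Inside the same class as count and increment()
public static void main(String[] args) throws InterruptedException {
    Thread t1 = new Thread(new Runnable() {
        @Override
        public void run() {
            for (int index = 0; index < 1000; index++) {
                increment();
            }
        }
    });

    Thread t2 = new Thread(new Runnable() {
        @Override
        public void run() {
            for (int index = 0; index < 1000; index++) {
                increment();
            }
        }
    });

    t1.start();
    t2.start();

    // Wait for both threads to finish before reading the result
    t1.join();
    t2.join();

    System.out.println("value of counter variable: " + count);
}
```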

From the above program, we might expect the final value of count to be 2000. However, the result may differ from one run to the next. Sometimes, it prints the “correct” value (2000), and sometimes it doesn’t.

Let’s look at two different outputs that we got when we ran the sample program:

```
value of counter variable: 2000
value of counter variable: 1652
```

This unpredictable behavior occurs because both threads perform the increment operation on the shared count variable. As mentioned earlier, the increment operation isn’t atomic. It performs three steps: it reads the value, updates it, and then writes the new value of the variable to the main memory. Thus, there’s a high chance that these steps will interleave when t1 and t2 run simultaneously.

Let’s suppose t1 and t2 are running concurrently and t1 performs the increment operation on count. But before t1 writes the updated value back to the main memory, t2 reads the value of count from the main memory. In this case, t2 reads the older value and increments it as well, so both threads write the same value back and one of the two increments is lost. For example, if count is 5, both threads may read 5 and both write 6. As a result, the final value can be lower than the expected 2000.
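In a plain run, this interleaving depends on timing, so it shows up only occasionally. The following sketch forces it on every run by making both threads read count before either one writes. It uses a `java.util.concurrent.CyclicBarrier` as an artificial pause point; the barrier and the names `LostUpdateDemo` and `run()` are assumptions introduced only for the demonstration.

```java
import java.util.concurrent.BrokenBarrierException;
import java.util.concurrent.CyclicBarrier;

// Sketch: force the "both read, then both write" interleaving; names are hypothetical.
class LostUpdateDemo {

    static volatile int count = 0;

    // Performs two concurrent increments and returns the final value of count
    static int run() {
        count = 0;
        CyclicBarrier bothHaveRead = new CyclicBarrier(2);

        Runnable increment = () -> {
            int current = count;      // step 1: read (both threads read 0)
            try {
                bothHaveRead.await(); // wait until the other thread has read as well
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                return;
            } catch (BrokenBarrierException e) {
                return;
            }
            count = current + 1;      // steps 2 and 3: update and write (both write 1)
        };

        Thread t1 = new Thread(increment);
        Thread t2 = new Thread(increment);
        t1.start();
        t2.start();
        try {
            t1.join();
            t2.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return count;
    }

    public static void main(String[] args) {
        System.out.println("count after two increments: " + run()); // prints 1
    }
}
```

Even though count is volatile and each thread sees the other’s writes, one increment is lost because both threads computed their new value from the same stale read.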

7. Conclusion

In this article, we saw that declaring a shared variable as volatile doesn’t always make it thread-safe.

We learned that to provide thread safety and avoid race conditions for non-atomic operations, we can use either synchronized methods or blocks, or atomic variables such as AtomicInteger.
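Here is a minimal sketch of both fixes, reusing the setup of two threads performing 1000 increments each. The class `SafeCounters` and its members are hypothetical names; `AtomicInteger.incrementAndGet()` and static synchronized methods are standard Java.

```java
import java.util.concurrent.atomic.AtomicInteger;

// Sketch: two thread-safe versions of the counter; SafeCounters is a hypothetical name.
class SafeCounters {

    // Option 1: an atomic variable; incrementAndGet() does read-update-write as one atomic step
    static final AtomicInteger atomicCount = new AtomicInteger();

    // Option 2: a plain field guarded by the SafeCounters.class lock
    static int syncCount = 0;

    static synchronized void incrementSync() {
        syncCount++; // only one thread at a time can execute this method
    }

    // Runs the task 1000 times on each of two threads and waits for both to finish
    static void runTwice(Runnable task) {
        Runnable loop = () -> {
            for (int i = 0; i < 1000; i++) {
                task.run();
            }
        };
        Thread t1 = new Thread(loop);
        Thread t2 = new Thread(loop);
        t1.start();
        t2.start();
        try {
            t1.join();
            t2.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
    }

    public static void main(String[] args) {
        runTwice(atomicCount::incrementAndGet);
        runTwice(SafeCounters::incrementSync);
        System.out.println("atomic: " + atomicCount.get()); // prints 2000
        System.out.println("synchronized: " + syncCount);   // prints 2000
    }
}
```

The atomic version performs each increment without locking, while the synchronized version makes the second thread wait for the lock. Either way, no increment is lost.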

The code backing this article is available on GitHub.