1. Introduction

Sometimes, we might want to make parallel send/recv calls on the same socket. Whether that’s possible depends on the process, the socket type, and the protocol we’re using.

In this tutorial, we’ll discuss when parallel calls to send/recv on the same socket are valid and when it makes sense to use them.

2. What Are Send/Recv Calls?

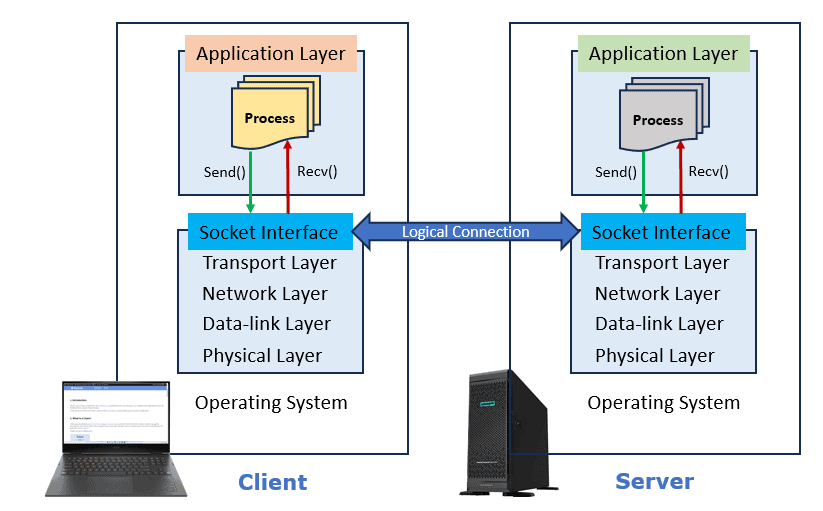

When there’s a connection between a client and a server, we use Internet Protocol (IP) to send and receive data across the network.

Further, sockets act as the inter-process communication (IPC) endpoints on each side of the network, and processes exchange data through them using the send() and recv() API calls.

So, a send/recv call includes:

- The socket and the address of the buffer

- The buffer size

- A flag that indicates how the data should be transmitted
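To make these parameters concrete, here's a minimal sketch in Python. It assumes a locally connected pair of sockets created with socket.socketpair() as a stand-in for a real client and server. Note that Python takes the buffer size for send() from the bytes object itself, while recv() takes the maximum number of bytes to read:

```python
import socket

# A connected pair of sockets stands in for a client and a server
# (an assumption made only to keep the example self-contained).
left, right = socket.socketpair()

message = b"hello"   # the buffer to transmit
flags = 0            # 0 requests the default transmission behaviour

# send(): called on the socket with the buffer and the flags;
# the buffer size is implied by len(message).
sent = left.send(message, flags)

# recv(): called on the socket with the maximum number of bytes
# to read and the flags.
received = right.recv(1024, flags)

left.close()
right.close()
```

Here, sent holds the number of bytes the call accepted for transmission, which may be smaller than the buffer size for large buffers.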

3. How to Make Parallel Calls?

The socket passed to a send/recv call belongs to the process rather than to a specific thread, so every thread in the process can use it. As a result, we can use multiple threads to make parallel calls to send/recv on the same socket.

For example, we can use one thread for the send calls and another for the recv calls. The thread that sends data transmits through the socket independently of the thread that receives data.

The POSIX standard suggests multiple threads can call send/recv on the same socket, provided each thread uses a separate buffer. So, each thread should have its own buffer for storing or reading data.
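The following sketch illustrates one sending thread and one receiving thread sharing a socket, each with its own buffer. The names echo_peer, send_worker, and recv_worker are hypothetical, and the peer that echoes data back is an assumption added so the example can run on one machine:

```python
import socket
import threading

MESSAGES = [f"msg-{i}\n".encode() for i in range(5)]
TOTAL = sum(len(m) for m in MESSAGES)

def echo_peer(peer):
    # Stand-in for the remote end: echo back everything it receives.
    echoed = 0
    while echoed < TOTAL:
        chunk = peer.recv(4096)
        if not chunk:
            break
        peer.sendall(chunk)
        echoed += len(chunk)

def send_worker(sock):
    # The outgoing buffers are used only by this thread.
    for out_buf in MESSAGES:
        sock.sendall(out_buf)

def recv_worker(sock, result):
    # The incoming buffer is used only by this thread.
    in_buf = bytearray()
    while len(in_buf) < TOTAL:
        chunk = sock.recv(4096)
        if not chunk:
            break
        in_buf.extend(chunk)
    result.append(bytes(in_buf))

ours, peer = socket.socketpair()
result = []
threads = [
    threading.Thread(target=echo_peer, args=(peer,)),
    threading.Thread(target=send_worker, args=(ours,)),  # sends on `ours`
    threading.Thread(target=recv_worker, args=(ours, result)),  # receives on `ours`
]
for t in threads:
    t.start()
for t in threads:
    t.join()
ours.close()
peer.close()

echoed_back = result[0]
```

Because the two worker threads never touch each other's buffers, they need no extra synchronization beyond what the socket itself provides.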

Finally, the send/recv call relies on transport layer services to communicate using protocols such as Transmission Control Protocol (TCP) and User Datagram Protocol (UDP).

Let’s discuss how TCP and UDP protocols can support making parallel calls to send/recv on the same socket.

4. Using TCP

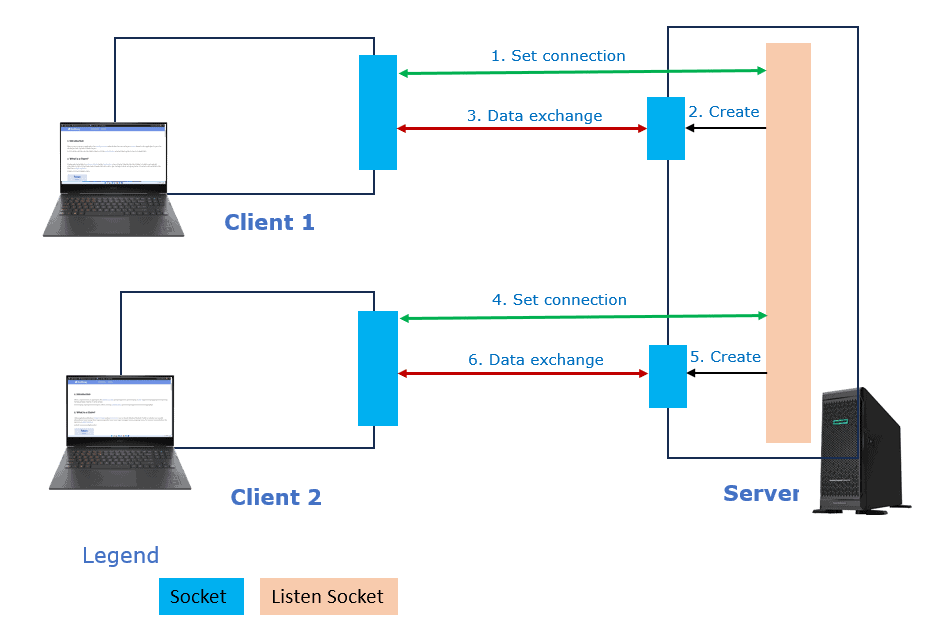

TCP offers a reliable, ordered, and bidirectional communication channel. Further, the server uses two different sockets for communication:

- The listening socket, which waits for incoming connection requests from clients and establishes connections

- The communication socket, which exchanges data with the client

During data exchange, multiple threads from the same process can make parallel calls to send/recv on the same communication socket. When the client process ends, its socket closes, and the server's communication socket for that client reaches end-of-stream and can be closed as well.
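As a rough sketch of this setup, the code below runs a server with a listening socket and a communication socket on the loopback interface, while several client threads send on one shared TCP socket. The names serve and send_worker, the port choice, and the payload sizes are assumptions for illustration. Since TCP is a byte stream, bytes from different threads may interleave, so the server only counts how many bytes arrive from each thread:

```python
import socket
import threading
from collections import Counter

THREADS = 4
CHUNK = 1000  # bytes sent by each thread

# Listening socket: only accepts incoming connection requests.
listener = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
listener.bind(("127.0.0.1", 0))  # port 0 lets the OS pick a free port
listener.listen(1)
port = listener.getsockname()[1]

def serve(counts):
    # Communication socket: returned by accept(), carries the data.
    conn, _addr = listener.accept()
    with conn:
        while True:
            data = conn.recv(4096)
            if not data:  # the client closed the connection
                break
            counts.update(data)  # count occurrences of each byte value

counts = Counter()
server = threading.Thread(target=serve, args=(counts,))
server.start()

client = socket.create_connection(("127.0.0.1", port))

def send_worker(tag):
    payload = bytes([tag]) * CHUNK  # buffer owned by this thread
    client.sendall(payload)         # parallel calls on the same socket

workers = [
    threading.Thread(target=send_worker, args=(ord("A") + i,))
    for i in range(THREADS)
]
for w in workers:
    w.start()
for w in workers:
    w.join()

client.close()   # ends the connection; the server sees end-of-stream
server.join()
listener.close()
```

If the application needs each thread's message to arrive as one contiguous unit, it has to add its own framing and coordinate the sending threads, because TCP itself doesn't preserve message boundaries.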

4.1. Advantages

TCP ensures that all data is delivered in the correct order. It also provides congestion control and flow control, adapting data delivery to network conditions and the receiver’s capacity.

Further, TCP allows the application layer to interact with the network without worrying about packet boundaries, fragmentation, or reassembly.

4.2. Disadvantages

TCP adds overhead and latency for connection establishment, acknowledgments, retransmission, and so on.

As a result, it may not be suitable for real-time applications requiring low latency and high throughput. Also, a TCP connection is tied to fixed addresses and port numbers, which makes it inflexible for routing and addressing.

5. Using UDP

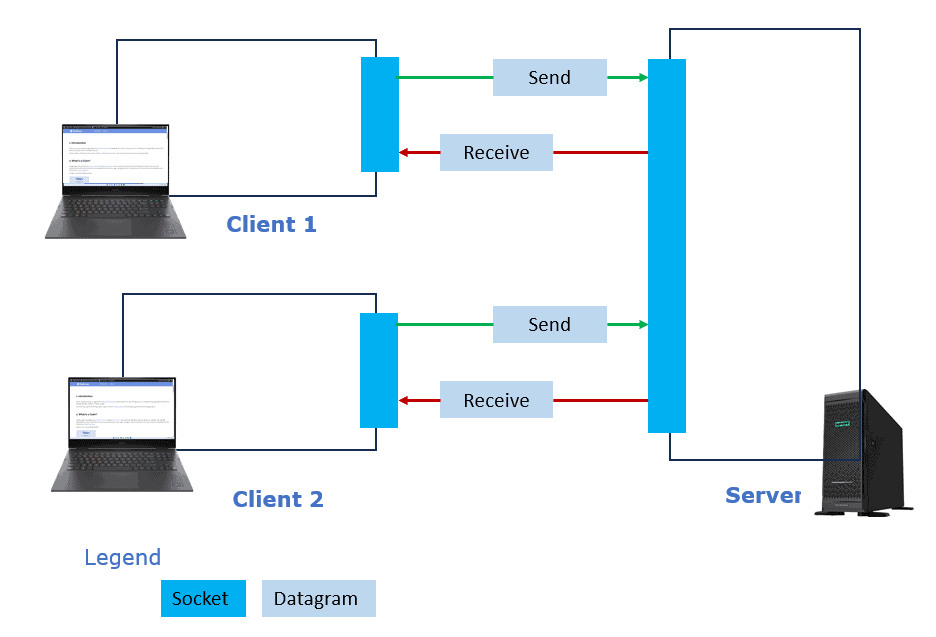

UDP is a connectionless, datagram-based communication protocol. Here, the server uses only one socket to communicate with multiple clients.

The server sets the remote socket address for each datagram it sends, so it can switch between clients without opening a new socket.
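A minimal sketch of a single UDP server socket talking to two clients could look as follows. The loopback address, the two-second timeouts, and the upper-casing reply are assumptions chosen for illustration:

```python
import socket

# One UDP socket on the server side, shared by all clients.
server = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
server.bind(("127.0.0.1", 0))  # port 0 lets the OS pick a free port
server.settimeout(2.0)         # avoid blocking forever if a datagram is lost
server_addr = server.getsockname()

clients = [socket.socket(socket.AF_INET, socket.SOCK_DGRAM) for _ in range(2)]
for i, c in enumerate(clients):
    c.settimeout(2.0)
    c.sendto(f"hello from client {i}".encode(), server_addr)

# recvfrom() reports which client sent each datagram, and sendto()
# addresses the reply to that client over the same server socket.
for _ in clients:
    data, client_addr = server.recvfrom(2048)
    server.sendto(data.upper(), client_addr)

replies = [c.recvfrom(2048)[0] for c in clients]

for c in clients:
    c.close()
server.close()
```

The server never calls accept(): the only per-client state is the address that recvfrom() returns with each datagram.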

Further, parallel calls to send/recv on the same socket are possible. The POSIX standard, however, doesn’t define how a parallel call will behave. So, such calls may produce unexpected results.

Additionally, some systems allow multiple threads to send and receive datagrams on the same socket. However, we can’t assume that every system does.

Therefore, making parallel calls to send/recv on the same socket using UDP isn’t a good practice unless we know how the target system handles datagrams.
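When we're unsure how the target system behaves, one conservative option is to serialize access to the shared UDP socket ourselves. The sketch below does this with a lock. The send_lock guard and the worker layout are hypothetical choices for illustration, not something the POSIX standard prescribes:

```python
import socket
import threading

WORKERS = 3
PER_WORKER = 5

receiver = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
receiver.bind(("127.0.0.1", 0))
receiver.settimeout(2.0)
dest = receiver.getsockname()

shared = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
send_lock = threading.Lock()  # hypothetical guard around the shared socket

def send_worker(worker_id):
    for seq in range(PER_WORKER):
        datagram = f"{worker_id}:{seq}".encode()  # per-thread buffer
        with send_lock:  # only one thread calls sendto() at a time
            shared.sendto(datagram, dest)

workers = [
    threading.Thread(target=send_worker, args=(i,)) for i in range(WORKERS)
]
for w in workers:
    w.start()
for w in workers:
    w.join()

# Collect what arrived; UDP gives no delivery guarantee, so stop on timeout.
received = set()
try:
    while len(received) < WORKERS * PER_WORKER:
        data, _addr = receiver.recvfrom(2048)
        received.add(data.decode())
except socket.timeout:
    pass

shared.close()
receiver.close()
```

The lock trades away the parallelism of the calls for predictable behaviour, so it only makes sense when portability matters more than throughput.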

5.1. Advantages

UDP comes with less overhead because it doesn’t establish a connection before sending data. This makes UDP well suited for real-time applications that need low latency.

UDP can also deliver data to multiple destinations using multicast or broadcast.

5.2. Disadvantages

In UDP, neither the order nor the accuracy of the data exchange is guaranteed. UDP may also overburden the network or the receiver because it has no congestion or flow control, leading to performance issues.

At the application layer, we have to handle concerns such as packet boundaries, error detection, fragmentation, and reassembly ourselves.
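To give a feel for this extra work, here's a small sketch of application-level sequencing and error detection for datagrams. The header layout (a 32-bit sequence number followed by a CRC32 of the payload) and the function names are assumptions made up for this example:

```python
import struct
import zlib

# Hypothetical header: sequence number and CRC32, both unsigned 32-bit,
# in network byte order.
HEADER = struct.Struct("!II")

def pack_datagram(seq, payload):
    """Prefix the payload with its sequence number and checksum."""
    return HEADER.pack(seq, zlib.crc32(payload)) + payload

def unpack_datagram(datagram):
    """Return (seq, payload), or None if the checksum doesn't match."""
    seq, checksum = HEADER.unpack_from(datagram)
    payload = datagram[HEADER.size:]
    if zlib.crc32(payload) != checksum:
        return None
    return seq, payload

def reassemble(datagrams):
    """Drop corrupted datagrams and join the rest in sequence order."""
    parts = {}
    for datagram in datagrams:
        parsed = unpack_datagram(datagram)
        if parsed is not None:
            seq, payload = parsed
            parts[seq] = payload
    return b"".join(parts[seq] for seq in sorted(parts))

chunks = [b"par", b"all", b"el"]
wire = [pack_datagram(i, chunk) for i, chunk in enumerate(chunks)]
out_of_order = [wire[2], wire[0], wire[1]]
message = reassemble(out_of_order)
```

A real protocol would also need to detect missing sequence numbers and decide whether to request retransmission, which this sketch leaves out.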

6. Summary

Let’s summarize the advantages and disadvantages of parallel calls and list scenarios for which they are suitable:

| Protocol | Benefits | Drawbacks |
| --- | --- | --- |
| TCP | Accurate data is delivered in the correct order | Overhead and latency |
| | Adaptable to the network’s and receiver’s needs | Not suitable for low-latency applications |
| | The transport layer manages exchange overheads | Inflexible for routing and addressing |
| UDP | Less overhead and delays | Unpredictable order and accuracy of data |
| | High performance for real-time applications | Uncontrolled data traffic leads to congestion |
| | Multicast or broadcast support | Application must manage transmission overhead |

The choice of whether to use parallel calls depends on the requirements and characteristics of an application. However, the following are some generic suggestions that can assist us in making an informed decision:

- We can use parallel calls if our application demands low latency and high throughput, such as online games or music and video streaming

- A hybrid approach of TCP for control messages and UDP for data messages can be beneficial

7. Conclusion

In this article, we discussed whether parallel calls to send/recv on the same socket are valid.

Parallel calls to send/recv on the same socket are possible with TCP as long as each thread uses its own buffer. However, when using UDP, parallel calls may not work correctly and can lead to unexpected results.