
Last updated: February 28, 2025

Padding is a technique widely used in Deep Learning. As the name suggests, padding adds extra data points, such as zeros, around the original data. It plays an important role in various domains, including image processing with Convolutional Neural Networks (CNNs) and text processing with Recurrent Neural Networks (RNNs) or Transformers.

In Python, we can easily implement padding with libraries such as TensorFlow or PyTorch.

In this tutorial, we’ll demonstrate how to apply different types of padding in image and text processing in Python.

In image processing, we use convolutional filters to extract useful features from an image. However, a convolution without padding produces an output that is smaller than its input, and this shrinkage accumulates in networks with many convolutional layers. Padding lets us preserve the original input size or keep the output at a desired size.
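To make the shrinkage concrete: for an n x n input, a k x k kernel, p rows/columns of padding on each side, and stride s, the output side length is floor((n + 2p - k) / s) + 1. The helper below is a minimal sketch of this standard formula, assuming square inputs and kernels without dilation; `conv_output_size` is a hypothetical name, not a library function:

```python
def conv_output_size(n: int, k: int, p: int = 0, s: int = 1) -> int:
    """Output side length of a convolution: floor((n + 2p - k) / s) + 1.

    Hypothetical helper; assumes square input/kernel and no dilation.
    """
    return (n + 2 * p - k) // s + 1


# One 3x3 convolution on a 28x28 image
print(conv_output_size(28, k=3, p=0))  # 26: without padding the output shrinks
print(conv_output_size(28, k=3, p=1))  # 28: one ring of zeros keeps the size

# Stacking five unpadded 3x3 convolutions
size = 28
for _ in range(5):
    size = conv_output_size(size, k=3)
print(size)  # 18
```

Five unpadded layers already reduce a 28x28 image to 18x18, whereas p = 1 keeps every 3x3 layer (stride 1) at 28x28.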

In addition, without padding, the pixels on the edges of the image are covered by fewer filter positions than the central pixels. As a result, the network captures less information from the borders of the image.
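A quick way to see this edge effect is to count how many 3x3 windows (stride 1) cover each pixel. The sketch below uses NumPy, which the rest of the article doesn't rely on, and a hypothetical helper `coverage_counts` applied to a toy 6x6 image:

```python
import numpy as np


def coverage_counts(n: int, k: int = 3, pad: int = 0) -> np.ndarray:
    """Count how many k x k windows (stride 1) touch each pixel of an n x n image.

    Hypothetical helper for illustration only.
    """
    size = n + 2 * pad                        # side length after padding
    counts = np.zeros((size, size), dtype=int)
    for r in range(size - k + 1):             # every valid window position
        for c in range(size - k + 1):
            counts[r:r + k, c:c + k] += 1
    # Crop the padded border so only the original pixels remain
    return counts[pad:pad + n, pad:pad + n]


no_pad = coverage_counts(6, k=3, pad=0)
with_pad = coverage_counts(6, k=3, pad=1)
print(no_pad[0, 0], no_pad[2, 2])      # corner vs. center without padding
print(with_pad[0, 0], with_pad[2, 2])  # corner vs. center with one ring of zeros
```

Without padding, a corner pixel falls under a single window while a central pixel falls under nine; with one ring of zeros, the corner pixel is covered four times.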

Therefore, padding can help improve the performance of the network. There are several approaches to image padding.

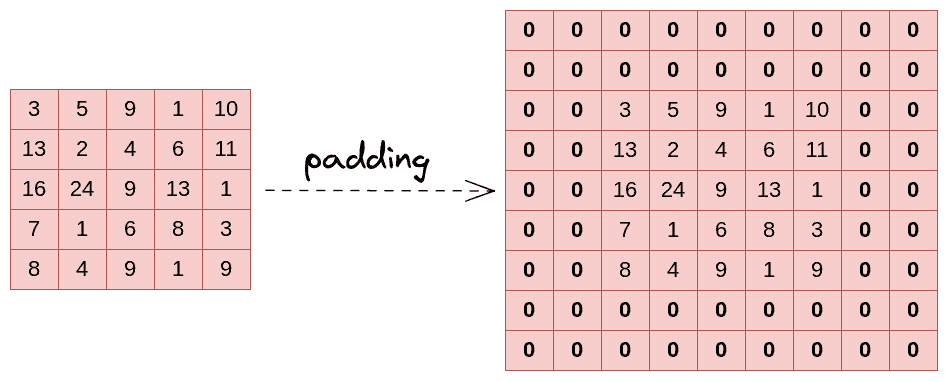

Zero Padding, also known as ‘Same’ Padding, adds layers of zero around the input image, as shown in the figure below:
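Outside any deep learning framework, NumPy's `np.pad` with `mode='constant'` performs exactly this kind of zero padding. A minimal sketch on a toy 3x3 array:

```python
import numpy as np

image = np.arange(1, 10).reshape(3, 3)  # a toy 3x3 "image" with values 1..9

# Add one ring of zeros on every side (top, bottom, left, right)
padded = np.pad(image, pad_width=1, mode='constant', constant_values=0)
print(padded.shape)  # (5, 5)
print(padded)        # original values in the center, zeros on the border
```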

In TensorFlow, we can enable zero padding directly in the convolutional layer through the padding argument of tf.keras.layers.Conv2D:

```python
import tensorflow as tf

model = tf.keras.models.Sequential([
    # Convolutional Layer: 'same' zero padding keeps the 28x28 spatial size
    tf.keras.layers.Conv2D(32, (3, 3), padding='same', activation='relu', input_shape=(28, 28, 1)),
    # Pooling Layer: 28x28 -> 14x14
    tf.keras.layers.MaxPooling2D((2, 2)),
    # Flatten the output to feed into a dense layer
    tf.keras.layers.Flatten(),
    # Dense Layer
    tf.keras.layers.Dense(128, activation='relu'),
    # Output Layer
    tf.keras.layers.Dense(10, activation='softmax')  # Assuming 10 classes for classification
])

# Compile the model
model.compile(optimizer='adam', loss='sparse_categorical_crossentropy', metrics=['accuracy'])
```

The code defines a CNN for image classification. It starts with a Conv2D layer that uses 'same' padding, meaning the output of this layer has the same spatial dimensions as the input (28x28) before max pooling halves them to 14x14.
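To check that 'same' padding really is zero padding for this layer, the sketch below compares a 'same' convolution with an explicit tf.keras.layers.ZeroPadding2D layer followed by a 'valid' convolution that shares the same weights. It assumes a 3x3 kernel and stride 1, where 'same' adds exactly one ring of zeros:

```python
import numpy as np
import tensorflow as tf

tf.random.set_seed(0)
x = tf.random.normal((1, 28, 28, 1))  # (batch, height, width, channels)

# Option 1: let Conv2D pad implicitly
same_conv = tf.keras.layers.Conv2D(32, (3, 3), padding='same')
y_same = same_conv(x)

# Option 2: pad explicitly with one ring of zeros, then convolve without padding
padded = tf.keras.layers.ZeroPadding2D(padding=1)(x)  # -> (1, 30, 30, 1)
valid_conv = tf.keras.layers.Conv2D(32, (3, 3), padding='valid')
valid_conv(padded)                                    # first call creates the weights
valid_conv.set_weights(same_conv.get_weights())       # reuse the same kernel and bias
y_explicit = valid_conv(padded)

print(y_same.shape, y_explicit.shape)  # both (1, 28, 28, 32)
print(np.allclose(y_same.numpy(), y_explicit.numpy(), atol=1e-5))
```

With even kernel sizes or strides greater than 1, TensorFlow may pad unevenly (the extra zero goes to the bottom/right), so the equivalence with a symmetric ZeroPadding2D(1) holds only for this configuration.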

We can also use PyTorch to build the same network as follows:

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class SimpleCNN(nn.Module):
    def __init__(self):
        super().__init__()
        # Convolutional Layer: padding=1 keeps the 28x28 spatial size
        self.conv = nn.Conv2d(1, 32, kernel_size=3, padding=1)
        # Pooling Layer: 28x28 -> 14x14
        self.pool = nn.MaxPool2d(2, 2)
        # Dense layer: 32 channels x 14 x 14 (assuming 28x28 input images)
        self.dense = nn.Linear(32 * 14 * 14, 128)
        # Output Layer
        self.fc = nn.Linear(128, 10)  # Assuming 10 classes for classification

    def forward(self, x):
        # Apply convolutional layer and pooling
        x = self.pool(F.relu(self.conv(x)))
        # Flatten the output to feed into a dense layer
        x = torch.flatten(x, 1)
        # Dense layer
        x = F.relu(self.dense(x))
        # Output layer
        x = self.fc(x)
        return x


# Instantiate and print the model
model = SimpleCNN()
print(model)
```

In PyTorch, we specify the padding size with the padding argument of nn.Conv2d. In the code above, padding=1 adds one row/column of zeros on each side of the input, which keeps a 28x28 input at 28x28 for a 3x3 kernel with stride 1.
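PyTorch offers several equivalent ways to express this. The sketch below, assuming PyTorch 1.9 or later for the string option padding='same', checks that padding=1, padding='same', and manual zero padding with torch.nn.functional.pad followed by an unpadded convolution all give the same 28x28 output when the layers share weights:

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

torch.manual_seed(0)
x = torch.randn(1, 1, 28, 28)  # (batch, channels, height, width)

conv_pad1 = nn.Conv2d(1, 32, kernel_size=3, padding=1)
conv_same = nn.Conv2d(1, 32, kernel_size=3, padding='same')
conv_same.load_state_dict(conv_pad1.state_dict())   # share weights and bias

# Manual zero padding: (left, right, top, bottom) = one pixel on each side
conv_valid = nn.Conv2d(1, 32, kernel_size=3, padding=0)
conv_valid.load_state_dict(conv_pad1.state_dict())
x_padded = F.pad(x, (1, 1, 1, 1), mode='constant', value=0.0)

with torch.no_grad():
    y1 = conv_pad1(x)
    y2 = conv_same(x)
    y3 = conv_valid(x_padded)

print(x_padded.shape)  # torch.Size([1, 1, 30, 30])
print(y1.shape)        # torch.Size([1, 32, 28, 28])
print(torch.allclose(y1, y2), torch.allclose(y1, y3, atol=1e-6))
```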

Valid padding means that no padding is added to the input data, so each 3x3 convolution shrinks a 28x28 input to 26x26. In TensorFlow, we switch to this mode by setting padding='valid' (which is also the default) in the convolutional layer:

```python
# Convolutional Layer
tf.keras.layers.Conv2D(32, (3, 3), padding='valid', activation='relu', input_shape=(28, 28, 1))
```
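The difference between the two modes adds up across layers. Here is a small sketch, with a hypothetical helper `stacked_output_shape`, that passes a dummy 28x28 image through three 3x3 Conv2D layers (8 filters each, an arbitrary choice) with each padding mode:

```python
import tensorflow as tf


def stacked_output_shape(padding: str, num_layers: int = 3) -> tuple:
    """Hypothetical helper: output shape after stacked 3x3 Conv2D layers."""
    x = tf.zeros((1, 28, 28, 1))  # (batch, height, width, channels)
    for _ in range(num_layers):
        x = tf.keras.layers.Conv2D(8, (3, 3), padding=padding)(x)
    return tuple(x.shape)


print(stacked_output_shape('valid'))  # (1, 22, 22, 8): loses 2 pixels per layer
print(stacked_output_shape('same'))   # (1, 28, 28, 8): size preserved
```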

For PyTorch, we can set the layer of padding to 0 so that no padding is added to the data:

# Convolutional Layer

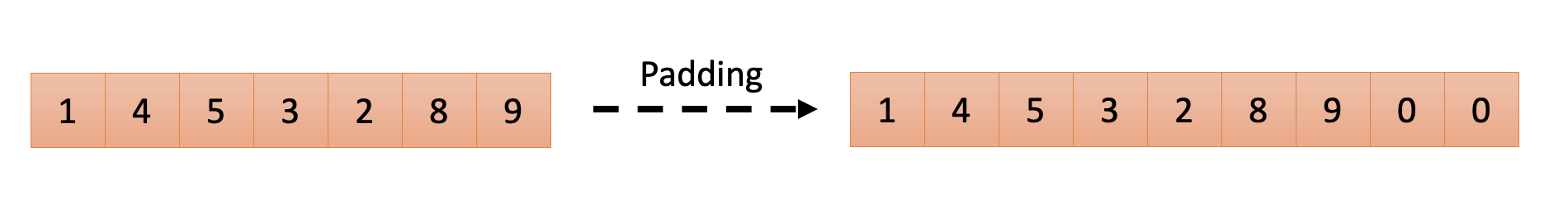

self.conv = nn.Conv2d(1, 32, kernel_size=3, padding=1)Padding is also widely used in Natural Language Processing (NLP), especially when using models such as RNNs, Long Short-Term Memory Networks (LSTMs), or Transformers. While we process sentences and documents, they always come with varying lengths. Hence, padding (special tokens or zeros) is usually added to the beginning or the end of the text to ensure all the input sequences for the neural network have the same length.

The figure below gives an example of padding to a sequence. The number in the figure indicates the numerical representation of the tokens/words:

In TensorFlow, we can add padding with the pad_sequences function:

```python
from tensorflow.keras.preprocessing.sequence import pad_sequences

# Example list of tokenized sentences (each sentence is a list of integers representing tokens)
sequences = [
    [1, 2, 3, 4],
    [1, 2],
    [1, 2, 3, 4, 5, 6]
]

# Pad sequences to a length of 10, adding zeros at the end
padded_sequences = pad_sequences(sequences, padding='post', maxlen=10, value=0)
```

With padding='post', the padding values (0 in this case) are added at the end of each sequence. To pad at the beginning instead, we set padding='pre', which is also the default. The maxlen argument sets the length of the sequences after padding, and sequences longer than maxlen are truncated. If maxlen=None (the default), the sequences are padded to the length of the longest sequence in the list.
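Pre-padding and truncation are easiest to understand by looking at the results. The sketch below calls the same function through tf.keras.utils.pad_sequences, the path that recent TensorFlow versions also expose (the exact module path varies between Keras versions). It pads at the beginning, then sets maxlen shorter than the longest sequence to show the truncating argument, which defaults to 'pre':

```python
import tensorflow as tf

sequences = [[1, 2, 3, 4], [1, 2], [1, 2, 3, 4, 5, 6]]

# 'pre' padding: zeros are added at the beginning of each sequence
pre = tf.keras.utils.pad_sequences(sequences, padding='pre', maxlen=6, value=0)

# maxlen shorter than a sequence: truncating='pre' (default) drops tokens
# from the beginning, truncating='post' drops them from the end
cut_pre = tf.keras.utils.pad_sequences(sequences, maxlen=3)
cut_post = tf.keras.utils.pad_sequences(sequences, maxlen=3, truncating='post')

print(pre.tolist())       # [[0, 0, 1, 2, 3, 4], [0, 0, 0, 0, 1, 2], [1, 2, 3, 4, 5, 6]]
print(cut_pre.tolist())   # [[2, 3, 4], [0, 1, 2], [4, 5, 6]]
print(cut_post.tolist())  # [[1, 2, 3], [0, 1, 2], [1, 2, 3]]
```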

We can leverage the same padding technique in PyTorch as well:

```python
import torch
from torch.nn.utils.rnn import pad_sequence

# Example list of tokenized sentences (each sentence is a tensor of integers representing tokens)
sequences = [
    torch.tensor([1, 2, 3, 4]),
    torch.tensor([1, 2]),
    torch.tensor([1, 2, 3, 4, 5, 6])
]

# Pad sequences with zeros at the end; batch_first=True returns shape (batch_size, max_len)
padded_sequences = pad_sequence(sequences, batch_first=True, padding_value=0)
```

By default, pad_sequence adds the padding at the right/end of each sequence, and without batch_first=True it returns a tensor of shape (max_len, batch_size). Recent PyTorch releases also accept padding_side='left' to pad at the beginning. Note that pad_sequence has no maxlen argument: the padded sequences always have the length of the longest sequence in the list.
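Because pad_sequence has no maxlen argument, and older releases only pad at the end, the sketch below shows some common workarounds: flipping each sequence to obtain pre-padding, torch.nn.functional.pad to reach a fixed length (10 here, mirroring the Keras example), and a boolean mask that separates real tokens from padding. The mask assumes that 0 never appears as a real token ID:

```python
import torch
import torch.nn.functional as F
from torch.nn.utils.rnn import pad_sequence

sequences = [torch.tensor([1, 2, 3, 4]), torch.tensor([1, 2]), torch.tensor([1, 2, 3, 4, 5, 6])]

# Post-padding (the default side), batch dimension first
post = pad_sequence(sequences, batch_first=True, padding_value=0)

# Pre-padding without newer arguments: reverse each sequence,
# pad at the end, then reverse every padded row back
pre = pad_sequence([s.flip(0) for s in sequences], batch_first=True, padding_value=0).flip(1)

# Fixed length, similar to maxlen in Keras: F.pad appends
# (0 before, max_len - len(s) after) zeros along the last dimension
max_len = 10
fixed = torch.stack([F.pad(s, (0, max_len - len(s)), value=0) for s in sequences])

# Boolean mask: True for real tokens, False for padding (assumes 0 is never a real token)
mask = post != 0

print(post.tolist())  # [[1, 2, 3, 4, 0, 0], [1, 2, 0, 0, 0, 0], [1, 2, 3, 4, 5, 6]]
print(pre.tolist())   # [[0, 0, 1, 2, 3, 4], [0, 0, 0, 0, 1, 2], [1, 2, 3, 4, 5, 6]]
print(fixed.shape)    # torch.Size([3, 10])
```

Models such as Transformers typically take a mask like this so that attention ignores the padded positions.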

In this tutorial, we walked through the concept of padding in image and text processing.

Because padding is so widely used in deep learning, libraries such as PyTorch and TensorFlow provide built-in functions for adding it to our data. We gave examples of applying zero ('same') and valid padding to images and pre/post padding to text.