
Last updated: March 18, 2024

Part-of-speech (POS) tagging is a core task in natural language processing (NLP), and Hidden Markov Models (HMMs) are a classic approach to it.

In this tutorial, we’ll show how to use HMMs for POS tagging. Starting with the basics of POS tagging, we’ll move on to the mechanics of HMMs and explore their advantages, challenges, and practical applications in tagging.

In this section, we’ll define POS tagging and provide an illustrative example.

POS tagging refers to marking each word in a text with its corresponding POS.

In doing so, we identify each word as a noun, verb, adjective, adverb, or another category.

We use this information to analyze the grammatical structure of sentences, which aids in understanding the meaning and context of words in various scenarios.

As a result, efficient and accurate POS tagging can improve solutions to many other NLP tasks, such as text analysis, machine translation, and information retrieval.

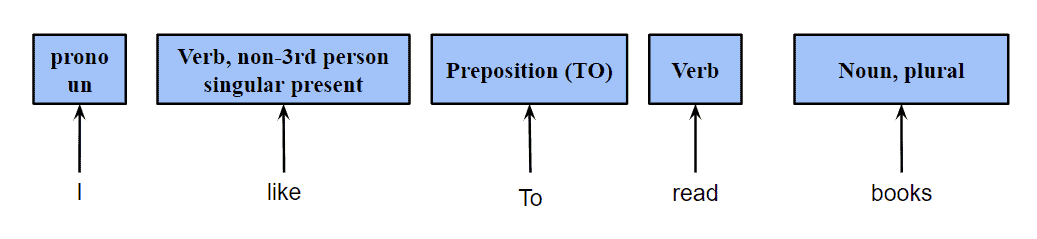

For the input sentence “I like to read books,” a potential output of POS tagging would be as follows:

I (pronoun), like (verb), to (particle), read (verb), books (noun)

We assigned each word the category it belongs to. However, POS models usually have more nuanced tags. For example, IBM’s model uses VB for the base form of a verb, VBZ for the present tense, third person singular, and VBP for all the other forms of the present tense.

So, the exact POS tags differ depending on the specific POS tagging system or model.

Historically, HMMs have driven significant advances in fields such as speech recognition. An HMM consists of hidden states, observations, transition probabilities, and emission probabilities, together with an initial probability distribution over the states.

To apply an HMM to a sequence of observations, we need to determine the likelihood of the input sequence and find the most probable sequence of (hidden) states that can generate it.
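Computing the likelihood of an observation sequence means summing over every hidden-state sequence that could have generated it, which the forward algorithm does efficiently with dynamic programming. Below is a minimal Python sketch, assuming a hypothetical two-tag model (PRP and VBP) whose probabilities are invented for illustration rather than estimated from a corpus:

```python
# A minimal forward-algorithm sketch. The tags and all probabilities below
# are hypothetical toy values, not estimates from a real corpus.
START = {"PRP": 0.6, "VBP": 0.4}  # initial tag probabilities
TRANS = {  # P(next tag | current tag)
    "PRP": {"PRP": 0.1, "VBP": 0.9},
    "VBP": {"PRP": 0.7, "VBP": 0.3},
}
EMIT = {  # P(word | tag); missing entries mean probability 0
    "PRP": {"i": 0.5, "they": 0.5},
    "VBP": {"like": 0.6, "read": 0.4},
}


def sequence_likelihood(words):
    """Return P(words), summed over every possible tag sequence."""
    # alpha[t]: probability of the words seen so far, with the last word tagged t
    alpha = {t: START[t] * EMIT[t].get(words[0], 0.0) for t in START}
    for word in words[1:]:
        alpha = {
            t: sum(alpha[prev] * TRANS[prev][t] for prev in alpha)
            * EMIT[t].get(word, 0.0)
            for t in START
        }
    return sum(alpha.values())


print(sequence_likelihood(["i", "like"]))
```

With these toy values, only the path PRP → VBP can produce “i like,” so the likelihood is 0.6 × 0.5 × 0.9 × 0.6, or about 0.162. Finding the single most probable state sequence, rather than summing over all of them, is the job of the Viterbi algorithm.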

For POS tagging, we treat POS tags as the hidden states and individual words as the observations. By doing so, we aim to predict the grammatical role of each word based on its context within a sentence. Transition probabilities represent the likelihood of one POS tag following another, while emission probabilities give the likelihood that a given POS tag emits a particular word.
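Putting these pieces together, a first-order HMM assigns a sentence of words $w_1, \dots, w_n$ with tags $t_1, \dots, t_n$ the joint probability below, where $t_0$ is a start-of-sentence marker (a common notational convention we assume here, so $P(t_1 \mid t_0)$ plays the role of the initial probability). Tagging then amounts to finding the tag sequence that maximizes this product:

$$
\begin{aligned}
P(w_1, \dots, w_n, t_1, \dots, t_n) &= \prod_{i=1}^{n} P(t_i \mid t_{i-1}) \, P(w_i \mid t_i) \\
\hat{t}_1, \dots, \hat{t}_n &= \arg\max_{t_1, \dots, t_n} \prod_{i=1}^{n} P(t_i \mid t_{i-1}) \, P(w_i \mid t_i)
\end{aligned}
$$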

Now, here’s where it gets interesting. We estimate both the transition and emission probabilities directly from a tagged corpus.

This means we analyze large volumes of annotated text to find how likely a particular tag is to follow another and how likely a word is to be tagged with a specific POS.
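A minimal Python sketch of this estimation, assuming a tiny hypothetical tagged corpus given as (word, tag) pairs. The estimates are relative frequencies (maximum-likelihood estimates); the `<s>` start marker is our own convention, and for simplicity we don’t model an end-of-sentence transition:

```python
from collections import Counter

# A tiny hypothetical tagged corpus: each sentence is a list of (word, tag) pairs.
corpus = [
    [("i", "PRP"), ("like", "VBP"), ("books", "NNS")],
    [("they", "PRP"), ("read", "VBP"), ("books", "NNS")],
]


def estimate(corpus):
    """Maximum-likelihood estimates of transition and emission probabilities."""
    trans_counts = Counter()  # (previous tag, tag) -> count
    prev_counts = Counter()   # previous tag -> number of outgoing transitions
    emit_counts = Counter()   # (tag, word) -> count
    tag_counts = Counter()    # tag -> number of occurrences
    for sentence in corpus:
        prev = "<s>"  # start-of-sentence marker
        for word, tag in sentence:
            trans_counts[(prev, tag)] += 1
            prev_counts[prev] += 1
            emit_counts[(tag, word)] += 1
            tag_counts[tag] += 1
            prev = tag
    # P(tag | prev) = count(prev, tag) / count(prev); P(word | tag) = count(tag, word) / count(tag)
    transition = {pair: n / prev_counts[pair[0]] for pair, n in trans_counts.items()}
    emission = {pair: n / tag_counts[pair[0]] for pair, n in emit_counts.items()}
    return transition, emission


transition, emission = estimate(corpus)
print(transition[("PRP", "VBP")])  # 1.0
print(emission[("PRP", "i")])      # 0.5
```

Because training is just counting, it takes a single pass over the corpus. Pairs that never occur get no entry at all, which is exactly the sparsity problem smoothing addresses.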

The Viterbi algorithm uses dynamic programming to efficiently find the most probable sequence of tags for any sequence of words.

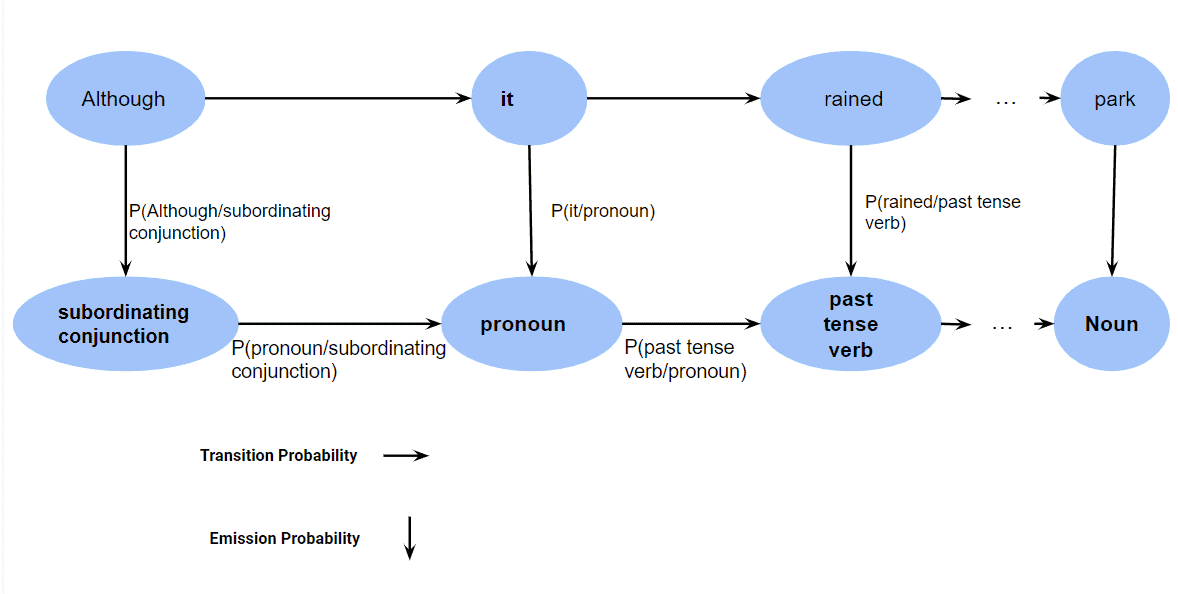
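Here is a minimal Python sketch of Viterbi decoding, assuming a hypothetical three-tag model whose probabilities are invented for illustration. It works in log space so that long sentences don’t underflow, keeps the best-scoring path into each tag at every step, and then follows back-pointers from the best final tag:

```python
import math

# Hypothetical toy model: tags and probabilities are invented for illustration.
TAGS = ["PRP", "VBP", "NN"]
START = {"PRP": 0.5, "VBP": 0.2, "NN": 0.3}
TRANS = {
    "PRP": {"PRP": 0.05, "VBP": 0.85, "NN": 0.10},
    "VBP": {"PRP": 0.30, "VBP": 0.10, "NN": 0.60},
    "NN": {"PRP": 0.20, "VBP": 0.50, "NN": 0.30},
}
EMIT = {
    "PRP": {"i": 0.6, "they": 0.4},
    "VBP": {"like": 0.5, "read": 0.3, "books": 0.2},
    "NN": {"books": 0.7, "like": 0.2, "read": 0.1},
}


def log(p):
    """Logarithm that maps zero probability to -inf instead of raising an error."""
    return math.log(p) if p > 0 else float("-inf")


def viterbi(words):
    """Return the most probable tag sequence for `words` and its probability."""
    # best[t]: log-probability of the best path over the words so far ending in tag t
    best = {t: log(START[t]) + log(EMIT[t].get(words[0], 0.0)) for t in TAGS}
    backpointers = []
    for word in words[1:]:
        pointers, new_best = {}, {}
        for t in TAGS:
            # Best predecessor tag for a path that moves into t.
            prev = max(TAGS, key=lambda p: best[p] + log(TRANS[p][t]))
            pointers[t] = prev
            new_best[t] = best[prev] + log(TRANS[prev][t]) + log(EMIT[t].get(word, 0.0))
        backpointers.append(pointers)
        best = new_best
    # Follow the back-pointers from the best final tag.
    last = max(TAGS, key=lambda t: best[t])
    path = [last]
    for pointers in reversed(backpointers):
        path.append(pointers[path[-1]])
    path.reverse()
    return path, math.exp(best[last])


print(viterbi(["i", "like", "books"]))
```

For “i like books,” the sketch returns the tags PRP, VBP, NN with probability 0.5 × 0.6 × 0.85 × 0.5 × 0.6 × 0.7, or about 0.05355. Its running time grows linearly with the sentence length and quadratically with the number of tags, rather than exponentially as it would if we scored every tag sequence separately.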

We’ll use the sentence “Although it rained, we went to the park.” as an example.

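Beginning with “Although,” our HMM might first predict that this word is a subordinating conjunction (IN) based on its emission probability and the probability that a sentence starts with IN. Subsequently, for “it,” the model evaluates the transition probability from a subordinating conjunction to a pronoun (PRP) and couples it with the emission probability of “it” being a PRP. This calculation yields a specific probability value for this sequence. Moving to the word “rained,” the model considers the transition from PRP to a past-tense verb (VBD) along with the emission probability of “rained” being a VBD:

$$
P(\text{IN} \mid \langle s \rangle) \, P(\text{Although} \mid \text{IN}) \, P(\text{PRP} \mid \text{IN}) \, P(\text{it} \mid \text{PRP}) \, P(\text{VBD} \mid \text{PRP}) \, P(\text{rained} \mid \text{VBD})
$$

Here, $\langle s \rangle$ marks the start of the sentence. The model obtains a cumulative likelihood for the sequence by multiplying these probabilities.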
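To make the running example concrete, here is a small Python sketch that scores the partial tag path IN, PRP, VBD for “Although it rained” (Penn Treebank tags). All probability values are hypothetical, and `<s>` is an assumed start-of-sentence marker. We add log-probabilities instead of multiplying raw probabilities, which gives the same result but avoids numeric underflow on long sentences:

```python
import math

# Hypothetical probabilities for the first three words of
# "Although it rained, we went to the park." (illustrative values only).
transition = {("<s>", "IN"): 0.1, ("IN", "PRP"): 0.3, ("PRP", "VBD"): 0.4}
emission = {("IN", "although"): 0.01, ("PRP", "it"): 0.2, ("VBD", "rained"): 0.001}


def path_log_prob(words, tags):
    """Sum of log transition and log emission probabilities along one tag path."""
    total, prev = 0.0, "<s>"
    for word, tag in zip(words, tags):
        total += math.log(transition[(prev, tag)]) + math.log(emission[(tag, word)])
        prev = tag
    return total


words = ["although", "it", "rained"]
tags = ["IN", "PRP", "VBD"]
print(math.exp(path_log_prob(words, tags)))  # about 2.4e-08
```

Even after three words, the product is already around 2.4 × 10⁻⁸, which shows why practical taggers work with logarithms.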

To begin with, one prominent challenge in language is ambiguity. For instance, the word “lead” can be a verb (“to guide”) or a noun (“a type of metal”). Here’s where HMMs come into play: they handle words that can have multiple POS tags by weighing each tag’s emission probability, learned from training data, against the transition probabilities from the surrounding tags.
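The following Python sketch illustrates this with hypothetical probabilities for “lead”: it compares P(tag | previous tag) × P(“lead” | tag) for the verb tag VB and the noun tag NN, after either the infinitival marker TO or a determiner DT. It’s a simplified one-step decision; a full tagger would score the whole sentence with the Viterbi algorithm:

```python
# Hypothetical probabilities (not from a real corpus) showing how context
# changes the preferred tag for the ambiguous word "lead".
emission = {("VB", "lead"): 0.002, ("NN", "lead"): 0.001}
transition = {
    ("TO", "VB"): 0.6, ("TO", "NN"): 0.01,  # after infinitival "to"
    ("DT", "VB"): 0.01, ("DT", "NN"): 0.5,  # after a determiner such as "the"
}


def best_tag(prev_tag, word, candidates=("VB", "NN")):
    """Pick the candidate tag maximizing P(tag | prev_tag) * P(word | tag)."""
    return max(candidates, key=lambda t: transition[(prev_tag, t)] * emission[(t, word)])


print(best_tag("TO", "lead"))  # VB, as in "to lead the team"
print(best_tag("DT", "lead"))  # NN, as in "the lead in the pipes"
```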

Moving on, let’s talk about scalability. Let’s say we have vast amounts of text data, from novels to newspapers. HMMs can be trained on such large corpora efficiently, since supervised training boils down to counting tag transitions and word-tag pairs. Moreover, if we stumble upon previously unseen words in new texts, an HMM can still guess their tags from the surrounding tags, relying on the transition patterns it learned during training.
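A minimal sketch of that idea, assuming a simple (hypothetical) treatment of unseen words: every word outside the training vocabulary gets the same small emission probability under each candidate tag, so the transition from the previous tag decides. The tags, probabilities, and the `UNKNOWN_EMISSION` constant are invented for illustration; real taggers often also use cues such as suffixes or capitalization:

```python
# Hypothetical transition and emission probabilities (illustrative values only).
transition = {("DT", "NN"): 0.5, ("DT", "JJ"): 0.3, ("DT", "VB"): 0.01}
emission = {("NN", "park"): 0.01, ("JJ", "green"): 0.02}
vocabulary = {word for _, word in emission}  # words seen during training
UNKNOWN_EMISSION = 1e-6  # assumed emission probability for any unseen word


def emission_prob(tag, word):
    """P(word | tag), falling back to a constant for out-of-vocabulary words."""
    if word not in vocabulary:
        return UNKNOWN_EMISSION
    return emission.get((tag, word), 0.0)


def guess_tag(prev_tag, word, candidates=("NN", "JJ", "VB")):
    """Pick the tag maximizing P(tag | prev_tag) * P(word | tag)."""
    return max(candidates, key=lambda t: transition[(prev_tag, t)] * emission_prob(t, word))


print(guess_tag("DT", "flibbertigibbet"))  # NN: after a determiner, a noun is most likely
print(guess_tag("DT", "green"))            # JJ: a known word keeps its learned tag
```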

The first problem is data sparsity.

What if we come across the word “flibbertigibbet” in a text? Given its rarity, it’s unlikely that this word appears in most training corpora, so the HMM never sees its word-tag pairs during training. Their estimated emission probability is then zero, which in turn makes the probability of every tag sequence for the sentence zero. To avoid this, we use techniques like smoothing, which allocates a small probability to unseen pairs, ensuring that our model doesn’t falter on rare words.
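Add-one (Laplace) smoothing is one of the simplest such techniques. Here’s a minimal Python sketch for emission probabilities, assuming hypothetical counts and reserving one extra vocabulary slot for all unseen words (a common choice, not the only one):

```python
from collections import Counter

# Hypothetical counts of (tag, word) pairs from a tiny training corpus.
emit_counts = Counter({("NN", "park"): 3, ("NN", "book"): 1})
tag_counts = Counter({"NN": 4})
vocabulary = {"park", "book"}


def smoothed_emission(tag, word, k=1.0):
    """Add-k estimate of P(word | tag); k=1 gives Laplace smoothing.

    One extra vocabulary slot is reserved for unseen words.
    """
    v = len(vocabulary) + 1
    # Counter returns 0 for pairs never seen in training.
    return (emit_counts[(tag, word)] + k) / (tag_counts[tag] + k * v)


print(smoothed_emission("NN", "park"))             # 4/7, about 0.571
print(smoothed_emission("NN", "flibbertigibbet"))  # 1/7, about 0.143, no longer zero
```

The smoothed values for “park,” “book,” and the unknown-word slot still sum to 1, so we get a proper distribution; the price is that some probability mass is taken away from words we did observe.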

Next, HMMs rely on the Markov assumption. In a first-order HMM, each tag depends only on the tag immediately before it, and each word depends only on its own tag. In reality, however, context can span entire sentences or even chapters. So, despite the success of HMMs in many applications, this assumption can make them miss broader contextual clues.
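In symbols, a first-order HMM tagger makes two simplifying assumptions: each tag depends only on the previous tag, and each word depends only on its own tag:

$$
\begin{aligned}
P(t_i \mid t_1, \dots, t_{i-1}) &\approx P(t_i \mid t_{i-1}) \\
P(w_i \mid w_1, \dots, w_{i-1}, t_1, \dots, t_i) &\approx P(w_i \mid t_i)
\end{aligned}
$$

Second-order (trigram) HMM taggers condition on the two previous tags instead, which widens the context window only slightly.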

While HMMs offer a robust approach to POS tagging, newer methods like neural networks or decision trees have emerged in the NLP realm.

Neural networks, especially deep learning models, can capture intricate patterns and nuances, often outperforming HMMs. Decision trees, on the other hand, provide a transparent and interpretable structure, making them a good choice for applications where transparency is paramount.

However, these advanced methods come with trade-offs. Neural networks, for instance, demand vast amounts of data and computational power, while decision trees can become overly complex and less accurate with too many branches.

HMM-based POS tagging has practical applications in areas such as speech recognition and machine translation.

In this article, we discussed how HMMs are used for POS tagging. We also looked at practical applications, highlighting their use in speech recognition and machine translation.

HMMs excel at POS tagging because they handle sequential data well. However, rare and unseen words can hurt their performance. Also, the Markov assumption that each tag depends only on the previous one doesn’t account for relationships between distant words.