Last updated: February 28, 2025

In this tutorial, we’ll explore the environmental impact of transformers, with a focus on their carbon footprint.

We’ll first discuss the basics of what contributes to these models’ carbon footprint. Then, we’ll review the methods for calculating it and strategies for reducing it.

A transformer is a neural network architecture proposed by Vaswani et al. in the paper “Attention Is All You Need.” Unlike RNNs, transformers rely on self-attention rather than recurrence.

The self-attention mechanism allows the model to weigh the importance of different input tokens when making predictions, enabling it to capture long-range dependencies without sequential processing. The original architecture consists of stacked encoder and decoder layers, each combining multi-head self-attention with feed-forward neural networks.
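To make the weighting idea concrete, here is a minimal sketch of single-head scaled dot-product self-attention in plain Python. It's a simplification: we assume the token embeddings are used directly as queries, keys, and values, whereas a real transformer first applies learned linear projections. The function names and the toy input are ours, chosen for illustration only:

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(queries, keys, values):
    """Single-head scaled dot-product attention on lists of vectors."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Similarity of this token's query with every token's key, scaled by sqrt(d_k)
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        # The output is the attention-weighted average of the value vectors
        out = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

# Toy input: three tokens with 2-dimensional embeddings (illustrative values)
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(tokens, tokens, tokens)
```

Every token attends to every other token in one step, which is why no sequential processing is needed. It also means the work grows quadratically with the sequence length, which is one reason these models are computationally expensive.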

For a detailed explanation of the Transformer model’s functions and architecture, please refer to our articles on the ChatGPT model and transformer text embeddings.

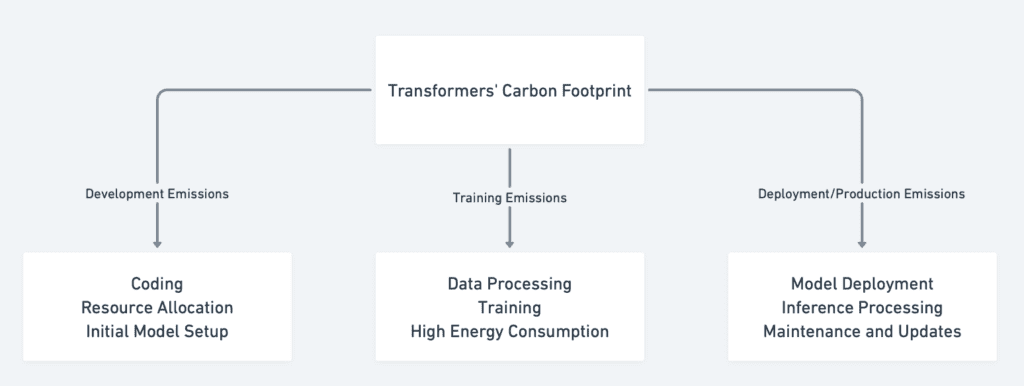

The carbon footprint of an entity is the total amount of greenhouse gases (GHGs) it emits, directly or indirectly. These gases, primarily carbon dioxide (CO2), contribute to global warming and climate change and affect our urban environments. A transformer’s carbon footprint includes emissions from development, training, and deployment.

To understand the origin of transformers’ carbon footprint, we should remember that developing models such as BERT and the GPT family requires substantial computational resources. The initial phase of development involves designing the model architecture, running numerous smaller-scale experiments to test the designs, and optimizing hyperparameters. Each of these activities involves multiple training cycles, which consume a significant amount of electricity. For example, training a large GPT-style model can take days to weeks, or longer, on many high-performance GPUs or TPUs, resulting in considerable energy consumption.

The energy required for these computational tasks translates directly into GHG emissions, especially if the electricity comes from non-renewable sources. A study by Strubell, Ganesh, and McCallum estimated that training a large transformer with neural architecture search can emit roughly as much CO2 as five cars over their entire lifespans, while a single BERT training run on GPUs emits roughly as much as a trans-American flight.

Additionally, the infrastructure supporting training and deployment, such as cooling systems and power supply in data centers, contributes significantly to the overall carbon footprint. According to OpenAI’s “AI and Compute” analysis, the amount of compute used in the largest AI training runs has been doubling approximately every 3.4 months since 2012, leading to increased energy demands.
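To get a feel for what a 3.4-month doubling period means, we can convert it into a yearly growth factor. This is simple arithmetic on the figure quoted above; the function name is ours:

```python
DOUBLING_PERIOD_MONTHS = 3.4  # doubling period quoted in the text

def compute_growth_factor(months, doubling_period=DOUBLING_PERIOD_MONTHS):
    """How many times compute grows over `months` at a fixed doubling period."""
    return 2 ** (months / doubling_period)

one_year = compute_growth_factor(12)
print(f"Growth over one year: {one_year:.1f}x")  # prints "Growth over one year: 11.5x"
```

Energy use doesn’t necessarily grow at the same rate, since newer hardware typically performs more computation per watt, but the trend shows why training emissions have become a concern.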

To calculate the carbon footprint of a transformer, we should consider the following:

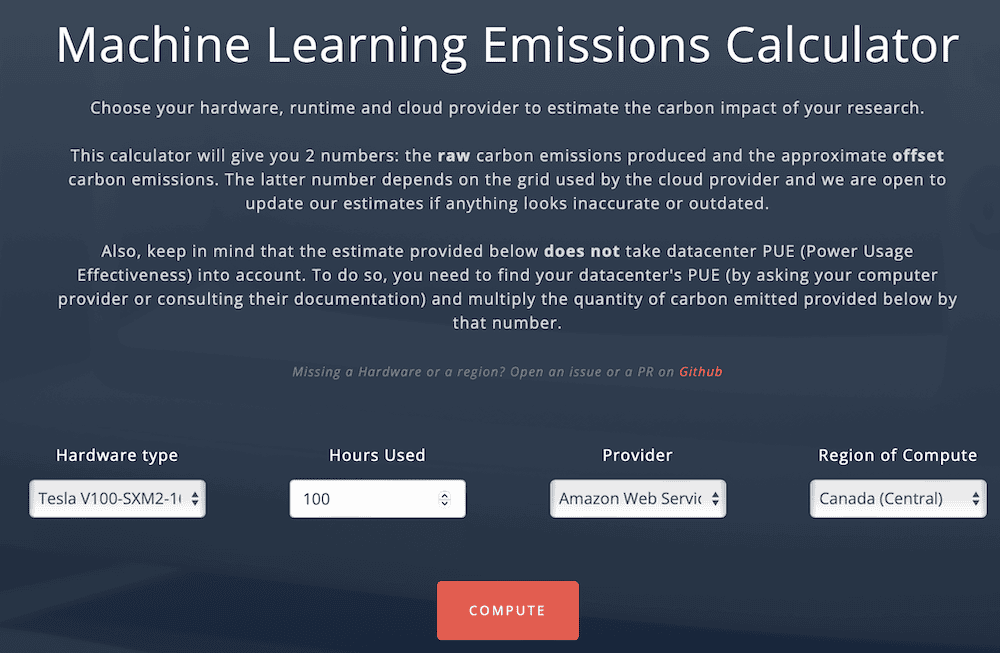

We can use freely available online calculators and select the time and resources we used to train or test our models to get some estimates:

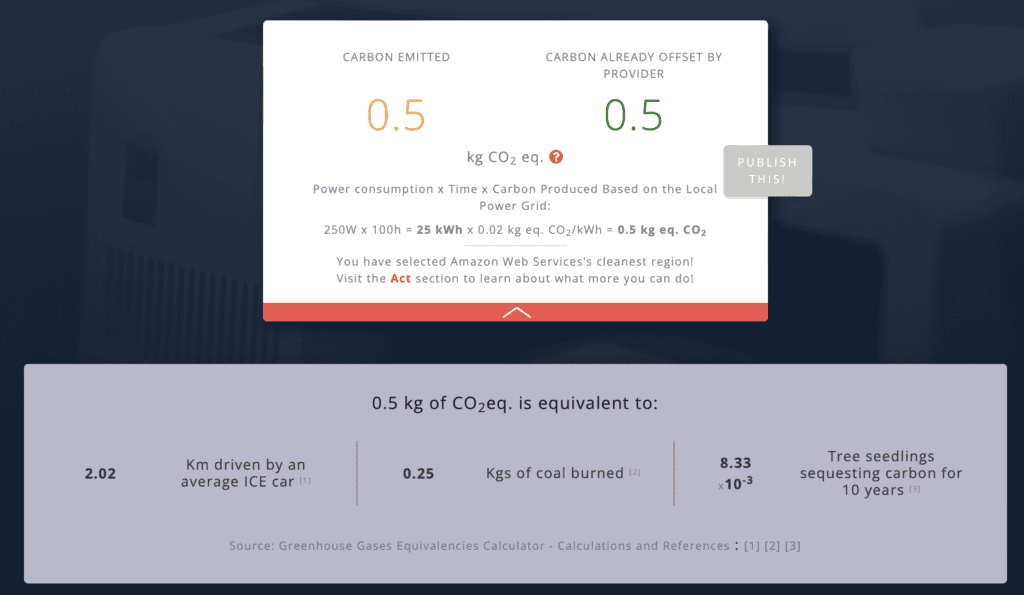

Let’s see how much CO2 we would emit by training our AI model for 100 hours on a Tesla V100 GPU in the AWS Canada (Central) region.

The calculator estimates about 0.5 kg of CO2 and also indicates that the provider offsets it, because we selected a region that runs largely on green energy.
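We can approximate this kind of estimate by hand: multiply the hardware’s power draw by the training time to get the energy, then multiply by the carbon intensity of the local grid. The sketch below makes that explicit. The 250 W power draw and the 0.02 kg CO2eq/kWh carbon intensity are our assumptions, chosen because they’re plausible for a V100 and a hydro-dominated grid and reproduce the 0.5 kg figure; they aren’t values confirmed from any particular calculator. The optional `pue` parameter (power usage effectiveness) is a hypothetical knob for data center overhead such as cooling:

```python
def estimate_emissions_kg(power_kw, hours, carbon_intensity_kg_per_kwh, pue=1.0):
    """Estimate emissions in kg CO2eq for a training run.

    power_kw: average power draw of the hardware, in kilowatts
    hours: duration of the run
    carbon_intensity_kg_per_kwh: kg CO2eq emitted per kWh of grid electricity
    pue: data center overhead multiplier (1.0 means no overhead)
    """
    energy_kwh = power_kw * hours * pue
    return energy_kwh * carbon_intensity_kg_per_kwh

# Assumed inputs (not taken from the calculator): 250 W GPU, 0.02 kg CO2eq/kWh grid
emissions = estimate_emissions_kg(
    power_kw=0.25,
    hours=100,
    carbon_intensity_kg_per_kwh=0.02,
)
print(f"Estimated emissions: {emissions:.2f} kg CO2eq")
```

Under these assumptions, the run uses 25 kWh of energy. The same run on a grid with ten times the carbon intensity would emit ten times as much, which is why the choice of region matters so much.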

Let’s discuss a few strategies for minimizing the impact on the environment.

First, we can use techniques such as Bayesian optimization to explore hyperparameter spaces efficiently, reducing the number of training runs required. These methods help find a good configuration more quickly, thereby saving computational resources and reducing energy consumption. We can also start with simpler models to validate the direction and scale up only once the “alpha” version bugs have been dealt with and the performance is adequate.

We can also implement early stopping techniques during training to prevent unnecessary computations. If the model’s performance stops improving, the training process is halted, saving time and energy. This is especially effective in reducing the number of epochs needed to achieve the desired accuracy.
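Here’s a minimal sketch of the early stopping logic, independent of any framework. Instead of training a real model, we feed it a list of simulated validation losses, one per epoch. The function name, the `patience` and `min_delta` parameters, and the loss values are ours, chosen for illustration:

```python
def run_with_early_stopping(val_losses, patience=3, min_delta=0.0):
    """Stop once the validation loss hasn't improved for `patience` epochs in a row.

    Returns the number of epochs actually run and the best loss seen.
    """
    best_loss = float("inf")
    epochs_without_improvement = 0
    epochs_run = 0
    for loss in val_losses:
        epochs_run += 1
        if loss < best_loss - min_delta:
            # Improvement: remember it and reset the counter
            best_loss = loss
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                break  # skip the remaining epochs
    return epochs_run, best_loss

# Simulated validation loss per epoch (made-up values)
simulated = [0.90, 0.70, 0.60, 0.58, 0.59, 0.58, 0.60, 0.61, 0.57, 0.56]
epochs_run, best_loss = run_with_early_stopping(simulated, patience=3)
print(epochs_run, best_loss)  # prints "7 0.58"
```

In this example, training stops after 7 of the 10 planned epochs. The made-up data also shows the trade-off: the loss would have improved slightly in epochs 9 and 10, so a low `patience` saves energy at the risk of stopping too soon.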

Finally, we can leverage pre-trained models and fine-tune them for specific tasks, drastically cutting down the computational resources required compared to training models from scratch. This approach, known as transfer learning, reduces the training time and lowers the carbon footprint associated with model development.

Another important measure is using energy-efficient hardware such as GPUs and TPUs to reduce power consumption. These accelerators are designed for the highly parallel computations of machine learning and complete such workloads with less energy than general-purpose CPUs. For example, Google’s TPUs were built specifically for neural network workloads, originally with TensorFlow in mind, and can handle large-scale AI tasks with greater efficiency.

We can also select regions in which data centers are powered by renewable energy sources to reduce our carbon footprint. Companies like Amazon, Google, and Microsoft have pledged to power their data centers with 100% renewable energy, setting an example for sustainable AI development.
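As a rough illustration of how much the region can matter, the sketch below ranks candidate regions by the estimated emissions of the same job. The region names and carbon intensities are placeholders we made up, not real measurements; in practice, we’d look up the current figures for the regions our provider offers:

```python
# Placeholder carbon intensities in kg CO2eq per kWh (NOT real measurements)
HYPOTHETICAL_REGIONS = {
    "region-coal": 0.70,
    "region-hydro": 0.02,
    "region-mixed": 0.30,
}

def rank_regions(energy_kwh, intensities):
    """Return (region, estimated kg CO2eq) pairs, lowest emissions first."""
    estimates = {region: energy_kwh * ci for region, ci in intensities.items()}
    return sorted(estimates.items(), key=lambda item: item[1])

# A hypothetical training job that consumes 25 kWh
ranking = rank_regions(25, HYPOTHETICAL_REGIONS)
greenest_region, greenest_emissions = ranking[0]
for region, kg in ranking:
    print(f"{region}: {kg:.2f} kg CO2eq")
```

With these placeholder values, the same job emits 35 times more CO2 in the most carbon-intensive region than in the cleanest one.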

Finally, we can deploy models closer to the data source (edge computing) to reduce latency and energy consumption. This approach minimizes the need for extensive data transfer and central processing, making it more energy-efficient.

In this article, we explored the carbon footprint of transformer models, a crucial topic given the increasing computational demands of modern AI research.

The development, training, and deployment stages are the key contributors to transformers’ carbon footprint. To reduce it, we should optimize model development, validate ideas on simpler and smaller models before scaling up, and use energy-efficient hardware in regions powered by renewable energy.