1. Introduction

In this tutorial, we’re going to learn about the cost function in logistic regression, and how we can utilize gradient descent to compute the minimum cost.

2. Logistic Regression

We use logistic regression to solve classification problems where the outcome is a discrete variable. Usually, we use it to solve binary classification problems. As the name suggests, binary classification problems have two possible outputs.

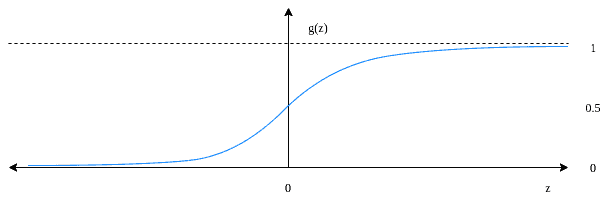

We utilize the sigmoid function (or logistic function) to map input values from a wide range into a limited interval. Mathematically, the sigmoid function is:

$$\sigma(z) = \frac{1}{1 + e^{-z}}$$

This formula represents the probability of observing the output $y = 1$ of a Bernoulli random variable. This variable is either $1$ or $0$ ($y \in \{0, 1\}$).

It squeezes any real number to the open interval $(0, 1)$. Because its output can be read as a probability, it’s better suited for classification than the unbounded output of linear regression. Moreover, it’s less sensitive to outliers.

Applying the sigmoid function to $0$ gives $0.5$. The output approaches $1$ as the input approaches $+\infty$. Conversely, the sigmoid approaches $0$ as the input approaches $-\infty$.
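To make these limiting values concrete, here is a minimal sketch of the sigmoid in plain Python. The function name `sigmoid` and the sample inputs are our own choices for illustration, and the two-branch form is just one common way to avoid overflow for large negative inputs.

```python
import math

def sigmoid(z: float) -> float:
    """Logistic function 1 / (1 + e^(-z)), written to avoid overflow for large |z|."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    # For negative z, use the equivalent form e^z / (1 + e^z) so exp() never overflows.
    ez = math.exp(z)
    return ez / (1.0 + ez)

# Sample inputs chosen for illustration: far negative, near zero, far positive.
for z in (-20.0, -2.0, 0.0, 2.0, 20.0):
    print(f"sigmoid({z:+.1f}) = {sigmoid(z):.6f}")
```

The printed values stay strictly between 0 and 1, equal 0.5 at zero, and move towards 0 or 1 as the input moves far from zero in either direction.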

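More formally, we define the logistic regression model for binary classification problems. We choose the hypothesis function to be the sigmoid function applied to a linear combination of the inputs:

$$h_\theta(x) = \frac{1}{1 + e^{-\theta^T x}}$$

Here, $\theta$ denotes the parameter vector. For a model containing $n$ features, we have $\theta = [\theta_0, \theta_1, \ldots, \theta_n]$ containing $n + 1$ parameters, where $\theta_0$ is the intercept and the input is extended with $x_0 = 1$. The hypothesis function gives the estimated probability of the actual output being equal to $1$. In other words:

$$P(y = 1 \mid \theta, x) = h_\theta(x)$$

and

$$P(y = 0 \mid \theta, x) = 1 - h_\theta(x)$$

More compactly, this is equivalent to:

$$P(y \mid \theta, x) = h_\theta(x)^{y} \left(1 - h_\theta(x)\right)^{1 - y}$$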
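As a quick check of the compact form, the sketch below evaluates the hypothesis and the probability of each label for one input. The helper names (`hypothesis`, `likelihood`), the parameter values, and the feature values are all made up for illustration. We also assume the input vector already starts with a constant $1$ so that the first parameter acts as the intercept.

```python
import math

def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)

def hypothesis(theta, x):
    """h_theta(x) = sigmoid(theta^T x); x must already include the entry x_0 = 1."""
    z = sum(t * xi for t, xi in zip(theta, x))
    return sigmoid(z)

def likelihood(theta, x, y):
    """P(y | theta, x) = h^y * (1 - h)^(1 - y) for y in {0, 1}."""
    h = hypothesis(theta, x)
    return h ** y * (1.0 - h) ** (1 - y)

theta = [-1.0, 2.0, 0.5]   # hypothetical parameters: theta_0 (intercept), theta_1, theta_2
x = [1.0, 0.8, -0.4]       # x_0 = 1 followed by two made-up feature values

p1 = likelihood(theta, x, 1)   # same value as hypothesis(theta, x)
p0 = likelihood(theta, x, 0)   # same value as 1 - hypothesis(theta, x)
print(p1, p0, p1 + p0)
```

Setting $y = 1$ or $y = 0$ in the compact formula switches one of the two factors off, which is why the two probabilities always add up to $1$.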

3. Cost Function

The cost function summarizes how well the model is behaving. In other words, we use the cost function to measure how close the model’s predictions are to the actual outputs.

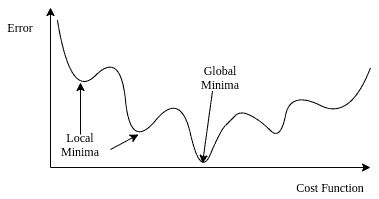

In linear regression, we use mean squared error (MSE) as the cost function. But in logistic regression, taking the mean of the squared differences between actual and predicted outcomes as the cost function might give a wavy, non-convex function of the parameters, containing many local optima.

In this case, gradient descent isn’t guaranteed to find the optimal solution, since it can get stuck in a local optimum. Instead, we use a logarithmic function to represent the cost of logistic regression. This cost is convex in the model parameters, so any minimum it has is a global one, which makes it a good fit for the gradient descent algorithm.
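The difference between the two costs can be illustrated numerically. The sketch below is a toy setup of our own: a single training sample with $x = 1$ and $y = 1$ and a single parameter. It tests the midpoint inequality that every convex function must satisfy. One violating pair of points is enough to show that a function isn’t convex, while passing the test for one pair is only consistent with convexity and doesn’t prove it.

```python
import math

def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)

# Toy setup (an assumption for illustration): one sample with x = 1, y = 1,
# so the prediction is simply sigmoid(theta).
def squared_error(theta):
    return (sigmoid(theta) - 1.0) ** 2

def log_loss(theta):
    # -log(sigmoid(theta)) rewritten as log(1 + e^(-theta))
    return math.log1p(math.exp(-theta))

def violates_midpoint_convexity(f, a, b):
    """A convex f must satisfy f((a + b) / 2) <= (f(a) + f(b)) / 2."""
    return f((a + b) / 2.0) > (f(a) + f(b)) / 2.0

print(violates_midpoint_convexity(squared_error, -10.0, 0.0))  # True
print(violates_midpoint_convexity(log_loss, -10.0, 0.0))       # False
```

For the squared error, the curve flattens out where the prediction is badly wrong, which is exactly the kind of region where gradient descent makes little progress.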

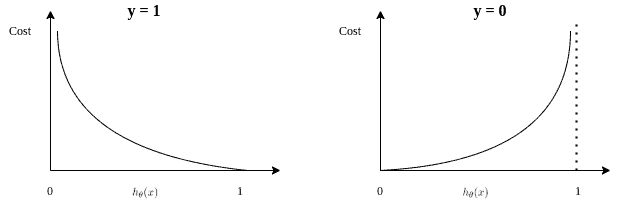

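When dealing with a binary classification problem, the logarithmic cost of error depends on the value of $y$. We can define the cost for two cases separately:

$$\text{cost}(h_\theta(x), y) = \begin{cases} -\log(h_\theta(x)) & \text{if } y = 1 \\ -\log(1 - h_\theta(x)) & \text{if } y = 0 \end{cases}$$

This definition penalizes confident wrong predictions heavily. When the actual outcome is $y = 1$, the cost is $0$ for $h_\theta(x) = 1$ and grows without bound as $h_\theta(x)$ approaches $0$. Similarly, if $y = 0$, the cost is $0$ for $h_\theta(x) = 0$ and grows without bound as $h_\theta(x)$ approaches $1$.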
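The two cases can be sketched directly in code. The function name `sample_cost` and the probabilities used below are our own illustrative choices. The sketch assumes the predicted probability lies strictly between 0 and 1, since the logarithm isn’t defined at 0.

```python
import math

def sample_cost(h, y):
    """Cost of predicting probability h (0 < h < 1) when the true label is y."""
    if y == 1:
        return -math.log(h)
    return -math.log(1.0 - h)

# Made-up predictions: confidently low, undecided, confidently high.
for h in (0.01, 0.5, 0.99):
    print(f"h = {h:.2f}: cost if y=1 -> {sample_cost(h, 1):.4f}, "
          f"cost if y=0 -> {sample_cost(h, 0):.4f}")
```

A confident prediction that matches the label costs almost nothing, an undecided prediction of 0.5 costs the same for both labels, and a confident prediction that contradicts the label is penalized the most.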

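As the output can either be $0$ or $1$, we can simplify the equation to be:

$$\text{cost}(h_\theta(x), y) = -y \log(h_\theta(x)) - (1 - y) \log(1 - h_\theta(x))$$

For $m$ observations, we can calculate the cost as:

$$J(\theta) = -\frac{1}{m} \sum_{i=1}^{m} \left[ y^{(i)} \log(h_\theta(x^{(i)})) + (1 - y^{(i)}) \log(1 - h_\theta(x^{(i)})) \right]$$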
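The averaged cost over all observations can be computed as in the following sketch. The dataset is made up for illustration (one feature plus a leading constant $1$ for the intercept), and the small clipping constant `eps` is our own addition to keep the logarithm finite. It isn’t part of the formula itself.

```python
import math

def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)

def hypothesis(theta, x):
    """h_theta(x) = sigmoid(theta^T x); x includes the constant entry x_0 = 1."""
    return sigmoid(sum(t * xi for t, xi in zip(theta, x)))

def cost(theta, X, y, eps=1e-12):
    """Average logarithmic cost over all m observations."""
    m = len(X)
    total = 0.0
    for x_i, y_i in zip(X, y):
        h = hypothesis(theta, x_i)
        h = min(max(h, eps), 1.0 - eps)  # clip so log() never receives 0
        total += y_i * math.log(h) + (1 - y_i) * math.log(1.0 - h)
    return -total / m

# Hypothetical data: each row is [x_0 = 1, one feature value].
X = [[1.0, 0.5], [1.0, 1.5], [1.0, 3.0], [1.0, 4.0]]
y = [0, 0, 1, 1]

print(cost([0.0, 0.0], X, y))    # log(2) ≈ 0.6931, since every prediction is 0.5
print(cost([-4.5, 2.0], X, y))   # lower cost: these parameters separate the labels
```

With all parameters at zero, every prediction is 0.5, so the cost equals $\log 2$ regardless of the labels. This makes a handy sanity check for an implementation.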

4. Minimizing the Cost with Gradient Descent

Gradient descent is an iterative optimization algorithm, which finds a minimum of a differentiable function. Starting from some initial parameter values, we repeatedly move them a small step in the direction of the negative gradient until the function’s output stops decreasing.

We can apply this method to the cost function of logistic regression. This way, we can find an optimal solution minimizing the cost $J(\theta)$ over the model parameters $\theta$:

$$\min_{\theta} J(\theta)$$

As already explained, we’re using the sigmoid function as the hypothesis function in logistic regression.

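Assume we have a total of $n$ features. In this case, we have $n + 1$ parameters for the $\theta$ vector. To minimize our cost function, we need to run the gradient descent on each parameter $\theta_j$:

$$\theta_j \leftarrow \theta_j - \alpha \frac{\partial}{\partial \theta_j} J(\theta)$$

Here, $\alpha$ is the learning rate, which controls the size of each step.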

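Furthermore, we need to update each parameter simultaneously for each iteration. In other words, we compute all the partial derivatives from the current values first, and only then loop through the parameters $\theta_0$, $\theta_1$, …, $\theta_n$ in vector $\theta = [\theta_0, \theta_1, \ldots, \theta_n]$ to update them.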

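To complete the algorithm, we need the value of $\frac{\partial}{\partial \theta_j} J(\theta)$, which is:

$$\frac{\partial}{\partial \theta_j} J(\theta) = \frac{1}{m} \sum_{i=1}^{m} \left( h_\theta(x^{(i)}) - y^{(i)} \right) x_j^{(i)}$$

Plugging this into the gradient descent function leads to the update rule for learning rate $\alpha$:

$$\theta_j \leftarrow \theta_j - \frac{\alpha}{m} \sum_{i=1}^{m} \left( h_\theta(x^{(i)}) - y^{(i)} \right) x_j^{(i)}$$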
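One way to gain confidence in the derivative is to compare it with a finite-difference approximation of the cost. The sketch below does this on a made-up dataset and then applies a single simultaneous update. The helper names, the data, the starting parameters, and the learning rate are all hypothetical choices for illustration.

```python
import math

def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)

def hypothesis(theta, x):
    return sigmoid(sum(t * xi for t, xi in zip(theta, x)))

def cost(theta, X, y):
    """J(theta) without clipping; fine here because predictions stay away from 0 and 1."""
    total = 0.0
    for x_i, y_i in zip(X, y):
        h = hypothesis(theta, x_i)
        total += y_i * math.log(h) + (1 - y_i) * math.log(1.0 - h)
    return -total / len(X)

def gradient(theta, X, y):
    """Analytic partial derivatives: (1/m) * sum((h - y) * x_j) for each j."""
    m = len(X)
    grad = [0.0] * len(theta)
    for x_i, y_i in zip(X, y):
        error = hypothesis(theta, x_i) - y_i
        for j, x_ij in enumerate(x_i):
            grad[j] += error * x_ij / m
    return grad

def numerical_gradient(theta, X, y, step=1e-6):
    """Central finite differences of the cost, used only as a cross-check."""
    grad = []
    for j in range(len(theta)):
        plus, minus = list(theta), list(theta)
        plus[j] += step
        minus[j] -= step
        grad.append((cost(plus, X, y) - cost(minus, X, y)) / (2.0 * step))
    return grad

# Hypothetical data: each row is [x_0 = 1, one feature value].
X = [[1.0, 0.5], [1.0, 1.5], [1.0, 3.0], [1.0, 4.0]]
y = [0, 0, 1, 1]
theta = [0.1, -0.2]   # arbitrary starting point
alpha = 0.1           # arbitrary learning rate

analytic = gradient(theta, X, y)
numeric = numerical_gradient(theta, X, y)
print(analytic)
print(numeric)

# One simultaneous update: every theta_j uses the gradient computed at the old theta.
theta_next = [t - alpha * g for t, g in zip(theta, analytic)]
print(cost(theta, X, y), "->", cost(theta_next, X, y))
```

The two gradients should agree to several decimal places, and the cost after the update should be lower than before for a sufficiently small learning rate.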

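Surprisingly, the update rule has the same form as the one derived by using the sum of the squared errors in linear regression. The only difference is the hypothesis: here, $h_\theta(x)$ is the sigmoid of $\theta^T x$ rather than $\theta^T x$ itself. As a result, we can reuse the same gradient descent formula for logistic regression by swapping in the sigmoid hypothesis.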

By iterating over the training samples until convergence, we reach the optimal parameters leading to minimum cost.
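Putting the pieces together, the whole procedure can be sketched as a short loop. This is a minimal illustration under our own assumptions rather than a tuned implementation: the dataset is invented, the learning rate, iteration cap, and tolerance are arbitrary, and we treat a negligible change in cost as convergence.

```python
import math

def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)

def hypothesis(theta, x):
    return sigmoid(sum(t * xi for t, xi in zip(theta, x)))

def cost(theta, X, y, eps=1e-12):
    total = 0.0
    for x_i, y_i in zip(X, y):
        h = min(max(hypothesis(theta, x_i), eps), 1.0 - eps)  # clip to keep log() finite
        total += y_i * math.log(h) + (1 - y_i) * math.log(1.0 - h)
    return -total / len(X)

def gradient(theta, X, y):
    m = len(X)
    grad = [0.0] * len(theta)
    for x_i, y_i in zip(X, y):
        error = hypothesis(theta, x_i) - y_i
        for j, x_ij in enumerate(x_i):
            grad[j] += error * x_ij / m
    return grad

def gradient_descent(X, y, alpha=0.1, max_iters=5000, tol=1e-8):
    """Batch gradient descent; stops early once the cost barely changes."""
    theta = [0.0] * len(X[0])
    previous = cost(theta, X, y)
    for _ in range(max_iters):
        grad = gradient(theta, X, y)
        # Simultaneous update: the whole gradient is computed before any theta_j changes.
        theta = [t - alpha * g for t, g in zip(theta, grad)]
        current = cost(theta, X, y)
        if abs(previous - current) < tol:
            break
        previous = current
    return theta

# Hypothetical, deliberately overlapping data: [x_0 = 1, feature] with a 0/1 label.
X = [[1.0, 0.5], [1.0, 1.0], [1.0, 1.5], [1.0, 2.0],
     [1.0, 2.5], [1.0, 3.0], [1.0, 3.5], [1.0, 4.0]]
y = [0, 0, 0, 1, 0, 1, 1, 1]

theta = gradient_descent(X, y)
print("theta:", theta)
print("cost:", cost(theta, X, y))
print("P(y=1 | x=4.0):", hypothesis(theta, [1.0, 4.0]))
```

The labels overlap on purpose. With perfectly separable data, the cost keeps decreasing as the parameters grow, so the loop would typically end at the iteration cap rather than at a well-defined optimum.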

5. Conclusion

In this article, we’ve learned about logistic regression, a fundamental method for classification. Moreover, we’ve investigated how we can utilize the gradient descent algorithm to calculate the optimal parameters.