Learn through the super-clean Baeldung Pro experience:

>> Membership and Baeldung Pro.

No ads, dark-mode and 6 months free of IntelliJ Idea Ultimate to start with.

Last updated: December 20, 2024

Accessing data on traditional hard disks might seem straightforward at first glance. We store files, and when we need them, we request the data from the corresponding disk. However, behind the scenes, a complex process determines the order in which these requests are serviced. This process is known as disk scheduling.

Disk scheduling is a fundamental concept in operating systems and storage management. It deals with arranging the order of read and write requests to a disk to improve overall performance. Since mechanical hard disks rely on a spinning platter and a moving read/write head, the time it takes to read or write data depends heavily on the positions of that data on the disk. Different scheduling algorithms have various goals, such as minimizing delays, reducing wear and tear, and enhancing throughput.

In this tutorial, we’ll explore disk scheduling, why it matters, the underlying principles behind it, the common algorithms used, and how these approaches relate to modern storage technologies.

Hard disk drives (HDDs) are mechanical devices. They contain spinning platters coated with magnetic material and an actuator arm holding read/write heads that move over the surface of these platters.

When we request data from an HDD, the disk must perform three main actions:

Among these steps, seek time and rotational latency are the most time-consuming, often dominating the overall disk access time. Since physical movements are slow compared to electronic components, optimizing the order of requests can significantly improve performance.

If the disk receives multiple requests spread out across different areas, serving them in the order they arrive might cause the head to jump back and forth. This random movement leads to long delays for some requests and reduces the system’s throughput.

Disk scheduling tries to minimize these unnecessary movements. By reordering requests, we can reduce the average seek time, provide fairer service, and ensure that no single request waits indefinitely. Without careful scheduling, a queue of disk requests can become chaotic, leading to poor response times.

Before we dive into common scheduling algorithms, let’s consider what we aim to optimize. The typical goals of disk scheduling include:

Some algorithms focus on pure performance, while others try to maintain fairness, but we usually want to balance these goals. The best approach depends on the environment and context. Is the machine a single-user desktop or a multi-user server? Does it run specialized applications, such as database management systems, or not? We must factor in all those details when deciding which algorithm to choose.

Over the years, several disk scheduling algorithms have been developed. Although modern systems and solid-state drives (SSDs) have changed how we think about storage performance, it’s still valuable to understand these classic algorithms.

The simplest method is first-come, first-served. As its name suggests, we service disk requests in the order they arrive, without any reordering. While this is fair (no request gets priority over another), it can lead to poor performance:

For example, say we have requests at track 10, then track 190, then track 20. If the head is currently on track 0, servicing the requests in the arrival order means we go from 0 to 10, then jump all the way to 190, only to return to 20. The overall seek time is large, and we might waste time crisscrossing the disk surface.

The advantage of FCFS is its simplicity. However, in practice, FCFS often leads to high average seek times and is generally inefficient.

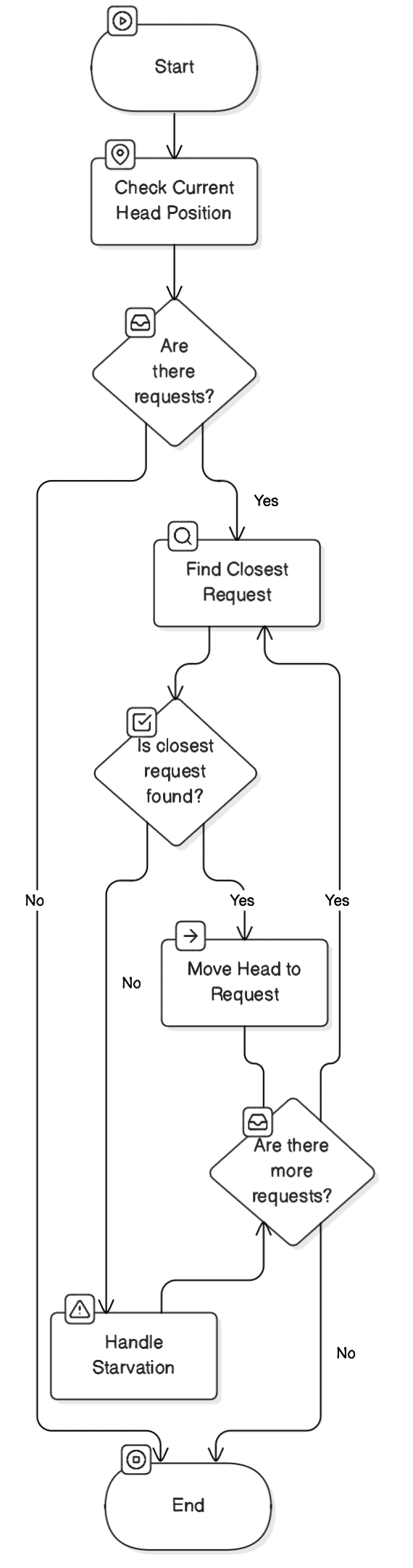

The shortest seek time first (SSTF) tries to reduce overall movement. At any given time, it chooses the requests closest to the current head position. By always processing the nearest request, this algorithm reduces the seek time:

Continuing our earlier example, if the head is at track 0 and we have requests at 10, 190, and 20, SSTF first goes to track 10 (since 10 is closest to 0). Then, of the remaining two (20 and 190), the closest is 20. After processing the request at 20, only 190 remains. This saves significant seek time compared to FCFS.

However, SSTF can cause starvation. If there’s always another request closer to the head than a specific distant request, the distant request might wait indefinitely. So, while SSTF improves average performance, it can be unfair in certain workloads.

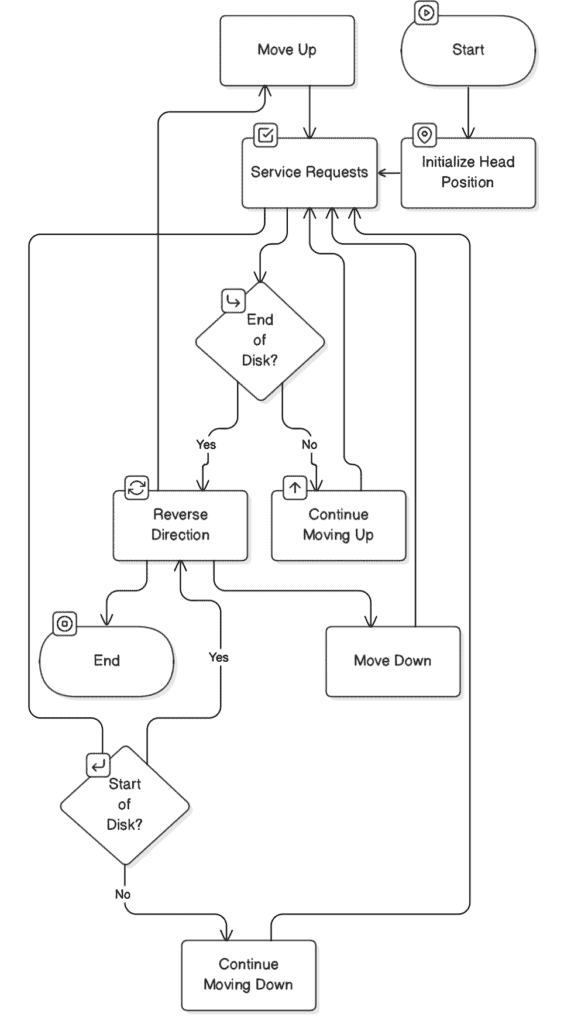

SCAN is often described as the elevator algorithm because it works similarly to how an elevator moves in a building. The head moves continuously across the disk in one direction, servicing all requests in its path. Upon reaching the end, it reverses its direction and services the requests on the way back. This pattern repeats, just like an elevator going up and down, picking up passengers along the way:

For example, if our disk is laid out from track 0 to track 200, and the head starts at track 50, moving towards higher-numbered tracks, SCAN will service all the requests from the current position up to the maximum track number, then reverse and service requests on the way down.

SCAN reduces the worst-case waiting time and provides a better sense of fairness than SSTF. Requests are serviced in an orderly manner as the head passes by. However, requests near the edges might still wait longer since the head only reaches them once per full pass.

C-SCAN, or circular SCAN, modifies SCAN to provide more uniform wait times. Instead of reversing direction after reaching the end, the head jumps back to the start (like a circular buffer) and continues in the same direction.

This way, all requests wait, at most, one full pass of the disk. C-SCAN provides a more consistent performance profile and is often considered fairer than SCAN.

LOOK and C-LOOK are variants of SCAN and C-SCAN that don’t travel to the edges of the disk if there are no requests out there. The head goes only as far as the furthest outstanding request in the current direction, then reverses or jumps back. This optimization can save time if no requests are waiting at extreme ends.

So, LOOK behaves like SCAN but stops where the last request in a direction is located, while C-LOOK behaves like C-SCAN but only travels between the extremes of the actual requests. This makes them more efficient in many real-world scenarios.

No single algorithm is suitable for all situations. Each approach has strengths and drawbacks, and the choice often depends on the workload, priorities, and specific environment.

Here, we summarize the key differences and trade-offs:

| Algorithm | Key Advantages | Key Disadvantages | Best Use Cases |

|---|---|---|---|

| FCFS | Simple and fair in terms of the arrival order | Can lead to long wait times and inefficient head movement | Environments where simplicity and strict arrival order fairness are a priority |

| SSTF | Minimizes average seek time by serving closest request first | Starvation risk for distant requests | Systems where reducing seek time is crucial, and workloads are well-distributed |

| SCAN | Predictable pattern, reduced worst-case waiting time, more fairness than SSTF | Requests at extremes may still wait longer | General-purpose systems needing balanced performance and fairness |

| C-SCAN | More uniform wait times than SCAN, better fairness for all requests | May not always minimize seek times as efficiently as SSTF | Environments where fairness and uniform waiting are more important than raw performance |

| LOOK/C-LOOK | Avoids unnecessary travel to disk ends, and can be more efficient in many real situations | Complexity is slightly higher than SCAN/C-SCAN | Workloads where requests cluster in certain areas, improving performance by not scanning empty regions |

Modern computers frequently use SSDs rather than HDDs, or at least a combination.

SSDs don’t have moving parts. Instead, they store data in flash memory cells. This changes the nature of disk scheduling because seek time and rotational latency are essentially zero on SSDs. The position of data doesn’t matter as much as it does on a spinning disk.

However, disk scheduling can still play a role. Certain operations on SSDs, such as writes, can be more expensive and wear down the cells. Therefore, we need scheduling strategies to distribute writes evenly or batch them to reduce overhead.

Additionally, in multi-user or server environments, even with SSDs, some form of scheduling may help balance load, ensure quality of service, or handle priorities.

Traditional scheduling algorithms were designed to solve problems of mechanical latency. While we may not need SCAN or SSTF in the same way for SSDs, the concept of managing I/O requests intelligently remains valuable.

In real operating systems, disk scheduling isn’t just about the algorithm. Other factors come into play:

Modern OS schedulers incorporate these factors to optimize disk performance dynamically. So, the disk scheduling algorithm is only part of a bigger puzzle that includes memory management, file system structure, and driver optimizations.

As storage technology evolves, the role of traditional disk scheduling might diminish in importance for SSD-based systems. With no mechanical parts, most of the complexity that motivated these algorithms disappears. However, scheduling might still matter for other reasons:

In technologies like NVMe-based SSDs, where multiple I/O queues are supported, scheduling is about managing queues and priorities rather than handling mechanical latencies. Some scheduling algorithms have been designed specifically for these devices. In this subfield, the focus is on quality of service, latency guarantees, and device lifetime instead of minimizing seek time.

We might wonder how much difference disk scheduling can make. On a system with a fast SSD, the possible improvement might seem negligible, but on traditional HDDs or in large-scale systems where disks handle thousands of requests per second, a good disk scheduling strategy can significantly improve throughput and response times.

Disk scheduling can influence how quickly queries return results in database servers, for instance. Busy file servers can affect how long users wait to open files. Even on personal computers, a more efficient scheduler can mean smoother multitasking and less waiting when multiple applications access the disk simultaneously.

In this article, we discussed the concept of disk scheduling.

In short, disk scheduling is all about making our storage subsystems more efficient. By understanding what it means and how it’s done, we gain insight into the intricate interplay between hardware capabilities and software intelligence. Ultimately, disk scheduling helps us get data off the disk and into memory as fast and fairly as possible, improving the overall computing experience.