1. Introduction

We often study how one variable influences another. For example, we may want to determine whether older people are more likely to develop pneumonia than younger ones, or find out how house prices vary with the number of bedrooms.

In this tutorial, we’ll discuss two statistical concepts: correlation and regression. Although they share the goal of studying the relationship between variables, they have different approaches and applications.

2. Correlation

A correlation coefficient quantifies the strength of association between two variables and indicates the direction of their relationship.

For example, Pearson's correlation coefficient $r$ ranges from -1 to +1. When $r$ is close to +1, there is a strong positive linear relationship between the variables: as one variable increases, the other tends to increase as well.

On the other hand, as $r$ approaches -1, the relationship becomes a strong negative linear one. This means that as one of the variables increases, the other tends to decrease. Finally, a correlation coefficient equal to zero indicates no linear relationship between the variables.
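To make the definition concrete, here is a minimal Python sketch of Pearson's coefficient computed directly from its formula, $r = \frac{\sum_i (x_i - \bar{x})(y_i - \bar{y})}{\sqrt{\sum_i (x_i - \bar{x})^2}\,\sqrt{\sum_i (y_i - \bar{y})^2}}$. The function name `pearson_r` and the three small datasets are our own illustrative choices:

```python
import math


def pearson_r(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("x and y must have the same length (at least 2)")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    # Numerator: sum of products of deviations from the means
    cov = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    # Denominator: product of the root sums of squared deviations
    sx = math.sqrt(sum((xi - mean_x) ** 2 for xi in x))
    sy = math.sqrt(sum((yi - mean_y) ** 2 for yi in y))
    if sx == 0 or sy == 0:
        raise ValueError("r is undefined when one variable is constant")
    return cov / (sx * sy)


x = [1, 2, 3, 4, 5]
print(pearson_r(x, [2, 4, 6, 8, 10]))  # perfect positive linear relation, r ≈ +1
print(pearson_r(x, [10, 8, 6, 4, 2]))  # perfect negative linear relation, r ≈ -1
print(pearson_r(x, [4, 1, 0, 1, 4]))   # symmetric U shape, r = 0.0
```

The third call shows why "no linear relationship" doesn't mean "no relationship": $y$ clearly depends on $x$, but the U shape has no linear trend, so $r$ is 0.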

Studying nonlinear dependencies between variables requires a more complex analysis, so we won't cover them in detail in this article. Still, Spearman's rank correlation and Kendall's tau are examples of coefficients that capture monotonic relationships, i.e., relationships in which one variable consistently increases or decreases with the other, even when the relationship isn't linear.

3. Regression

Regression estimates the functional relationship between the dependent and independent variables.

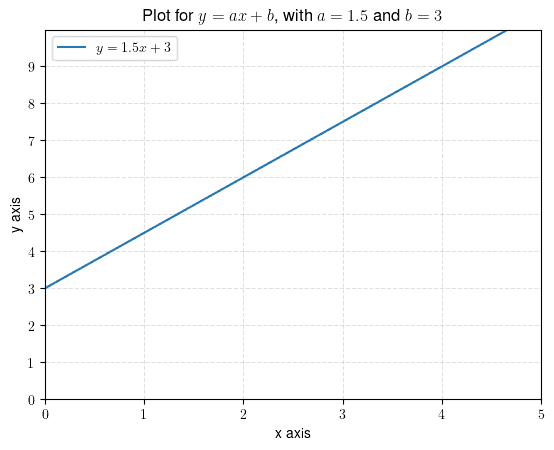

In the case of linear regression, we fit a line to the original data. The regression equation describes this line:

$$y = ax + b$$

So, linear regression finds the equation for computing the dependent variable $y$ from the independent variable $x$. In this equation, $a$ is the slope of the line, and $b$ is the point where the line intercepts the $y$ axis.
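For the simple case with one independent variable, the least-squares estimates of the line $y = ax + b$ have a closed form: $a = \frac{\sum_i (x_i - \bar{x})(y_i - \bar{y})}{\sum_i (x_i - \bar{x})^2}$ and $b = \bar{y} - a\bar{x}$. Here's a minimal Python sketch, assuming ordinary least squares as the fitting criterion; `fit_line` is a hypothetical helper name:

```python
def fit_line(x, y):
    """Ordinary least-squares slope a and intercept b for y = a*x + b."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    a = sxy / sxx              # slope
    b = mean_y - a * mean_x    # intercept: the line passes through (mean_x, mean_y)
    return a, b


x = [0, 1, 2, 3, 4]
y = [1.0, 3.0, 5.0, 7.0, 9.0]  # generated from y = 2x + 1
a, b = fit_line(x, y)
print(a, b)  # 2.0 1.0
```

Python 3.10+ also ships `statistics.linear_regression`, which returns the same slope and intercept for this criterion.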

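Furthermore, regression can handle multiple independent variables $x_1, x_2, \dots, x_n$:

$$y = b_0 + b_1 x_1 + b_2 x_2 + \dots + b_n x_n$$

In this equation, $b_0$ represents the $y$-intercept, i.e., the value of $y$ when all independent variables equal zero. Each $b_i$ represents the change in $y$ for a one-unit change in $x_i$ while the other independent variables are held constant.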
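With several independent variables, the coefficients of $y = b_0 + b_1 x_1 + \dots + b_n x_n$ are typically estimated by least squares as well. Below is a minimal sketch using NumPy's `np.linalg.lstsq`, assuming NumPy is installed; the helper `fit_multiple` and the noise-free synthetic data are illustrative:

```python
import numpy as np


def fit_multiple(X, y):
    """Least-squares estimates [b0, b1, ..., bn] for y = b0 + b1*x1 + ... + bn*xn.

    X has one row per observation and one column per independent variable.
    """
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    # Prepend a column of ones so that the first coefficient acts as the intercept b0
    design = np.column_stack([np.ones(len(X)), X])
    coeffs, *_ = np.linalg.lstsq(design, y, rcond=None)
    return coeffs


# Synthetic data generated from y = 3 + 2*x1 - 0.5*x2 (no noise)
X = [[1, 4], [2, 1], [3, 5], [4, 2], [5, 7]]
y = [3 + 2 * x1 - 0.5 * x2 for x1, x2 in X]
print(np.round(fit_multiple(X, y), 6))  # recovers b0, b1, b2
```

The column of ones in the design matrix is what turns $b_0$ into an intercept; without it, the fitted plane would be forced through the origin.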

We can also fit nonlinear functions, such as a quadratic polynomial:

$$y = ax^2 + bx + c$$

To do so, we need to choose the form of the functional relationship before fitting.
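To illustrate why the functional form has to be chosen up front, this sketch fits two candidate forms, a straight line and the quadratic $y = ax^2 + bx + c$, to the same synthetic data with NumPy's `np.polyfit` and compares their sums of squared residuals. The data and the helper name `sse` are assumptions for illustration:

```python
import numpy as np


def sse(coeffs, x, y):
    """Sum of squared residuals of a polynomial (coefficients highest power first)."""
    return float(np.sum((np.polyval(coeffs, x) - y) ** 2))


x = np.linspace(-3, 3, 13)
y = 0.5 * x**2 - x + 2           # synthetic data with a quadratic shape

line = np.polyfit(x, y, deg=1)   # chosen form: y = a*x + b
quad = np.polyfit(x, y, deg=2)   # chosen form: y = a*x**2 + b*x + c

print("linear SSE:   ", sse(line, x, y))
print("quadratic SSE:", sse(quad, x, y))
print("quadratic coefficients:", quad)
```

Both fits use the same least-squares machinery; the only difference is the form we commit to. With the straight-line form, a large residual remains no matter how the two coefficients are tuned.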

4. Examples

We’ll show the difference between correlation and regression with two examples.

4.1. Example 1

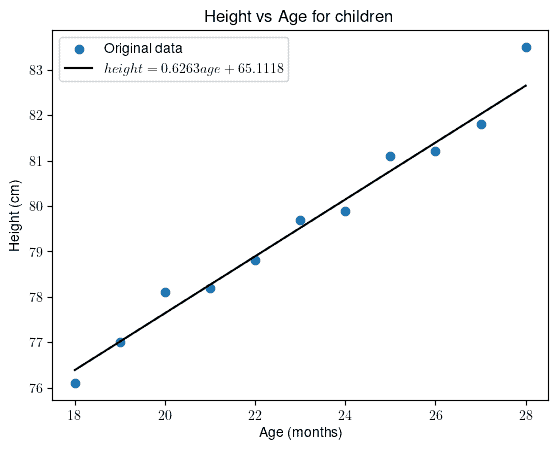

In the first scenario, we'll consider children's age in months as the independent variable and their average height as the dependent variable.

First, let's calculate the Pearson correlation coefficient. For this data, $r$ is close to +1. As expected, this indicates that height increases with age; in other words, the two variables have a strong, positive linear relationship.

But what if we want to estimate the expected height for a 32-month-old or find the mathematical form of the relationship?

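For that, we need regression. Fitting a linear regression model to this data gives us an equation of the form $\text{height} = a \cdot \text{age} + b$, with the slope $a$ and the intercept $b$ estimated from the data.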

Visually:

Although the regression line doesn't pass through every data point, the fit is clearly satisfactory.

By setting the age to 32 months in the fitted equation, we obtain the estimated height of a 32-month-old child.

4.2. Example 2

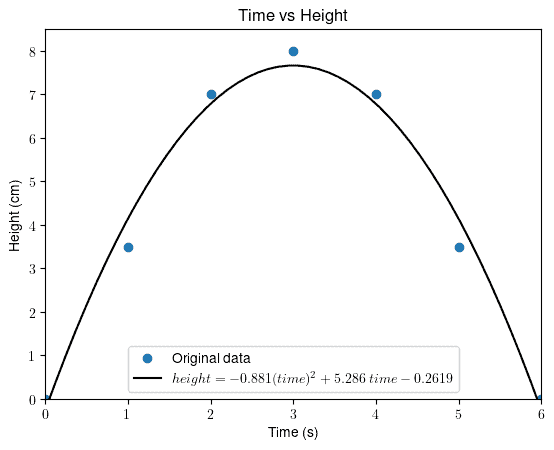

Let's say we want to model the trajectory of a ball after it's thrown. Our independent variable is the time in seconds, and our dependent variable is the height in centimeters.

Let's start by computing the Pearson correlation coefficient. We find that $r$ is close to zero, which indicates that there is no linear relationship between the two variables. However, if we plot the data and fit a quadratic model, a clear quadratic relationship emerges.

Since the relationship is nonlinear and non-monotonic (the ball rises and then falls), traditional correlation coefficients such as Pearson's, Spearman's, and Kendall's tau won't reveal it.

If the relationship were strictly increasing or decreasing (i.e., monotonic), Spearman's rank correlation or Kendall's tau would readily detect it: for a strictly monotonic relationship, both coefficients equal +1 or -1.
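The following sketch reproduces this situation numerically with an idealized, hypothetical throw: we assume no air resistance, a launch speed of 981 cm/s, and $g \approx 981$ cm/s², so the ball peaks after 1 s and lands after 2 s. These numbers are our own, not the article's data. The code computes Pearson's $r$ and Kendall's tau-a (a simple variant that doesn't adjust for ties; the helper name is hypothetical) over the whole flight and over the rising part only, then fits the quadratic form with NumPy:

```python
import numpy as np


def kendall_tau_a(x, y):
    """Kendall's tau-a: (concordant - discordant) / number of pairs; ties count as neither."""
    n = len(x)
    score = 0
    for i in range(n):
        for j in range(i + 1, n):
            score += np.sign((x[i] - x[j]) * (y[i] - y[j]))
    return float(score) / (n * (n - 1) / 2)


# Hypothetical, idealized throw (no air resistance); assumed values, not measured data
g, v0 = 981.0, 981.0                    # cm/s^2 and cm/s
t = np.linspace(0.0, 2.0, 9)            # 0, 0.25, ..., 2.0 s
h = v0 * t - 0.5 * g * t**2             # height in cm, peaks at t = v0 / g = 1 s

r = np.corrcoef(t, h)[0, 1]             # Pearson's r over the whole flight
tau_full = kendall_tau_a(t, h)          # rank-based, whole flight (rise and fall)
tau_rise = kendall_tau_a(t[:5], h[:5])  # rank-based, rising part only (monotonic)
a, b, c = np.polyfit(t, h, deg=2)       # fit the chosen form h = a*t**2 + b*t + c

print(f"Pearson r (full flight):   {r:.6f}")
print(f"Kendall tau (full flight): {tau_full:.3f}")
print(f"Kendall tau (rising part): {tau_rise:.3f}")
print(f"fitted h = {a:.1f} t^2 + {b:.1f} t + {c:.1f}")
```

Over the whole flight, both coefficients come out at (essentially) 0 because the data are symmetric around the peak, yet the quadratic fit recovers the trajectory $h = -490.5\,t^2 + 981\,t$. Restricted to the rising part, the relationship is monotonic and Kendall's tau reaches 1.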

5. Summary

Let’s summarize the main differences between correlation and regression.

6. Conclusion

In this article, we discussed correlation and regression. They provide different ways of analyzing the relationship between variables.

A correlation coefficient is a number that indicates how strongly two variables are related. In contrast, regression describes the relationship with an actual equation we can use to estimate the value of the dependent variable.

We should remember that these are complementary tools that can be used together. When we start working with some data, we can compute the correlation coefficient first. If it's strong (close to +1 or -1), it's worth investing more time in fitting a linear model to the data.