1. Overview

In this tutorial, we’ll see how Leadership Election with Consul helps to ensure data stability. We’ll provide a practical example of how to manage distributed locking in concurrent applications.

2. What Is Consul?

Consul is an open-source tool that provides service registry and discovery based on health checking. It also includes a Web Graphical User Interface (GUI) for viewing and interacting with Consul, and it offers session management and a Key-Value (KV) store.

In the next sections, we’ll focus on how we can use Consul’s session management and KV store to select the leader in applications with multiple instances.

3. Consul Fundamentals

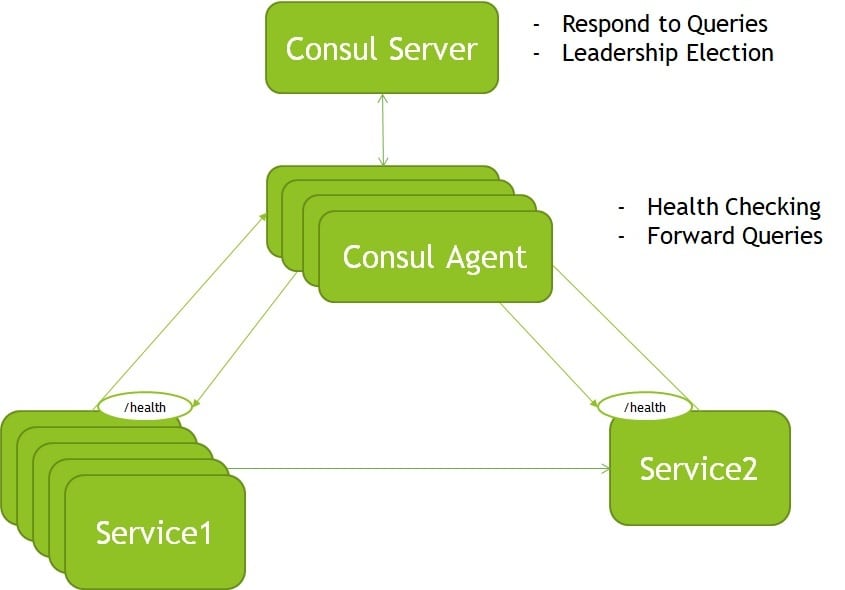

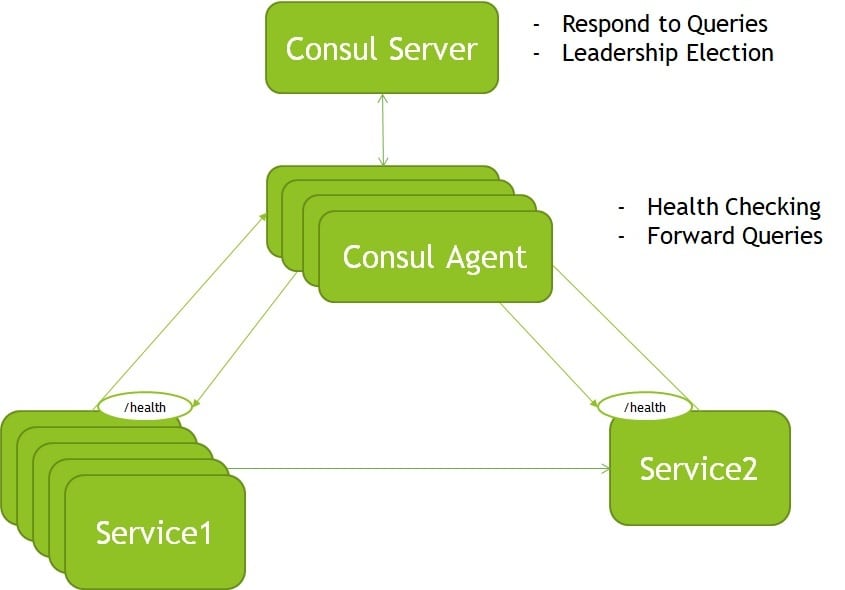

The Consul agent is the most important component running on each node of a Consul cluster. It’s in charge of health checking; registering, discovering, and resolving services; storing configuration data; and much more.

The Consul agent can run in one of two modes: server or client.

The main responsibilities of a Consul server are to respond to the queries coming from the client agents and to take part in electing the cluster leader. The leader is chosen through a consensus protocol based on the Raft algorithm, which provides Consistency (as defined by CAP).

It’s not in the scope of this article to go into detail on how the consensus works. Nevertheless, it’s worth mentioning that a server node can be in one of three states: leader, candidate, or follower. The servers also store the data and respond to queries coming from the agents.

A client agent is more lightweight than a Consul server. It’s responsible for running the health checks of the registered services and forwarding queries to the servers. Let’s see a simple diagram of a Consul cluster:

Consul can also help in other ways — for instance, in concurrent applications in which one instance must be the leader.

Let’s see in the coming sections how Consul, through session management and KV store, can provide this important capability.

4. Leadership Election With Consul

In distributed deployments, the service holding the lock is the leader. Therefore, for highly available systems, it’s critical to manage locks and leaders correctly.

Consul provides an easy-to-use KV store and session management. Those functionalities serve to build leader election, so let’s learn the principles behind them.

4.1. Leadership Contention

The first thing all the instances belonging to the distributed system do is compete for the leadership. The contention for being a leader includes a series of steps:

- All the instances must agree on a common key to contend for.

- Next, each instance creates a session through Consul’s session management.

- Third, each instance tries to acquire the lock on the agreed key with its session. If the return value is true, the lock belongs to the instance, and if false, the instance is a follower.

- The followers need to continually watch the key so that they can try to acquire the leadership again in case of failure or release.

- Finally, the leader can release the lock, and the process begins again.
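The contention steps above can be sketched without a running Consul. The following is a minimal in-memory simulation of the acquire and release semantics, not a Consul client: the class names, the session IDs, and the key are hypothetical and only illustrate that the first session to lock the key wins and the others stay followers.

```java
import java.util.Map;
import java.util.Optional;
import java.util.concurrent.ConcurrentHashMap;

// In-memory stand-in for the lock semantics of a KV store (hypothetical, not a Consul API).
class LockingKvStore {
    // key -> ID of the session currently holding the lock
    private final Map<String, String> holders = new ConcurrentHashMap<>();

    // Returns true if the session holds the lock on the key after the call.
    boolean acquire(String key, String sessionId) {
        String holder = holders.putIfAbsent(key, sessionId);
        return holder == null || holder.equals(sessionId);
    }

    // Releases the lock only if this session is the one holding it.
    boolean release(String key, String sessionId) {
        return holders.remove(key, sessionId);
    }

    // Followers can use this to find out which session is the leader.
    Optional<String> holder(String key) {
        return Optional.ofNullable(holders.get(key));
    }
}

class ContentionDemo {
    public static void main(String[] args) {
        LockingKvStore kv = new LockingKvStore();
        String key = "services/cluster/leader";

        System.out.println("node-1 acquired: " + kv.acquire(key, "session-1")); // true
        System.out.println("node-2 acquired: " + kv.acquire(key, "session-2")); // false
        System.out.println("leader session: " + kv.holder(key).orElse("none")); // session-1

        kv.release(key, "session-1"); // the leader steps down
        System.out.println("node-2 acquired: " + kv.acquire(key, "session-2")); // true
    }
}
```

In a real deployment, Consul itself guarantees that only one session can hold the lock on a key at a time; the map here merely imitates that guarantee inside a single JVM.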

Once the leader is elected, the rest of the instances use Consul KV and session management to discover the leader by:

- Retrieving the agreed key

- Getting session information to know the leader
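Both lists map onto a handful of Consul HTTP API endpoints. The sketch below only builds the corresponding requests with the JDK’s java.net.http client (Java 11+) and never sends them. The helper class, its method names, and the base URL of a local agent are assumptions made for illustration; the library used later in this article hides these calls.

```java
import java.net.URI;
import java.net.http.HttpRequest;
import java.net.http.HttpRequest.BodyPublishers;

// Hypothetical helper: builds (but never sends) the request behind each step.
class ConsulLockRequests {
    private final String baseUrl; // e.g. http://localhost:8500 (assumed local agent)

    ConsulLockRequests(String baseUrl) {
        this.baseUrl = baseUrl;
    }

    // Create a session with a TTL; Consul replies with the new session ID.
    HttpRequest createSession(String name, int ttlSeconds) {
        String body = String.format("{\"Name\": \"%s\", \"TTL\": \"%ds\"}", name, ttlSeconds);
        return put("/v1/session/create", body);
    }

    // Try to lock the agreed key; the response body is true or false.
    HttpRequest acquire(String key, String sessionId, String value) {
        return put("/v1/kv/" + key + "?acquire=" + sessionId, value);
    }

    // Give up the lock so that another instance can take over.
    HttpRequest release(String key, String sessionId) {
        return put("/v1/kv/" + key + "?release=" + sessionId, "");
    }

    // Read the agreed key; the entry's Session field identifies the lock holder.
    HttpRequest readKey(String key) {
        return get("/v1/kv/" + key);
    }

    // Look up the session to learn which node owns it.
    HttpRequest sessionInfo(String sessionId) {
        return get("/v1/session/info/" + sessionId);
    }

    private HttpRequest put(String path, String body) {
        return HttpRequest.newBuilder(URI.create(baseUrl + path))
            .PUT(BodyPublishers.ofString(body))
            .build();
    }

    private HttpRequest get(String path) {
        return HttpRequest.newBuilder(URI.create(baseUrl + path)).GET().build();
    }

    public static void main(String[] args) {
        ConsulLockRequests requests = new ConsulLockRequests("http://localhost:8500");
        String key = "services/cluster/leader";
        HttpRequest[] steps = {
            requests.createSession("node-1", 15),
            requests.acquire(key, "<session-id>".replaceAll("[<>]", ""), "node-1"),
            requests.readKey(key),
            requests.sessionInfo("session-id"),
        };
        for (HttpRequest step : steps) {
            System.out.println(step.method() + " " + step.uri());
        }
    }
}
```

Sending these requests with HttpClient.send() against a running agent would perform the actual election; keeping the sketch to request construction makes the endpoints visible without requiring a cluster.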

4.2. A Practical Example

To try this out, we need several running instances that each create a session in Consul and contend for the agreed key. To help with this, we’ll use the Kinguin Digital Limited Leadership Consul open-source Java implementation.

First, let’s include the dependency:

    <dependency>
        <groupId>com.github.kinguinltdhk</groupId>
        <artifactId>leadership-consul</artifactId>
        <version>${kinguinltdhk.version}</version>
        <exclusions>
            <exclusion>
                <groupId>com.ecwid.consul</groupId>
                <artifactId>consul-api</artifactId>
            </exclusion>
        </exclusions>
    </dependency>

We excluded the consul-api dependency to avoid conflicts between different versions of that library.

For the common key, we’ll use:

services/%s/leader
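The %s in this template is a standard format placeholder. As a small illustration, and assuming the placeholder is filled with the service name (the class and method names below are hypothetical), the key for a service called cluster resolves like this:

```java
// Hypothetical helper that resolves the agreed key for a given service.
class LeaderKey {
    static final String TEMPLATE = "services/%s/leader";

    // Assumption: the placeholder stands for the service name.
    static String forService(String serviceName) {
        return String.format(TEMPLATE, serviceName);
    }

    public static void main(String[] args) {
        System.out.println(forService("cluster")); // prints services/cluster/leader
    }
}
```

Because every instance of the same service derives the same key, they all contend for one lock, while different services never collide.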

Let’s test the whole process with a simple snippet:

    new SimpleConsulClusterFactory()
        .mode(SimpleConsulClusterFactory.MODE_MULTI)
        .debug(true)
        .build()
        .asObservable()
        .subscribe(i -> System.out.println(i));

Here, we create a cluster in multi-instance mode and call asObservable() so that subscribers can react to the election events. The leader creates a session in Consul, and all the instances verify the session to confirm leadership.

Finally, we customize the consul configuration and session management, and the agreed key between the instances to elect the leader:

    cluster:
      leader:
        serviceName: cluster
        serviceId: node-1
        consul:
          host: localhost
          port: 8500
          discovery:
            enabled: false
        session:
          ttl: 15
          refresh: 7
        election:
          envelopeTemplate: services/%s/leader
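The session settings deserve a closer look: a Consul session created with a TTL is invalidated unless it’s renewed in time, so the refresh interval has to be shorter than the TTL. The sketch below shows one way such a renewal loop could be scheduled. It assumes that ttl and refresh are expressed in seconds, and the class is hypothetical rather than the library’s actual implementation.

```java
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.ScheduledFuture;
import java.util.concurrent.TimeUnit;

// Hypothetical sketch of a periodic session renewal; not the library's own code.
class SessionRefresher {
    private final ScheduledExecutorService scheduler =
        Executors.newSingleThreadScheduledExecutor();

    // Runs the renew action every 'refresh' units; rejects intervals that would let the TTL lapse.
    ScheduledFuture<?> start(long ttl, long refresh, TimeUnit unit, Runnable renew) {
        if (refresh <= 0 || refresh >= ttl) {
            throw new IllegalArgumentException("refresh must be positive and shorter than ttl");
        }
        return scheduler.scheduleAtFixedRate(renew, refresh, refresh, unit);
    }

    // Stops renewing; the session then expires once its TTL runs out.
    void stop() {
        scheduler.shutdownNow();
    }

    public static void main(String[] args) throws InterruptedException {
        SessionRefresher refresher = new SessionRefresher();
        // Scaled-down timings so the demo ends quickly; the settings above would be (15, 7, SECONDS).
        // A real renew action would call Consul, e.g. PUT /v1/session/renew/<session-id>.
        refresher.start(300, 100, TimeUnit.MILLISECONDS,
            () -> System.out.println("renewing session"));
        Thread.sleep(350);
        refresher.stop();
    }
}
```

With a TTL of 15 and a refresh of 7, two renewal attempts fit into every TTL window, which leaves room for one failed attempt before the session is lost.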

4.3. How to Test It

There are several options to install Consul and run an agent.

One of the possibilities to deploy Consul is through containers. We’ll use the Consul Docker image available in Docker Hub, the world’s largest repository for container images.

We’ll deploy Consul using Docker by running the command:

docker run -d --name consul -p 8500:8500 -e CONSUL_BIND_INTERFACE=eth0 consul

Consul is now running, and it should be available at localhost:8500.

Let’s execute the snippet and check the steps done:

- The leader creates a session in Consul.

- Then it is elected (elected.first).

- The rest of the instances watch until the session is released:

    INFO: multi mode active
    INFO: Session created e11b6ace-9dc7-4e51-b673-033f8134a7d4
    INFO: Session refresh scheduled on 7 seconds frequency
    INFO: Vote frequency setup on 10 seconds frequency
    ElectionMessage(status=elected, vote=Vote{sessionId='e11b6ace-9dc7-4e51-b673-033f8134a7d4', serviceName='cluster-app', serviceId='node-1'}, error=null)
    ElectionMessage(status=elected.first, vote=Vote{sessionId='e11b6ace-9dc7-4e51-b673-033f8134a7d4', serviceName='cluster-app', serviceId='node-1'}, error=null)
    ElectionMessage(status=elected, vote=Vote{sessionId='e11b6ace-9dc7-4e51-b673-033f8134a7d4', serviceName='cluster-app', serviceId='node-1'}, error=null)

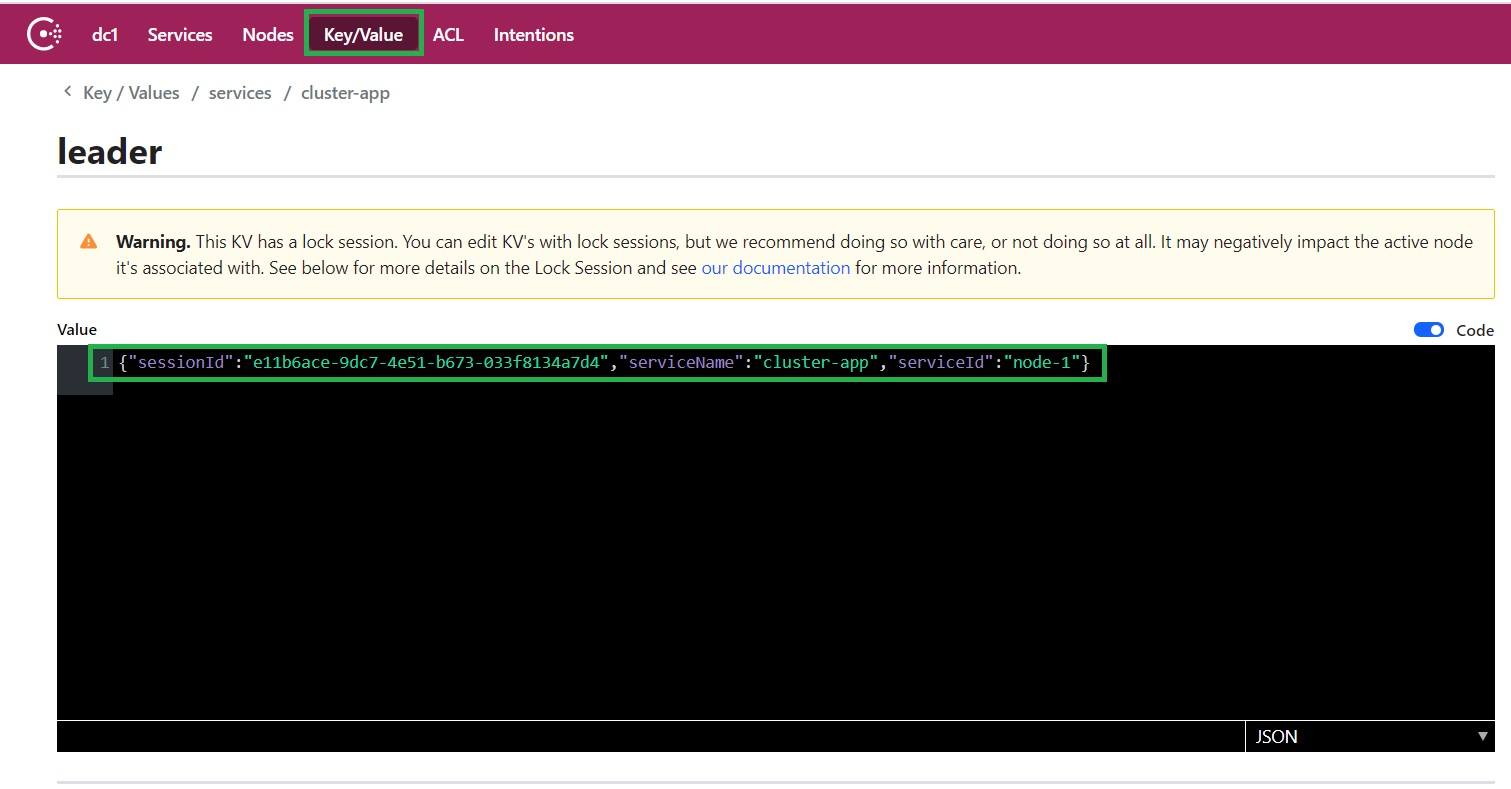

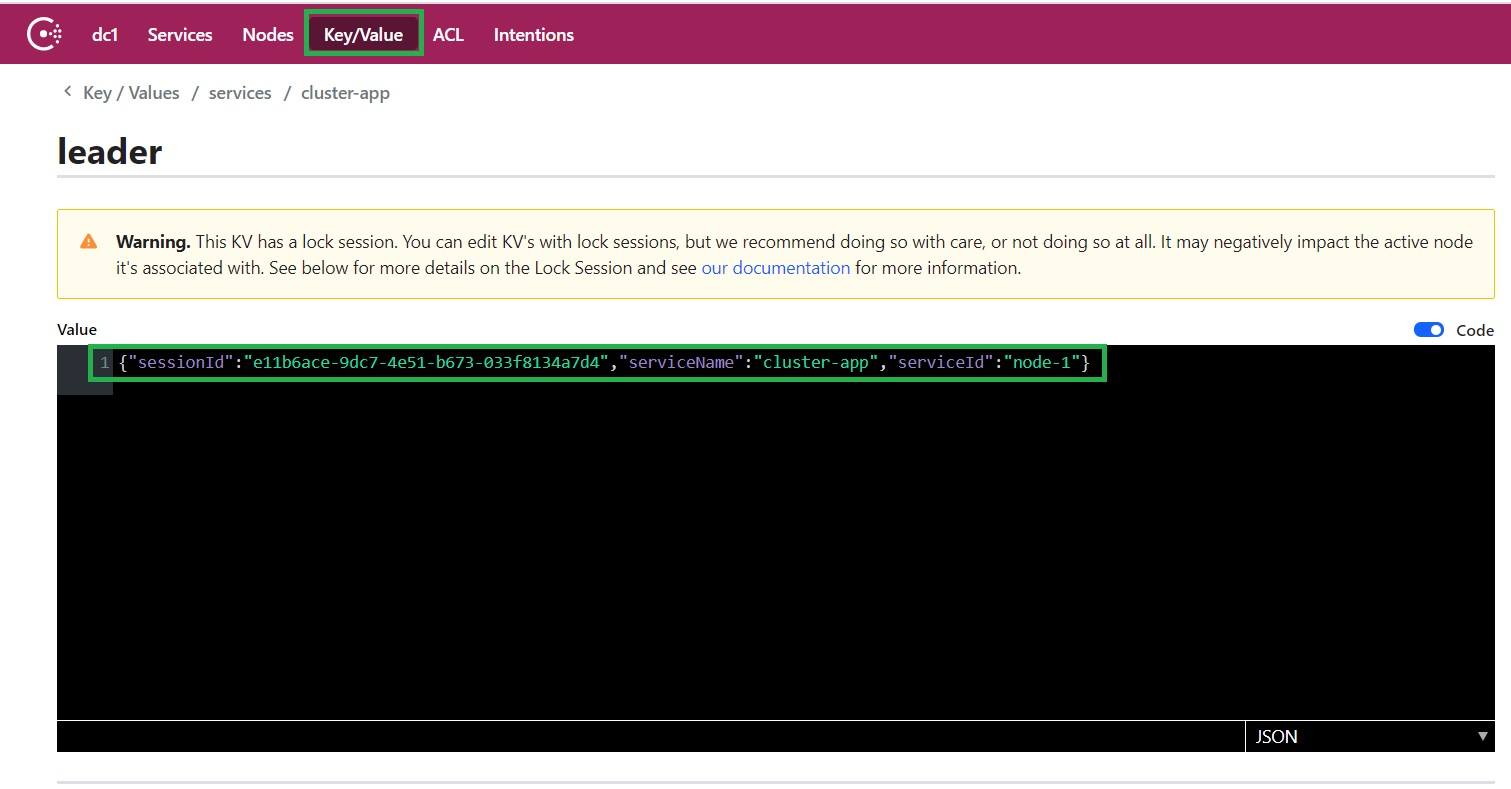

Consul also provides a Web GUI available at http://localhost:8500/ui.

Let’s open a browser and click the key-value section to confirm that the session is created:

Therefore, one of the concurrent instances created a session using the agreed key for the application. Only when the session is released can the process start over, and a new instance can become a leader.

5. Conclusion

In this article, we showed the Leadership Election fundamentals in high-performance applications with multiple instances. We demonstrated how session management and KV store capabilities of Consul can help acquire the lock and select the leader.

As always, the code is available over on GitHub.