Last updated: February 13, 2025

Support Vector Machines (SVMs) are a powerful machine learning method for classification and regression. When we apply them to a problem, the choice of margin type is a critical one. In this tutorial, we’ll zoom in on the difference between using a hard margin and a soft margin in SVM.

Let’s start with a set of data points that we want to classify into two groups. We can consider two cases for these data: either they are linearly separable, or the boundary between the classes is non-linear. When the data is linearly separable, and we don’t want to have any misclassifications, we use SVM with a hard margin. However, when a linear boundary is not feasible, or we want to allow some misclassifications in the hope of achieving better generalization, we can opt for a soft margin for our classifier.

Let’s assume that the hyperplane separating our two classes is defined as $w^T x + b = 0$:

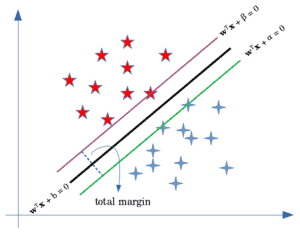

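Then, we can define the margin by two parallel hyperplanes:

$$w^T x + b_1 = 0, \qquad w^T x + b_2 = 0$$

They are the green and purple lines in the above figure. Without allowing any misclassifications in the hard margin SVM, we want to maximize the distance between the two hyperplanes. To find this distance, we can use the formula for the distance of a point from a plane. A point $x$ on the first hyperplane satisfies $w^T x = -b_1$, so its distance from the black line is $|w^T x + b| / \|w\| = |b - b_1| / \|w\|$, and similarly for the second hyperplane. So the distance of the blue points and the red point from the black line would respectively be:

$$\frac{|b - b_1|}{\|w\|} \quad \text{and} \quad \frac{|b - b_2|}{\|w\|}$$

As a result, with the separating hyperplane lying between the two, the total margin would become:

$$\frac{|b_1 - b_2|}{\|w\|}$$

We want to maximize this margin. Without loss of generality, we can consider $b_1 = b + 1$ and $b_2 = b - 1$. Subsequently, the problem would be to maximize $\frac{2}{\|w\|}$ or, equivalently, minimize $\frac{\|w\|}{2}$. To make the problem easier when taking the gradients, we’ll instead work with its squared form:

$$\min_{w,\, b} \ \frac{1}{2}\|w\|^2 \;=\; \min_{w,\, b} \ \frac{1}{2} w^T w$$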
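As a quick numerical illustration of the quantities above, the following minimal sketch computes the margin width $2 / \|w\|$ and the objective $\frac{1}{2}\|w\|^2$ for a given weight vector. The function names `margin_width` and `half_squared_norm` are hypothetical helpers chosen for this example; they are not part of any library.

```python
import math


def margin_width(w):
    """Width of the margin, 2 / ||w||, for a non-zero weight vector w."""
    norm = math.sqrt(sum(wi * wi for wi in w))
    if norm == 0:
        raise ValueError("w must be non-zero")
    return 2.0 / norm


def half_squared_norm(w):
    """The quantity (1/2) * ||w||^2 that the hard margin SVM minimizes."""
    return 0.5 * sum(wi * wi for wi in w)


# Hypothetical weight vector with ||w|| = 5
w = [3.0, 4.0]
print(margin_width(w))       # 2 / 5 = 0.4
print(half_squared_norm(w))  # 0.5 * 25 = 12.5
```

A smaller $\|w\|$ gives a wider margin, which is why minimizing $\frac{1}{2}\|w\|^2$ and maximizing the margin are the same problem.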

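This optimization comes with some constraints. Let’s assume that the labels for our classes are {-1, +1}. When classifying the data points, we want the score of the points belonging to the positive class to be at least $+1$, meaning $w^T x_i + b \geq 1$, and the score of the points belonging to the negative class to be at most $-1$, i.e. $w^T x_i + b \leq -1$.

We can combine these two constraints and express them as $y_i (w^T x_i + b) \geq 1$. Therefore our optimization problem would become:

$$\min_{w,\, b} \ \frac{1}{2}\|w\|^2 \quad \text{s.t.} \quad y_i (w^T x_i + b) \geq 1, \quad i = 1, \dots, n$$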
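The combined constraint $y_i (w^T x_i + b) \geq 1$ is easy to check for a candidate hyperplane. The sketch below does so on a small, made-up dataset; the data, the candidate values of `w` and `b`, and the helper names are all assumptions for illustration only.

```python
def functional_margins(X, y, w, b):
    """Return y_i * (w . x_i + b) for every training point."""
    return [
        yi * (sum(wj * xj for wj, xj in zip(w, xi)) + b)
        for xi, yi in zip(X, y)
    ]


def satisfies_hard_margin(X, y, w, b, tol=1e-9):
    """True if every point meets the constraint y_i * (w . x_i + b) >= 1."""
    return all(m >= 1.0 - tol for m in functional_margins(X, y, w, b))


# Made-up, linearly separable toy data
X = [(2.0, 2.0), (3.0, 1.0), (-2.0, -1.0), (-1.0, -3.0)]
y = [1, 1, -1, -1]

print(satisfies_hard_margin(X, y, w=(0.5, 0.5), b=0.0))  # True
print(satisfies_hard_margin(X, y, w=(0.1, 0.1), b=0.0))  # False
```

The second candidate points in the same direction and classifies every point correctly, but its $\|w\|$ is too small for the points to reach the margin hyperplanes, so it is not feasible for the hard margin problem.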

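This optimization is called the primal problem and is guaranteed to have a global minimum. We can solve this by introducing Lagrange multipliers ($\alpha_i \geq 0$, one per training point) and converting it to the dual problem:

$$L(w, b, \alpha) = \frac{1}{2} w^T w - \sum_{i=1}^{n} \alpha_i \left[ y_i (w^T x_i + b) - 1 \right]$$

This is called the Lagrangian function of the SVM, which is differentiable with respect to $w$ and $b$. Setting these derivatives to zero gives:

$$\nabla_w L = 0 \ \Rightarrow \ w = \sum_{i=1}^{n} \alpha_i y_i x_i, \qquad \frac{\partial L}{\partial b} = 0 \ \Rightarrow \ \sum_{i=1}^{n} \alpha_i y_i = 0$$

By substituting them into the Lagrangian function, we’ll get the dual problem of SVM:

$$\max_{\alpha} \ \sum_{i=1}^{n} \alpha_i - \frac{1}{2} \sum_{i=1}^{n} \sum_{j=1}^{n} \alpha_i \alpha_j y_i y_j \, x_i^T x_j \quad \text{s.t.} \quad \sum_{i=1}^{n} \alpha_i y_i = 0, \quad \alpha_i \geq 0$$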

The dual problem is easier to solve since its only variables are the Lagrange multipliers. Also, the fact that the dual problem depends on the training data only through inner products comes in handy when extending linear SVM to learn non-linear boundaries.
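To make the link between the dual and the primal concrete, consider a made-up two-point problem: $x_1 = (1, 1)$ with label $+1$ and $x_2 = (-1, -1)$ with label $-1$. The equality constraint forces both multipliers to be equal, say to $a$, and the dual objective reduces to $2a - 4a^2$, which is maximized at $a = 0.25$. The sketch below, with hypothetical helper names, evaluates the dual objective at that point and recovers $w$ and $b$ from the multipliers using $w = \sum_i \alpha_i y_i x_i$.

```python
def dot(u, v):
    """Inner product of two equal-length vectors."""
    return sum(ui * vi for ui, vi in zip(u, v))


def dual_objective(alpha, X, y):
    """sum_i alpha_i - 1/2 * sum_ij alpha_i alpha_j y_i y_j <x_i, x_j>."""
    n = len(X)
    quad = sum(
        alpha[i] * alpha[j] * y[i] * y[j] * dot(X[i], X[j])
        for i in range(n)
        for j in range(n)
    )
    return sum(alpha) - 0.5 * quad


def recover_primal(alpha, X, y):
    """Recover (w, b) from the multipliers of a hard margin solution."""
    dim = len(X[0])
    w = [sum(a * yi * xi[k] for a, yi, xi in zip(alpha, y, X)) for k in range(dim)]
    # A support vector (alpha_i > 0) lies exactly on the margin:
    # y_i * (w . x_i + b) = 1, hence b = y_i - w . x_i for labels in {-1, +1}.
    sv = next(i for i, a in enumerate(alpha) if a > 1e-12)
    b = y[sv] - dot(w, X[sv])
    return w, b


X = [(1.0, 1.0), (-1.0, -1.0)]
y = [1, -1]
alpha = [0.25, 0.25]  # maximizer of 2a - 4a^2 for this toy problem

w, b = recover_primal(alpha, X, y)
print(w, b)                         # [0.5, 0.5] 0.0
print(dual_objective(alpha, X, y))  # 0.25
print(0.5 * dot(w, w))              # 0.25, the matching primal objective
```

The dual and primal objective values coincide here, and the resulting margin $2 / \|w\| = 2\sqrt{2}$ is exactly the distance between the two points.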

The soft margin SVM follows a somewhat similar optimization procedure with a couple of differences. First, in this scenario, we allow misclassifications to happen. So we’ll need to minimize the misclassification error, which means that we’ll have to deal with one more constraint. Second, to minimize the error, we should define a loss function. A common loss function used for soft margin is the hinge loss:

$$\max\{0, \ 1 - y_i (w^T x_i + b)\}$$

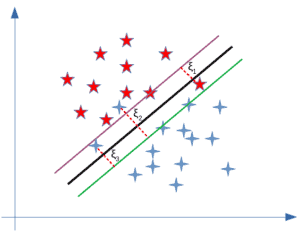
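The hinge loss, $\max\{0, 1 - y_i (w^T x_i + b)\}$, can be written in one line. The sketch below uses a hypothetical `hinge_loss` helper and three illustrative scores to show its three regimes.

```python
def hinge_loss(y, score):
    """Hinge loss max(0, 1 - y * score) for a label y in {-1, +1}."""
    return max(0.0, 1.0 - y * score)


# score stands for w . x + b of a positive example (y = +1)
print(hinge_loss(+1, 2.5))   # 0.0: correct and outside the margin
print(hinge_loss(+1, 0.5))   # 0.5: correct but inside the margin
print(hinge_loss(+1, -1.0))  # 2.0: misclassified
```

Note that a point can incur a loss even when it is classified correctly, as long as it falls inside the margin.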

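The loss of a point that violates the margin is called a slack variable, $\xi_i$, and is added to the primal problem that we had for hard margin SVM. So the primal problem for the soft margin becomes:

$$\min_{w,\, b,\, \xi} \ \frac{1}{2}\|w\|^2 + C \sum_{i=1}^{n} \xi_i \quad \text{s.t.} \quad y_i (w^T x_i + b) \geq 1 - \xi_i, \quad \xi_i \geq 0, \quad i = 1, \dots, n$$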

A new regularization parameter $C$ controls the trade-off between maximizing the margin and minimizing the loss. As you can see, the difference between this primal problem and the one for the hard margin is the addition of slack variables. The new slack variables ($\xi_i$ in the figure) add flexibility for misclassifications of the model.

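Finally, we can also compare the dual problems. For the hard margin:

$$\max_{\alpha} \ \sum_{i=1}^{n} \alpha_i - \frac{1}{2} \sum_{i=1}^{n} \sum_{j=1}^{n} \alpha_i \alpha_j y_i y_j \, x_i^T x_j \quad \text{s.t.} \quad \sum_{i=1}^{n} \alpha_i y_i = 0, \quad \alpha_i \geq 0$$

And for the soft margin:

$$\max_{\alpha} \ \sum_{i=1}^{n} \alpha_i - \frac{1}{2} \sum_{i=1}^{n} \sum_{j=1}^{n} \alpha_i \alpha_j y_i y_j \, x_i^T x_j \quad \text{s.t.} \quad \sum_{i=1}^{n} \alpha_i y_i = 0, \quad 0 \leq \alpha_i \leq C$$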

As you can see, in the dual form, the difference is only the upper bound applied to the Lagrange multipliers.

The choice between a hard margin and a soft margin in SVMs depends mainly on the separability of the data. If our data is linearly separable, we can go for a hard margin. However, if this is not the case, a hard margin is not feasible. In the presence of data points that make it impossible to find a linear classifier, we have to be more lenient and let some of the data points be misclassified. In this case, a soft margin SVM is appropriate.

Sometimes, the data is linearly separable, but the margin is so small that the model becomes prone to overfitting or too sensitive to outliers. In this case too, we can opt for a larger margin by using soft margin SVM in order to help the model generalize better.
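The following minimal sketch illustrates this trade-off on made-up one-dimensional data containing a single outlier. It evaluates the soft margin objective $\frac{1}{2}\|w\|^2 + C \sum_i \xi_i$, with each slack $\xi_i$ set to the hinge loss, for two hand-picked candidate hyperplanes. The data, the two candidates, and the values of $C$ are assumptions for illustration; the candidates are not the output of a solver.

```python
def dot(u, v):
    """Inner product of two equal-length vectors."""
    return sum(ui * vi for ui, vi in zip(u, v))


def soft_margin_objective(X, y, w, b, C):
    """(1/2) * ||w||^2 + C * sum of slacks, with slack_i = hinge loss of point i."""
    slack = [max(0.0, 1.0 - yi * (dot(w, xi) + b)) for xi, yi in zip(X, y)]
    return 0.5 * dot(w, w) + C * sum(slack)


# Made-up 1-D data: the positive point at -1.5 is an outlier near the negatives
X = [(2.0,), (3.0,), (-1.5,), (-2.0,), (-3.0,)]
y = [1, 1, 1, -1, -1]

narrow = ((4.0,), 7.0)  # separates every point, margin width 2 / 4 = 0.5
wide = ((0.5,), 0.0)    # margin width 2 / 0.5 = 4, misclassifies the outlier

for C in (1.0, 100.0):
    obj_narrow = soft_margin_objective(X, y, narrow[0], narrow[1], C)
    obj_wide = soft_margin_objective(X, y, wide[0], wide[1], C)
    print(C, obj_narrow, obj_wide)
# C = 1.0:   narrow 8.0, wide 1.875   -> the wide margin has the lower objective
# C = 100.0: narrow 8.0, wide 175.125 -> the narrow, separating margin has the lower objective
```

With a small $C$, sacrificing the outlier in exchange for a much wider margin gives the lower objective, while a large $C$ penalizes the violation so heavily that the behavior approaches that of a hard margin.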

In this tutorial, we focused on clarifying the difference between a hard margin SVM and a soft margin SVM.