Last updated: April 1, 2022

In this tutorial, we’ll discuss the differences between strong-AI and weak-AI, as represented in the scientific literature on artificial intelligence.

By the end of the tutorial, we’ll be able to separate the science-fiction aspects of the discourse from those drawn from the scientific and philosophical literature. We’ll also be able to point out the most important differences between these two approaches to the conceptualization of AI.

In popular culture, as well as in the scientific discourse, a lot of attention is given to developments in the field of artificial intelligence. From the hostile AI Skynet in Terminator to the wise android R. Daneel Olivaw in Asimov’s Robot series, there is barely any work of science fiction that doesn’t deal with the theme of artificial intelligence and its interaction with society.

This is important because, as we study in this article, the distinction between strong and weak AI isn’t only a matter of engineering. It has to do, rather, with the relationship between AI and human groups.

As computer systems and the software that runs on them become more complex, their interaction with human society also becomes more complex and more difficult to study. In the scientific discourse, journals such as Information, Frontiers in Psychology, Technology in Society, and Cognitive Systems Research all cover various aspects of the interaction between human societies and strong AI systems.

But what exactly is a strong AI, and why do we need to conceptualize it in order to study the interaction between people and technology and thus forecast the future of this interaction?

In many of those venues, both in the popular and in the scientific discourse, the question emerges as to what a future society that uses AI would look like. Would artificial intelligence systems be kind towards humankind, or would they want to enslave and subdue it? Would they try to promote the intellectual development of humankind, or would they attempt to impede it and wage more senseless wars?

We could reply to these questions: of course neither, since a machine can neither think nor feel. It would therefore be illegitimate to ask them in the first place: a program can only do what it has been programmed to do. Are we so sure, though, that there can’t be any machines that think?

This is exactly why it’s important to distinguish between strong and weak artificial intelligence: we’d like to decide whether machines think like humans or not, and in either case why. To decide that, it’s useful to conceptualize what the answer to this problem would look like.

Now, two possible answers can be given to the previous question about machine cognition: according to some, machines can think in principle in the same manner as humans do; according to others, this is impossible, and a computer remains qualitatively different from a human, no matter how sophisticated.

From this, it follows that there are two approaches we can now take, in order to make sense of the distinction between weak and strong AI. One is based upon the idea that “strong” implies “weak”, and thus comparison; and that the comparison between human and machine intelligence is therefore possible. This corresponds to the modern distinction between the two concepts.

The other approach, based upon the philosophy of mind and the works of Searle, corresponds instead to the traditional definition of strong AI. It first assumes the existence of a qualitative difference between the human brain and mind and then discusses whether this can also be found in AI systems. Let’s now see both approaches, in turn.

We may be tempted to think that whatever the words “strong-AI” and “weak-AI” mean, they define some characteristics of AI systems. If we believed that, we could argue that distinguishing between strong and weak AI systems requires studying the AI systems themselves.

This idea is incorrect, though, since the concept of weak versus strong systems implies comparison. So what exactly should we compare, when deciding whether some AI system is strong or weak? Do we compare two AI systems with one another? If an AI system is “stronger” than a “weak” AI system, whatever this may mean, does it imply that the stronger AI system is a “strong AI”?

Counter-intuitively, we don’t compare AI systems with one another; rather, we compare AI systems with people, in relation to some tasks that both can perform.

We can argue that it’s a good idea to believe that humans have an out-of-ordinary capacity to affect their environment and modify it, and to call that capacity “intelligence”. It’s also a good idea to think that, whatever this intelligence is, humans have more of it than other animals; say, a beaver or a woodpecker. If we didn’t postulate that humans are intelligent, and more so than other animals, we’d have a hard time explaining why the environment around them changes much more rapidly and in many more sophisticated ways than the environment around those same animals.

It therefore makes sense to rank systems, and biological systems in particular, in terms of their intelligence, even though the identification of a well-defined metric is still an open subject of research. It also makes sense to think that, if a given biological system is capable of performing each and every function performed by another, such that the set of functions performed by the two is identical, then the systems are equally intelligent. Indeed, if two distinct systems performed equally on any given task when evaluated with the same metric, it would be a good idea to think of those systems as having the same degree of intelligence.

This last point is needed because the intra-species distribution of intelligence among humans seems to have a relatively low variance when compared to the inter-species distribution between animals; though some argue that things aren’t so neat and clear.

One reason why things aren’t so clear is that humans are generally considered more intelligent than other biological systems whenever we ask the humans themselves to self-assess their intelligence. They also score themselves higher when we ask them to compare themselves with AI systems. As a consequence, they tell us that if there exist metrics according to which humans score higher than an AI, then that AI is a weak AI.

Of course, we need to identify some specific task or some specific metric for comparison before we can score AI systems against humans. In the past, we used to think that playing chess was an inherently complex task, out of reach for AI because of the dimensionality of its search space. Now that AI systems outperform the best human players at chess, and Go has followed suit, we have identified other tasks which humans can perform well and AI systems can’t.

Some of these tasks involve playing videogames, driving vehicles, and the identification of legal norms that apply in concrete legal judgments. However, as the progress in automating these tasks continues, we may observe that these, too, might disprove the idea that AI is weaker than humans.

Conversely, we could also consider an AI system to be stronger than humans, if it scored higher on some specific tasks or even better in the set of all possible tasks. In this context, if we agree that an enumeration of human cognitive functions is in principle possible, we can then equate the definition of strong AI with the definition of general artificial intelligence.

If we apply this approach, the term strong AI can therefore be used as a synonym for general AI, while the term weak AI can be used as a synonym for narrow AI.
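The comparison-based reading of strong and weak AI can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not an established benchmark: the task names, the scores, and the `classify_ai` helper are all hypothetical, and we assume that every task is scored on a shared scale where higher is better.

```python
from typing import Dict


def classify_ai(ai_scores: Dict[str, float], human_scores: Dict[str, float]) -> str:
    """Label an AI system 'strong' or 'weak' relative to a human baseline.

    Assumption: the system counts as 'strong' (general) only if it matches
    or exceeds the human score on every task the humans were scored on.
    A task missing from ai_scores is treated as one the AI can't perform.
    """
    for task, human_score in human_scores.items():
        ai_score = ai_scores.get(task)
        if ai_score is None or ai_score < human_score:
            return "weak"
    return "strong"


# Hypothetical scores on a 0-1 scale, for illustration only.
human = {"chess": 0.70, "driving": 0.95, "legal_reasoning": 0.90}
chess_engine = {"chess": 0.99}
hypothetical_general_system = {
    "chess": 0.99,
    "driving": 0.96,
    "legal_reasoning": 0.92,
}

print(classify_ai(chess_engine, human))                 # weak
print(classify_ai(hypothetical_general_system, human))  # strong
```

In this sketch, the chess engine is labeled weak even though it beats the human baseline at chess, because it covers only one of the tasks in the enumeration. The result depends entirely on which tasks and metrics we choose to include.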

It’s unclear whether general artificial intelligence, i.e., the performance of all human tasks or functions via automated means, is indeed possible. Even if the identification of all human tasks were possible, however, the performance of humans in conducting those tasks would still matter in order to determine which is stronger, the AI or the humans.

Therefore, we’d still need a benchmark or metric for assessing the relative performances of AI vis-à-vis humans, which would bring us back to the problem of asking the humans to score themselves against AI systems.

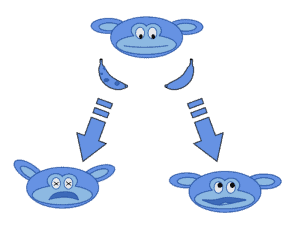

There is one way out of the bias that derives from this arbitrary selection of metrics for assessing intelligence. Let’s assume for a second that the set of tasks or functions that a biological organism performs, and that we use to assess intelligence and to compare the intelligence of AI systems with that of humans, consists exclusively of the identification of rotten food in a set of food items.

There are good evolutionary reasons why, if we’re born primates, we may want to learn how to distinguish fresh fruit from spoiled fruit. In this manner, we can decide to eat the former and thrive, and avoid the latter, which would cause us stomachache and disease.

The process of identification of spoiled or rotten food, when conducted exclusively on the basis of images, corresponds largely to the identification of odd-colored spots in the texture of the food items. This task is being studied by researchers in machine learning, and humans might be outperformed by AI in the near future.
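As a rough illustration of what “identifying odd-colored spots” might mean computationally, we can sketch a toy detector in plain Python. This isn’t how the systems studied in the literature work: the image representation (nested lists of RGB tuples), the median-color reference, the function names, and both thresholds are assumptions made for this example.

```python
from statistics import median
from typing import List, Tuple

Pixel = Tuple[int, int, int]  # (red, green, blue), each in 0-255


def odd_spot_fraction(image: List[List[Pixel]], tolerance: int = 60) -> float:
    """Return the fraction of pixels whose color is far from the typical color.

    The typical color is the per-channel median over the whole image.
    A pixel is 'odd' if any channel differs from it by more than `tolerance`.
    """
    pixels = [p for row in image for p in row]
    if not pixels:
        raise ValueError("image must contain at least one pixel")
    reference = [median(p[c] for p in pixels) for c in range(3)]
    odd = sum(
        1
        for p in pixels
        if max(abs(p[c] - reference[c]) for c in range(3)) > tolerance
    )
    return odd / len(pixels)


def looks_spoiled(image: List[List[Pixel]], tolerance: int = 60,
                  max_odd_fraction: float = 0.1) -> bool:
    """Flag the item as spoiled if too many pixels are odd-colored."""
    return odd_spot_fraction(image, tolerance) > max_odd_fraction


# Toy 4x4 images: a uniformly red fruit, and the same fruit with a brown spot.
RED: Pixel = (200, 30, 30)
BROWN: Pixel = (60, 40, 20)
fresh = [[RED] * 4 for _ in range(4)]
spotted = [row[:] for row in fresh]
for r in (1, 2):
    for c in (1, 2):
        spotted[r][c] = BROWN

print(looks_spoiled(fresh))    # False
print(looks_spoiled(spotted))  # True
```

A real classifier would learn which colors and textures indicate spoilage from labeled images rather than rely on fixed thresholds. The sketch only shows that the task can be framed as a measurable function of the pixels.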

Let’s now also assume that we can play with the genetic endowment of humans, and change two of their attributes. We can then conduct two thought experiments:

In the first experiment, we can see that the AI system performs better than humans in a task that isn’t needed for the survival of human groups. If humans fed exclusively on water, and if AI systems were better than humans in identifying spoiled fruit, this would contribute nothing to the fitness of the humans that develop those systems. Human tribes would not grow or perish according to the computed output of the machines, since the task is built in such a manner as to not contribute to human fitness.

We would subsequently expect that, if we asked a human expert to score the relative intellectual capacities of humans and of an AI that performs spoiled food recognition tasks, the expert would score the AI as a specialist or narrow AI, and therefore classify it as a weak AI.

Notice that this is irrespective of the accuracy of the AI system in identifying rotten food; and also irrespective of the fact that, in our real world, this task has indeed evolutionary advantages. We can think, mutatis mutandis, about how little the capacity of AI to outperform humans in chess has impacted the consideration that AI systems perform narrow cognitive tasks and not general tasks.

In the second experiment, we can argue that the AI system that uses color and identifies fresh food accurately is better than humans in a task that contributes to human fitness. If we accept the assumption that the only task that humans perform is eating fruit, and if the AI system fares better at identifying which fruit is fresh, then this implies that the AI system is stronger than the humans against which we compare it.

Notice also that, in making this consideration, we aren’t introducing any bias with the arbitrary selection of metrics upon which we judge the relative strength of biological and computational systems. While the AI system doesn’t necessarily require fresh food to survive, if indeed it can identify fresh food better than humans, then the AI system is fitter than humans on the basis of the same metric upon which humans are certainly judged: that of fitness and of natural selection.

This argument, less treated by the literature on the subject but nonetheless relevant, could be a way out of the problem of choosing metrics according to which we evaluate the relative cognitive capacities of human and AI systems.

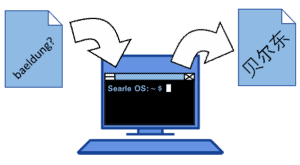

The last point to consider, for completeness’ sake, is that the current definition of weak versus strong AI isn’t the one originally employed by the creator of the terminology. The concepts of weak versus strong AI were first introduced in the work conducted by Searle on the conceptualization of AI and its relationship with human intellect.

Searle’s most famous gedankenexperiment is the Chinese room, in relation to which the idea of strong AI was developed. In the experiment, Searle imagined having a book that contained a set of rules that associated any given question in Chinese with a corresponding answer in the same language.

Searle argued that, if a human is inserted in the process of translation, then the translation itself is certainly conducted by a conscious entity, namely the human. If, however, one removed the human from the translation loop, with no identifiable difference in the output obtained through the translation procedure, the question as to whether this procedure remained conscious would be much harder to answer.
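To make the setup concrete, we can model the rule book as a simple lookup table. The sketch below is a deliberately naive illustration, not Searle’s own formalization: the rule book entries, the fallback answer, and the function names are all hypothetical.

```python
from typing import Dict

# Hypothetical rule book: each question in Chinese maps to an answer in Chinese.
RULE_BOOK: Dict[str, str] = {
    "你好吗？": "我很好，谢谢。",        # "How are you?" -> "I'm fine, thanks."
    "你叫什么名字？": "我没有名字。",    # "What's your name?" -> "I have no name."
}
FALLBACK = "对不起，我不明白。"          # "Sorry, I don't understand."


def apply_rule_book(question: str) -> str:
    """Match the question symbol by symbol and return the listed answer."""
    return RULE_BOOK.get(question, FALLBACK)


def room_with_human(question: str) -> str:
    """Stands in for a person who follows the book by hand."""
    return apply_rule_book(question)


def room_without_human(question: str) -> str:
    """The same rules, applied with nobody in the loop."""
    return apply_rule_book(question)


question = "你好吗？"
print(room_with_human(question) == room_without_human(question))  # True
```

From the outside, the two rooms are indistinguishable because they produce the same output for every input. Whether anything in either room understands or is conscious can’t be read off the output, which is why this definition is hard to test empirically.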

This line of thought led him to develop the original formulation of the difference between weak and strong AI. According to this idea, a weak AI is an AI system that doesn’t have a mind or consciousness. A strong AI, in contrast, is an AI system that possesses a state of mind or consciousness similar to that of the human who translates texts.

Notice that this definition is more common in philosophy of mind and less common in computer science, since the latter must rely on things that can be observed or computed. This approach also becomes less defensible unless we subscribe to Cartesian dualism; if we don’t, the distinction between brain activity and mind becomes much fuzzier than the one contained in Searle’s original definition.

For this reason, when we treat the differences between strong and weak AI it’s important to clarify first whether we believe that a biological brain is needed to be conscious, or whether consciousness is independent of the substratum that’s used in biological neural networks. It may thus be more useful to think in terms of the relative cognitive functions of humans and AI systems, as we did earlier, rather than focusing on the subjective and conscious experiences of people and extending them to computers. This is because the latter approach requires much stronger assumptions on the nature of consciousness and intelligence.

In this article, we discussed the differences between strong-AI and weak-AI.