1. Overview

In this tutorial, we’ll talk about occlusion in image processing. First, we’ll define the term, and then we’ll discuss why occlusion has to be taken into account in image processing, with some examples.

2. Definition

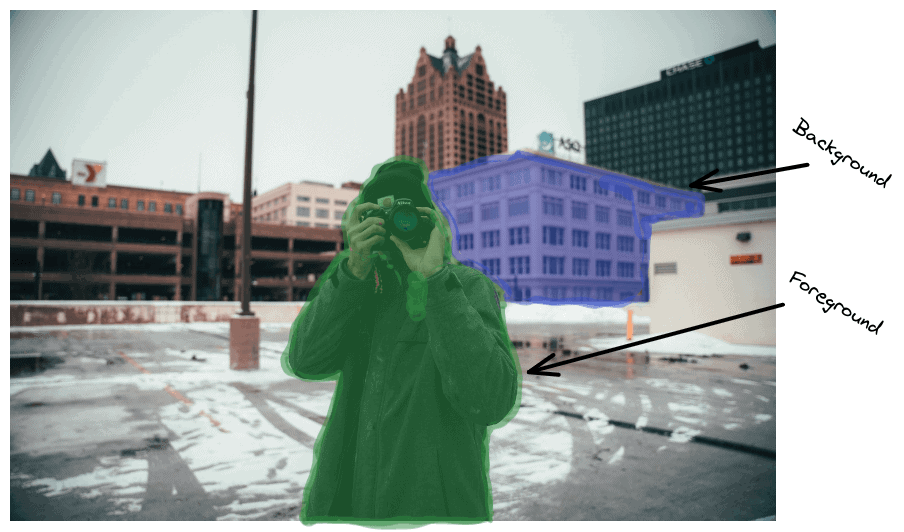

Let’s suppose that we are in a 3-dimensional scene that contains some objects, and we take a picture of this scene. By definition, the picture corresponds to a projection of that part of the scene into a 2-dimensional space. In this space, the foreground objects of the scene will occlude the background surfaces.

Put simply, occlusion in an image occurs when an object hides a part of another object. The areas that are occluded depend on the position of the camera relative to the scene.
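To make the dependence on the camera position concrete, here is a minimal sketch that projects a flat, top-down scene onto a one-dimensional image and keeps only the nearest point per pixel. The function name `visible_labels`, the simple pinhole model, and the toy coordinates are assumptions made for illustration, not part of any particular library:

```python
def visible_labels(points, camera_x=0.0, focal_length=10.0):
    """Project (label, x, z) points onto a 1D image and keep the nearest one per pixel.

    x is the sideways position and z is the distance from the camera plane.
    """
    nearest = {}  # pixel -> (depth, label)
    for label, x, z in points:
        if z <= 0:
            continue  # points behind the camera are not projected
        # Pinhole projection: image coordinate is proportional to offset / depth.
        pixel = round(focal_length * (x - camera_x) / z)
        # A closer point occludes a farther one that lands on the same pixel.
        if pixel not in nearest or z < nearest[pixel][0]:
            nearest[pixel] = (z, label)
    return {pixel: label for pixel, (_, label) in nearest.items()}


# Hypothetical scene: a photographer standing in front of a building.
scene = [("building", 0.0, 20.0), ("photographer", 0.0, 2.0)]

print(visible_labels(scene))                # {0: 'photographer'}
print(visible_labels(scene, camera_x=2.0))  # {-1: 'building', -10: 'photographer'}
```

With the camera at `x = 0`, both points land on the same pixel and only the photographer is visible. After moving the camera sideways, the two points project to different pixels and the building point is no longer occluded.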

For example, in the image below, the photographer (foreground object) occludes a part of the building (background surface):

3. Importance

Occlusion is considered one of the most common events that reduce the available visual information. Since a lot of visual information is hidden and cannot be captured, occlusion is one of the main reasons many tasks in image processing and computer vision are still very hard to solve.

Consider the problem of object tracking, where the goal is to identify and follow one or more objects in an environment. It has numerous applications, including car and pedestrian tracking in autonomous driving. However, if the object we are tracking is at some point occluded by another object, the system might lose track of it. In applications like autonomous driving, this can have catastrophic effects. Therefore, tracking algorithms should include steps or techniques that account for possible occlusions.
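One simple way a tracker can account for short occlusions is to keep predicting the object's position from its last known velocity while no detection is available. The sketch below is an assumption-laden illustration in one dimension: the function `track_with_coasting`, the `max_missed` parameter, and the constant-velocity model are hypothetical choices, and real trackers typically use more robust estimators:

```python
def track_with_coasting(detections, max_missed=3):
    """Follow a 1D position; `None` in `detections` means the object is occluded.

    While occluded, the track "coasts" on the last estimated velocity for at
    most `max_missed` consecutive frames, after which the track is dropped.
    """
    position = None
    velocity = 0.0
    missed = 0
    track = []
    for detection in detections:
        if detection is not None:
            if position is not None:
                velocity = detection - position  # displacement per frame
            position = detection
            missed = 0
        elif position is not None and missed < max_missed:
            position += velocity  # predict through the occlusion
            missed += 1
        else:
            position = None  # occluded for too long: the track is lost
            velocity = 0.0
        track.append(position)
    return track


# The object moves one unit per frame and is hidden in frames 3 and 4.
frames = [0.0, 1.0, 2.0, None, None, 5.0]
print(track_with_coasting(frames))                # [0.0, 1.0, 2.0, 3.0, 4.0, 5.0]
print(track_with_coasting(frames, max_missed=1))  # [0.0, 1.0, 2.0, 3.0, None, 5.0]
```

The `max_missed` limit is a trade-off: a larger value bridges longer occlusions but lets the prediction drift further from the true position.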

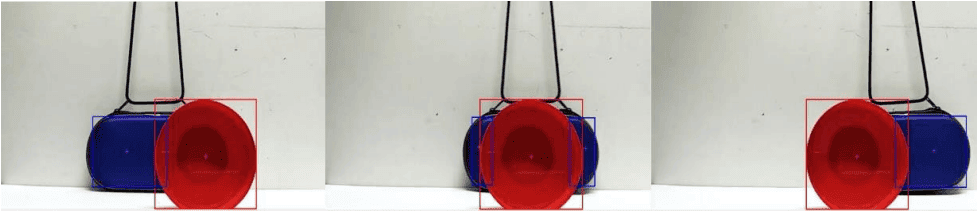

In the images below, we can see a common problem in object tracking due to occlusion when we use a tracker based only on the color of the objects:

In the left and right images, the system tracks both objects correctly but neglects the occluded regions of the blue object. In the center image, the system wrongly perceives the blue object as two separate objects.
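The splitting effect can be reproduced with a toy color-based detector that counts connected regions of one color. This is only a sketch: the character grids, the 4-neighbor connectivity, and the function name `count_color_blobs` are assumptions made for illustration:

```python
from collections import deque


def count_color_blobs(grid, color):
    """Count 4-connected regions of `color` in a grid given as a list of strings."""
    rows, cols = len(grid), len(grid[0])
    seen = set()
    blobs = 0
    for r in range(rows):
        for c in range(cols):
            if grid[r][c] != color or (r, c) in seen:
                continue
            blobs += 1  # found a pixel of a region not visited yet
            seen.add((r, c))
            queue = deque([(r, c)])
            while queue:  # breadth-first flood fill over the region
                cr, cc = queue.popleft()
                for nr, nc in ((cr + 1, cc), (cr - 1, cc), (cr, cc + 1), (cr, cc - 1)):
                    inside = 0 <= nr < rows and 0 <= nc < cols
                    if inside and grid[nr][nc] == color and (nr, nc) not in seen:
                        seen.add((nr, nc))
                        queue.append((nr, nc))
    return blobs


# 'B' = blue object, 'R' = red object, '.' = background.
separate = [
    "BBBB..R",
    "BBBB..R",
]
overlapping = [  # the red object passes in front of the blue one
    "BBRBB",
    "BBRBB",
]

print(count_color_blobs(separate, "B"))     # 1
print(count_color_blobs(overlapping, "B"))  # 2
```

When the red object crosses in front of the blue one, the blue pixels form two disconnected regions, so a detector that relies on color alone reports two blue objects.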

4. Examples

Now we’ll present some cases where occlusion is important and should be taken into account when designing a system.

4.1. Facial Recognition

In facial recognition, our goal is to determine the identity of a person from their face. The input is a facial image, and the output is the name of that person. People often wear hats, glasses, and other accessories that occlude important parts of their faces. So, a facial recognition system should be robust to this kind of occlusion in order to perform well.

In the image below, we can see a woman wearing a mask. A facial recognition system may fail to identify her since her nose and mouth are hidden:

4.2. Augmented Reality

The goal of an augmented reality system is to enhance the user’s vision with computer-generated imagery. The main problem is how to realistically blend virtual imagery with real-world objects. Since virtual objects are drawn on top of the real scene, occlusion between real and virtual objects is very common, and handling it correctly is essential for an effective augmented reality system.
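A common way to reason about this is a per-pixel depth test: a virtual pixel is drawn only where it is closer to the camera than the real surface behind it. The following sketch assumes that a depth value is available for every real pixel, which in practice requires a depth sensor or a depth estimate. The function `composite` and the toy one-row "image" are hypothetical:

```python
def composite(real_colors, real_depths, virtual_colors, virtual_depths):
    """Blend one row of virtual pixels into a real image using a depth test.

    A virtual pixel of `None` means nothing virtual is rendered at that position.
    """
    output = []
    for real_c, real_d, virt_c, virt_d in zip(
        real_colors, real_depths, virtual_colors, virtual_depths
    ):
        if virt_c is not None and virt_d < real_d:
            output.append(virt_c)  # virtual content is in front of the real surface
        else:
            output.append(real_c)  # real surface occludes the virtual content
    return output


# A wall 5 units away and a hand 1 unit away; a virtual label floats at depth 3.
real_colors = ["wall", "wall", "hand", "hand"]
real_depths = [5.0, 5.0, 1.0, 1.0]
virtual_colors = [None, "label", "label", None]
virtual_depths = [None, 3.0, 3.0, None]

print(composite(real_colors, real_depths, virtual_colors, virtual_depths))
# ['wall', 'label', 'hand', 'hand']
```

The label covers the wall because it is closer than the wall, but the hand stays visible in front of the label because it is closer to the camera than the label.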

In the image below, we can see the interface of an augmented reality system for shopping. The virtual labels occlude some parts of the real-world objects:

5. Conclusion

In this tutorial, we talked about occlusion. We started with a definition of the term, and then we talked about its important role in many computer vision and image processing tasks.