Last updated: February 28, 2025

In this tutorial, we’ll explain what a regressor is and use examples to illustrate how to interpret regressors in different regression models. We’ll also go over what regression analysis is and why we need it.

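A regressor is a statistical term. It refers to any variable in a regression model that is used to predict a response variable. A regressor is also known as:

- an independent variable
- a predictor (or predictor variable)
- an explanatory variable
- a feature
- a covariate
- an input variable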

We use these terms interchangeably, depending on the field we’re working in, such as machine learning, statistics, biology, or econometrics.

Let’s take a look at regression analysis to get a better understanding of the regressor.

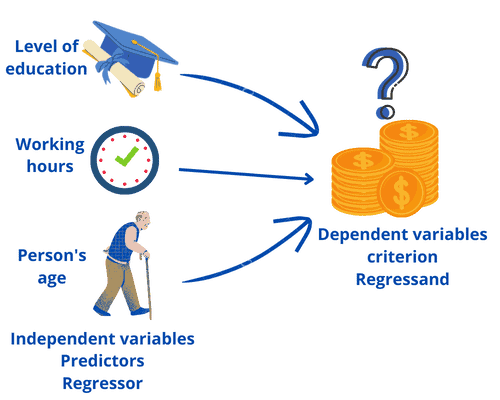

Regression analysis allows us to infer or predict a variable based on one or more other variables. For example, suppose we want to find out what influences people’s salaries.

We can predict a person’s salary from their highest level of education, weekly working hours, and age. The variable we want to predict (here, salary) is called the dependent variable, regressand, or criterion. The variables we use for the prediction are called regressors, independent variables, or predictors.

Regression analysis can serve two goals.

The first goal is to measure the influence of one or more variables on another variable, for example, how strongly education affects salary.

The second goal is to predict a variable from one or more other variables, for example, estimating a person’s salary from their education, working hours, and age.

A regression model describes how changes in a regressor are associated with changes in the regressand (or “response variable”). On its own, the model doesn’t prove that one causes the other.

A model can have one or more regressors. When there is only one regressor, we call it a simple linear regression model. When there are several, we call it a multiple linear regression model.

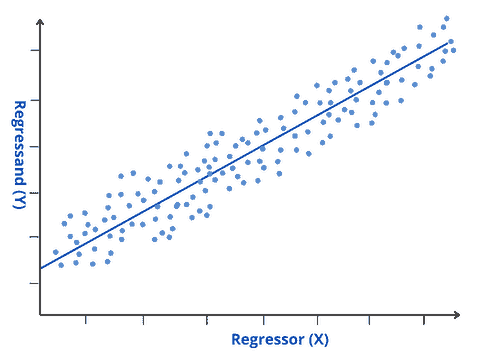

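Simple linear regression is a linear approach that fits a straight line through the data points so as to minimize the error between the line and the points, typically measured as the sum of squared residuals. It is one of the most fundamental and straightforward types of regression in machine learning, and it assumes that the relationship between the regressor and the regressand is linear. Let’s take a look at the simple linear regression model:

$$y = \beta_0 + \beta_1 x + \varepsilon$$

where:

- $y$ is the regressand (response variable)
- $x$ is the regressor
- $\beta_0$ is the intercept, the expected value of $y$ when $x = 0$
- $\beta_1$ is the slope, the average change in $y$ for a one-unit increase in $x$
- $\varepsilon$ is the error term, the part of $y$ the line doesn’t explain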

This method is straightforward because it relates a single regressor to the regressand. The image below shows how linear regression approximates the relationship between a regressor on the $x$-axis and a regressand on the $y$-axis:

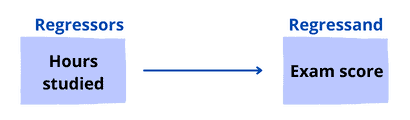
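For reference, when the error is measured as the sum of squared residuals (ordinary least squares, the most common choice), the best-fitting line $\hat{y} = \hat{\beta}_0 + \hat{\beta}_1 x$ has a closed-form solution. Given $n$ observations $(x_i, y_i)$ with sample means $\bar{x}$ and $\bar{y}$:

$$\hat{\beta}_1 = \frac{\sum_{i=1}^{n} (x_i - \bar{x})(y_i - \bar{y})}{\sum_{i=1}^{n} (x_i - \bar{x})^2}, \qquad \hat{\beta}_0 = \bar{y} - \hat{\beta}_1 \bar{x}$$

In words, the slope is the covariance of the regressor and the regressand divided by the variance of the regressor, and the fitted line always passes through the point $(\bar{x}, \bar{y})$.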

Let’s look at how the number of hours studied affects exam scores. We collect data and fit a simple linear regression model with the exam score as the regressand and the hours studied as the regressor.

Let’s see a block representation of the model:

As we can see, our model has only one regressor: the number of hours studied. Its coefficient indicates how many points, on average, the exam score increases for every additional hour studied.
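To make this interpretation concrete, here’s a minimal Python sketch that fits a simple linear regression with the least-squares formulas. The six data points are hypothetical, made up for illustration, so the resulting coefficients are not taken from the example above:

```python
# Hypothetical data (for illustration only): hours studied and exam scores
hours = [1, 2, 3, 4, 5, 6]
scores = [55, 60, 66, 70, 76, 81]


def fit_simple_linear_regression(x, y):
    """Return (intercept, slope) of the least-squares line y = b0 + b1 * x."""
    n = len(x)
    x_mean = sum(x) / n
    y_mean = sum(y) / n
    # Slope: sum of cross-deviations divided by sum of squared x-deviations
    sxy = sum((xi - x_mean) * (yi - y_mean) for xi, yi in zip(x, y))
    sxx = sum((xi - x_mean) ** 2 for xi in x)
    slope = sxy / sxx
    # The fitted line passes through the point of means
    intercept = y_mean - slope * x_mean
    return intercept, slope


intercept, slope = fit_simple_linear_regression(hours, scores)
print(f"score = {intercept:.2f} + {slope:.2f} * hours")  # score = 49.80 + 5.20 * hours
print(f"Each extra hour studied adds about {slope:.2f} points on average")
```

Here, the slope of 5.2 reads exactly like the regressor coefficient in the text: with this made-up data, each additional hour studied is associated with about 5.2 more points on average.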

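We use multiple linear regression when we have more than one regressor. Polynomial regression can also be viewed as a multiple linear regression, since the powers of a single variable ($x$, $x^2$, and so on) act as separate regressors while the model stays linear in its coefficients. Including several relevant regressors often lets the model fit the data better than a simple linear regression with a single regressor. Let’s take a look at the multiple linear regression model:

$$y = \beta_0 + \beta_1 x_1 + \beta_2 x_2 + \dots + \beta_p x_p + \varepsilon$$

where:

- $y$ is the regressand
- $x_1, \dots, x_p$ are the $p$ regressors
- $\beta_0$ is the intercept
- $\beta_j$ is the average change in $y$ for a one-unit increase in $x_j$, holding the other regressors constant
- $\varepsilon$ is the error term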

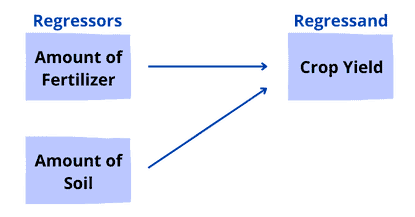

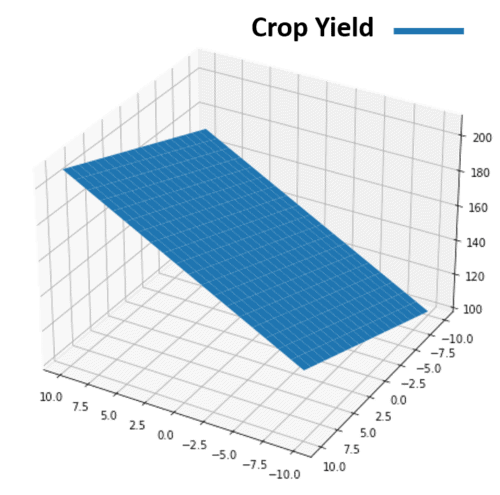
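Because a polynomial model is still linear in its coefficients, we can fit it exactly like a multiple linear regression by treating $x$ and $x^2$ as two separate regressors. Here’s a minimal sketch, assuming NumPy is available and using hypothetical, noise-free data generated from $y = 2 + 0.5x + 3x^2$:

```python
import numpy as np

# Hypothetical, noise-free data generated from y = 2 + 0.5x + 3x^2
x = np.array([0.0, 1.0, 2.0, 3.0, 4.0, 5.0])
y = 2 + 0.5 * x + 3 * x**2

# Design matrix: a column of ones (intercept), then x and x^2 as two regressors
X = np.column_stack([np.ones_like(x), x, x**2])

# Ordinary least squares: find beta minimizing ||X @ beta - y||^2
beta, *_ = np.linalg.lstsq(X, y, rcond=None)
print(beta)  # expected to be close to 2, 0.5, and 3
```

The design matrix `X` is the only place where the polynomial appears; the fitting step is the same least-squares call we’d use for any set of regressors.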

Let’s look at which factors may influence total crop yield (in pounds). We collect data and fit a multiple linear regression model with total crop yield as the regressand.

This model has two regressors: Fertilizer and Soil. Let’s see a block representation of the model:

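Let’s interpret these two regressors:

- The coefficient of Fertilizer gives the average change in total crop yield, in pounds, for each additional unit of fertilizer, assuming Soil stays the same
- The coefficient of Soil gives the average change in total crop yield, in pounds, for a one-unit increase in Soil, assuming Fertilizer stays the same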
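Here’s a minimal sketch of how such a model could be fitted with NumPy. The numbers are hypothetical: Fertilizer is taken in pounds, Soil is assumed to be a numeric soil-quality score, and the yields were generated from $100 + 2 \cdot \text{Fertilizer} + 15 \cdot \text{Soil}$ without noise, so the fit recovers exactly those coefficients:

```python
import numpy as np

# Hypothetical observations (made up for illustration):
# fertilizer applied (lb), an assumed soil-quality score, and total crop yield (lb).
# Yields were generated as 100 + 2 * fertilizer + 15 * soil, with no noise.
fertilizer = np.array([10.0, 20.0, 30.0, 40.0, 50.0, 60.0])
soil = np.array([3.0, 5.0, 4.0, 6.0, 5.0, 7.0])
crop_yield = np.array([165.0, 215.0, 220.0, 270.0, 275.0, 325.0])

# Design matrix: a column of ones for the intercept, then one column per regressor
X = np.column_stack([np.ones_like(fertilizer), fertilizer, soil])

# Ordinary least squares via NumPy's least-squares solver
beta, *_ = np.linalg.lstsq(X, crop_yield, rcond=None)
intercept, b_fertilizer, b_soil = beta

print(f"intercept: {intercept:.2f}")
print(f"fertilizer: {b_fertilizer:+.2f} lb of yield per extra lb (soil held constant)")
print(f"soil: {b_soil:+.2f} lb of yield per extra soil point (fertilizer held constant)")
```

Each printed coefficient matches the interpretation above: it describes the effect of one regressor while the other one is held fixed.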

The image below represents how multiple linear regression approximates the relationship between the regressors and the regressand:

In machine learning, regression models are trained to learn the relationship between various regressors and a regressand. As a result, the model can capture how several factors jointly relate to the regressand and use them to predict it.
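One common way such models are trained is gradient descent on the mean squared error. The sketch below, assuming NumPy and hypothetical synthetic data generated from $y = 1 + 2x_1 - 3x_2$, starts with zero weights and repeatedly moves them against the gradient until they approach the coefficients that generated the data. The learning rate and number of epochs are illustrative choices, not tuned values:

```python
import numpy as np

# Hypothetical training data: 200 samples, two regressors each,
# with targets generated from y = 1 + 2*x1 - 3*x2 (no noise)
rng = np.random.default_rng(seed=0)
X = rng.uniform(-1.0, 1.0, size=(200, 2))
y = 1.0 + 2.0 * X[:, 0] - 3.0 * X[:, 1]


def train_linear_regression(X, y, learning_rate=0.1, epochs=2000):
    """Learn weights and bias by batch gradient descent on the mean squared error."""
    n_samples, n_features = X.shape
    w = np.zeros(n_features)
    b = 0.0
    for _ in range(epochs):
        error = X @ w + b - y  # prediction minus target for every sample
        # Gradients of mean(error**2) with respect to w and b
        grad_w = 2.0 / n_samples * (X.T @ error)
        grad_b = 2.0 / n_samples * error.sum()
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


w, b = train_linear_regression(X, y)
print(w, b)  # weights close to [2, -3], bias close to 1
```

For plain linear regression, the least-squares solution can also be computed directly; gradient descent becomes useful for larger models and datasets where solving it directly is impractical.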

Let’s take a look at some applications for machine learning regression models:

In this article, we explored the terms regressor and regressand. We also went over regression analysis and its types. Finally, we used examples to show how to interpret regressors in simple and multiple linear regression models.