1. Introduction

In this tutorial, we’ll discuss the unique features of MPEG-4 and the compression methods it uses.

We’ll provide helpful tips and share best practices.

2. MPEG-4

MPEG-4 introduces capabilities that go beyond what earlier techniques could achieve. It employs sophisticated algorithms for video and audio compression, which lead to higher compression ratios while still maintaining acceptable quality. This allows for efficient storage and transmission of multimedia content.

Additionally, MPEG-4 supports interactive multimedia content by enabling users to interact with and manipulate objects within the video, reaching a level of interactivity that wasn’t achievable with earlier techniques.

This format allows content to be encoded at different quality and resolution levels, adjusting to varying network conditions and device capabilities. In contrast, earlier methods had limited scalability. MPEG-4 also incorporates error-resilience techniques that improve robustness against transmission errors, packet loss, and other problems that could degrade the quality of compressed video.

Its progressive content encoding, real-time quality adjustments, and adaptability to varying network bandwidth make it ideal for network streaming. Additionally, it offers support for an extensive array of devices and platforms, ensuring consistent multimedia experiences across diverse devices, from mobile phones to high-definition televisions.

MPEG-4 efficiently manages intricate scenes combining textures, motion, and shapes, leading to superior compression and rendering compared to previous techniques.

3. Various Compression Techniques for MPEG-4

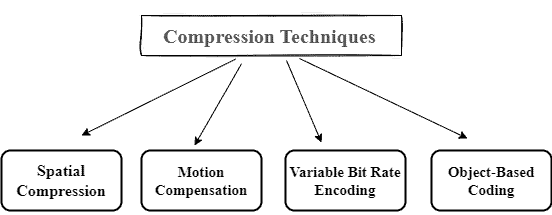

We’ll cover four compression techniques: spatial compression, motion compensation, variable bit rate encoding, and object-based coding.

3.1. Spatial Compression

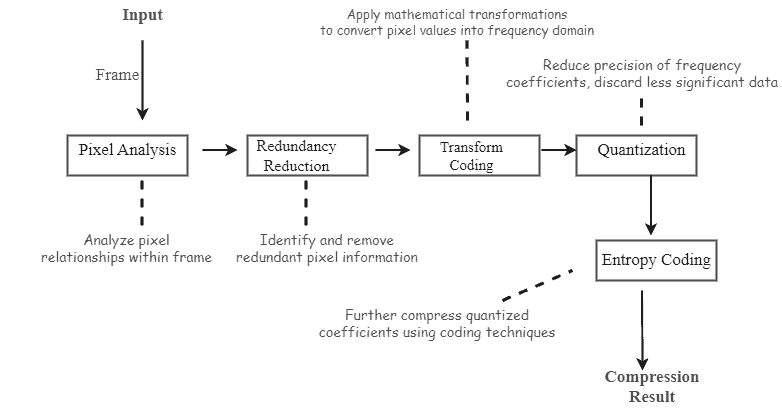

Spatial compression analyzes the relationships between pixels to reduce redundancy within a single frame, which makes it particularly suitable for static images or videos with minimal motion. It exploits the similarity of neighboring pixel values, which arises from the inherent patterns, textures, and structures in the image.

This approach effectively reduces the amount of data while upholding quality.
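As a rough illustration of how neighboring-pixel similarity turns into fewer values to store, the sketch below applies a 2D discrete cosine transform (DCT) to one block and quantizes the result. The DCT is the transform commonly used for this purpose in block-based video codecs; the 8x8 block size, the quantization step of 16, and the helper names `dct_2d` and `quantize` are assumptions made for this example, not values taken from the standard.

```python
import math

N = 8  # block size; 8x8 is an assumption of this sketch


def dct_2d(block):
    """Orthonormal 2D DCT-II of an N x N block of pixel values."""
    def alpha(k):
        return math.sqrt(1 / N) if k == 0 else math.sqrt(2 / N)

    out = [[0.0] * N for _ in range(N)]
    for u in range(N):
        for v in range(N):
            s = 0.0
            for x in range(N):
                for y in range(N):
                    s += (block[x][y]
                          * math.cos((2 * x + 1) * u * math.pi / (2 * N))
                          * math.cos((2 * y + 1) * v * math.pi / (2 * N)))
            out[u][v] = alpha(u) * alpha(v) * s
    return out


def quantize(coeffs, step):
    """Uniform quantization: small coefficients collapse to zero."""
    return [[round(c / step) for c in row] for row in coeffs]


# A smooth horizontal gradient: neighboring pixels are nearly equal.
block = [[100 + 2 * y for y in range(N)] for _ in range(N)]

quantized = quantize(dct_2d(block), step=16)
nonzero = sum(1 for row in quantized for q in row if q != 0)
print(f"{nonzero} of {N * N} coefficients are nonzero after quantization")
```

For a smooth block like this one, only a handful of the 64 coefficients survive quantization, so the block can be described with far fewer values than its 64 original pixels.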

3.2. Motion Compensation

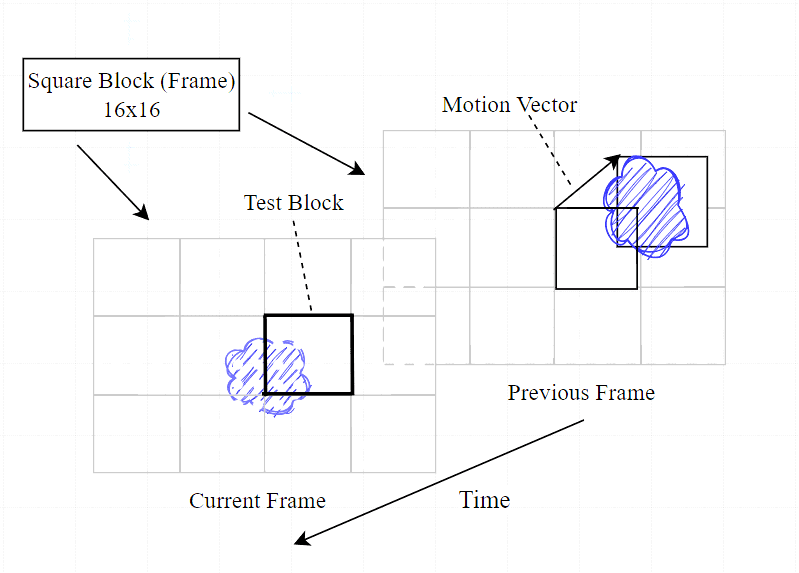

Motion compensation is a video compression technique that reduces redundancy between frames by describing the predictable motion between consecutive frames instead of encoding the moved content again.

First, the encoder selects a reference frame, usually a previously encoded one, as the basis for comparison with the current frame. It then compares pixel blocks of the current frame with the reference frame. This process, called motion estimation, identifies pixel blocks that have shifted due to object movement.

Then, we calculate a motion vector for each pixel block that has shifted to indicate the direction and extent of the movement. With this vector, we predict the pixels of the current frame from the reference frame. Subtracting the predicted pixel values from the actual pixel values generates a “residual” block that holds the differences. Because these differences are typically much smaller in magnitude than the original pixel values, the residual takes less data to encode.
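The steps above can be sketched with a simple exhaustive block-matching search. This is a minimal illustration, assuming a sum-of-absolute-differences (SAD) cost, a 4x4 block, and a search range of 2 pixels; the function names and the synthetic frames are hypothetical, and real encoders use faster search strategies.

```python
def sad(frame, ref, bx, by, dx, dy, size):
    """Sum of absolute differences between a block and a shifted reference block."""
    total = 0
    for y in range(size):
        for x in range(size):
            total += abs(frame[by + y][bx + x] - ref[by + y + dy][bx + x + dx])
    return total


def estimate_motion(frame, ref, bx, by, size=4, search=2):
    """Return the (dx, dy) offset into the reference frame with the lowest SAD."""
    h, w = len(ref), len(ref[0])
    best, best_cost = (0, 0), sad(frame, ref, bx, by, 0, 0, size)
    for dy in range(-search, search + 1):
        for dx in range(-search, search + 1):
            # Skip candidates that fall outside the reference frame.
            if not (0 <= by + dy and by + dy + size <= h
                    and 0 <= bx + dx and bx + dx + size <= w):
                continue
            cost = sad(frame, ref, bx, by, dx, dy, size)
            if cost < best_cost:
                best, best_cost = (dx, dy), cost
    return best


def residual(frame, ref, bx, by, mv, size=4):
    """Actual block minus the block predicted from the reference frame."""
    dx, dy = mv
    return [[frame[by + y][bx + x] - ref[by + y + dy][bx + x + dx]
             for x in range(size)] for y in range(size)]


def make_frame(obj_x, obj_y, size=8):
    """Synthetic frame: flat background with a textured 4x4 object."""
    frame = [[10] * size for _ in range(size)]
    for y in range(4):
        for x in range(4):
            frame[obj_y + y][obj_x + x] = 100 + 10 * y + x
    return frame


ref = make_frame(1, 2)   # object at x=1 in the reference frame
cur = make_frame(2, 2)   # object moved one pixel to the right

mv = estimate_motion(cur, ref, bx=2, by=2)
res = residual(cur, ref, 2, 2, mv)
print(mv)   # (-1, 0): the block is found one pixel to the left in the reference
print(res)  # all zeros, since the prediction is exact for this synthetic case
```

In this idealized case the residual is entirely zero; with real footage it is small but nonzero, and it is this residual, together with the motion vector, that gets encoded.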

3.3. Variable Bit Rate Encoding (VBR)

VBR optimizes video and audio compression by adjusting the bit rate based on content complexity. In contrast to constant bit rate (CBR) encoding, which uses a fixed bit rate, VBR improves quality by dynamically varying the rate from scene to scene.

A VBR encoder analyzes content complexity before encoding. Scenes with fast motion, fine detail, or high activity receive more bits, while simple scenes receive fewer. In this way, VBR maintains uniform perceptual quality across the video and preserves detail in intricate scenes.

VBR encoders adjust to scene complexity shifts, assigning fewer bits in simpler scenes after complex ones. With more bits in complex scenes, VBR minimizes artifacts like blocking and blurring, enhancing visual quality and reducing distortion.
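To make the allocation idea concrete, here is a minimal sketch that splits a fixed bit budget across scenes in proportion to a complexity score. The proportional rule, the function name `allocate_bits`, and the complexity scores are assumptions chosen for illustration; actual encoders use more elaborate rate-control models.

```python
def allocate_bits(complexities, total_bits):
    """Split a bit budget across scenes in proportion to their complexity."""
    total = sum(complexities)
    if total == 0:
        # No complexity information: fall back to an even split.
        return [total_bits // len(complexities)] * len(complexities)
    return [round(total_bits * c / total) for c in complexities]


# Hypothetical complexity scores for four scenes (higher = more motion/detail).
scene_complexity = [1, 4, 2, 1]

vbr = allocate_bits(scene_complexity, total_bits=8000)
cbr = [8000 // len(scene_complexity)] * len(scene_complexity)

print(vbr)  # [1000, 4000, 2000, 1000]
print(cbr)  # [2000, 2000, 2000, 2000]
```

Both schemes spend the same 8000 bits overall, but the VBR-style split gives the complex second scene four times as many bits as the simple ones.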

3.4. Object-Based Coding

Object-based coding is a multimedia compression method that encodes individual objects within scenes separately. Unlike traditional video compression, which treats frames as a whole, this technique permits versatile manipulation, editing, and focused transmission of objects or regions within videos.

The process starts with segmenting the scene into separate objects or regions, which can range from simple shapes to complex elements like people, animals, or vehicles. Each object is encoded on its own, including visual features, audio, motion, and metadata. Effective motion representation, prediction, and compensation are enabled by tracking object movement across frames.

Each object is compressed with suitable techniques, such as motion compensation, texture coding, and shape coding. Metadata encodes the object’s attributes and behaviors, facilitating dynamic content. During decoding, the objects are combined into a scene, which allows real-time interaction and dynamic scene composition.
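The decoding side of this idea can be sketched as a tiny scene compositor. This is only an illustration under simplifying assumptions: each object carries a position, a texture, and a binary shape mask, and the names `VideoObject` and `compose` are hypothetical rather than part of any MPEG-4 API.

```python
from dataclasses import dataclass


@dataclass
class VideoObject:
    name: str
    x: int          # horizontal position in the scene
    y: int          # vertical position in the scene
    texture: list   # rows of pixel values
    mask: list      # rows of 0/1 values describing the object's shape


def compose(width, height, background, objects):
    """Build a frame by drawing each object's masked texture onto a background."""
    frame = [[background] * width for _ in range(height)]
    for obj in objects:
        for r, row in enumerate(obj.texture):
            for c, pixel in enumerate(row):
                if obj.mask[r][c]:
                    frame[obj.y + r][obj.x + c] = pixel
    return frame


ball = VideoObject(
    name="ball", x=1, y=0,
    texture=[[9, 9, 9], [9, 9, 9], [9, 9, 9]],
    mask=[[0, 1, 0], [1, 1, 1], [0, 1, 0]],
)

frame = compose(6, 4, 0, [ball])

# Moving the object only changes its position; texture and shape are reused.
ball.x = 3
moved = compose(6, 4, 0, [ball])

for row in frame:
    print(row)
```

Because the object is stored separately from the background, repositioning it requires no change to its texture or shape data, which is what makes object-level manipulation and interaction possible.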

4. Audio Compression in MPEG-4

Audio compression reduces the amount of data while preserving audio quality. MPEG-4 provides several audio compression methods:

- Advanced Audio Coding (AAC)

- Low Complexity AAC (AAC-LC)

- High Efficiency AAC (HE-AAC)

- Scalable AAC (S-AAC)

- Bit-Sliced Arithmetic Coding (BSAC)

The most prominent one is Advanced Audio Coding (AAC). It uses a transform, the Modified Discrete Cosine Transform (MDCT), to convert audio samples from the time domain to the frequency domain. This transformation splits the audio signal into frequency components.

4.1. Audio Compression Steps

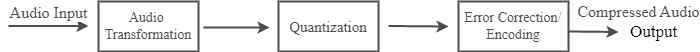

Like many other audio codecs, MPEG-4 audio compression starts by transforming audio samples from the time domain to the frequency domain, typically with the MDCT. The result is a set of coefficients, each describing how strongly a given frequency component is present in the signal.
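The following sketch computes a direct, unoptimized MDCT of a single frame to show how a tonal signal concentrates into few frequency coefficients. It assumes the common textbook MDCT definition and omits the windowing and frame overlap that real codecs apply. The test tone is deliberately chosen to coincide with one MDCT basis function so the result is easy to read; the frame size and the name `mdct` are assumptions of this example.

```python
import math


def mdct(samples):
    """Direct MDCT: 2N time-domain samples in, N frequency coefficients out."""
    n_half = len(samples) // 2
    return [
        sum(x * math.cos(math.pi / n_half * (n + 0.5 + n_half / 2) * (k + 0.5))
            for n, x in enumerate(samples))
        for k in range(n_half)
    ]


N = 8    # number of frequency coefficients per frame
k0 = 3   # frequency bin of the test tone

# A tone that matches MDCT basis function k0 over a frame of 2N samples.
frame = [math.cos(math.pi / N * (n + 0.5 + N / 2) * (k0 + 0.5))
         for n in range(2 * N)]

coeffs = mdct(frame)
peak = max(range(N), key=lambda k: abs(coeffs[k]))
print(peak)                            # 3
print([round(c, 3) for c in coeffs])   # energy sits in a single coefficient
```

Real audio spreads over more than one coefficient, but the same effect holds: most of the energy lands in a small number of frequency bins, which the later stages can exploit.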

Next, the frequency coefficients are quantized, which reduces their precision. Less perceptually significant details are discarded in this phase, leading to a reduction in data volume.

Quantization is guided by psychoacoustic models of human auditory perception, which identify the parts of the audio signal that are less noticeable and can therefore be quantized more aggressively.

The quantized data then undergoes additional compression through entropy coding techniques such as Huffman coding or arithmetic coding. These methods assign shorter codes to common symbols or patterns, which reduces the overall data size.

Finally, error protection and channel coding techniques safeguard the compressed audio data against errors or data loss during storage and transmission.
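The quantization and entropy coding steps can be sketched together. This is a generic illustration, assuming a uniform quantizer and a Huffman code built from the symbol frequencies of the input itself; AAC relies on predefined Huffman codebooks instead, and the coefficient values, step size, and function names here are hypothetical.

```python
import heapq
from collections import Counter


def quantize(coeffs, step):
    """Uniform quantization of frequency coefficients to integers."""
    return [round(c / step) for c in coeffs]


def huffman_code_lengths(symbols):
    """Return {symbol: code length in bits} for a Huffman code over symbols."""
    counts = Counter(symbols)
    if len(counts) == 1:
        return {next(iter(counts)): 1}
    # Heap entries: (weight, tie_breaker, {symbol: depth in the code tree}).
    heap = [(w, i, {s: 0}) for i, (s, w) in enumerate(counts.items())]
    heapq.heapify(heap)
    tie = len(heap)
    while len(heap) > 1:
        w1, _, a = heapq.heappop(heap)
        w2, _, b = heapq.heappop(heap)
        # Merging two subtrees pushes every symbol in them one level deeper.
        merged = {s: d + 1 for s, d in {**a, **b}.items()}
        heapq.heappush(heap, (w1 + w2, tie, merged))
        tie += 1
    return heap[0][2]


# Hypothetical frequency coefficients: a few large values, many near zero.
coeffs = [40.2, -12.7, 3.1, 0.4, -0.3, 0.2, 0.1, -0.1, 0.05, 0.0, 0.3, -0.2]

symbols = quantize(coeffs, step=4)
lengths = huffman_code_lengths(symbols)
total_bits = sum(lengths[s] for s in symbols)

print(symbols)     # [10, -3, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0]
print(lengths[0])  # 1: the most common symbol gets the shortest code
print(total_bits)  # 17, versus 24 bits with a fixed 2-bit code per symbol
```

Quantization turns most of the small coefficients into zeros, and the entropy coder then spends only one bit on each of those zeros.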

5. Conclusion

In this article, we discussed the unique features and core mechanisms of MPEG-4, from spatial compression, motion compensation, and object-based coding to variable bit rate encoding and audio compression.

By identifying redundancies, capturing motion, and dynamically adjusting encoding parameters, MPEG-4 delivers high-quality audiovisual experiences while conserving bandwidth and storage resources.