Last updated: May 27, 2024

Generative artificial intelligence (AI) has emerged as a significant technological advancement, raising questions about its implications and risks.

In this tutorial, we’ll examine the impact and risks of using generative AI and explore how to mitigate them.

Generative AI systems can create new content in the form of text, images, and even voices. These models, including well-known examples like ChatGPT and DALL-E, have gained significant attention for their ability to produce human-like outputs. While the technology holds immense promise, it also raises concerns about misuse and ethical implications.

For instance, generative AI can be used to create fake news articles that appear legitimate, leading to the rapid spread of misinformation, causing public panic, or damaging reputations. Therefore, we need to consider the potential risks alongside the technological advances.

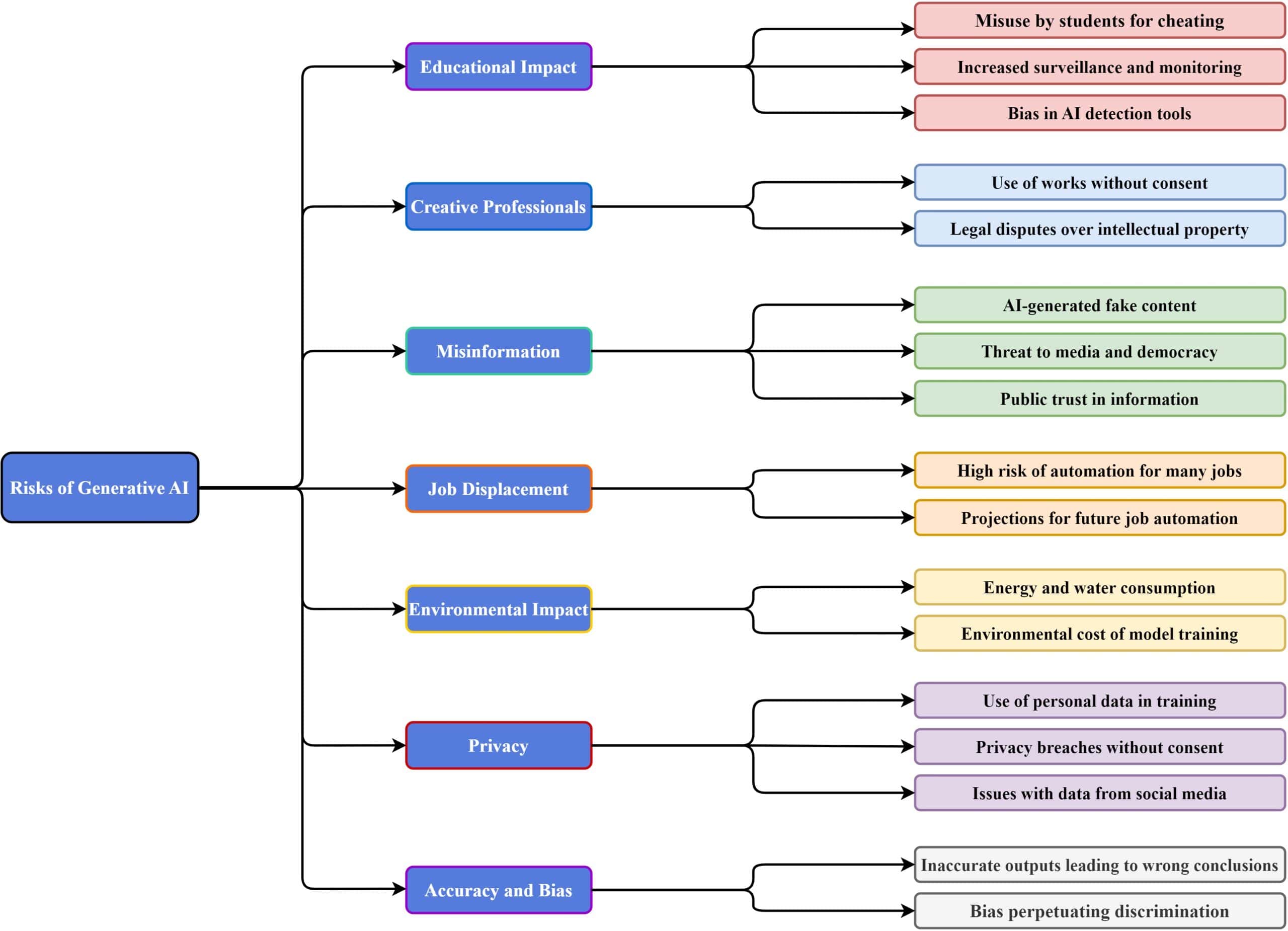

There are various risks associated with generative AI. We group them into distinct areas, including education, job displacement, privacy, and more.

Let’s delve deeper into the details.

The impact of generative AI on education has been profound, particularly with the introduction of tools like ChatGPT. However, there is a growing concern that students might misuse these tools to cheat on assignments, leading to increased surveillance and monitoring of students.

Moreover, AI detection tools are of questionable reliability and can disproportionately affect non-native English speakers. A study at Stanford University found that AI detection tools were significantly more likely to flag work written by non-native English speakers as AI-generated due to differences in language complexity.

The creative industry faces unique challenges, as generative AI models are typically trained on large datasets scraped from the Internet. These datasets often include works by artists and writers without their consent.

Further, DALL-E can generate images in the style of specific artists, leading to legal disputes over intellectual property. Artists have filed lawsuits seeking compensation and recognition for the use of their work in training these models.

The proliferation of AI-generated fake images, videos, and voices poses a significant threat to media and, ultimately, democracy. The ability to create convincing phony content undermines public trust in information, making it challenging to distinguish between real and fake news.

For instance, a widely circulated AI-generated image of the Pope in a Balenciaga jacket created confusion and sparked debates about authenticity.

The adoption of generative AI is projected to significantly enhance productivity and foster economic growth. However, many jobs are highly susceptible to automation through AI. A recent report by the UK House of Commons Library revealed that 7% of jobs are at high risk of automation within the next 5 years, with this figure projected to rise to 30% over the next 20 years.

The environmental impact of generative AI is substantial. Training large models like GPT-3 consumes vast amounts of energy and water. The energy use has been compared to driving a car to the moon and back. Water is primarily used to cool the servers that run these models. Researchers at the University of California, Riverside estimated that a short conversation with ChatGPT, on the order of tens of prompts, consumes around 500 milliliters of water. With millions of daily users, the cumulative environmental impact is immense.

Generative AI systems, such as large language models, are trained on massive datasets that often include personal data. This raises significant privacy concerns, particularly when personal data is processed without individuals’ knowledge or consent. For example, an AI model trained on publicly available social media posts might inadvertently include personal information, leading to privacy breaches.
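One common precaution is to scrub obvious identifiers from text before it enters a training set. The snippet below is a minimal sketch of that idea, not a complete anonymization pipeline: the function name `redact_pii`, the placeholder tokens, and the two regular expressions are our own assumptions for illustration, and they only catch email addresses and `@handle`-style usernames:

```python
import re

# Deliberately simple, illustrative patterns (assumptions, not a standard).
# Real PII detection needs far broader coverage: names, phone numbers, addresses, IDs.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
HANDLE_RE = re.compile(r"(?<!\w)@\w{2,}")


def redact_pii(text: str) -> str:
    """Replace email addresses and @handles with placeholder tokens."""
    # Emails are replaced first so the handle pattern never sees their '@' part.
    text = EMAIL_RE.sub("[EMAIL]", text)
    text = HANDLE_RE.sub("[HANDLE]", text)
    return text


post = "Ping @jane_doe or mail jane.doe@example.com today"
cleaned = redact_pii(post)
print(cleaned)
```

Pattern-based redaction like this reduces accidental exposure, but it doesn’t replace consent mechanisms or proper data governance.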

Generative AI models can produce inaccurate or biased outputs based on the data they are trained on. Inaccurate data can lead to incorrect conclusions or decisions, while biased data can perpetuate stereotypes and discrimination.

For instance, an AI system trained on biased hiring data might favor specific demographics over others, resulting in unfair hiring practices.

Suppose the training data overrepresents certain groups, such as young men of a particular ethnicity. The AI may then learn to associate these characteristics with being more qualified, regardless of the actual qualifications of the candidates. This can cause it to overlook equally or more qualified candidates from other demographics.
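We can make this kind of skew measurable. The following is a minimal sketch, assuming we have a log of screening decisions labeled by demographic group. It computes the selection rate per group and the ratio between the lowest and highest rate, a simple fairness metric often called the disparate impact ratio. The group names, the data, and the function names are hypothetical:

```python
from collections import defaultdict


def selection_rates(records):
    """Return the share of positive decisions per group.

    records: iterable of (group, was_selected) pairs.
    """
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, was_selected in records:
        totals[group] += 1
        selected[group] += int(was_selected)
    return {group: selected[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Lowest selection rate divided by the highest one (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected in any group, so there is no gap to measure
    return min(rates.values()) / highest


# Hypothetical screening decisions: 10 candidates per group
decisions = (
    [("group_a", True)] * 6 + [("group_a", False)] * 4
    + [("group_b", True)] * 3 + [("group_b", False)] * 7
)

rates = selection_rates(decisions)       # group_a: 6 of 10, group_b: 3 of 10
ratio = disparate_impact_ratio(rates)    # 0.3 / 0.6 = 0.5
print(rates, ratio)
```

A ratio well below 1.0 signals that one group is selected far less often than another. A commonly cited rule of thumb treats values under 0.8 as a reason to investigate, although a single number like this can’t prove or rule out discrimination on its own.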

Now that we know the risks, let’s examine how to mitigate them.

First, we can implement robust policies and guidelines to prevent the misuse of generative AI in education. It’s important to promote ethical practices in using AI within educational settings. We should incorporate AI literacy into curricula to help students learn to use these technologies appropriately.

We should increase transparency in AI development and hold companies accountable for their technologies’ impacts. This includes clear labeling of AI-generated content and robust data governance frameworks. For example, AI-generated content should have metadata specifying the source and training data.
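As an illustration of such labeling, here’s a minimal sketch that attaches a small provenance record to a piece of generated text. The `ProvenanceLabel` schema, its field names, and the `label_content` helper are assumptions made up for this example rather than an established standard:

```python
import hashlib
import json
from dataclasses import asdict, dataclass


@dataclass
class ProvenanceLabel:
    """Hypothetical metadata record for a piece of AI-generated content."""
    generator: str            # which system produced the content
    training_data_note: str   # human-readable description of the training data
    content_sha256: str       # fingerprint that ties the label to the exact content
    ai_generated: bool = True


def label_content(content: str, generator: str, training_data_note: str) -> str:
    """Return a JSON provenance label for the given content."""
    digest = hashlib.sha256(content.encode("utf-8")).hexdigest()
    label = ProvenanceLabel(generator, training_data_note, digest)
    return json.dumps(asdict(label), indent=2, sort_keys=True)


label_json = label_content(
    "A short AI-written summary.",
    generator="example-model-v1",  # placeholder name, not a real model
    training_data_note="Publicly available web text (illustrative description)",
)
print(label_json)
```

Storing a hash of the content alongside the label lets a reader check that the label belongs to the text they’re looking at. A plain JSON record like this can be edited or stripped, so a production system would also need signing or another tamper-evident mechanism.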

Companies should invest in energy-efficient technologies to reduce the environmental impact of generative AI. They should optimize algorithms and explore renewable energy sources for data centers to reduce their carbon footprint. Additionally, we should raise awareness about the environmental costs of AI and promote responsible usage.

Further, we need to include diverse voices in discussions about AI governance, especially those from impacted communities. This is vital for creating fair and effective regulations. For instance, including children and educators in discussions about the use of AI in schools can help address concerns and develop better policies.

To soften the impact of job displacement, we should develop retraining and upskilling programs for affected workers. Industries should be encouraged to create new roles focused on managing and working alongside AI technologies.

We also need to implement robust security measures and conduct regular audits of AI systems. We should develop AI systems that can detect and respond to cyber threats. Furthermore, we must educate organizations on AI-related security risks and best practices.

Last but not least, we should establish and enforce ethical guidelines for AI use. Promoting transparency in AI development and deployment is a key aspect of these guidelines.

Let’s check what we can do for each risk:

| Risk | Mitigation Strategy |
|---|---|
| Educational Impact | Implement robust policies and guidelines. Promote AI literacy. |
| Creative Professionals | Establish clear guidelines and implement copyright laws that recognize the contributions of artists and writers. |
| Misinformation | Develop advanced detection systems for AI-generated fake content. Collaborate with governments and tech companies to establish regulations. Educate the public on identifying AI-generated content. |
| Environmental Impact | Invest in energy-efficient technologies and sustainable practices. Optimize algorithms to require less computational power. Promote responsible AI usage. |
| Privacy | Enhance data governance and ethical training practices. Anonymize personal data and use it responsibly. Ensure clear consent mechanisms and transparency about data usage. |
| Accuracy and Bias | Use diverse and representative datasets for training. Implement fairness metrics and bias detection tools. Monitor and evaluate AI systems regularly. |
| Job Displacement | Develop retraining and upskilling programs for affected workers. Promote AI-human collaboration rather than replacement. Encourage industries to create new roles focused on managing and working alongside AI technologies. |
| Security Risks | Implement robust security measures and regular audits for AI systems. Develop AI systems that can detect and respond to cyber threats. Educate organizations on AI-related security risks and best practices. |
| Ethical Use | Establish and enforce ethical guidelines for AI use. Engage with diverse stakeholders to ensure AI technologies align with societal values and ethical standards. |

Each AI-related challenge can be addressed with strategies that include policy enhancements, technological improvements, and educational initiatives to ensure the responsible use of generative AI systems.

In this article, we looked into the impact and risks of using generative AI and different ways of avoiding those risks. Generative AI has many applications in various fields but also poses substantial risks that must be addressed through collaborative efforts.

We can harness the benefits of generative AI if we address the main risks: misinformation, bias, and environmental impact. Mitigation strategies include increasing transparency, holding companies accountable, and promoting inclusive governance.

We need to adopt a balanced approach that considers technological advancements and ethical implications to ensure that generative AI benefits society while minimizing potential harm.