Learn through the super-clean Baeldung Pro experience:

>> Membership and Baeldung Pro.

No ads, dark-mode and 6 months free of IntelliJ Idea Ultimate to start with.

Last updated: February 2, 2024

In this tutorial, we’ll talk about garbage collection along with its working, and will also discuss automatic reference counting.

Memory management is an essential element of computer systems. It’s the art of effectively allocating, managing, and reclaiming memory space. It’s vital to ensure that programs and processes can efficiently access the necessary memory space, contributing to optimal system performance.

Understanding and practicing good memory management practices is essential for writing efficient, stable, and reliable programs.

When a program needs space for a new object, it requests memory from the system. It tracks which programs use parts of the whiteboard and which are free. When objects are no longer needed, they release their memory space back to the system for other programs.

Different programming languages handle memory management differently; proper memory management is crucial for several reasons:

Memory leaks arise when programs allocate resources but fail to reclaim them, like accumulating unused apartments in a city. This gradual encroachment consumes available memory, leading to program crashes. However, efficient memory allocation and reuse practices are resourceful builders, minimizing requests for new apartments and optimizing utilization.

Through effective memory management strategies, programs become responsible tenants, preventing leaks and contributing to a stable, well-managed computing environment. This proactive approach ensures smooth program execution, maximizes their lifespan, and mitigates performance bottlenecks associated with inefficient memory handling.

Uncontrolled memory usage can lead to memory corruption, where programs access and modify data in the wrong areas, causing unpredictable behavior and crashes.

Modern systems manage processes concurrently, meaning multiple programs run simultaneously. Proper memory management ensures each program gets its fair share of the available memory and doesn’t interfere with others.

The purpose of garbage collection (GC) in memory management is to automatically reclaim memory that is no longer used by the program. Reclaiming memory occupied by unreachable objects, Garbage Collection helps prevent memory fragmentation, where the available memory becomes fragmented into small, unusable chunks over time.

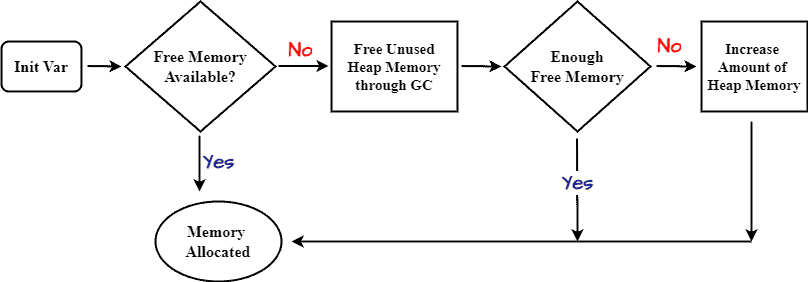

The image below illustrates how variables are allocated memory:

The Garbage Collector locates in-use objects in memory, known as reachable objects. It traces object connections from roots, marking each visited object as reachable until it identifies all of them.

Once all reachable objects are marked, the garbage collector identifies any objects in memory that were not marked during the traversal. We consider these unmarked objects as unreachable and candidates for garbage collection.

The GC proceeds to reclaim memory occupied by unreachable objects. This involves releasing the memory allocated to these objects, making it available for future allocations.

Garbage collection automates memory management, helping developers avoid manual memory allocation and deallocation and reducing the risk of memory leaks and dangling pointers.

It introduces performance overhead in terms of CPU usage and memory consumption, which can impact application performance and responsiveness.

Choosing the right GC algorithm and tuning parameters requires understanding the characteristics of the application, the runtime environment, and the trade-offs between performance and memory usage.

The timing of garbage collection is non-deterministic, meaning developers have less control over when memory is reclaimed, which can lead to unpredictable performance behavior, particularly in applications with strict latency requirements.

GC algorithms detect unreferenced objects in memory by tracing the program’s data structures to identify those still in use. Algorithm selection hinges on factors like programming language, runtime environment, application traits, and memory limits.

It’s a classic garbage collection algorithm used in many programming languages. It consists of two main phases, $marking$ and $sweeping$.

The marking process ensures it identifies all objects reachable from the roots, effectively distinguishing them from unreachable objects.

Once the Mark Phase identifies and marks all reachable objects, the garbage collector traverses the entire memory space or heap. During this traversal, the garbage collector identifies objects not marked as reachable.

We consider these unmarked objects as dead or unreachable because they are not reachable from any root objects. The garbage collector then deallocates the memory occupied by these unmarked objects, making it available for future allocations.

It reclaims memory by copying live objects from one memory space to another, effectively compacting memory and reclaiming unused space. The memory space is divided into two equal-sized regions: the $from-space$ and the $to-space$.

During garbage collection, the algorithm traces live objects starting from the root set and recursively follows references to all reachable objects. As the system encounters live objects, it copies them from the $from-space$ to the $to-space$.

Once the system copies all live objects to the $to-space$, it considers the entire $from-space$ as garbage, as no live objects remain in this region. The garbage collector can then reclaim the entire $from-space$, making it available for future allocations.

This algorithm divides the heap into two or more generations, typically young and old. Typically, the system allocates newly created objects to the young generation. The young generation further divided into two spaces: the $Eden space and the $Survivor space.

The system first assigns objects to the $Eden space$. Once the $Eden space$ reaches its capacity, the system initiates a minor garbage collection. This procedure involves relocating live objects from the $Eden space$ and those that have survived previous minor collections to one of the $Survivor spaces$.

Objects that survive multiple minor collections in the Survivor spaces eventually get promoted to the old generation. When the old generation becomes full, the system triggers major garbage collection (full heap collection). This usually occurs less frequently than minor garbage collection.

The algorithm initiates with an initial state where it considers the entire memory heap live, and it hasn’t performed any garbage collection yet. Additionally, during program execution, the garbage collector performs a small, incremental step of the garbage collection process at certain predefined points or intervals.

Designers have ensured that each incremental garbage collection step is short enough to minimize its impact on program responsiveness. In each incremental step, the garbage collector traverses through the live objects in the memory heap, marking them as reachable. During this marking phase, the system identifies which objects the program is still using and, therefore, should not reclaim.

After marking the live objects, the garbage collector proceeds to a sweep phase, identifying and reclaiming memory occupied by objects that it has not marked as reachable. We safely deallocate these unreferenced objects.

Automatic Reference Counting (ARC) serves as a memory management method implemented in certain programming languages for the automatic tracking and supervision of object lifetimes. ARC’s primary objective is to autonomously ascertain when an object becomes unnecessary and initiate the release of the corresponding memory.

When ARC creates an object, it assigns a reference count of 1. The count increases by 1 each time a new reference is created to the object. When a reference is removed or goes out of scope, the count decreases by 1.

ARC meticulously monitors these reference count changes throughout the program’s execution. It uses compiler-generated code to insert appropriate increment and decrement instructions at the right moments.

When an object’s reference count reaches zero (no more borrowers), ARC considers it unreachable and eligible for deallocation. It automatically reclaims the memory occupied by the object, making it available for future use.

ARC performs memory management deterministically. The system deallocates memory as soon as an object’s reference count drops to zero, ensuring predictable memory behavior and avoiding unpredictable pauses.

Its deterministic memory management and low overhead result in predictable performance characteristics, making it suitable for real-time and low-latency applications where consistent performance is critical.

ARC prevents memory leaks by automatically deallocating objects when they are no longer referenced, thereby reducing the risk of memory leaks compared to manual memory management.

While ARC automatically manages strong references, developers must manually manage weak and unowned references to break retain cycles and prevent memory leaks. This adds complexity and potential for errors in code.

In large-scale systems with complex object graphs and frequent object creation and destruction, ARC’s fine-grained reference counting can lead to performance overhead and increased memory fragmentation.

Different programming languages offer various GC algorithms, each with its trade-offs in terms of throughput, latency, and memory overhead. It is important to understand the characteristics of available GC algorithms and select the one that best suits our application’s requirements.

Minimize unnecessary object creation by reusing objects or employing object pooling techniques, especially for frequently allocated objects or those with a short lifespan. This will reduce the frequency of GC cycles and improve overall performance.

Concurrent GC algorithms perform garbage collection concurrently with application execution, thereby reducing pause times and improving application responsiveness. Considering the criticality of minimizing pause times for applications, we recommend exploring the use of concurrent GC algorithms.

Employ profiling tools and monitoring mechanisms to scrutinize GC behavior, encompassing heap utilization, GC cycle durations, and occurrence frequency. Analyzing real-time GC metrics can detect performance bottlenecks and optimization prospects.

In this article, we discussed Garbage Collection and Automatic Reference Counting and their uses during memory management. Garbage Collection is a powerful memory management technique used in many programming languages.

The purpose of garbage collection is to free up memory resources. Automatic Reference Counting streamlines memory management by automatically monitoring the number of references linked to a specific object.