
Last updated: March 18, 2024

In this tutorial, we’ll show how to plot the decision boundary of a logistic regression classifier. We’ll focus on the binary case with two classes we usually call positive and negative. We’ll also assume that all the features are continuous.

Let $\mathcal{X}$ be the space of objects we want to classify using machine learning. The decision boundary of a classifier is the subset of $\mathcal{X}$ containing the objects for which the classifier’s score is equal to its decision threshold.

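In the case of logistic regression (LR), the score of an object $x \in \mathcal{X}$ is the estimate of the probability that $x$ is positive, and the decision threshold is 0.5:

$$\left\{ x \in \mathcal{X} \,:\, \hat{P}(\text{positive} \mid x) = 0.5 \right\}$$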

Visualizing the boundary helps us understand how our classifier works and compare it to other classification models.

To plot the boundary, we first have to find its equation.

We can derive the equation of the decision boundary by plugging the formula of LR into the condition $\hat{P}(\text{positive} \mid x) = 0.5$.

We’ll assume that $x$ is an $(n+1) \times 1$ vector with $x_0 = 1$ to make the LR equation more compact. In preprocessing, we can always prepend $x_0 = 1$ to any $n$-dimensional $x$.

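Consequently, we have:

$$\hat{P}(\text{positive} \mid x) = \frac{1}{1 + e^{-\theta^T x}} = 0.5$$

where $\theta$ is the $(n+1) \times 1$ parameter vector of our LR model ($\theta = [\theta_0, \theta_1, \ldots, \theta_n]^T$). From there, we get:

$$1 + e^{-\theta^T x} = 2 \iff e^{-\theta^T x} = 1 \iff \theta^T x = 0 \iff \theta_0 + \theta_1 x_1 + \theta_2 x_2 + \ldots + \theta_n x_n = 0$$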
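To check the derivation numerically, here’s a minimal Python sketch. The parameter values in `theta` are hypothetical, and `lr_score` is our own helper that computes $1 / (1 + e^{-\theta^T x})$ for an $x$ that already has $x_0 = 1$ prepended:

```python
import math


def lr_score(theta, x):
    """Return the LR score 1 / (1 + exp(-theta^T x)); x must start with x_0 = 1."""
    z = sum(t * xi for t, xi in zip(theta, x))
    return 1.0 / (1.0 + math.exp(-z))


# Hypothetical parameters for n = 2: [theta_0, theta_1, theta_2]
theta = [-3.0, 1.0, 2.0]

# theta^T x = -3 + 1*1 + 2*1 = 0, so this point lies on the boundary
print(lr_score(theta, [1.0, 1.0, 1.0]))  # 0.5

# theta^T x = -3 + 3 + 2 = 2 > 0, so the score exceeds 0.5
print(lr_score(theta, [1.0, 3.0, 1.0]) > 0.5)  # True

# theta^T x = -3 < 0, so the score is below 0.5
print(lr_score(theta, [1.0, 0.0, 0.0]) < 0.5)  # True
```

Since the sigmoid is strictly increasing, points with $\theta^T x > 0$ always score above 0.5 and points with $\theta^T x < 0$ always score below it, so $\theta^T x = 0$ separates the two predicted classes.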

If , the boundary equation becomes:

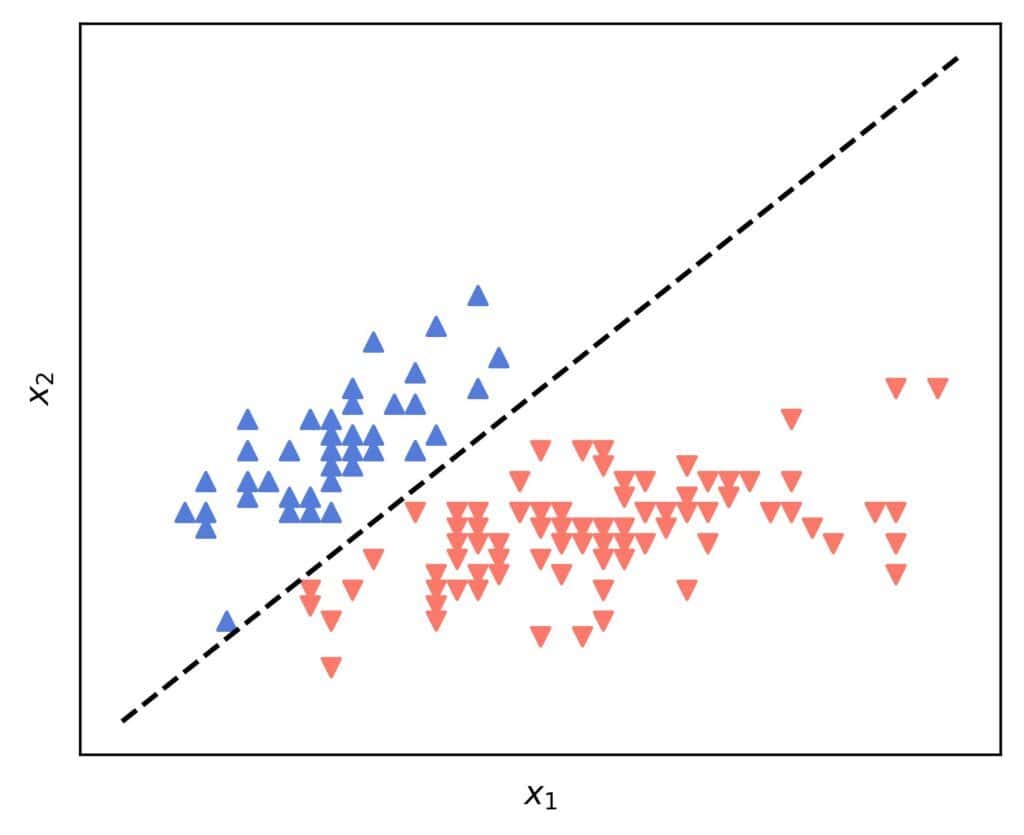

That’s a line in the $(x_1, x_2)$ plane. For example, if we use the iris dataset with $x_1$ and $x_2$ being the sepal length and width, and with the versicolor and virginica classes blended into one, we’ll get a straight line.

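We don’t have to consider the degenerate case where $\theta_1 = \theta_2 = 0$. That would mean that no features of $x$ are used, so we wouldn’t use such a model anyway. Let’s say $\theta_2 \neq 0$. The explicit boundary equation is then:

$$x_2 = -\frac{\theta_0 + \theta_1 x_1}{\theta_2}$$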
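The explicit form translates directly into code. Here’s a minimal Python sketch, where the function name `boundary_x2` and the parameter values are hypothetical. If $\theta_2 = 0$ but $\theta_1 \neq 0$, the boundary is the vertical line $x_1 = -\theta_0 / \theta_1$, which isn’t a function of $x_1$, so the sketch raises an error in that case:

```python
def boundary_x2(theta, x1):
    """Return x2 on the LR boundary theta_0 + theta_1*x1 + theta_2*x2 = 0."""
    theta0, theta1, theta2 = theta
    if theta2 == 0:
        # The boundary is vertical (x1 = -theta0/theta1) or degenerate,
        # so x2 can't be expressed as a function of x1
        raise ValueError("theta_2 must be non-zero to solve for x2")
    return -(theta0 + theta1 * x1) / theta2


theta = [-3.0, 1.0, 2.0]  # hypothetical [theta_0, theta_1, theta_2]
print(boundary_x2(theta, 1.0))  # 1.0
print(boundary_x2(theta, 5.0))  # -1.0
```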

If $n = 3$, we have a plane given by:

$$\theta_0 + \theta_1 x_1 + \theta_2 x_2 + \theta_3 x_3 = 0$$

Let $\theta_3 \neq 0$. Then, we can write the equation in the explicit form:

$$x_3 = -\frac{\theta_0 + \theta_1 x_1 + \theta_2 x_2}{\theta_3}$$
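The same idea works for the plane. In this minimal Python sketch, `boundary_x3` and the parameter values are hypothetical:

```python
def boundary_x3(theta, x1, x2):
    """Return x3 on the plane theta_0 + theta_1*x1 + theta_2*x2 + theta_3*x3 = 0."""
    theta0, theta1, theta2, theta3 = theta
    if theta3 == 0:
        # Without theta_3, x3 doesn't appear in the equation and can't be solved for
        raise ValueError("theta_3 must be non-zero to solve for x3")
    return -(theta0 + theta1 * x1 + theta2 * x2) / theta3


theta = [1.0, 2.0, -1.0, 0.5]  # hypothetical [theta_0, theta_1, theta_2, theta_3]
print(boundary_x3(theta, 0.0, 0.0))  # -2.0
print(boundary_x3(theta, 1.0, 1.0))  # -4.0
```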

We can use any plotting tool to visualize the lines and planes corresponding to these equations. If we have to do it from scratch, we can iterate over the independent features in small increments and calculate the dependent feature using the explicit forms. The lower and upper limits of the iteration over each independent feature determine the part of the boundary we want to focus on.
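Here’s a minimal from-scratch sketch of that iteration in Python for $n = 2$ and $n = 3$. The function names, the limits, and the step size are hypothetical. We compute each value as `lo + i * step` instead of repeatedly adding `step`, so floating-point error doesn’t accumulate over many increments. The resulting points can be passed to any plotting tool:

```python
def line_points(theta, lo, hi, step):
    """Sample the line x2 = -(theta_0 + theta_1*x1) / theta_2 for x1 in [lo, hi]."""
    theta0, theta1, theta2 = theta
    n_steps = int(round((hi - lo) / step))
    points = []
    for i in range(n_steps + 1):
        x1 = lo + i * step
        points.append((x1, -(theta0 + theta1 * x1) / theta2))
    return points


def plane_points(theta, lo1, hi1, lo2, hi2, step):
    """Sample x3 = -(theta_0 + theta_1*x1 + theta_2*x2) / theta_3 on a grid."""
    theta0, theta1, theta2, theta3 = theta
    n1 = int(round((hi1 - lo1) / step))
    n2 = int(round((hi2 - lo2) / step))
    points = []
    for i in range(n1 + 1):
        x1 = lo1 + i * step
        for j in range(n2 + 1):
            x2 = lo2 + j * step
            x3 = -(theta0 + theta1 * x1 + theta2 * x2) / theta3
            points.append((x1, x2, x3))
    return points


# Hypothetical parameters and limits
line = line_points([-3.0, 1.0, 2.0], lo=0.0, hi=4.0, step=0.5)
print(len(line))  # 9
print(line[0])  # (0.0, 1.5)

plane = plane_points([1.0, 2.0, -1.0, 0.5], 0.0, 1.0, 0.0, 1.0, step=0.25)
print(len(plane))  # 25
```

A smaller step gives a smoother-looking boundary at the cost of more points.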

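We have two questions at this point:

1. How do we visualize the boundary if there are more than three features?
2. Can the boundary be curved instead of being a line or a plane?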

Let’s find out.

If our objects have more than three features, we can visualize only the boundary’s projections onto the planes and spaces defined by pairs and triplets of features.
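The number of such projections grows quickly with the number of features. This small Python sketch uses only `itertools.combinations` and `math.comb` (Python 3.8+) from the standard library to list the pairs and triplets of feature indices for a hypothetical model with 10 features:

```python
import itertools
import math

n_features = 10  # hypothetical number of features x_1, ..., x_10
indices = range(1, n_features + 1)

# Every 2-D and 3-D projection we could plot
pairs = list(itertools.combinations(indices, 2))
triplets = list(itertools.combinations(indices, 3))

print(len(pairs), math.comb(n_features, 2))  # 45 45
print(len(triplets), math.comb(n_features, 3))  # 120 120
print(pairs[:3])  # [(1, 2), (1, 3), (1, 4)]
```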

One way to deal with this is to choose the features for visualization and keep the others at constant values, such as their means or constants of interest we know from theory. Values that mean “the feature is absent or neutral” can also be helpful. In most, but not all, cases, that means setting those other features to zero.

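With 10 features $x_1, x_2, \ldots, x_{10}$, we have $\binom{10}{2} = 45$ feature pairs. Let’s say we choose $x_1$ and $x_2$ for visualizing the boundary. In that case, we set $x_3, x_4, \ldots, x_{10}$ to some constant values. Let them be $c_3, c_4, \ldots, c_{10}$.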

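Then, $\sum_{j=3}^{10} \theta_j c_j$ is another constant. We add it to $\theta_0$ and proceed as if $x_1$ and $x_2$ are the only two features of interest:

$$\left(\theta_0 + \sum_{j=3}^{10} \theta_j c_j\right) + \theta_1 x_1 + \theta_2 x_2 = 0$$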

This works for any pair or triplet of $x_1, x_2, \ldots, x_{10}$.
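Folding the fixed features into the intercept takes only a few lines. In this minimal Python sketch, `project_to_pair` is a hypothetical helper, the model has only four features for brevity, and we fix the remaining features at their means over a small made-up dataset:

```python
from statistics import mean


def project_to_pair(theta, i, j, constants):
    """Reduce an LR boundary to the features x_i and x_j.

    theta: [theta_0, theta_1, ..., theta_n]
    constants: dict mapping every other feature index k to its fixed value c_k
    Returns (theta_0 + sum_k theta_k * c_k, theta_i, theta_j).
    """
    intercept = theta[0]
    for k in range(1, len(theta)):
        if k not in (i, j):
            intercept += theta[k] * constants[k]
    return intercept, theta[i], theta[j]


theta = [1.0, 2.0, -1.0, 0.5, 3.0]  # hypothetical [theta_0, ..., theta_4]
rows = [  # made-up objects; column k - 1 holds feature x_k
    [0.0, 1.0, 2.0, 1.0],
    [1.0, 0.0, 4.0, 0.0],
    [2.0, 2.0, 0.0, 2.0],
]
constants = {k: mean(row[k - 1] for row in rows) for k in (3, 4)}

print(project_to_pair(theta, 1, 2, constants))  # (5.0, 2.0, -1.0)
print(project_to_pair(theta, 1, 2, {3: 0.0, 4: 0.0}))  # (1.0, 2.0, -1.0)
```

The two calls give parallel lines with different intercepts, which shows how the plotted boundary shifts with the chosen constants.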

However, a disadvantage of this approach is that the boundary depends on the chosen constants $c_3, c_4, \ldots, c_{10}$.

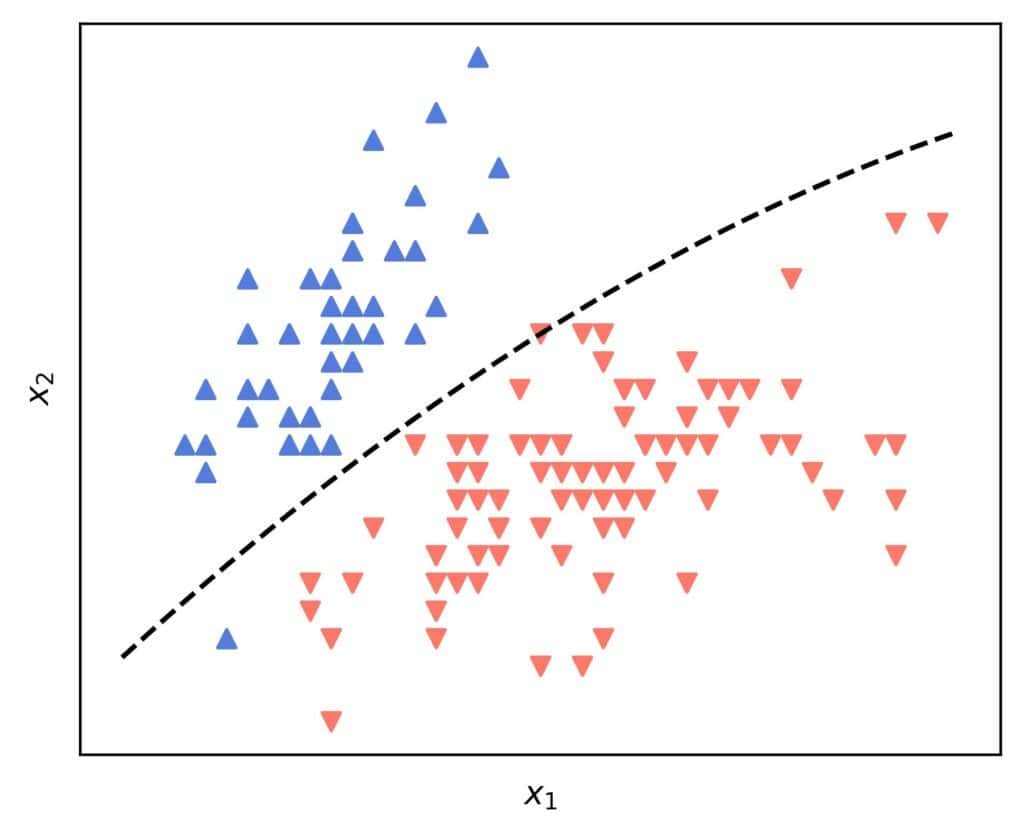

We can introduce curvature into the boundary with feature engineering.

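Let’s say that our original features are $x_1, x_2, \ldots, x_n$. Before prepending $x_0 = 1$, we can add new features $x_{n+1}, \ldots, x_{n+m}$ computed as nonlinear functions of the original ones, such as squares ($x_1^2$) or products ($x_1 x_2$). Then, the decision boundary becomes:

$$\theta_0 + \sum_{j=1}^{n} \theta_j x_j + \sum_{k=1}^{m} \theta_{n+k} x_{n+k} = 0$$

which, in general, is no longer linear in the original features: for $n = 2$, it’s a curve in the original space. For instance, with $n = 2$ and the added features $x_3 = x_1^2$ and $x_4 = x_2^2$, the boundary $\theta_0 + \theta_1 x_1 + \theta_2 x_2 + \theta_3 x_1^2 + \theta_4 x_2^2 = 0$ is a conic section in the $(x_1, x_2)$ plane, such as a circle, an ellipse, or a hyperbola.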

However, the same boundary is a hyperplane in the augmented space, since its equation is linear in the augmented features.
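To see both views at once, here’s a minimal Python sketch for $n = 2$ with the hypothetical added features $x_3 = x_1^2$ and $x_4 = x_2^2$. The helpers `augment` and `boundary_x2_values` and the parameter values are our own illustrations. For a fixed $x_1$, the boundary equation is quadratic in $x_2$, so we solve it with the quadratic formula to trace the curve in the original plane. In the augmented space, the same points satisfy the linear equation $\theta^T x = 0$:

```python
import math


def augment(x1, x2):
    """Hypothetical feature map: prepend x_0 = 1 and add x_3 = x1^2, x_4 = x2^2."""
    return [1.0, x1, x2, x1 * x1, x2 * x2]


def boundary_x2_values(theta, x1):
    """Return the x2 values where the boundary meets the vertical line at x1.

    theta_0 + theta_1*x1 + theta_2*x2 + theta_3*x1^2 + theta_4*x2^2 = 0
    is quadratic in x2: a*x2^2 + b*x2 + c = 0.
    """
    theta0, theta1, theta2, theta3, theta4 = theta
    a = theta4
    b = theta2
    c = theta0 + theta1 * x1 + theta3 * x1 * x1
    if a == 0:
        # Linear in x2; if b == 0 too, there's no single x2 to report
        return [-c / b] if b != 0 else []
    disc = b * b - 4 * a * c
    if disc < 0:
        return []  # the boundary doesn't reach this x1
    root = math.sqrt(disc)
    # A set removes the duplicate root when disc == 0
    return sorted({(-b - root) / (2 * a), (-b + root) / (2 * a)})


# Hypothetical parameters: the boundary is the unit circle x1^2 + x2^2 = 1
theta = [-1.0, 0.0, 0.0, 1.0, 1.0]

print(boundary_x2_values(theta, 0.0))  # [-1.0, 1.0]
print(boundary_x2_values(theta, 2.0))  # []

# In the augmented space, a boundary point satisfies theta^T x = 0
print(sum(t * f for t, f in zip(theta, augment(0.0, 1.0))))  # 0.0
```

Iterating over $x_1$ in small increments and plotting both roots traces the closed curve in the original plane.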

In this article, we showed how to visualize the logistic regression’s decision boundary. Plotting it helps us understand how our logistic model works.