
What Is a Direct Mapped Cache?

Last updated: March 18, 2024

1. Introduction

In this tutorial, we’ll discuss a direct mapped cache, its benefits, and various use cases.

We’ll provide helpful tips and share best practices.

2. Overview of Cache Mapping

Cache mapping refers to the technique used to determine how data is stored and retrieved in cache memory. It establishes the mapping between memory addresses and cache locations.

3. Understanding Direct-Mapped Cache

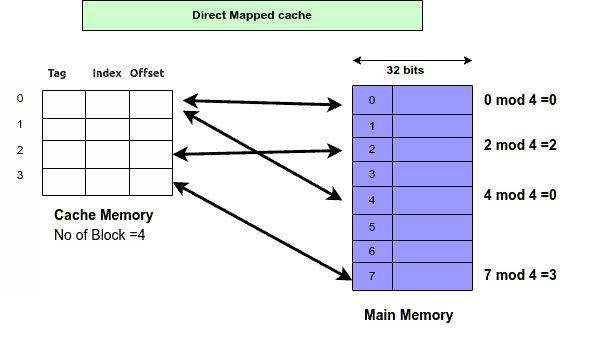

In the direct mapping scheme, we map each block of main memory to only one specific cache location.

In this scheme, memory blocks are mapped to cache lines using a simple indexing mechanism. When accessing a main memory address, we split it into three components: the tag, the index, and the offset.

The number of bits allocated to each component depends on the size of the cache, main memory, and blocks.
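As a rough illustration of how these sizes determine the field widths, here is a minimal sketch. The function name `field_widths` and its parameters are hypothetical, and it assumes that the number of cache lines and the block size are both powers of two:

```python
def field_widths(address_bits, num_lines, block_size):
    """Return (tag_bits, index_bits, offset_bits) for a direct-mapped cache.

    Hypothetical helper: assumes num_lines and block_size are powers of two.
    """
    for value in (num_lines, block_size):
        if value <= 0 or value & (value - 1):
            raise ValueError("sizes must be powers of two")
    # For a power of two 2**k, (2**k - 1).bit_length() equals k.
    offset_bits = (block_size - 1).bit_length()
    index_bits = (num_lines - 1).bit_length()
    tag_bits = address_bits - index_bits - offset_bits
    return tag_bits, index_bits, offset_bits


# 8-bit addresses, 8 lines, 8 words per line
print(field_widths(8, 8, 8))
# 32-bit addresses, 1024 lines, 64 bytes per line
print(field_widths(32, 1024, 64))
```

Whatever bits the index and offset don’t consume are left for the tag, which is why a larger cache (more index bits) stores shorter tags.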

We use the tag to represent the high-order bits of the memory address and uniquely identify the memory block. We determine the cache line to which the memory block is mapped based on the index bits, while the offset bits specify the position of the data within the line:

In the above example, each address in the main memory is mapped to one of the four cache lines. To achieve this, we use the modulus operation.

So, if there are $n$ lines in the cache, direct mapping computes the index of memory block $k$ using $k \bmod n$, and the index takes $\log_2 n$ bits.
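The modulus mapping can be sketched in a few lines. The helper name `line_index` is an assumption made for illustration, and the four-line cache mirrors the example above:

```python
def line_index(block_number, num_lines):
    """Map a memory block number to its only possible cache line."""
    return block_number % num_lines


NUM_LINES = 4  # four cache lines, as in the example above

for block in range(8):
    print(f"block {block} -> line {line_index(block, NUM_LINES)}")

# When num_lines is a power of two, the modulus is just the low-order bits
# of the block number, so hardware can select them directly.
assert all(line_index(b, NUM_LINES) == b & (NUM_LINES - 1) for b in range(64))
```

Blocks 0 and 4, 1 and 5, and so on share a line, so only one block of each pair can be cached at a time.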

3.1. Search

To search for a block, we use the index bits to select a cache line and compare the tag stored in that line with the tag derived from the address. If the tags match, a cache hit occurs, and the requested data is returned from the cache.

If the tags don’t match, it’s a cache miss. In this case, the requested memory block isn’t present in the cache. The cache controller fetches the data from the main memory and stores it in the cache line of the selected set. It evicts the existing block from that cache line and replaces it with the new block.

Once the data is fetched from the cache (cache hit) or main memory (cache miss), it’s returned to the requesting processor or core.
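The search procedure can be modeled with a small simulator. This is only a sketch under simplifying assumptions: the class name `DirectMappedCache` is hypothetical, it tracks tags rather than actual data, and an empty line is represented by `None`:

```python
class DirectMappedCache:
    """Toy model of a direct-mapped cache that tracks tags only."""

    def __init__(self, num_lines, block_size):
        self.num_lines = num_lines
        self.block_size = block_size
        # One stored tag per cache line; None marks an empty line.
        self.tags = [None] * num_lines

    def access(self, address):
        """Return True on a hit; on a miss, install the block and return False."""
        block_number = address // self.block_size
        index = block_number % self.num_lines   # which line to check
        tag = block_number // self.num_lines    # which block should be there
        if self.tags[index] == tag:
            return True
        # Miss: the new block replaces whatever the line held before.
        self.tags[index] = tag
        return False


cache = DirectMappedCache(num_lines=8, block_size=8)
addresses = (0b01000011, 0b01000101, 0b10000011, 0b01000011)
results = [cache.access(a) for a in addresses]
print(results)  # [False, True, False, False]
```

The second access hits because it falls in the same block as the first. The third address maps to the same line with a different tag, so it evicts that block, and the final access misses again.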

3.2. Example

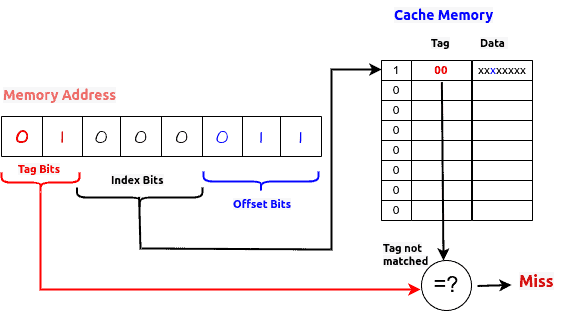

Let’s consider an 8-bit memory address 01000011 and a cache with 8 lines, each holding 8 words. We use three bits for the index (since there are $2^3$ lines) and three for the offset (since each line holds $2^3$ words). The remaining two bits are for the tag:

The cache controller retrieves the tag stored in the first cache line (index 0) because that’s where the index bits 000 point. Then, it compares the stored tag to the tag derived from the memory address, which is 01 in this example. Here, the tags don’t match, which means the requested memory block is not in the cache, so we have a cache miss.

Now, the cache controller fetches the block containing address 01000011 from the main memory and stores it in the first cache line, updating the stored tag to 01. If there is already data present in that cache line, it will be replaced with the new block.

If the tags matched, the controller would retrieve the word at offset 3 (the fourth word, counting from offset 0) of the first cache line because that’s what the offset bits (011) specify.
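The split of this address can be checked with shifts and masks. The constant names below are illustrative assumptions; the field widths are the ones used in the example:

```python
ADDRESS = 0b01000011
OFFSET_BITS = 3  # 8 words per line
INDEX_BITS = 3   # 8 cache lines

# Low-order bits select the word within the line.
offset = ADDRESS & ((1 << OFFSET_BITS) - 1)
# The next bits select the cache line.
index = (ADDRESS >> OFFSET_BITS) & ((1 << INDEX_BITS) - 1)
# Whatever remains on the left is the tag.
tag = ADDRESS >> (OFFSET_BITS + INDEX_BITS)

print(f"tag={tag:02b} index={index:03b} offset={offset:03b}")
# prints: tag=01 index=000 offset=011
```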

4. Advantages of Direct-Mapped Cache

Direct-mapped caches are straightforward to implement compared to other mapping schemes. The mapping logic is simple and requires minimal hardware complexity, which can lead to cost savings and easier design.

Due to their simplicity, direct-mapped caches typically consume less power compared to more complex cache designs. This can be beneficial in power-constrained systems, such as battery-powered devices or mobile devices, where energy efficiency is crucial.

Direct mapping provides a constant and deterministic access time for a given memory block. Because each memory block maps to exactly one cache line, a lookup needs only a single tag comparison, which keeps cache access latency low and predictable.

Direct-mapped caches have a fixed mapping between memory blocks and cache lines. When a cache miss occurs and a cache line needs to be replaced, the procedure is straightforward: the incoming block replaces the existing block in the one cache line it maps to, without the need for a replacement policy or other complex decision-making algorithms.

Direct-mapped caches require fewer resources compared to other cache types. This characteristic enables direct-mapped caches to be cost-effective, particularly in situations where there are limitations on cache size or hardware budget.

5. Limitations of Direct-Mapped Cache

If multiple frequently used memory blocks map to the same cache line, that line will experience frequent evictions and replacements (conflict misses), resulting in a higher cache miss rate. This limitation can have a significant impact on cache performance, particularly in scenarios with high memory access contention.
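To make the effect concrete, the following sketch counts misses for two access patterns. The function `count_misses`, the cache geometry (8 lines of 8 words), and the address sequences are all assumptions chosen for illustration:

```python
def count_misses(addresses, num_lines=8, block_size=8):
    """Count misses in a toy direct-mapped cache that tracks tags only."""
    tags = [None] * num_lines  # None marks an empty line
    misses = 0
    for address in addresses:
        block_number = address // block_size
        index = block_number % num_lines
        tag = block_number // num_lines
        if tags[index] != tag:
            tags[index] = tag  # evict the previous block
            misses += 1
    return misses


# Addresses 0 and 64 both map to line 0, so they keep evicting each other.
conflicting = [0, 64] * 10
# Addresses 0 and 8 map to lines 0 and 1, so both blocks stay cached.
friendly = [0, 8] * 10

print(count_misses(conflicting))  # 20
print(count_misses(friendly))     # 2
```

Both patterns touch only two blocks, yet the conflicting one misses on every access even though six of the eight lines stay empty.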

Direct mapping places each memory block into a specific cache line within a set, allowing no alternative placements. This lack of flexibility can lead to inefficient cache utilization, particularly when memory blocks with high temporal or spatial locality are assigned to the same cache set.

Uneven access distribution in a direct-mapped cache may occur if memory blocks aren’t evenly spread across the address space. This can result in some cache sets being heavily loaded, increasing the likelihood of conflict misses, while other cache sets may remain underutilized. This limitation can result in suboptimal cache performance and inefficiencies.

Compared to more complex cache mapping schemes like set-associative or fully associative caches, direct-mapped caches may provide limited opportunities to improve cache hit rates or reduce cache conflicts. The fixed mapping limits the ability to exploit additional levels of associativity to accommodate more memory blocks.

6. Use Cases and Applications

Processors often use direct-mapped caches as instruction caches. Instructions tend to exhibit good locality of reference, and the deterministic mapping of direct-mapped caches exploits this property effectively, resulting in fast and predictable instruction fetches.

One of the major use cases is in embedded systems where power consumption, simplicity, and determinism are critical considerations. These applications often operate with limited resources and require efficient memory access with predictable timing.

Direct-mapped caches offer constant access times and predictable cache behavior, making them suitable for real-time systems like aerospace, automotive, or industrial control applications.

We also use them when low latency is a critical requirement. Due to their simple and straightforward design, direct-mapped caches can offer low access times for specific memory access patterns.

7. Summary

Here’s a summary of this mapping scheme:

| Advantages | Disadvantages |
|---|---|
| Simple and straightforward implementation. | High conflict misses. |
| Low hardware complexity. | Poor handling of spatial locality. |
| Low access time. | Inefficient use of cache space. |
| Typically held for a longer duration. | Unpredictable cache performance. |

The direct-mapped cache is simple and fast, but it may not be suitable for all memory access patterns and workloads because its lack of associativity leads to more conflict misses.

8. Conclusion

In this article, we discussed direct-mapped cache, where each block of main memory can be mapped to only one specific cache location. It’s commonly used in microcontrollers and simple embedded systems with limited hardware resources.