Learn through the super-clean Baeldung Pro experience:

>> Membership and Baeldung Pro.

No ads, dark-mode and 6 months free of IntelliJ Idea Ultimate to start with.

Last updated: January 11, 2024

Caching is a powerful technique for speeding up applications. We most likely use it in expensive operations like reading data. Storing frequently accessed data in a cache can avoid costly operations like database queries or API calls.

But real life means physical limits, so caches have limited size. Hence, we must manage what stays in and what doesn’t. This removal process is called eviction, for which there are many strategies.

The Least Recently Used (LRU) algorithm is a popular strategy for cache eviction. In that strategy, we remove the least recently accessed item when the cache is full.

In this tutorial, we’ll implement an LRU cache in Scala 3, using Scala’s robust collections library for efficient cache management.

It’s worth noting that the LRU Cache is just one of many strategies for cache eviction. Others include Random Replacement, FIFO, and LFU (Least Frequently Used).

An LRU (Least Recently Used) cache is a fixed-size data structure that prioritizes keeping the most recently accessed items. It’s based on the principle of temporal locality: Items used recently are more likely to be used again soon.

A cache is about managing two core actions: querying and updating. In an LRU cache, when an item is accessed, it’s promoted to the front of the cache, marking it as recently used. Conversely, when the cache is at capacity and a new item is added, the item at the back of the cache, or the one that has gone the longest without access, will be evicted.

When writing a LRU cache, the choice of data structure is paramount. It must allow efficient reading, addition, and removal of elements. It should also maintain an insertion or access order to identify which items to evict when the cache reaches capacity.

For an LRU cache, we need a structure that combines a hash table’s direct access with a list’s ordered characteristics. A LinkedHashMap is well-suited because it maintains insertion order, is a proxy for usage order in our cache, and provides constant-time performance for basic operations.

Our LRU cache will need to support three operations:

We’ll use Scala’s LinkedHashMap to implement our LRU Cache because it maintains the insertion order of elements.

We’ll write our implementation using Test Driven Development, which means we will write in cycles of adding one or more tests, then write an implementation that will pass those tests, rinse, and repeat until we have a complete implementation.

In SaclaTest, we can write tests that are easier to read for non-technical team members. This style is usually referred to as BDD.

Caches only make sense if they have a positive capacity; hence, we should test that we can’t construct instances with zero or negative capacity by checking it throws an IllegalArgumentException if we try to create a cache with a non-positive capacity:

class LRUCacheSpec extends AnyFlatSpec with Matchers:

"An LRU Cache" should "throw IllegalArgumentException if created with zero or negative size" in {

an[IllegalArgumentException] should be thrownBy {

new LRUCache[Int, String](0)

}

an[IllegalArgumentException] should be thrownBy {

new LRUCache[Int, String](-1)

}

}

}Then, we’ll write the LRUCache class, which takes a capacity parameter. To prevent the construction of illegal instances, we’ll add a requirement for the capacity parameter:

class LRUCache[K, V](val capacity: Int):

require(capacity >= 1, "A Cache of negative or 0 capacity makes no sense")

private val cache = mutable.LinkedHashMap.empty[K, V]

In the above code, we used composition, hiding the underlying mutable LinkedHashMap using the private modifier.

Next, let’s write tests for the basic operations of adding and getting elements from the cache. For brevity’s sake, we won’t be repeating the test or class headers, nor will we include any imports.

We’ll write a test expecting an empty Option if we look for a key not in the cache, then for the case when we find the element. We’ll finish with a test defining the expectations when we add an element to a full cache:

it should "return None for missing values" in {

val cache = new LRUCache[String, Int](2)

cache.get("missing") should be(None)

}

it should "return the value for a key if present" in {

val cache = new LRUCache[String, Int](2)

cache.put("key1", 1)

cache.get("key1") should be(Some(1))

}

it should "not grow bigger than its capacity" in {

val cache = new LRUCache[String, Int](2)

cache.put("key1", 1)

cache.put("key2", 2)

cache.put("key3", 3)

cache.size() should be(2)

}

Now, let’s write simple implementations, delegating the put(), get(), and size() methods to the LinkedHashMap variable:

def size(): Int = cache.size

def get(key: K): Option[V] = cache.remove(key)

def put(key: K, value: V): Unit = cache.put(key, value)This simple implementation is enough to pass the first two tests. Still, the third test will fail because the put() method in the LinkedHashMap is not written to maintain the capacity invariant.

We’ll use case analysis to improve this implementation. When adding elements, we should handle the following scenarios:

We can simplify the first two cases using the LinkedHashMap‘s remove() method. Since we don’t need the return value, we can pattern match to the wildcard:

private def evict(): Unit = {

cache.remove(cache.head._1) // Evict the least recently used item

}

def put(key: K, value: V): Unit = synchronized {

cache.remove(key) match // First remove the lement from the cache

case _ if cache.size >= capacity =>

evict() // Evict an element if we are at the capacity

cache.put(key, value) // Add new element at the end

case _ =>

cache.put(key, value)

}Next, we need to ensure our cache maintains the other LRU invariant: When adding an element to a full cache, the entry accessed least recently is removed to prevent the size of the cache from growing beyond its capacity.

Since we would like to maintain the LinkedHashMap encapsulated and opaque to our users, we should write our tests without using any knowledge of the internal implementation, using simply the interface methods get() and put().

We start by verifying that our cache handles the single-element cache case correctly:

it should "handle a cache size of 1 correctly" in {

val cache = new LRUCache[String, Int](1)

cache.put("key1", 1)

cache.get("key1") should be(Some(1))

// Adding another element should evict "key1"

cache.put("key2", 2)

cache.get("key1") should be(None)

cache.get("key2") should be(Some(2))

// Accessing "key2" and then adding a new key should evict "key2"

cache.get("key2")

cache.put("key3", 3)

cache.get("key2") should be(None)

cache.get("key3") should be(Some(3))

}

After running the tests to ensure we correctly handle one-element caches, we’ll check that we keep the LRU characteristic for bigger caches and different usage sequences:

it should "evict the least recently used item when exceeding maxSize" in {

val cache = new LRUCache[String, Int](2)

cache.put("key1", 1)

cache.put("key2", 2)

cache.put("key3", 3) // This should evict "key1"

cache.get("key1") should be(None)

cache.get("key2") should be(Some(2))

cache.get("key3") should be(Some(3))

}

it should "update the position of a key when accessed" in {

val cache = new LRUCache[String, Int](2)

cache.put("key1", 1)

cache.put("key2", 2)

cache.get("key1") // This should move "key1" to the end

cache.put("key3", 3) // This should evict "key2"

cache.get("key1") should be(Some(1))

cache.get("key2") should be(None)

cache.get("key3") should be(Some(3))

}

it should "update the position of a key when updated" in {

val cache = new LRUCache[String, Int](2)

cache.put("key1", 1)

cache.put("key2", 2)

cache.put(

"key1",

10

) // This should update "key1" value and move it to the end

cache.put("key3", 3) // This should evict "key2"

cache.get("key1") should be(Some(10))

cache.get("key2") should be(None)

cache.get("key3") should be(Some(3))

}

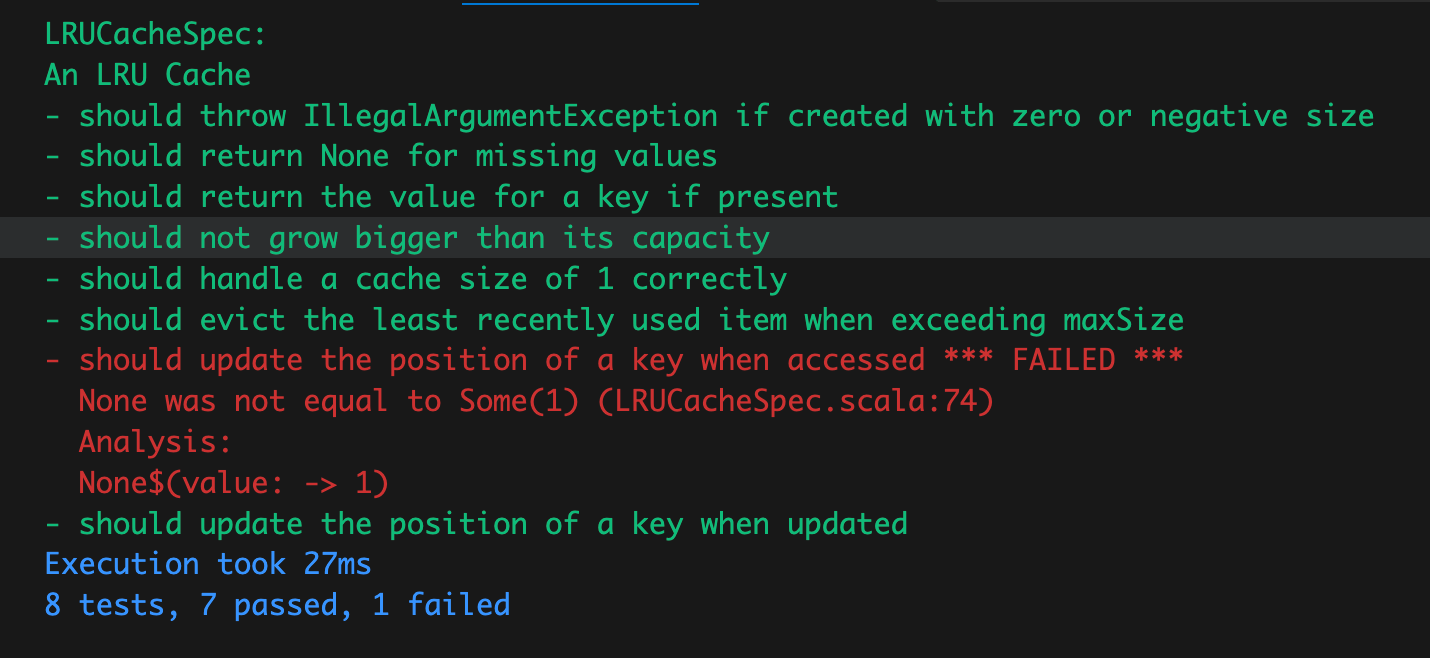

When running the tests, we’ll notice the last one fails:

This failure hints that we need to improve our implementation for the get() method by marking the recently accessed element when reading it:

def get(key: K): Option[V] = synchronized {

// When reading, we attempt to remove the value

cache.remove(key) match

case Some(value) =>

cache.put(

key,

value

) // Put it back at the end to indicate recent access

Some(value)

case None => None

}

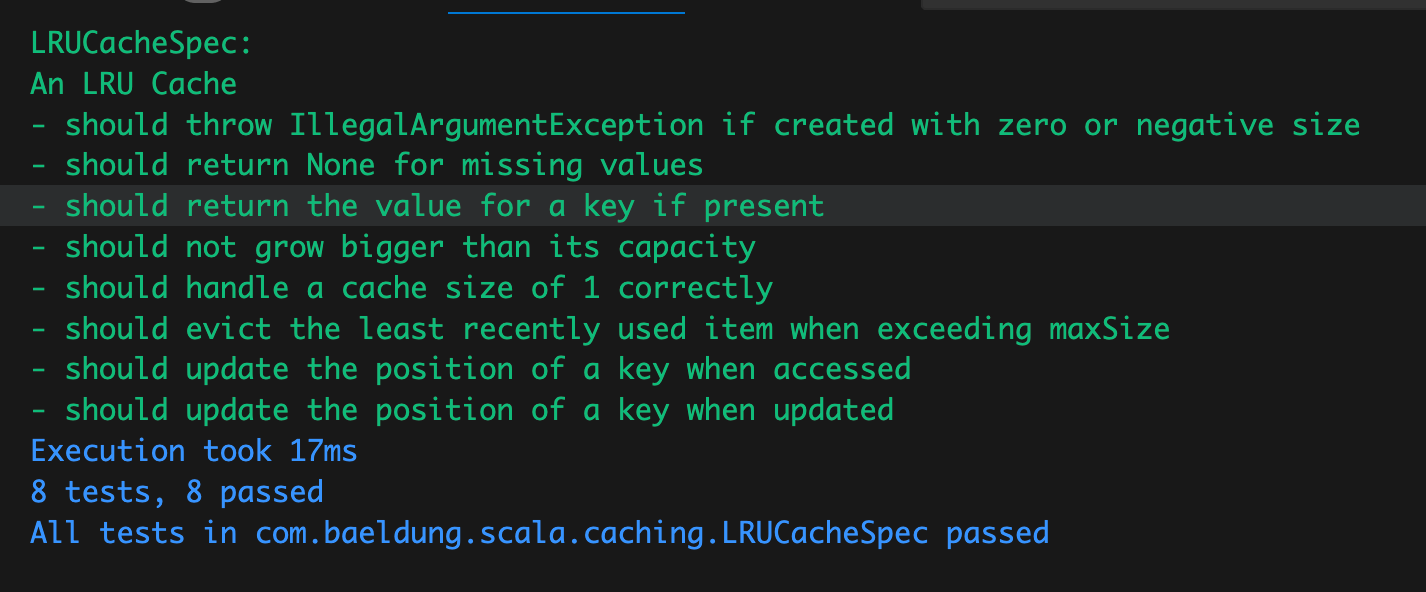

After improving our implementation, we rerun the tests:

In this journey, we’ve explored the intricacies of implementing an LRU Cache in Scala 3. We started by understanding the concept of caching and the eviction strategy called Least Recently Used (LRU).

Next, we implemented it in Scala 3 using a BDD style, leveraging the power of LinkedHashMap to maintain the order of elements and ensure efficient cache operations.

Writing our tests has the benefit that when we complete our implementation, we’ll have a robust suite of tests using ScalaTest, verifying our cache’s behavior across various scenarios. From handling edge cases like a single-element cache to ensuring that our eviction policy holds under different access patterns, our tests have established a safety net alerting us to deviations from the expected behavior.