1. Introduction

In this tutorial, we’ll talk about TinyML. We’ll show how it works and present its advantages, challenges, and applications.

2. Why Do We Need TinyML?

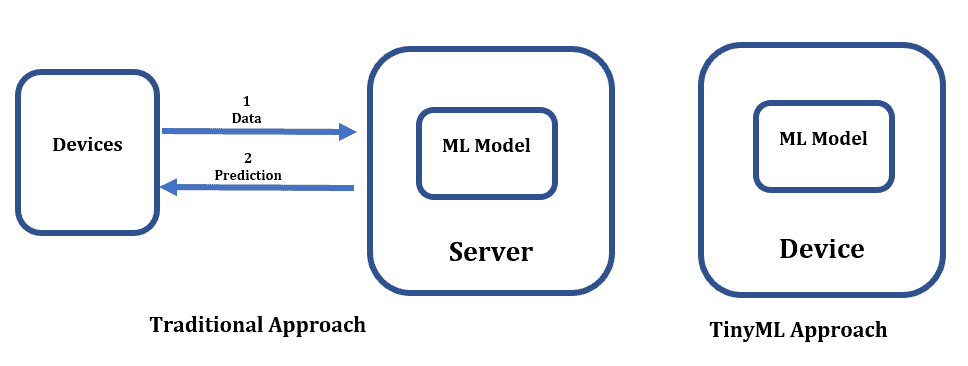

In the TinyML approach, all the steps described in the traditional approach are done on the device. This can result in faster response times, reduced latency, and improved privacy and security:

In addition, TinyML enables the development of new applications and use cases that were previously impossible due to the limitations of traditional ML approaches. For example, TinyML can be used for predictive maintenance in industrial settings, real-time health monitoring, and intelligent agriculture.

3. TinyML: How Does It Work?

TinyML uses specialized algorithms and techniques designed to run efficiently on small, low-power devices. These algorithms are often based on simplified versions of traditional ML models, such as neural networks or decision trees, that can be optimized for the limited processing power and memory of microcontrollers and sensors.

Creating a TinyML model is similar to fitting a traditional ML model. First, we collect data from sensors or other sources and process them using specialized software tools. Then, we use the data to train our model.

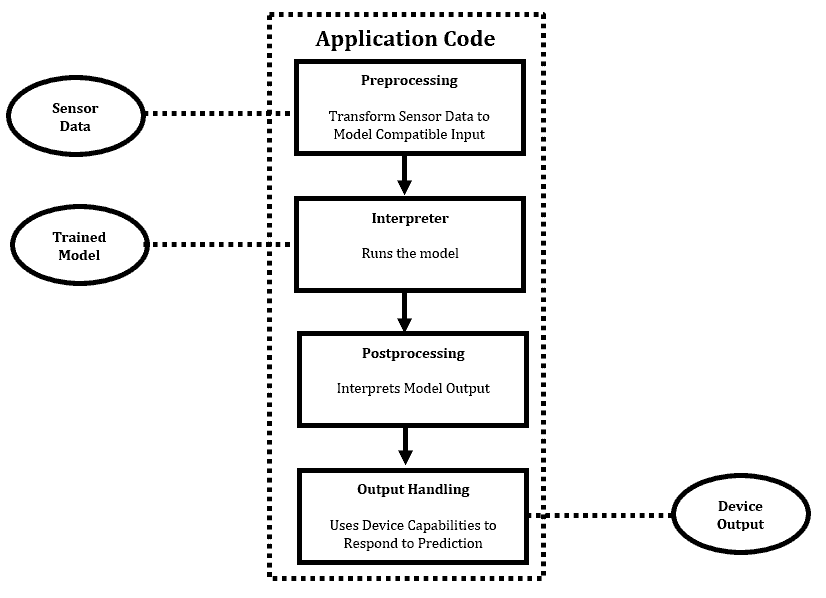

Finally, we deploy our model on the target device, where it can analyze data in real-time and make predictions (decisions) that the device is programmed to react to:

This enables various applications, from detecting manufacturing equipment anomalies to monitoring medical patients’ vital signs.
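The deployment step can be pictured as a loop that reads a sensor, runs the model, and reacts to the prediction. The following is only a minimal sketch: the logistic-regression stand-in, its weights, the readings, and the `"alert"`/`"idle"` actions are all hypothetical and serve only to illustrate the flow:

```python
import math


def tiny_model(features, weights, bias):
    """A logistic-regression stand-in for the deployed model (hypothetical)."""
    z = sum(w * x for w, x in zip(weights, features)) + bias
    return 1.0 / (1.0 + math.exp(-z))


def on_device_loop(sensor_readings, weights, bias, threshold=0.5):
    """Run inference on each reading and pick the action the device takes."""
    actions = []
    for features in sensor_readings:
        score = tiny_model(features, weights, bias)
        # The device is programmed to react once the score crosses the threshold.
        actions.append("alert" if score >= threshold else "idle")
    return actions


# Made-up readings and parameters, for illustration only.
readings = [(0.1, 0.2), (2.5, 3.0)]
actions = on_device_loop(readings, weights=(1.0, 1.0), bias=-3.0)
print(actions)
```

On a real microcontroller, the loop would typically be written in C or C++ and read from actual sensor drivers, but the structure stays the same.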

3.1. Techniques

There are many techniques for preparing a TinyML model. We’ll cover two: quantization and pruning.

To create a TinyML version of a neural network, we can first train it on the available data as in the traditional approach. Then, we can reduce its size by converting its floating-point parameters (typically 32-bit) to 8-bit integers, usually by scaling them and rounding to the nearest integer. This technique is called post-training integer quantization.
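To make the idea concrete, here is a minimal sketch of quantizing a list of weights to 8-bit integers. It assumes a simple symmetric scheme with one scale factor for the whole list, which is only one of several possible schemes; the function names and the sample weights are hypothetical:

```python
def quantize_int8(weights):
    """Map float weights to integers in [-127, 127] using one scale factor."""
    max_abs = max(abs(w) for w in weights)
    # Symmetric scheme (an assumption): the largest magnitude maps to 127.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float weights from their integer representation."""
    return [q * scale for q in quantized]


# Made-up weights, for illustration only.
weights = [0.42, -1.27, 0.003, 0.9, -0.55]
q_weights, scale = quantize_int8(weights)
restored = dequantize(q_weights, scale)

print(q_weights)
print(restored)
```

Each weight now takes one byte instead of four, at the cost of a rounding error of at most half the scale factor per weight.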

The pruning technique identifies and removes the connections and neurons that have little impact on the output. If a particular weight stays close to zero during training, we can remove it from the model without significantly affecting accuracy. Similarly, if a neuron consistently outputs values close to zero, we can remove it as well. As a result, we reduce the size and complexity of the neural network.
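Weight pruning can be sketched in a few lines. The example below assumes a simple magnitude threshold and treats each row of the matrix as the incoming weights of one neuron, ignoring biases. Dropping a row whose weights were all pruned is a simplification of removing a neuron whose output stays near zero. The threshold and the layer values are hypothetical:

```python
def prune_weights(layer, threshold):
    """Zero out every weight whose magnitude is below the threshold."""
    return [[0.0 if abs(w) < threshold else w for w in row] for row in layer]


def sparsity(layer):
    """Fraction of weights in the layer that are exactly zero."""
    total = sum(len(row) for row in layer)
    zeros = sum(1 for row in layer for w in row if w == 0.0)
    return zeros / total


def remove_dead_neurons(layer):
    """Drop neurons (rows) whose incoming weights were all pruned."""
    return [row for row in layer if any(w != 0.0 for w in row)]


# Made-up layer: one row per neuron, for illustration only.
layer = [
    [0.80, -0.02, 0.00],
    [0.01, 0.45, -0.60],
    [-0.03, 0.04, 0.01],
]
pruned = prune_weights(layer, threshold=0.05)
compact = remove_dead_neurons(pruned)

print(pruned)
print(f"sparsity: {sparsity(pruned):.2f}, neurons left: {len(compact)}")
```

In practice, pruning is usually followed by a few more training steps so the remaining weights can compensate for the removed ones.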

4. Advantages

4.1. Low Latency and Real-Time Processing

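TinyML can operate on small microcontrollers, enabling edge computing and reducing the need for cloud connectivity. Since the data is processed where it’s collected, there’s no network round trip, so the device can respond in real time.

However, if a low-power device can’t provide enough processing power or memory for a model, connecting to a model hosted in the cloud is still necessary.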

4.2. Energy Efficiency

TinyML enables low-power and energy-efficient data processing, which reduces devices’ power consumption.

We design TinyML algorithms to be lightweight and efficient, requiring less computing power and consuming less energy.

4.3. Security

Instead of transmitting sensitive data to the cloud, which exposes devices to possible cyber risks like hacking and privacy violations, TinyML empowers edge devices to conduct real-time analysis and decision-making.

So, if devices are secure enough, the risk of network-based attacks is reduced.

5. Challenges

5.1. Limited Memory and Processing Power

One of the biggest challenges in TinyML is the limited memory and processing power of tiny devices. This makes it difficult to implement complex machine-learning algorithms and models.

As a result, developers must optimize their models and algorithms to fit these devices’ constraints, which can be a significant challenge.
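A quick back-of-the-envelope calculation shows why these constraints matter. The sketch below estimates the storage needed for a model’s parameters at two precisions and compares it with a memory budget. The parameter count and the budget are hypothetical numbers, and the estimate ignores activations and runtime code:

```python
def model_size_bytes(num_parameters, bits_per_parameter):
    """Storage needed for the parameters alone, rounded up to whole bytes."""
    return (num_parameters * bits_per_parameter + 7) // 8


# Hypothetical numbers, chosen only for illustration.
NUM_PARAMETERS = 250_000
FLASH_BUDGET_BYTES = 256 * 1024  # 256 KiB reserved for the model

for name, bits in (("float32", 32), ("int8", 8)):
    size = model_size_bytes(NUM_PARAMETERS, bits)
    verdict = "fits" if size <= FLASH_BUDGET_BYTES else "does not fit"
    print(f"{name}: {size} bytes, {verdict} in {FLASH_BUDGET_BYTES} bytes")
```

With these assumed numbers, the 32-bit version needs about four times the available budget, while the 8-bit version fits.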

5.2. Optimization and Compression of Machine Learning Models

Fitting a model onto a tiny device requires developers to find ways to reduce the size and complexity of their models without sacrificing performance or accuracy.

Techniques such as pruning and quantization can be used to achieve this goal. However, these methods require careful consideration and experimentation to find the best approach for a given application.
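The kind of experimentation mentioned above can be as simple as sweeping one setting and measuring the error it introduces. The sketch below tries several bit widths on a made-up list of weights, again assuming symmetric quantization with a single scale factor. A real experiment would measure the accuracy of the model on validation data rather than the error on the weights:

```python
def quantize(weights, bits):
    """Quantize to the given bit width and return the approximate floats."""
    levels = 2 ** (bits - 1) - 1  # e.g., 127 for 8 bits
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / levels if max_abs > 0 else 1.0
    return [round(w / scale) * scale for w in weights]


def mean_abs_error(original, approximated):
    """Average absolute difference between two equally long lists."""
    return sum(abs(a - b) for a, b in zip(original, approximated)) / len(original)


# Made-up weights, for illustration only.
weights = [0.42, -1.27, 0.003, 0.9, -0.55]

errors = {}
for bits in (8, 6, 4, 2):
    errors[bits] = mean_abs_error(weights, quantize(weights, bits))
    print(f"{bits}-bit: mean absolute error = {errors[bits]:.4f}")
```

Fewer bits mean a smaller model but a coarser approximation, so the best setting depends on how much error the application can tolerate.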

5.3. Security Risks

TinyML can also face security risks. Indeed, if the devices are not adequately secured, keeping the ML models on devices can increase the risk of security breaches. For example, if a device is stolen or lost, an attacker could access the ML model and use it for malicious purposes.

Moreover, hackers could exploit software vulnerabilities to gain access to the ML model if we don’t update the device regularly with security patches.

6. Applications

Hardware improvements, such as developing low-power and high-performance microcontrollers, have enabled more complex models to run on tiny devices.

TinyML has a broad range of applications:

- Healthcare monitoring and diagnostic systems: We use TinyML in medical devices to diagnose diseases, monitor patient health, and provide personalized treatment recommendations.

- Self-driving cars: In autonomous vehicles, we use TinyML to improve perception and decision-making capabilities.

- Drone vision: TinyML models can be trained to detect objects such as animals, people, vehicles, or obstacles in drone footage, enabling more efficient and safer navigation.

- Edge computing: TinyML brings machine-learning capabilities to edge devices such as cameras, smartphones, and drones, enabling real-time decision-making without relying on cloud services.

7. Short Summary of Advantages and Disadvantages

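Here is the summary table of advantages and disadvantages of TinyML:

| Advantages | Disadvantages |
|---|---|
| Low latency and real-time processing | Limited memory and processing power |
| Energy efficiency | Models need optimization and compression |
| Sensitive data stays on the device | Security risks if devices aren’t adequately secured |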

8. Conclusion

In this article, we talked about TinyML. Overall, TinyML allows for deploying machine learning models on small and resource-constrained devices, enabling real-time decision-making and reduced latency.

However, these devices’ limited processing power and memory can lead to decreased model accuracy.