Learn through the super-clean Baeldung Pro experience:

>> Membership and Baeldung Pro.

No ads, dark-mode and 6 months free of IntelliJ Idea Ultimate to start with.

Last updated: March 18, 2024

In this tutorial, we’ll talk about the concepts of local and global optima in an optimization problem. First, we’ll make an introduction to mathematical optimization. Then, we’ll define the two terms and briefly present some algorithms for computing them.

First, let’s briefly introduce the basic terms of optimization.

A mathematical optimization problem consists of three basic components:

So, the goal is to find the optimal values of the decision variables that satisfy the constraints and optimize (maximize or minimize) the value of

. However, as the problem becomes more complex, finding the optimal values becomes more difficult. So, sometimes it is easier to find some values that are not optimal but are good enough for the problem. In this case, we have a local optimal.

A local optimum is an extrema (maximum or minimum) point of the objective function for a certain region of the input space. More formally, for the minimization case is a local minimum of the objective function

if:

for all values of x in range .

A global optimum is the maximum or minimum value the objective function can take in all the input space. More formally, for the minimization case is a global minimum of the objective function

if:

for all values of x.

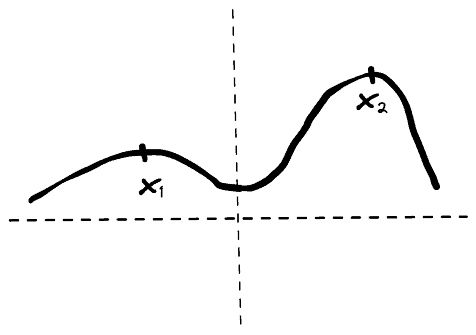

In the image below, we can see an example of a local and a global maximum:

We observe that is the global maximum of the function since in

, the function takes the higher value. However, if we consider only the region of negative numbers,

is the maximum in this region. So,

is a local maximum.

In case the objective function contains more than one global optima, the optimization problem is referred to as multimodal. In this case, there are multiple values of the decision variables that optimize the objective function.

Also, in optimization, we are interested only in cases when the objective function presents a global optimum. For example, consider the following optimization problem:

where we can see below the plot on the square function:

We can observe that there is no global optimum for this problem. For every with objective value

there is always a value

where the objective value

is greater because:

Generally, finding the local and global optima of a function is a very important problem, and new algorithms are proposed all the time.

In terms of local optima, some famous methods are the BFGS Algorithm, the Hill-Climbing Algorithm, and the Nelder-Mead Algorithm. In global optima computation, Simulated Annealing and Genetic Algorithms are the most successful.

However, to choose an algorithm, we should always first understand the requirements of our optimization problem since there are algorithms that aim to be fast while losing a bit of their accuracy and adversely.

In this article, we presented the terms of global and local optima. First, we briefly presented how an optimization problem is defined, and then we discussed the two terms along with some algorithms for computing them.