Learn through the super-clean Baeldung Pro experience:

>> Membership and Baeldung Pro.

No ads, dark-mode and 6 months free of IntelliJ Idea Ultimate to start with.

Last updated: February 12, 2025

One issue that we might have is the data availability for training. In many computer vision applications, this isn’t a problem anymore since we can find large datasets. For example, that’s the case for object classification, face recognition, and handwriting recognition. However, in some fields, such as medicine, it’s hard to collect new samples or find large-enough labeled datasets.

In this tutorial, we’ll review data augmentation. It aims to improve the quality of our dataset when we don’t have hundreds of thousands of samples.

There are several types of data augmentation techniques depending on the chosen domain. To mention a few, we have methods for audio, natural language processing, and images.

For instance, image data augmentation methods range from elementary manipulations to deep learning approaches.

The simplest techniques for image data augmentation apply geometric transformations. These include flipping, scaling, cropping, rotation, translation, and brightness transformations:

We should consider applying this type of data augmentation when we don’t have a robust sample distribution. For example, if we want to recognize dogs in pictures taken by other users in an app. Most likely, these users will take pictures in different orientations and lighting conditions.

If our dataset consists of samples collected under similar scenarios, we won’t be able to recognize these different samples. To keep it short, we should use geometric transformations when we have enough unique samples that are collected without many variations.

Whichever transformation we use, we should make sure that our application isn’t sensitive to it. For instance, if we vertically flip the number 9, the image will become a 6, so the initial label will be wrong. For this reason, we usually need domain-specific functions and methods to augment our dataset.

Sometimes, we have samples collected under different scenarios and conditions, but there aren’t enough unique samples.

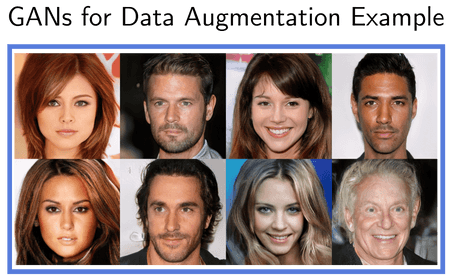

To augment such data, we can use deep-learning approaches such as Generative Adversarial Networks (GAN). They create synthetic data trying to mimic and expand the training samples. A typical use case is the face detection problem, where GANs synthesize the faces of people that don’t exist:

To summarize, we should consider Deep Learning approaches when we need to create new unique samples. This might be necessary for applications in which is hard to find a large amount of data or to label it. Some examples are human poses or image-to-text translations.

The most common practice is to apply data augmentation only to the training samples. The reason is that we want to increase our model’s generalization performance by adding more data and diversifying the training dataset. However, we can also use it during testing.

In all cases, we should keep in mind that the augmentation parameters and strategy have to be appropriate to the application. This is true no matter in which phase we augment the data. The choice of data augmentation strategy should be application-specific and in line with the real-world data distribution.

An elementary reason to not use data augmentation on the test data is to have a realistic evaluation of the model. To check how our model behaves on unseen data, test samples should come from the same distribution as those we expect in our real-world application. Data augmentation techniques may distort the test data distribution and skew the results.

Still, we can find in the literature some evidence that argues for the use of augmentation on the test set. In fact, the method called Test-Time Augmentation (TTA) improved the accuracy in certain applications.

For instance, let’s suppose our task is to develop a model for facial recognition regardless of the image orientation. But, our dataset is quite small with just a few hundred samples. Also, all the photos were taken in only one position and direction.

We can carry out data augmentation only in the training phase. But, in that case, we wouldn’t accurately assess our model for our target application, which can include rotated images.

Validation sets are used during training, but their primary goal isn’t to fit our models’ parameters. Instead, we use them to assess the performance of various hyper-parameter settings. So, what we said about augmenting test data holds for validation datasets as well.

In this article, we talked about data augmentation. Mostly, we use it to make our train dataset larger and more diverse. In some cases, it might be necessary to apply augmentation in the test and validation subsets. This is especially when we don’t have enough samples.