1. Introduction

In this tutorial, we’ll show how to multiply a matrix chain using dynamic programming. This problem frequently arises in image processing and computer graphics, e.g., animations and projections.

2. Matrix Chains

Let’s start with an example. We have four matrices: and

. Their shapes are

,

,

, and

, respectively.

Since each matrix’s number of columns corresponds to the next one’s number of rows, their product is defined.

We can calculate it sequentially. First, we multiply and

to get an intermediate

-matrix

. Then, we multiply

with

and get a

-matrix

. Finally, we get the result by multiplying

with

:

How many operations do we perform this way?

To multiply two matrices and

of shapes

and

, we must compute

elements. For each, we perform

scalar multiplications and

additions. So, the total number of operations,

, is

.

In our example:

That’s 460 operations. However, since matrix multiplication is associative, we can compute the chain in a different order:

This multiplication order involves 236 operations:

The reduction is almost 50%.

2.1. Formulation

So, in problems of this type, we have matrices

of the shapes

such that

for all

.

The goal is to find the multiplication order that minimizes , the number of scalar multiplications and additions in computing the product

.

3. Dynamic Programming

We can use dynamic programming to do this. So, let’s start with the recursive relation.

3.1. Recursion

Let (where

). No matter how we order multiplications, the final product will always result from multiplying two matrices.

So, we compute by splitting it into the “left” and “right” products

(of shape

) and

(of shape

):

The choice of determines the cost:

Therefore, we should choose the that minimizes the number of operations:

The base case is , and its cost is zero because we don’t perform any operation to compute

.

3.2. Tabular Algorithm

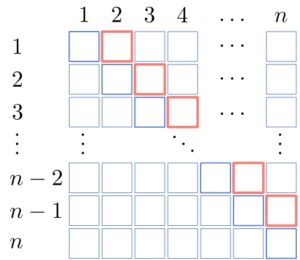

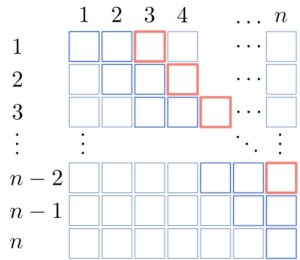

Let’s check out the tabular approach to computing .

In this method, we start from the base cases and work up to one diagonal at a time. So, after the base cases on the main diagonal, we compute the costs

for

of multiplying pairs:

Then, we compute the for

:

Continuing this way, we calculate in the end:

We keep track of the splitting indices by storing them in a separate data structure as we compute the costs.

We can use matrices for

and

, but half of the reserved space will be unused. Another option is to use a hash map.

3.3. Example

Let’s apply these algorithms to our initial example with matrices and

of shapes

,

,

, and

, respectively.

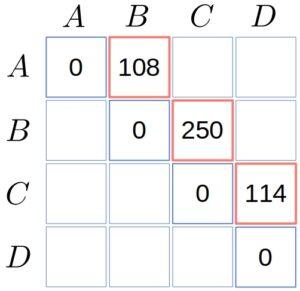

First, we set the costs on the main diagonal to zero. Then, we compute the costs of ,

, and

.

There will be only one way to split each pair. For example:

The same goes for and

:

We used matrix names instead of indices for readability.

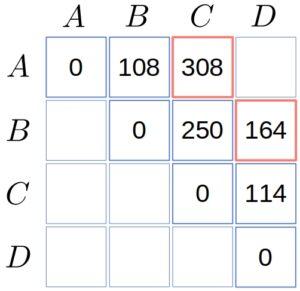

Now, we focus on and

:

So:

Finally, we compute :

4. Reconstructing the Solution

A way to reconstruct the solution is to follow the indices in recursively.

If , where

, that means that

should be computed as

. So, we need

and

to split

and

optimally:

The result of is the solution for the entire input chain

.

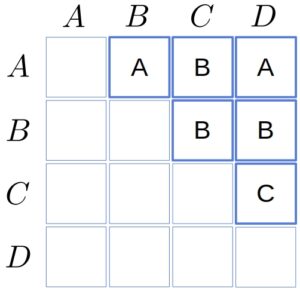

4.1. Example

In our example, is a

matrix with the empty lower triangle:

Since , the first split comes after

, so we have:

Since the left product is a single matrix, we focus on the right one. , so we get:

5. Memoization

Memoization is another approach. It computes recursively following its definition. However, it avoids repeated calculations by memorizing the intermediate results:

For each pair , we first check in

if we’ve already computed

. If so, we return

. Otherwise, we calculate

.

This approach is usually easier to implement than the tabular method. However, the excessive depth of the recursion tree for large can cause stack overflow.

6. Conclusion

In this article, we showed how to multiply a chain of matrices using dynamic programming.

The main difference between the tabular approach and memoization is the order in which the sub-problems are solved. The former is a bottom-up iterative approach that starts from the base cases, whereas the latter is a top-down recursive approach.