Learn through the super-clean Baeldung Pro experience:

>> Membership and Baeldung Pro.

No ads, dark-mode and 6 months free of IntelliJ Idea Ultimate to start with.

Last updated: March 18, 2024

In this tutorial, we’ll discuss several ways of quantifying the extent to which an array is sorted.

We have an array and want to quantify how close it is to its sorted permutation.

So, whatever we define this measure, a completely sorted array such as:

should have a better score than a partially sorted one:

The metric should be defined in a way that allows for comparing two partially sorted arrays and concluding which one is more sorted.

It should also apply to any data type with an ordering relation, e.g., numbers and or strings and lexicographic ordering. We’ll cover the general case and denote the order as

.

An inversion in an array is a pair whose elements are out of order:

A natural way to define sortedness is to count inversions:

For example, contains three inversions:

,

, and

. We consider it more sorted than

that has four inversions and less than

with two.

By definition, the metric’s higher values correspond to less sorted arrays. So, a completely sorted array has zero inversions. An array sorted in the opposite direction has inversions:

inversions containing the first element,

inversions with the second, and down to one inversion with

.

We can compare any two arrays by their inversion counts, but the comparison will be fair only if they have the same number of elements. For instance, three inversions in a -element array don’t denote the same degree of sortedness as three in a

-element sequence.

To consider different sizes, we can divide the count with its maximum value of . This way, we get a metric whose values are in the range

, where

corresponds to a completely sorted array and 1 to a completely inverted sequence.

Let’s check out a few ways of computing this metric.

The straightforward way is to traverse the array with two loops:

algorithm SortednessInQuadraticTime(a, preceq):

// INPUT

// a = an array [a_1, a_2, ..., a_n]

// OUTPUT

// The scaled number of inversions in a

count <- 0

for i <- 1 to n-1:

for j <- i+1 to n:

if not (a[i] <= a[j]):

count <- count + 1

return 2 * count / (n * (n-1))We compare each with the elements to the right of it.

This approach is intuitive but has a quadratic time complexity. So, it isn’t practical for very large arrays.

One way to avoid the performance issue is to count the inversions stochastically. That means sampling a predefined number of pairs and counting inversions among them:

algorithm StochasticSortedness(a, preceq, m):

// INPUT

// a = an array [a_1, a_2, ..., a_n]

// m = the number of pairs to draw

// OUTPUT

// The scaled number of inversions in a sample of the pairs of a

count <- 0

l <- 0

while l < m:

i <- draw an integer uniformly from {1, 2, ..., n}

j <- draw an integer uniformly from {1, 2, ..., n}

if i != j:

// We check only the pairs with elements at different positions

l <- l + 1

left <- min{i, j}

right <- max{i, j}

if not (a[left] <= a[right]):

count <- count + 1

return count / m

When sampling, we need to ensure we don’t draw the same index twice and get as a pair to check. To see why, let’s recall that the condition

is in the definition of an inversion, which implies that

. This seems obvious, but it’s easy to overlook.

In practice, this approach will be faster than the solution but has several drawbacks:

The first issue can be addressed by running the algorithm several times and returning the average result. Alternatively, we can use a larger if it doesn’t cause the method to run too long. Although the algorithm can run forever, it rarely happens in practice, especially with large values of

and

.

To fix the last drawback, we can sample the pairs without repetition.

That means we should store the sampled pairs and ignore them if we draw them again:

algorithm StochasticSortednessWithoutRepetition(a, preceq, m):

// INPUT

// a = an array [a_1, a_2, ..., a_n]

// m = the number of pairs to draw

// OUTPUT

// The scaled number of inversions in a sample of the pairs of a

count <- 0

l <- 0

memory <- an empty data structure

while l < m:

i <- draw an integer uniformly from {1, 2, ..., n}

j <- draw an integer uniformly from {1, 2, ..., n}

if i != j and (i, j) not in memory:

// We check only the pairs with elements at different positions

// that we haven't already drawn

l <- l + 1

Insert (i ,j) into memory

left <- min{i, j}

right <- max{i, j}

if not (a[left] <= a[right]):

count <- count + 1

return count / m

Whenever we draw a new index pair , we check if we considered it before. If yes, we’ll skip it. Otherwise, we’ll insert it into

to ignore it in the future.

For this approach to be efficient, the storage should be a data structure with efficient insertions and lookups, such as a hash table. Because of the overhead, this will be slower than sampling with repetition but more accurate.

Another way is to tweak a sorting algorithm to count inversions.

Not all algorithms will be easy to modify since we aren’t supposed to count swaps done during sorting but only the inversions in the original array.

Here’s a modification of Merge Sort:

algorithm MergeCount(a, preceq):

// INPUT

// a = an array [a_1, a_2, ..., a_n]

// OUTPUT

// The sorted permutation of a and the number of inversions in a

if n = 1:

return (a, 0)

else if n = 2:

if not(a[1] <= a[2]):

return ([a[2], a[1]], 1)

else:

return ([a[1], a[2]], 0)

// Recursion

// Split a into two halves

left <- a[1 : n/2]

right <- a[n/2 + 1 : n]

// Count the inversions in both halves and sort them, recursively

(left, count_left) <- MergeCount(left)

(right, count_right) <- MergeCount(right)

// Check if there are inversions with the elements in different halves

// and merge them into a sorted whole

i <- 1

j <- 1

k <- 0

count_cross <- 0

b <- make a temporary array with space for n elements

while i <= n/2 and j <= n:

k <- k + 1

if a[i] <= a[j]:

b[k] <- a[i]

i <- i + 1

else:

b[k] <- a[j]

j <- j + 1

count_cross <- count_cross + (j - n/2)

Append the remainders of left and right to b

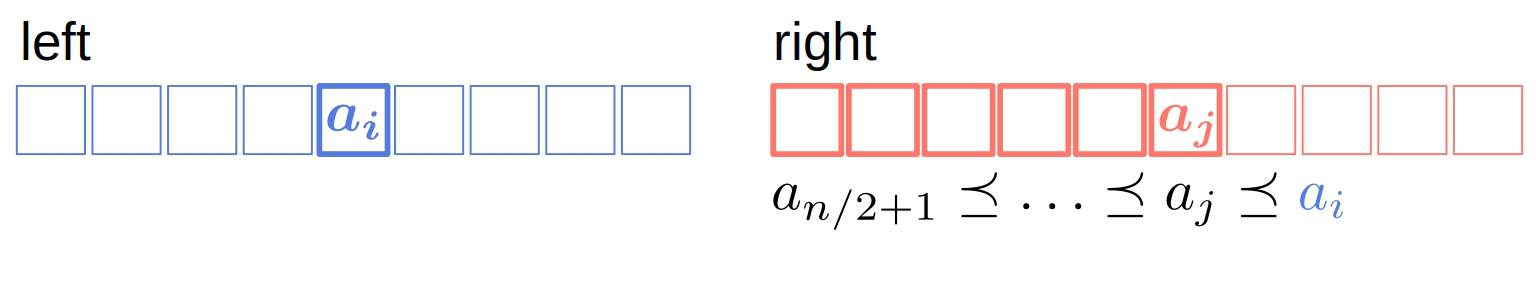

return (b, count_left + count_right + count_cross)To scale the count, we divide it with . The trick is to realize that the total number of inversions in

is the sum of the counts of three types of inversions:

We count cross-inversions in the merge step. Since and

are sorted after the recursion, if

and

, it holds that

is in inversions with

and its predecessors in

:

Since the indices in

Since the indices in start with

, we subtract that offset from

and add the difference to the count.

The algorithm’s time complexity is like that of Merge Sort, which beats the straightforward

method.

It’s usually slower than the sampling approach but returns the same value over multiple runs. Therefore, this implementation might be the middle ground between the sampling’s performance and the quadratic algorithm’s exactness.

Unlike the previous two approaches, this one solves an additional problem (sorting an array) to handle the original one. So, it does more work than necessary.

A computationally lightweight approach would be to consider only the inversions with successive elements:

There are at most such inversions, and we need only one traversal over the array to count them, checking the successive pairs. So, the time complexity will be

, but this measure might not give us a clear picture of an array’s sortedness. The reason is that out-of-order elements at the beginning of

can participate in more inversions than those near the end, but this metric treats all pairs equally.

Another approach is to calculate the rank distance. Let be the sorted permutation of

, and let

be the rank of

in the

, which we can find by looking it up in

(we use averages for non-unique elements). Then, the rank distance can be computed as the Euclidean distance between the original indices and ranks:

or the average absolute difference:

A shortcoming of this approach is that we need to sort to find the ranks.

In this article, we showed how to define and compute the sortedness of an array. A natural measure of it is the count of inversions. There are stochastic and deterministic ways to calculate it.

We should use the sampling approaches if we’re more concerned about performance.