Last updated: March 18, 2024

Over the past decade, Artificial Intelligence (AI) has advanced significantly, and algorithms now solve a wide range of real-world problems. These milestones have come with increasingly complex models and AI systems whose inner workings are opaque.

In response, Explainable AI (XAI) has emerged as a solution to enhance transparency in AI applications and foster acceptance across critical domains.

In this tutorial, we’ll explore Explainable AI, covering its importance, methodologies, and practical applications.

XAI, in simple words, is a way to advance toward more transparent AI without restricting its use in essential sectors. Furthermore, XAI generally focuses on creating easy-to-understand, trustworthy techniques and on effectively managing the new generation of AI systems.

It’s critical for people to understand the rationale behind the outcomes that AI systems produce. This understanding is essential for establishing trust in AI systems as well as for meeting legal obligations that demand fairness and accountability.

XAI also addresses the black box problem by delivering transparent, understandable insights into the reasoning behind the conclusions that AI models reach. This increases the adoption and acceptance of AI technology by enabling stakeholders, such as developers, end users, and regulatory agencies, to grasp the factors that influence AI decisions.
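To make the idea of "factors that influence a model's output" concrete, here's a minimal sketch of permutation feature importance, one common model-agnostic explanation technique. It isn't taken from this article: the `black_box_model` function, the feature names, and the synthetic data are all hypothetical, chosen only for illustration. The idea is to shuffle one feature at a time and measure how much the model's error grows:

```python
import random


def black_box_model(row):
    # Hypothetical opaque model: the explainer only calls it, never inspects it.
    # It relies strongly on feature 0, weakly on feature 1, and ignores feature 2.
    return 3.0 * row[0] + 0.5 * row[1]


def mean_squared_error(model, rows, targets):
    return sum((model(r) - t) ** 2 for r, t in zip(rows, targets)) / len(rows)


def permutation_importance(model, rows, targets, n_repeats=10, seed=0):
    """Average increase in error when each feature column is shuffled."""
    rng = random.Random(seed)
    baseline = mean_squared_error(model, rows, targets)
    importances = []
    for j in range(len(rows[0])):
        increases = []
        for _ in range(n_repeats):
            # Shuffle only column j, breaking its link to the targets.
            column = [r[j] for r in rows]
            rng.shuffle(column)
            shuffled_rows = [r[:j] + [v] + r[j + 1:] for r, v in zip(rows, column)]
            error = mean_squared_error(model, shuffled_rows, targets)
            increases.append(error - baseline)
        importances.append(sum(increases) / n_repeats)
    return importances


# Synthetic data (an assumption for this sketch): 200 rows, 3 random features.
data_rng = random.Random(42)
rows = [[data_rng.random() for _ in range(3)] for _ in range(200)]
targets = [black_box_model(r) for r in rows]

importances = permutation_importance(black_box_model, rows, targets)
for name, score in zip(["feature_0", "feature_1", "feature_2"], importances):
    print(f"{name}: {score:.4f}")
```

In this toy setup, `feature_0` should receive the largest score and `feature_2` a score of zero, since the hypothetical model never reads it. A ranking like this is the kind of insight that lets a stakeholder see which inputs drive a prediction without opening the model itself.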

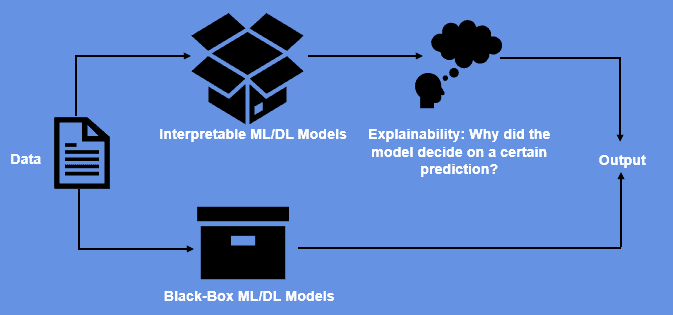

The following figure shows the difference between XAI and black-box Machine Learning (ML) and Deep Learning (DL) models:

Several approaches have been proposed to address the challenges of explaining AI models, each offering a different strategy for illuminating how a model reaches its decisions. Among the well-known methods are:

Explainable AI finds uses in a variety of fields, enhancing accountability and decision-making:

Although Explainable AI has come a long way, challenges remain. It can be difficult to balance model performance and interpretability, because making a model easier to interpret can reduce its accuracy. Additionally, it’s hard to settle on a single definition of “explanation” that meets the needs of every stakeholder.

Explainable AI has a bright future. To enable models to generate accurate predictions while providing clear explanations, researchers are working on hybrid models that combine the strength of deep learning with interpretable components.

Continued collaboration among AI practitioners, ethicists, and policymakers will be essential for developing standardized procedures that ensure ethical and understandable AI.

In conclusion, Explainable AI (XAI) brings transparency to AI systems. By opening up the black box and offering understandable insights, XAI builds trust, encourages accountability, and promotes the ethical adoption of AI technologies across industries.

As the path toward fully intelligible AI continues, researchers and practitioners are working to balance accuracy and interpretability, aiming for AI systems that both make sound decisions and communicate the reasons behind them.