Last updated: November 4, 2022

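This tutorial introduces the Direct Linear Transform (DLT), a general approach designed to solve systems of equations of the type:

$$\lambda_k x_k = A y_k, \quad k = 1, \dots, N$$

Here the vectors $x_k$ and $y_k$ are known, while the matrix $A$ and the scale factors $\lambda_k$ are unknown.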

This type of equation frequently appears in projective geometry. One very important example is the relation between 3D points in a scene and their projection onto the image plane of a camera. That is why we’re going to use this setting to motivate the usage of DLT.

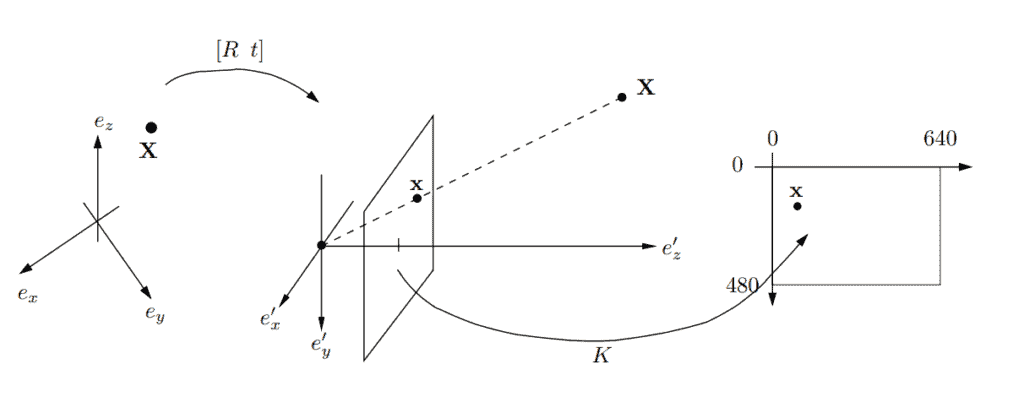

The most commonly used mathematical model of a camera is the so-called pinhole camera model. Since the purpose of a camera is to map real-world objects to 2D representations, the camera model consists of several coordinate systems: a global reference system, a camera system, and an image (pixel) system.

To encode camera position and movement, we use the reference coordinate system (left part of the diagram). In this system, the camera can undergo translation and rotation. The translation is represented as a vector $t \in \mathbb{R}^3$ and the rotation is represented as a $3 \times 3$ rotation matrix $R$.

Usually, all the scene point coordinates are also specified in the global coordinate system, and $R$ and $t$ are used to relate them to the camera coordinate system as follows:

$$X_c = R X + t$$

where $X$ is a scene point in global coordinates and $X_c$ is the same point in camera coordinates. This can be neatly represented in matrix form if we add 1 as an extra dimension:

$$X_c = \begin{pmatrix} R & t \end{pmatrix} \begin{pmatrix} X \\ 1 \end{pmatrix}$$
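As a small illustration, the sketch below applies a rotation and a translation to a scene point, once directly and once in matrix form with a 1 appended. The names `R`, `t`, and `X`, as well as all numeric values, are assumptions chosen for this example.

```python
import numpy as np

# Hypothetical pose: a 90 degree rotation about the z-axis plus a translation.
theta = np.pi / 2
R = np.array([[np.cos(theta), -np.sin(theta), 0.0],
              [np.sin(theta),  np.cos(theta), 0.0],
              [0.0,            0.0,           1.0]])
t = np.array([0.0, 0.0, 5.0])

X = np.array([1.0, 2.0, 3.0])  # scene point in global coordinates

# Direct form: rotate, then translate.
X_cam = R @ X + t

# Matrix form: append 1 to X and multiply by the 3x4 matrix [R | t].
Rt = np.hstack([R, t.reshape(3, 1)])
X_h = np.append(X, 1.0)
X_cam_h = Rt @ X_h

print(X_cam)
print(np.allclose(X_cam, X_cam_h))
```

Both forms give the same camera coordinates. The matrix form is the one that carries over to the full camera equation.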

The camera coordinate system (in the middle of the diagram) has an origin $C = (0, 0, 0)$, which represents the camera center, or pinhole. To generate a projection $x = (x_1, x_2, 1)$ of a scene point $X = (X_1, X_2, X_3)$, given in camera coordinates, we form the line between $X$ and $C$ and intersect it with the plane $z = 1$.

This plane is also called the image plane, and the line intersecting it is the viewing ray. Note that, unlike in a physical camera, the image plane is in front of the pinhole. This is done for convenience and has the effect that the image does not appear upside down, as it would in a physical camera.
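The projection step can be sketched in a few lines. This example assumes that the image plane is the plane z = 1 in camera coordinates and that the pinhole sits at the origin. The function name `project_to_image_plane` is hypothetical.

```python
import numpy as np

def project_to_image_plane(X_cam):
    """Intersect the viewing ray through the origin and X_cam with the plane z = 1."""
    X_cam = np.asarray(X_cam, dtype=float)
    if X_cam[2] <= 0:
        raise ValueError("point must be in front of the camera (z > 0)")
    # Every point on the ray is s * X_cam; choosing s = 1 / z lands on z = 1.
    return X_cam / X_cam[2]

x = project_to_image_plane([2.0, 4.0, 8.0])
print(x)
```

Dividing by the depth is all the intersection amounts to, which is why points along the same viewing ray share one projection.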

In the pinhole camera model, the image plane lies in $\mathbb{R}^3$, meaning that the projections are given in real-world length units. But when we talk about images, we use pixels, and an image has a fixed size in pixels. To convert between the two, we use a mapping (right side of the diagram) from the image plane embedded in $\mathbb{R}^3$ to the real image.

This pixel mapping is represented by an invertible upper triangular $3 \times 3$ matrix $K$, which contains the intrinsic (inner) parameters of the camera, that is, focal length, principal point, aspect ratio, and axis skew.
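To make the pixel mapping concrete, here is a sketch of one common parameterization of such a triangular matrix, called `K` here. The layout of the parameters inside the matrix and every numeric value are assumptions made for illustration, not values from the diagram.

```python
import numpy as np

# Hypothetical intrinsic parameters (all values are made up for illustration).
f = 800.0               # focal length, expressed in pixels
aspect = 1.0            # aspect ratio
skew = 0.0              # axis skew
cx, cy = 320.0, 240.0   # principal point in pixels

# Upper triangular matrix collecting the intrinsic parameters.
K = np.array([[f,   skew,       cx],
              [0.0, f * aspect, cy],
              [0.0, 0.0,        1.0]])

x_plane = np.array([0.25, 0.1, 1.0])  # point on the image plane
x_pixel = K @ x_plane                 # same point in pixel coordinates

# Because K is invertible, the mapping can be undone.
x_back = np.linalg.solve(K, x_pixel)
print(x_pixel, x_back)
```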

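Finally, we can combine all three parts of the camera model into one equation:

$$\lambda x = K \begin{pmatrix} R & t \end{pmatrix} X$$

or more succinctly:

$$\lambda x = P X$$

where $X$ is now the scene point in homogeneous coordinates (with 1 appended), $x$ is its projection in homogeneous pixel coordinates, $\lambda$ is an unknown scale factor, and $P = K \begin{pmatrix} R & t \end{pmatrix}$ is called the camera matrix.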
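The following sketch assembles a camera matrix from its three parts and projects one scene point. It assumes that P is the product of a triangular intrinsic matrix (called `K` here) with the 3x4 matrix formed by a rotation `R` and a translation `t`. All numbers are hypothetical.

```python
import numpy as np

# Hypothetical intrinsics and pose, chosen only for illustration.
K = np.array([[800.0, 0.0, 320.0],
              [0.0, 800.0, 240.0],
              [0.0, 0.0, 1.0]])
R = np.eye(3)                    # no rotation
t = np.array([0.0, 0.0, 4.0])    # translation along the optical axis

# Camera matrix: 3x4.
P = K @ np.hstack([R, t.reshape(3, 1)])

X = np.array([1.0, -0.5, 4.0, 1.0])  # scene point in homogeneous coordinates

lam_x = P @ X          # equals lambda * x
lam = lam_x[2]         # scale factor (depth of the point in camera coordinates)
x = lam_x / lam        # homogeneous pixel coordinates, last entry is 1
print(lam, x)
```

The scale factor drops out once we divide by the last coordinate, which is why the projection only determines P up to scale.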

This equation has exactly the form that DLT is designed to solve, so we can use DLT to find the matrix P.

But why do we need to solve it in the first place? Most of the time, we're interested in finding the part of the camera matrix that holds the intrinsic parameters, $K$. If $K$ is known, we say that the camera is calibrated. With a calibrated camera, we can do things like lens distortion correction, measuring an object from a photo, or even estimating 3D coordinates from camera motion.

To do that, we first need at least 6 data points measured by hand. We can then compute the camera matrix P using the DLT method and finally factorize it into $K \begin{pmatrix} R & t \end{pmatrix}$ using RQ-factorization.

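The first step of the DLT method formulates a homogeneous linear system of equations and solves it by finding an approximate null space. To do that, we first express P in terms of its row vectors:

$$P = \begin{pmatrix} p_1^T \\ p_2^T \\ p_3^T \end{pmatrix}$$

Then, for a measured scene point $X_i$ with projection $(x_i, y_i, 1)$ and scale factor $\lambda_i$, we can write the camera equation as:

$$\begin{aligned} X_i^T p_1 - \lambda_i x_i &= 0 \\ X_i^T p_2 - \lambda_i y_i &= 0 \\ X_i^T p_3 - \lambda_i &= 0 \end{aligned}$$

which in turn can be put into matrix form:

$$\begin{pmatrix} X_i^T & 0 & 0 & -x_i \\ 0 & X_i^T & 0 & -y_i \\ 0 & 0 & X_i^T & -1 \end{pmatrix} \begin{pmatrix} p_1 \\ p_2 \\ p_3 \\ \lambda_i \end{pmatrix} = \begin{pmatrix} 0 \\ 0 \\ 0 \end{pmatrix}$$

Note that since $X_i$ is a $4 \times 1$ vector, each $0$ in the first three columns of the matrix actually represents a $1 \times 4$ block of zeros, meaning we are multiplying a $3 \times 13$ matrix with a $13 \times 1$ vector.

If we stack the projection equations of all $N$ measured data points in one matrix, we get a system of the form:

$$\underbrace{\begin{pmatrix} X_1^T & 0 & 0 & -x_1 & 0 & \cdots & 0 \\ 0 & X_1^T & 0 & -y_1 & 0 & \cdots & 0 \\ 0 & 0 & X_1^T & -1 & 0 & \cdots & 0 \\ X_2^T & 0 & 0 & 0 & -x_2 & \cdots & 0 \\ 0 & X_2^T & 0 & 0 & -y_2 & \cdots & 0 \\ 0 & 0 & X_2^T & 0 & -1 & \cdots & 0 \\ \vdots & \vdots & \vdots & \vdots & \vdots & \ddots & \vdots \\ 0 & 0 & X_N^T & 0 & 0 & \cdots & -1 \end{pmatrix}}_{M} \underbrace{\begin{pmatrix} p_1 \\ p_2 \\ p_3 \\ \lambda_1 \\ \lambda_2 \\ \vdots \\ \lambda_N \end{pmatrix}}_{v} = 0$$

With the equations arranged this way, we just need to find a non-zero vector in the null space of $M$ to solve the system. In most cases, however, there will not be an exact solution because of measurement noise. Therefore, we instead search for the solution that minimizes the total error, which is a least squares problem:

$$\min_{\|v\|^2 = 1} \|M v\|^2$$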
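A minimal sketch of how such a stacked matrix could be assembled is shown below. It assumes one particular layout: three equations per point, with the unknown vector holding the 12 entries of P row by row, followed by one scale factor per point. The function name `build_dlt_matrix` and the synthetic data are hypothetical.

```python
import numpy as np

def build_dlt_matrix(points_3d, points_2d):
    """Stack three equations per correspondence into a (3N) x (12 + N) matrix."""
    points_3d = np.asarray(points_3d, dtype=float)
    points_2d = np.asarray(points_2d, dtype=float)
    n = points_3d.shape[0]
    X_h = np.hstack([points_3d, np.ones((n, 1))])  # homogeneous scene points
    x_h = np.hstack([points_2d, np.ones((n, 1))])  # homogeneous projections
    M = np.zeros((3 * n, 12 + n))
    for i in range(n):
        for r in range(3):
            # Row r of point i multiplies row r of P with the scene point.
            M[3 * i + r, 4 * r:4 * r + 4] = X_h[i]
        # Column 12 + i holds minus the projection, multiplying the scale of point i.
        M[3 * i:3 * i + 3, 12 + i] = -x_h[i]
    return M

# Noise-free synthetic check with a made-up camera matrix.
P_true = np.hstack([np.eye(3), np.array([[0.0], [0.0], [5.0]])])
rng = np.random.default_rng(0)
pts3d = rng.uniform(-1.0, 1.0, size=(6, 3))
proj = np.hstack([pts3d, np.ones((6, 1))]) @ P_true.T  # rows are scale * projection
lam = proj[:, 2]
pts2d = proj[:, :2] / lam[:, None]

M = build_dlt_matrix(pts3d, pts2d)
v = np.concatenate([P_true.ravel(), lam])  # true rows of P, then the scales
print(M.shape)
print(np.allclose(M @ v, 0.0))
```

With this layout, N points give 3N equations in 12 + N unknowns, and one unknown is absorbed by the overall scale, so 3N must be at least 11 + N. That is where the minimum of 6 points comes from.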

One way of solving it is to use Singular Value Decomposition (SVD). After decomposing the stacked matrix, we take the right singular vector corresponding to the smallest singular value and read off the entries of the camera matrix $P$ from it. We can then factorize $P$ into $K \begin{pmatrix} R & t \end{pmatrix}$ using RQ-factorization, as mentioned before.
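The whole pipeline can be sketched as follows, under several assumptions: the stacked matrix uses three equations per point with one extra unknown scale per point, the data is noise-free and not normalized, and the RQ step is built from NumPy's QR routine by flipping rows and columns. The function names and the synthetic camera are hypothetical.

```python
import numpy as np

def dlt_camera_matrix(points_3d, points_2d):
    """Estimate P (up to scale) from N >= 6 correspondences via SVD."""
    n = len(points_3d)
    X_h = np.hstack([np.asarray(points_3d, float), np.ones((n, 1))])
    x_h = np.hstack([np.asarray(points_2d, float), np.ones((n, 1))])
    M = np.zeros((3 * n, 12 + n))
    for i in range(n):
        for r in range(3):
            M[3 * i + r, 4 * r:4 * r + 4] = X_h[i]
        M[3 * i:3 * i + 3, 12 + i] = -x_h[i]
    _, _, Vt = np.linalg.svd(M)
    v = Vt[-1]              # right singular vector of the smallest singular value
    if v[12:].sum() < 0:    # pick the sign that makes the scale factors positive
        v = -v
    return v[:12].reshape(3, 4)

def rq(A):
    """RQ factorization A = R @ Q (R upper triangular, Q orthogonal) via QR."""
    Q_, R_ = np.linalg.qr(np.flipud(A).T)
    return np.flipud(np.fliplr(R_.T)), np.flipud(Q_.T)

def decompose_camera_matrix(P):
    """Split P into intrinsics K, rotation R and translation t."""
    K, R = rq(P[:, :3])
    S = np.diag(np.sign(np.diag(K)))  # make the diagonal of K positive
    K, R = K @ S, S @ R
    t = np.linalg.solve(K, P[:, 3])
    if np.linalg.det(R) < 0:          # P is only known up to sign
        R, t = -R, -t
    return K / K[2, 2], R, t

# Synthetic, noise-free test data from a made-up camera.
K_true = np.array([[800.0, 0.0, 320.0], [0.0, 800.0, 240.0], [0.0, 0.0, 1.0]])
a = 0.3
R_true = np.array([[np.cos(a), 0.0, np.sin(a)],
                   [0.0, 1.0, 0.0],
                   [-np.sin(a), 0.0, np.cos(a)]])
t_true = np.array([0.1, -0.2, 6.0])
P_true = K_true @ np.hstack([R_true, t_true[:, None]])

rng = np.random.default_rng(0)
pts3d = rng.uniform(-1.0, 1.0, size=(8, 3))
proj = np.hstack([pts3d, np.ones((8, 1))]) @ P_true.T
pts2d = proj[:, :2] / proj[:, 2:3]

P_est = dlt_camera_matrix(pts3d, pts2d)
K_est, R_est, t_est = decompose_camera_matrix(P_est)
print(np.round(K_est, 3))
```

With real, noisy measurements, it is common to normalize the point coordinates before building the matrix to improve conditioning. That step is left out here to keep the sketch short.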

In this article, we looked into the pinhole camera model and motivated the usage of the Direct Linear Transform (DLT) by trying to find the intrinsic parameters of a given camera model. Using this setting as an example, we looked into the methodology behind the approach and how it works.