Last updated: July 18, 2022

Fisheye lenses have become a staple in creative photography alongside other tilt-shift, telephoto zoom, and wide-angle lenses. This lens type applies a different distortion (or projection) mapping than a regular “pinhole” camera.

In this tutorial, we’ll demonstrate how to undo this distortion and extract a “straight” image from a fisheye photograph.

Essentially, we are interested in estimating the transformation that projects a 3D point in space onto a 2D image coordinate.

This transform can further be divided into 5 intrinsic and 6 extrinsic parameters.

Extrinsic parameters in computer vision deal with the camera’s position relative to the world reference or origin: not only its physical location in x, y, and z, but also the pose of the camera.

These are expressed as three rotations and three translations along the width, height, and depth dimensions.
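As a minimal sketch of how these six extrinsic parameters act on a point, we can build a rotation matrix from three angles and then add a translation. The function names and the Z-Y-X rotation order below are our own assumptions for illustration; other conventions are equally valid.

```python
import math


def rotation_matrix(rx, ry, rz):
    """Build R = Rz * Ry * Rx from three angles in radians (assumed order)."""
    cx, sx = math.cos(rx), math.sin(rx)
    cy, sy = math.cos(ry), math.sin(ry)
    cz, sz = math.cos(rz), math.sin(rz)
    return [
        [cz * cy, cz * sy * sx - sz * cx, cz * sy * cx + sz * sx],
        [sz * cy, sz * sy * sx + cz * cx, sz * sy * cx - cz * sx],
        [-sy, cy * sx, cy * cx],
    ]


def world_to_camera(point, rotation, translation):
    """Apply the extrinsic transform: p_cam = R * p_world + t."""
    return tuple(
        sum(rotation[i][j] * point[j] for j in range(3)) + translation[i]
        for i in range(3)
    )


# Rotate 90 degrees about the depth (z) axis, then move 5 units along depth.
R = rotation_matrix(0.0, 0.0, math.pi / 2)
p_cam = world_to_camera((1.0, 0.0, 0.0), R, (0.0, 0.0, 5.0))
print(p_cam)  # approximately (0.0, 1.0, 5.0), up to floating-point rounding
```

The three angles and the three translation components are exactly the six values that a calibration routine has to recover for each view of the target.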

Intrinsic parameters in computer vision deal with the camera’s ability to map out points in the real world with respect to a 2D “sensor” or “film”. We are interested in these parameters for applying and correcting lens distortions.

Literature dealing with intrinsic camera parameters in computer vision often references a 3×3 camera matrix, which we’ll denote as K. To better understand these fundamental optical matrices and how to multiply them, we can look at this interactive website.

This is what this K matrix can look like:

The elements in the matrix can be decomposed as follows:

This K matrix corresponds to this camera matrix detailed in OpenCV’s literature.
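To make the role of the camera matrix concrete, here is a minimal pinhole projection sketch. It assumes the usual layout with focal lengths `fx` and `fy`, a principal point `(cx, cy)`, and an optional skew term, which gives five intrinsic parameters in total. The helper names are illustrative assumptions, and the point is taken to be already expressed in camera coordinates.

```python
def camera_matrix(fx, fy, cx, cy, skew=0.0):
    """Assemble a 3x3 intrinsic matrix from five parameters."""
    return [
        [fx, skew, cx],
        [0.0, fy, cy],
        [0.0, 0.0, 1.0],
    ]


def project_to_pixel(K, point_cam):
    """Project a 3D point in camera coordinates onto the image plane."""
    x, y, z = point_cam
    if z <= 0:
        raise ValueError("point must be in front of the camera")
    # Homogeneous image coordinates: K * (x, y, z)
    u = K[0][0] * x + K[0][1] * y + K[0][2] * z
    v = K[1][1] * y + K[1][2] * z
    # Divide by depth to obtain pixel coordinates.
    return u / z, v / z


K = camera_matrix(fx=800.0, fy=800.0, cx=320.0, cy=240.0)
print(project_to_pixel(K, (0.0, 0.0, 5.0)))  # (320.0, 240.0): the principal point
print(project_to_pixel(K, (1.0, 0.5, 5.0)))  # (480.0, 320.0)
```

A point on the optical axis lands on the principal point, and points away from the axis are offset in proportion to the focal length divided by the depth.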

After some simplifications, we have the resulting total transform matrix:

or alternatively:

We can represent the target coordinated only with and

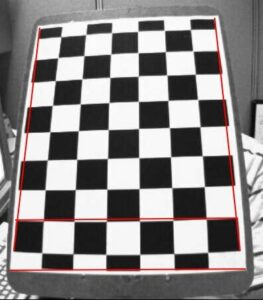

because we assume that our calibration target is flat. This is usually a printed checkerboard.

Fisheye lenses are not all created equal. To correct the distortion of a fisheye lens, it helps to know what kind of fisheye we’re dealing with so that we can better approximate its focal length. The lens mapping can be rectilinear, stereographic, equidistant, equisolid angle, or orthographic. Here is a list of the different types and their respective focal functions.

The angle θ referenced is measured from the lens’s optical axis.

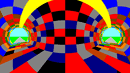

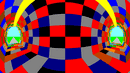

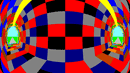

We’ll use the following image to present the different alternatives. The camera sits inside the colorful cylinder and faces left, as indicated by the arrow.

A rectilinear lens works like a pinhole camera, which means that straight lines remain straight in the resulting image. θ has to be smaller than 90°. The aperture angle is measured symmetrically about the optical axis and has to be smaller than 180°:

Large aperture angles are challenging to design and lead to high prices.

The stereographic fisheye lens maintains angles. This mapping doesn’t compress objects at the margins of the photograph as much as the others:

Below are some examples of this fisheye lens type:

In contrast to the previous type, the equidistant fisheye lens maintains angular distances. This can be interesting for angle measurement applications. PanoTools uses this type of mapping:

Below are some examples of this fisheye lens type:

Alternatively, the equisolid angle fisheye lens maintains surface relations. The resulting image looks like a reflection on the surface of a sphere. This is a common type of fisheye lens. In comparison to the stereographic lens, this one does compress the margins.

Below are some examples of this fisheye lens type:

Orthographic lenses maintain planar illuminance. The center of the image is less compressed; however, the margins are heavily distorted:

Below are some examples of this fisheye lens type:

Once we know the type of sensor (APS-C, 35mm, etc.) and the focal length of our lens, we can simply calculate F(x,y) depending on our lens type or pick a value from the table in this list.

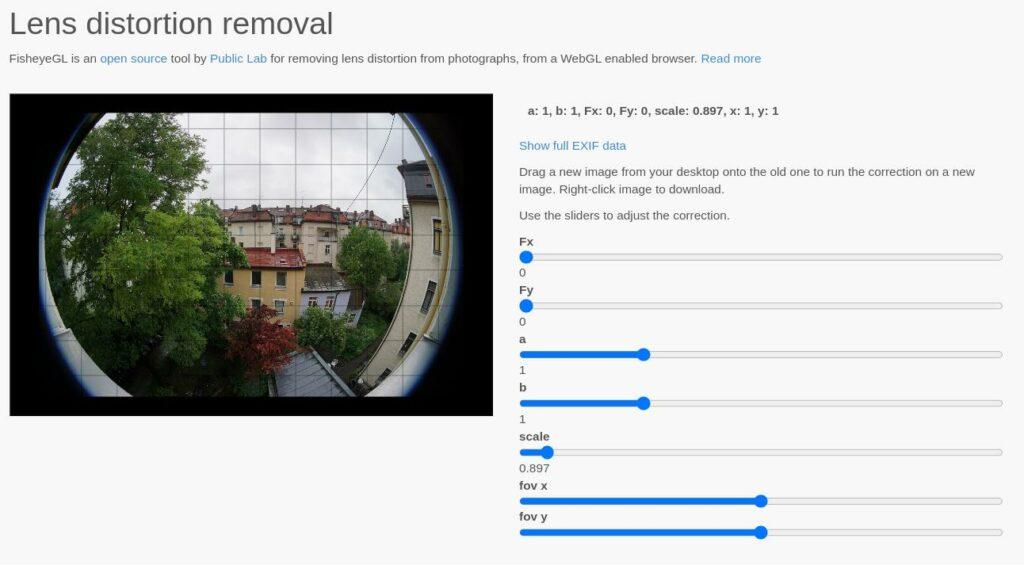
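As a rough sketch of how these mappings can be used, the snippet below encodes the standard textbook radial functions for each lens type and their inverses, with θ in radians measured from the optical axis. The dictionary and function names are our own, and the conversion from millimetres to pixels assumes square pixels and a known sensor width.

```python
import math

# Image radius r as a function of the angle theta and focal length f.
FORWARD = {
    "rectilinear": lambda theta, f: f * math.tan(theta),
    "stereographic": lambda theta, f: 2 * f * math.tan(theta / 2),
    "equidistant": lambda theta, f: f * theta,
    "equisolid": lambda theta, f: 2 * f * math.sin(theta / 2),
    "orthographic": lambda theta, f: f * math.sin(theta),
}

# Angle theta recovered from an image radius r.
INVERSE = {
    "rectilinear": lambda r, f: math.atan(r / f),
    "stereographic": lambda r, f: 2 * math.atan(r / (2 * f)),
    "equidistant": lambda r, f: r / f,
    "equisolid": lambda r, f: 2 * math.asin(r / (2 * f)),
    "orthographic": lambda r, f: math.asin(r / f),
}


def focal_length_in_pixels(focal_mm, sensor_width_mm, image_width_px):
    """Convert a focal length in millimetres to pixels (square pixels assumed)."""
    return focal_mm * image_width_px / sensor_width_mm


def fisheye_to_rectilinear_radius(r, f, lens_type):
    """Map a radius in the fisheye image to the radius a pinhole camera would give."""
    theta = INVERSE[lens_type](r, f)
    if theta >= math.pi / 2:
        raise ValueError("angles of 90 degrees or more have no rectilinear image")
    return FORWARD["rectilinear"](theta, f)


# Hypothetical setup: an 8 mm lens on a 23.6 mm wide sensor, 6000 px wide image.
f_px = focal_length_in_pixels(8.0, 23.6, 6000)
print(fisheye_to_rectilinear_radius(1000.0, f_px, "equidistant"))
```

Applying `fisheye_to_rectilinear_radius` to every pixel's distance from the image center is the core of a target-less correction: points keep their direction from the center and only their radius changes.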

Finally, we can quickly test this new knowledge in our browser using FisheyeGl, a JavaScript library that uses WebGL to correct fisheye (or barrel) distortion of images in the browser.

We’re going to use this APS-C, 75mm fisheye photograph for our experiment, which employs a very radical fisheye look:

Firstly, we can load the photograph onto the application:

We can see the different settings available and recognize some of them. However, two new ones appear: the parameters a and b are the radial and tangential distortion coefficients, respectively.

We can then apply the following settings:

Finally, we can extract the following photograph:

We can see how the picture is re-distorted to achieve straighter lines on the edges of the resulting image. We can also note that the artifacts at the edges of the screen are heavily warped, and that the dimensions of the resulting image are larger than those of the original. This is why we set the scale to 0.7.

By manually adjusting the radial and tangential distortion parameters, we could perhaps achieve a finer result. However, because this model treats the distortion as linear across the entire image, it limits how accurate the correction can be.

Alternatively, if we’re working with a specific camera and need a careful approximation of its undistorted image, it is best to use a target. Even some “pinhole” cameras may require this treatment for downstream tasks, as many lenses introduce distortion that the intrinsic parameters and linear distortion cannot model. Target-less calibration can only be so precise because the model we’re using is limited.

Therefore, we can have a much finer model by approximating radial and tangential distortion coefficients through calibration. A standard practice in computer vision is to observe a target with known structure and dimensions and use a method to obtain the position of the different calibration points in the image. This is called photogrammetric calibration.

Targets for color cameras are typically checkerboards like this one:

This particular method for camera calibration was introduced by Zhengyou Zhang in “A Flexible New Technique for Camera Calibration” in 1999.

We can say that each point in the checkerboard will generate the following (previous equation):

We can use this equation to define a homography matrix as follows:

We can now estimate a 3×3 homography matrix H instead of a 3×4 projection matrix. To solve for H, we need to observe at least 4 points, as H has 8 degrees of freedom (DoF) and each point contributes a pair of (x, y) coordinates.
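The point count can be illustrated with a minimal sketch that solves for a homography from exactly four correspondences. It fixes the bottom-right entry of the matrix to 1, which leaves 8 unknowns and 8 equations. This normalization, the function names, and the plain Gaussian elimination are our own assumptions; practical pipelines use many more points and a least-squares solver.

```python
def solve_linear(a, b):
    """Solve a * x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("degenerate point configuration")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x


def homography_from_4_points(src, dst):
    """Estimate a 3x3 homography (last entry fixed to 1) mapping src to dst."""
    a, b = [], []
    for (X, Y), (u, v) in zip(src, dst):
        # Each correspondence contributes two linear equations.
        a.append([X, Y, 1.0, 0.0, 0.0, 0.0, -u * X, -u * Y])
        b.append(u)
        a.append([0.0, 0.0, 0.0, X, Y, 1.0, -v * X, -v * Y])
        b.append(v)
    h = solve_linear(a, b)
    return [h[0:3], h[3:6], [h[6], h[7], 1.0]]


def apply_homography(H, point):
    """Map a 2D point through H and divide by the homogeneous coordinate."""
    X, Y = point
    w = H[2][0] * X + H[2][1] * Y + H[2][2]
    return ((H[0][0] * X + H[0][1] * Y + H[0][2]) / w,
            (H[1][0] * X + H[1][1] * Y + H[1][2]) / w)


# Synthetic check: four board corners viewed through a known homography.
corners_board = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 1.0)]
H_true = [[1.2, 0.1, 5.0], [-0.05, 0.9, 3.0], [0.1, 0.2, 1.0]]
corners_image = [apply_homography(H_true, p) for p in corners_board]
H_est = homography_from_4_points(corners_board, corners_image)
```

With noise-free input, `H_est` reproduces `H_true` up to floating-point error. With real corner detections, every additional point adds two more equations and makes the estimate more robust.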

To compute K from H, this method relies on four steps:

The most common approach to estimate non-linear (distortion) parameters of a lens is to model them using the following equations:

and

In this case, r represents the distance between the pixel in the image and the principal point.

Also, (x, y) is the point as projected by an ideal pinhole camera, and k1 and k2 are additional non-linear parameters.
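A minimal sketch of this kind of model, assuming the two-coefficient radial polynomial used in Zhang's method and points given in normalized image coordinates, could look as follows. The coefficient names `k1` and `k2`, the helper names, and the fixed-point inversion are illustrative assumptions.

```python
def distort_radial(x, y, k1, k2, cx=0.0, cy=0.0):
    """Apply radial distortion to an ideal (pinhole) point."""
    dx, dy = x - cx, y - cy
    r2 = dx * dx + dy * dy  # squared distance to the principal point
    factor = k1 * r2 + k2 * r2 * r2
    return x + dx * factor, y + dy * factor


def undistort_radial(xd, yd, k1, k2, cx=0.0, cy=0.0, iterations=20):
    """Invert the model by fixed-point iteration (adequate for mild distortion)."""
    x, y = xd, yd
    for _ in range(iterations):
        dx, dy = x - cx, y - cy
        r2 = dx * dx + dy * dy
        scale = 1.0 + k1 * r2 + k2 * r2 * r2
        x = cx + (xd - cx) / scale
        y = cy + (yd - cy) / scale
    return x, y


ideal = (0.3, -0.4)
distorted = distort_radial(*ideal, k1=-0.2, k2=0.05)
recovered = undistort_radial(*distorted, k1=-0.2, k2=0.05)
print(distorted)
print(recovered)  # close to the ideal point (0.3, -0.4)
```

A negative `k1` pulls points toward the center, which is the barrel-shaped distortion typical of wide-angle and fisheye lenses. Calibration amounts to searching for the coefficients that make the distorted projections of the target points match the detected corners.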

Lens distortion can finally be approximated by minimizing the following error function:

OpenCV is one of the most widely used computer vision libraries.

Following OpenCV’s calibration tutorials, we can automatically detect the corners of the checkerboard, display them on the image, and extract the intrinsic camera matrix:

By taking multiple pictures, each mapping out more calibration points in our camera sensor, we can more accurately estimate the camera’s intrinsic parameters along with the distortions in the image.

Additional information can be found in this tutorial.

If we were using a thermal camera, there are other calibration target types available that we could leverage. However, the procedure would be the same.

In this article, we reviewed different methods for programmatically correcting fisheye images.