Learn through the super-clean Baeldung Pro experience:

>> Membership and Baeldung Pro.

No ads, dark-mode and 6 months free of IntelliJ Idea Ultimate to start with.

Last updated: March 18, 2024

In this tutorial, we’ll show a matrix approach to do 2D convolution.

Convolution is fundamental in signal processing, computer vision, and machine learning. It applies a filter or kernel to an input image or signal and extracts relevant features.

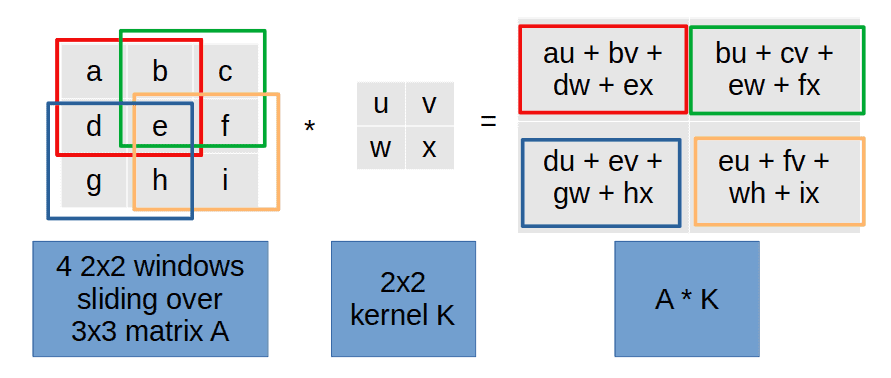

Typical implementations use a sliding-window operation where the kernel moves across the input image. For each placement of the kernel on the input image

, we compute the dot product of the kernel and the overlapping image pixels:

However, the kernel approach can be computationally expensive, especially for large images and kernels. An alternative is to compute convolution as matrix multiplication, which can be a more efficient approach.

Let be a matrix of size

and

a

kernel. For convenience, let

.

To calculate the convolution , we need to calculate the matrix-vector multiplication

where:

It’s a three-step process.

First, we define the matrixes, which represent all the horizontal sliding windows for kernel row

:

In the second step, we complete horizontal sliding by constructing a matrix

. We define

as the horizontal concatenation of matrix blocks

:

The final step is to represent vertical window sliding. We do that by padding with zero matrices (denoted

):

All that is now left to do is compute , where

is a row-vector form of the input matrix

.

Let’s construct the matrix for a

matrix

convolved with a

kernel

:

In this example, we have . First, we build the blocks

and

:

and get after concatenation:

The final step is to pad the matrix with blocks of zeros:

The zeros in the matrix blocks are highlighted in red, and

is exactly of the size

.

Now, let’s rewrite as

:

Finally, we multiply and

:

Since , we obtain the result of the convolution

as a

matrix by simplifying and reshaping

:

Let’s say and

are:

The corresponding ,

, and

are as follows:

The result of the convolution is:

We can reshape :

Matrix multiplication is easier to compute compared to a 2D convolution because it can be efficiently implemented using hardware-accelerated linear algebra libraries, such as BLAS (Basic Linear Algebra Subprograms). These libraries have been optimized for many years to achieve high performance on a variety of hardware platforms.

In addition, the memory access patterns for matrix multiplication are more regular and predictable compared to 2D convolution, which can lead to better cache utilization and fewer memory stalls. This can result in faster execution times and better performance.

In this article, we showed how to compute a convolution as a matrix-vector multiplication. The approach can be faster than the usual one with sliding since matrix operations have fast implementations on modern computers.