1. Overview

In this tutorial, let’s discuss the PostgreSQL error FATAL: sorry, too many clients already. The PostgreSQL server returns this error when it rejects a new connection request because all the connection slots available to clients are already in use.

We’ll explore its causes, troubleshoot the issue, and understand some guidelines to prevent it.

2. How, When and Why

PostgreSQL limits the number of concurrent connections it accepts. When all the available connections are in use, the server can’t serve new ones and rejects them with the error FATAL: sorry, too many clients already.

Let’s look at how, when, and why this happens with an example in which four client applications connect to the same PostgreSQL database.

Most applications create their connection pool when they boot. Suppose the database administrator has configured the PostgreSQL server for a maximum of 90 connections. We must remember that the server reserves some of these slots for superusers (superuser_reserved_connections, 3 by default), so even fewer connections are actually available to regular clients. The deployment team configures each client application with a connection pool size of 30.

Now let’s say the deployment team brings up the client applications one after another, starting with Client-1. By the time Client-4 starts, Client-1, Client-2, and Client-3 have already claimed up to 90 connections between them, leaving none for Client-4. Hence, when Client-4 requests 30 more connections, the server rejects the request with the error FATAL: sorry, too many clients already.
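To make the arithmetic concrete, here’s a minimal Python sketch of this scenario. It assumes that each pool opens all of its connections at startup and that 3 slots are held back (the default value of superuser_reserved_connections). The function name `start_clients` is hypothetical.

```python
def start_clients(max_connections, reserved, pool_sizes):
    """Simulate clients starting in order, each opening its full pool at boot.

    Returns a list of (client_name, granted, rejected) tuples.
    Assumption: the server grants slots until max_connections - reserved
    is reached and refuses every further request from regular clients.
    """
    available = max_connections - reserved  # slots usable by regular clients
    in_use = 0
    results = []
    for i, pool_size in enumerate(pool_sizes, start=1):
        granted = min(pool_size, available - in_use)
        in_use += granted
        results.append((f"Client-{i}", granted, pool_size - granted))
    return results


for name, granted, rejected in start_clients(90, 3, [30, 30, 30, 30]):
    status = "OK" if rejected == 0 else f"{rejected} rejected"
    print(f"{name}: {granted} granted, {status}")
```

With these inputs, Client-3 gets only 27 of its 30 connections because of the reserved slots, and Client-4 gets none.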

The error can also occur when developers connect to the PostgreSQL server with database tools such as psql, pgAdmin, or DBeaver. These tools need connections too, so when none are left, they fail with the same error.

3. How to Troubleshoot

First, let’s check the maximum number of connections the server allows by running a query in the PostgreSQL database:

```sql
SHOW max_connections;
```

By default, it’s set to 100, but it can be configured higher. We set it through the max_connections runtime setting in the postgresql.conf file on the database server. The file’s location varies by installation; running SHOW config_file reports it:

```shell
/var/lib/postgresql/data # grep max_connections postgresql.conf
max_connections = 100			# (change requires restart)
```

As the comment in the file notes, a new value takes effect only after a server restart. However, raising the limit isn’t a permanent solution: every connection runs its own backend process and consumes server memory. So we should first investigate why so many connections are in use.

When client applications report this error, we need to find out how many connections are open on the database server. Each connection is served by its own backend process, and PostgreSQL tracks these processes in the pg_stat_activity view. We can query it to fetch the active and idle connections:

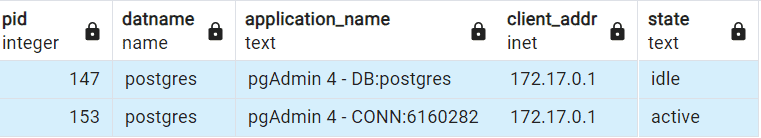

select pid, datname, application_name, client_addr, state

from pg_stat_activity

where state in ('idle', 'active')Let’s take a look at a sample output showing the connection details:

Let’s understand the meaning of the columns in the output:

| Column | Description |
|---|---|
| pid | Process ID of the backend process |
| datname | Name of the database this backend is connected to |
| application_name | Name of the application connected to this backend |
| client_addr | IP address of the client connected to this backend (null for connections over a Unix-domain socket) |
| state | Current overall state of this backend; possible values include `active`, `idle`, `idle in transaction`, `idle in transaction (aborted)`, `fastpath function call`, and `disabled` |
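When the output is long, it helps to aggregate it. The following Python sketch counts connections per application and state so that the biggest consumers stand out. It assumes the rows of the pg_stat_activity query have already been fetched with a database driver as tuples in the query’s column order. The function name and the sample rows are hypothetical.

```python
from collections import Counter


def count_by_application(rows):
    """Count connections per (application_name, state), largest first.

    Assumes each row is a tuple in the column order of the pg_stat_activity
    query: (pid, datname, application_name, client_addr, state).
    """
    counts = Counter()
    for _pid, _datname, application_name, _client_addr, state in rows:
        # Clients that never set application_name report an empty string.
        counts[(application_name or "<unset>", state)] += 1
    return counts.most_common()


# Hypothetical rows, for illustration only.
sample_rows = [
    (147, "appdb", "client-1", "10.0.0.11", "idle"),
    (148, "appdb", "client-1", "10.0.0.11", "idle"),
    (151, "appdb", "client-2", "10.0.0.12", "active"),
]
for (application, state), total in count_by_application(sample_rows):
    print(f"{application:<10} {state:<8} {total}")
```

The same aggregation can also be done directly in SQL with a count(*) query grouped by application_name and state.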

After identifying the active and idle connections, we can find the ones that aren’t needed and shut down the client applications or tools holding them. Alternatively, we can forcibly terminate sessions from the database side using the pg_terminate_backend system administration function:

```sql
SELECT pg_terminate_backend(147);
```

This terminates the backend process with ID 147 and returns true if it succeeds. The calling role must be a superuser, have the privileges of pg_signal_backend, or be a member of the role that owns the target session.
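Rather than picking PIDs by hand, we can select candidates by how long they’ve been idle. This Python sketch assumes the fetched rows also include pg_stat_activity’s state_change column (the time the state last changed) as a timezone-aware datetime. The function name, the sample rows, and the 10-minute threshold are hypothetical. The sketch only prints the statements; a privileged role would still have to run them through a driver.

```python
from datetime import datetime, timedelta, timezone


def idle_pids_to_terminate(rows, now, max_idle):
    """Return PIDs whose session has been 'idle' for longer than max_idle.

    Assumes rows of (pid, state, state_change) tuples, where state_change
    is a timezone-aware datetime taken from pg_stat_activity.
    """
    return [
        pid
        for pid, state, state_change in rows
        if state == "idle" and now - state_change > max_idle
    ]


now = datetime.now(timezone.utc)
# Hypothetical rows, for illustration only.
rows = [
    (147, "idle", now - timedelta(hours=2)),
    (151, "active", now - timedelta(hours=3)),
    (152, "idle", now - timedelta(seconds=30)),
]
for pid in idle_pids_to_terminate(rows, now, timedelta(minutes=10)):
    print(f"SELECT pg_terminate_backend({pid});")
```

The same filter can be expressed in SQL by adding state = 'idle' AND state_change < now() - interval '10 minutes' to the WHERE clause.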

4. How to Prevent

The primary step to prevent the error is to benchmark the application to determine how many database connections it actually needs. Based on the results, we can size and configure the database server for the peak connection requirement, and the deployment team can set an appropriate connection pool size in each application.

Additionally, we should be careful when setting the connection pool size of applications in environments where auto-scaling is enabled. When new instances are spawned automatically, as in a Kubernetes cluster, each one opens its own connection pool, so the total number of connections can exceed what the database server allows.
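A quick check is to multiply the pool size by the maximum number of replicas the autoscaler may create. The Python sketch below computes the largest per-replica pool size that still fits, assuming every replica fills its pool. The function name, the headroom kept free for tools, and the sample numbers are hypothetical.

```python
def max_pool_size_per_replica(max_connections, reserved, headroom, max_replicas):
    """Largest per-replica pool size that still fits when every replica runs.

    reserved: slots PostgreSQL keeps back, e.g. superuser_reserved_connections.
    headroom: slots deliberately left free for psql, pgAdmin, migrations, etc.
    """
    if max_replicas < 1:
        raise ValueError("max_replicas must be at least 1")
    usable = max_connections - reserved - headroom
    if usable < max_replicas:
        raise ValueError("not enough connections for even one per replica")
    return usable // max_replicas


# Hypothetical numbers: 100 connections, 3 reserved, 7 kept for tools, up to 6 replicas.
print(max_pool_size_per_replica(100, 3, 7, 6))
```

If the result is too small for the workload, the options are to lower the autoscaler’s replica limit or to raise max_connections on the server.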

Developers should set proper connection timeouts in their applications and close connections as soon as they’re done with them. This way, connections aren’t held longer than necessary and are promptly returned to the pool or released on the database server.
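To illustrate both practices, here’s a minimal, self-contained Python sketch of a bounded pool. Acquiring a connection gives up after a timeout instead of waiting forever, and a context manager guarantees the connection goes back to the pool even if the code using it raises. The `BoundedPool` class and its `connect` factory are hypothetical stand-ins. Real applications would configure the equivalent settings in their driver’s or framework’s pool.

```python
import queue
from contextlib import contextmanager


class BoundedPool:
    """A tiny fixed-size pool; `connect` is any zero-argument factory."""

    def __init__(self, connect, size):
        self._idle = queue.Queue(maxsize=size)
        for _ in range(size):
            self._idle.put(connect())

    @contextmanager
    def connection(self, timeout):
        try:
            # Wait at most `timeout` seconds for a free connection.
            conn = self._idle.get(timeout=timeout)
        except queue.Empty:
            raise TimeoutError(f"no free connection within {timeout}s") from None
        try:
            yield conn
        finally:
            # Always hand the connection back, even if the block raised.
            self._idle.put(conn)

    def available(self):
        return self._idle.qsize()


pool = BoundedPool(connect=object, size=2)  # object() stands in for a real connection
with pool.connection(timeout=1.0) as conn:
    print("in use; free connections:", pool.available())
print("returned; free connections:", pool.available())
```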

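Developers should exit database management tools such as pgAdmin and DBeaver once they finish their work. It’s also good practice to configure these tools to close connections and sessions after a period of inactivity. On the server side, PostgreSQL can end abandoned sessions automatically through the idle_in_transaction_session_timeout setting and, from PostgreSQL 14 onward, idle_session_timeout.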

Infrastructure and network engineers should restrict network access to the database server, for example with firewall rules. Database administrators can additionally control which source IP addresses may connect through the host-based authentication rules in pg_hba.conf.
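pg_hba.conf matches client addresses against CIDR ranges. As a complementary audit, this Python sketch flags client_addr values from pg_stat_activity that fall outside an allowlist. The allowed networks, the sample addresses, and the function name are hypothetical, and the check doesn’t replace pg_hba.conf or firewall rules. It assumes addresses arrive as strings and skips null entries, which pg_stat_activity reports for Unix-domain socket connections.

```python
import ipaddress


def unexpected_clients(client_addrs, allowed_cidrs):
    """Return client addresses that fall outside every allowed network."""
    networks = [ipaddress.ip_network(cidr) for cidr in allowed_cidrs]
    flagged = []
    for addr in client_addrs:
        if addr is None:  # no client_addr, e.g. a Unix-domain socket connection
            continue
        ip = ipaddress.ip_address(addr)
        # An IPv4 address is never "in" an IPv6 network, and vice versa.
        if not any(ip in net for net in networks):
            flagged.append(addr)
    return flagged


# Hypothetical allowlist and addresses, for illustration only.
print(unexpected_clients(["10.0.1.15", "192.168.7.20", None], ["10.0.0.0/16"]))
```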

5. Conclusion

In this article, we discussed the PostgreSQL error FATAL: sorry, too many clients already.

Prevention is better than cure, so we should benchmark applications to determine their database connection requirements. Furthermore, we should configure the operating environment to allow only the connections the database actually needs.