1. Introduction

In this tutorial, we’ll present the Jump Search algorithm.

2. Search Problems

In a classical search problem, we have a value and a sorted array

with

elements. Our task is to find the index

of

in

.

If is a linked list, it doesn’t have indices. Instead, each element is a

pair, so we’re looking for the item whose key is

. We’ll denote the

th element of the list

as

just as we do with arrays.

However, if is an array, we can access any element in an

time (assuming that

fits into the main memory). In contrast, if

is a list, we need to follow

pointers to reach

, so the complexity of access is

. In the worst case, we want to reach the end of the list, so we traverse all the

elements to get to it, which takes

time.

That’s why an array search algorithm such as Binary Search isn’t suitable for singly-linked lists. It checks the elements of back and forth, but going back isn’t an

operation when

is a list, and checking

after

(

) takes more than a constant time.

3. Jump Search

Jump Search is a list search algorithm but can handle arrays equally well. It assumes that its input is a sorted linked list. That means that each element’s key should be

than the next one’s.

The algorithm partitions into the sub-lists of the same size,

,

,

,

and checks them iteratively until locating the one whose boundaries contain

:

Once the sub-list is found, the algorithm checks its elements one by one until finding or reaching the last one in the sub-list if

isn’t there.

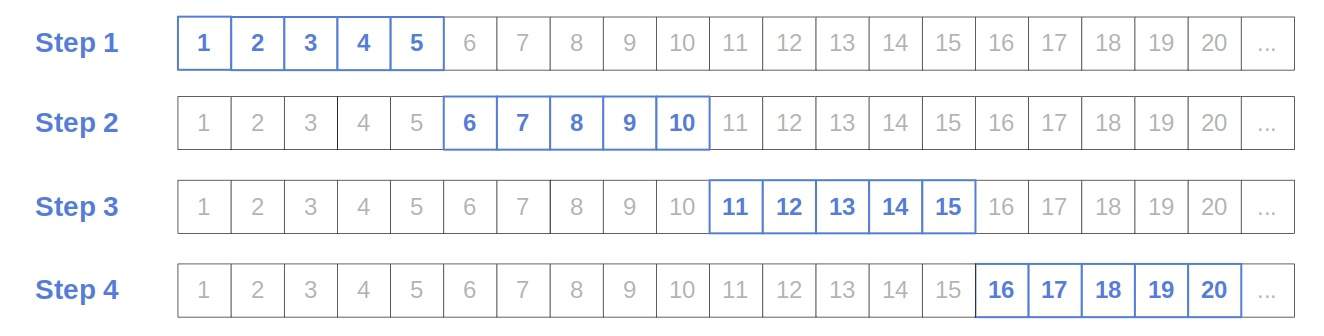

The name comes from the jumps of size between the steps. For instance, this is how the algorithm runs when

:

3.1. Pseudocode

Here’s the pseudocode:

We can set to any value we like, but the algorithm’s complexity depends on our choice of

.

3.2. Complexity Analysis

Let’s say that our list has elements. In the worst case, we check all the

sub-lists’ boundaries and compare all the elements in the last sub-list to

. So, we perform

comparisons.

The minimum of is

when

. So, if we set

to

, Jump Search will run in

time with respect to the number of comparisons.

However, to reach the new sub-list’s end, we need to follow pointers from the previous step’s

. As a result, we traverse all

nodes in the worst case. So, the algorithm has an

worst-case time complexity if we consider node traversals. However, if a comparison is more complex than following a pointer, we can say that the complexity is

.

3.3. Arrays

As we said before, Jump Search can handle arrays too. We prefer it over logarithmic Binary Search when going back (e.g., from testing if to checking whether

) is expensive or impossible.

That can happen when is so large that it can’t fit into the working memory or is a stream of data we’re downloading from an external source. In the former case, requesting

after

might require loading new blocks from the disk into RAM. In the latter case, downloading individual elements is expensive since we get them over a network, so going back and forth like in Binary Search would be slow.

4. Conclusion

In this article, we presented the Jump Search algorithm. We use it to search singly-linked lists in time, but it can also search arrays.