1. Overview

In today’s deployment and ops world, it’s unusual to run our services directly on our physical servers. More often, we choose between virtual hosts (on-premises or in the cloud) and containers, such as those run by Docker.

This move toward greater abstraction eventually calls for Infrastructure as Code (IaC) and container orchestration tools such as Kubernetes. But we can realize some of the advantages in smaller steps.

In this tutorial, we’ll explore virtualization and containerization. We’ll compare the technical differences and trade-offs.

2. Why Abstraction?

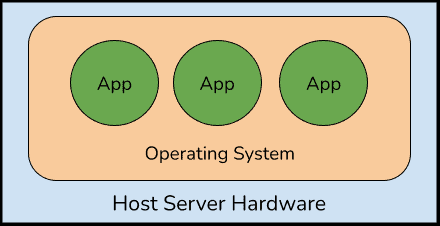

In its simplest configuration, we host applications and services in an operating system (Linux, Windows, etc.) that has been installed on a server computer:

This remains common in the server rooms of countless small businesses.

However, widespread virtualization in data centers and in the cloud began in the mid-2000s. Container use, led by Docker, became standardized and gained traction in the mid-2010s.

What continues to drive the adoption of these technologies?

We gain these advantages when we run our services this way:

Flexibility and scalability: If we need to expand or shrink our server’s capabilities, we can easily change the configuration or copy it to another host. If we need more services running, we can spin up more virtual machines or cloud instances, or add containers to our pods.

Resource utilization: If we need lots of servers to handle something, but only once a month, we no longer need to keep those servers standing idle the rest of the time. We can allocate our hardware (computing and storage) resources dynamically, as we need them.

Process isolation: Instead of keeping track of a variety of services installed on a monolithic system, we can keep each service’s code, configuration, and dependencies separate. That means we won’t break one thing by updating another. Also, we can keep one hungry application from slowing down all the others.

Configuration and change management: If we need to change an important system, we can create a snapshot of a virtual machine. If things go very wrong, we can quickly back out our changes and be no worse than we were before. Also, documenting configuration and changes becomes much more straightforward when we address one service at a time. And when we move to Infrastructure as Code, writing our configuration with a tool like Terraform or Ansible, these definitions help us avoid any forgotten or “just for now” infrastructure changes.

3. Virtualization Basics

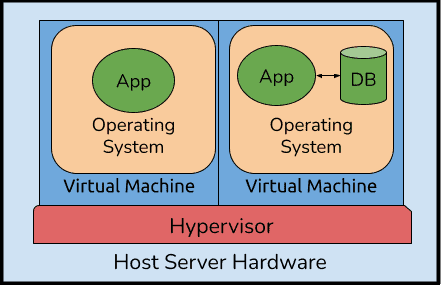

Virtualization refers to running a virtual machine in software. The “host” system runs virtualization software, usually called a hypervisor. “Guest” systems run in the hypervisor. The “guest” operating system has little or no idea it is not running directly on the hardware (“bare metal”):

Some examples of virtualization software:

- Linux’s KVM

- VMware Workstation or ESXi

- XenServer

- VirtualBox – it’s free and good for desktop use

- DOSBox – it’s an emulator famous for old games, but also able to run full operating systems such as BeOS

- Microsoft’s Hyper-V

We can use these tools to serve up applications, such as a wiki and its database, or a network monitoring suite.

We can also use these tools on our development desktops to safely experiment with other environments.

In the mid-2000s, Intel and AMD added virtualization extensions to their CPUs (Intel VT-x and AMD-V, also known as SVM mode). These are standard features on today’s servers; however, on a desktop, we may have to enable them in our BIOS or UEFI settings. This hardware assistance greatly simplifies and speeds up the hypervisor.
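As a quick way to see whether a Linux host advertises these extensions, we can look for the `vmx` (Intel) or `svm` (AMD) CPU flags in `/proc/cpuinfo`. The following is a minimal sketch, assuming an x86 Linux system; the function name `virtualization_flags` is our own, and the check only shows what the CPU reports, not whether the firmware setting is enabled:

```python
def virtualization_flags(cpuinfo_text: str) -> set[str]:
    """Return the hardware virtualization flags found in /proc/cpuinfo-style text."""
    wanted = {"vmx", "svm"}  # vmx = Intel VT-x, svm = AMD-V
    found = set()
    for line in cpuinfo_text.splitlines():
        key, _, value = line.partition(":")
        # Only the "flags" line lists CPU feature flags on x86 Linux.
        if key.strip() == "flags":
            found |= wanted & set(value.split())
    return found


if __name__ == "__main__":
    try:
        with open("/proc/cpuinfo") as f:
            flags = virtualization_flags(f.read())
    except OSError:
        flags = set()  # not Linux, or the file is not readable
    print("Virtualization extensions:", ", ".join(sorted(flags)) or "none reported")
```

If neither flag shows up, the CPU may lack the feature, or we may already be inside a guest whose hypervisor hides it.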

3.1. What Can Virtual Machines Do?

We gain portability when we work with virtual machine images instead of “bare metal” server installations. Virtual machine images come in well-known formats (such as OVF), so we can move them from one host machine to another as needed. Many hypervisors even support live migration, where a virtual machine continues to run as it transfers seamlessly from one physical machine to another.

Cloud services such as AWS, GCP, and Azure provide much more than virtual OS installations. But their foundations rest upon virtual machine instances. We don’t have to go buy new hardware and install Ubuntu Server ourselves. We can just specify the RAM and CPU resources we want to pay for, ask for a particular OS, and off we go.

A virtual machine, like any full installation of an operating system, must boot up and provide all of its own functionality. Each may run a completely different Linux kernel or distribution. We may run another OS entirely, such as Windows or a BSD. This keeps our guests isolated from each other, which both aids security and simplifies configuration and dependencies. It is also heavier, taking up more disk space, memory, and CPU.

From a user’s point of view, a virtual machine appears exactly the same as a physical machine.

4. Container Basics

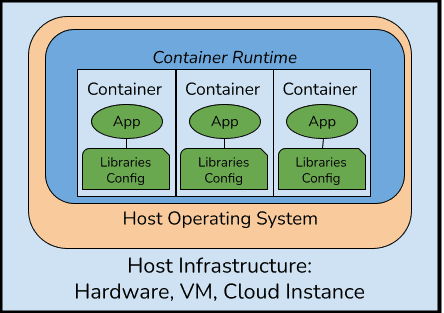

A container engine (like Docker) runs on a full OS (like Linux). The OS provides the kernel and related services (filesystems, networking, process management):

A container provides everything else needed to run a particular service:

- Code or application binaries

- Dependencies such as libraries

- Possibly an interpreter or runtime, such as Python or a JRE

- Configuration and localization, such as environment settings or user accounts

All of these elements are pinned to a specific version. Think of a package-lock.json file for Node.js, or requirements.txt for pip: the container uses commands and libraries from a specific image. We can ask for the latest version of an image, or we can specify an exact version. That way, when we use a container, we are also specifying our software’s environment (aside from the kernel version, which is determined by the host OS).
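To make the pinning idea concrete, here is a small sketch of how an image reference such as `python:3.12-slim` breaks down into a repository, a tag, and an optional digest. The `ImageRef` type and `parse_image_ref` function are hypothetical helpers written for this illustration, and the parsing is simplified (no validation). It relies on one Docker convention: when we give neither a tag nor a digest, the tag defaults to `latest`.

```python
from typing import NamedTuple, Optional


class ImageRef(NamedTuple):
    repository: str
    tag: Optional[str]
    digest: Optional[str]


def parse_image_ref(ref: str) -> ImageRef:
    """Split 'repo[:tag][@digest]' into its parts (simplified, no validation)."""
    name, _, digest = ref.partition("@")
    last_segment = name.rsplit("/", 1)[-1]
    if ":" in last_segment:
        # Only a colon in the final path segment marks a tag; an earlier
        # colon belongs to a registry port such as localhost:5000.
        repository, _, tag = name.rpartition(":")
    else:
        repository, tag = name, None
    if tag is None and not digest:
        tag = "latest"  # Docker's default when no tag or digest is given
    return ImageRef(repository, tag, digest or None)


print(parse_image_ref("alpine"))
print(parse_image_ref("python:3.12-slim"))
print(parse_image_ref("localhost:5000/app:1.2"))
```

An exact tag or digest gives us a repeatable environment, whereas `latest` can point to a different image the next time we pull.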

4.1. The Role of Docker

For many of us, “Docker” and “containers” are synonyms. And while we know other container engines exist (such as Red Hat’s Podman), we can learn the essentials of container architecture and use from the Docker ecosystem.

Docker also hosts a huge library of container images from which we can build and run our own local containers.

Container images work like templates for our running containers. They become containers when the container runtime loads and runs them. Containers run on top of whatever kernel and operating system we are using and add in everything else needed. They are often built upon a minimal source image, such as the popular “alpine.”

Our main focus in using a container image is to bundle a particular app or service and all its attendant dependencies. For example, we could deploy a Java WAR archive along with its server.

In Docker lingo, we pull an image from Docker Hub. Then we run it locally on our container engine or runtime (containerd, Docker’s engine, and CRI-O are some examples).

4.2. Union or Overlay Filesystems

Containers are drastically smaller than virtual machines. This translates not only to less storage but also to near-instant startup times.

As already mentioned, container images can selectively include only the elements of an operating system needed for their application.

Another secret to their small size is that containers don’t each carry a complete filesystem of their own. Instead, they layer their files on top of a base image’s filesystem. The base remains read-only, and any changes happen only in the local layer inside the container.

If a system runs containers descended from the same base image, it only needs one copy of the read-only layer. More space savings!
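The layering described above can be modeled in a few lines. This is only a toy sketch that uses Python’s `collections.ChainMap` to stand in for a union filesystem; the file paths, contents, and the `start_container` helper are made up for illustration, and real overlay filesystems work on files and directories rather than dictionary entries:

```python
from collections import ChainMap
from types import MappingProxyType
from typing import Mapping

# Read-only base layer shared by every container (path -> file content).
base_image = MappingProxyType({
    "/etc/os-release": "NAME=ExampleOS",
    "/bin/app": "app v1",
})


def start_container(base: Mapping[str, str]) -> ChainMap:
    """Stack a fresh, empty writable layer on top of the shared base."""
    return ChainMap({}, base)


web = start_container(base_image)
worker = start_container(base_image)

# Reads fall through to the base layer; writes land in the top layer only.
web["/etc/app.conf"] = "port=8080"
web["/bin/app"] = "app v2"

print(web["/bin/app"])         # the web container sees its own copy
print(worker["/bin/app"])      # the worker still sees the base version
print(base_image["/bin/app"])  # the base layer is untouched
```

Both containers hold a reference to the same base mapping, so the shared content exists only once, while each container’s changes stay in its own top layer.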

4.3. Hosted Containers

We can use containers to keep our development, testing, and production environments in sync. No more “but it works on my machine!”

When using them in production, we can pull, customize, and run an image ourselves as part of the deployment. But even if we do this on a cloud instance, it still leaves us with a lot of management and ops work.

Cloud providers support working directly with our container images. For example, AWS Fargate accepts an image and runs it once we’ve configured a few resources.

Kubernetes, the de facto standard for container orchestration, adds high availability and self-healing to our containerized applications. It also adds much more complexity. But while we may not think we need k8s yet, preparing for it by containerizing our applications now helps us be ready to scale in the future.
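The idea behind self-healing is that an orchestrator keeps comparing the state we asked for with the state it observes and then corrects any difference. The sketch below is a deliberately tiny model of that loop, not how Kubernetes is implemented; the `reconcile` function and the replica names are hypothetical:

```python
def reconcile(desired: int, running: list[str], prefix: str = "web") -> list[str]:
    """Return a replica list matching the desired count.

    Toy model: surplus replicas are dropped from the end, and missing
    replicas are added under new, unused names.
    """
    replicas = list(running[:desired])
    counter = 0
    while len(replicas) < desired:
        name = f"{prefix}-{counter}"
        if name not in replicas:
            replicas.append(name)
        counter += 1
    return replicas


running = ["web-0", "web-1", "web-2"]
running.remove("web-1")          # simulate a crashed container
running = reconcile(3, running)  # the control loop restores the count
print(running)
```

Because containers are cheap to start and replace, this kind of automatic replacement is practical in a way it rarely is for hand-built servers.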

5. Conclusion

Virtual machines and containers solve similar problems. But virtual machines do so with much more overhead, since each instance must image and run an entire operating system.

Containers run under a single operating system or in a group via orchestration. They provide easily replaced lightweight services.

In this article, we’ve compared running a full virtual machine to running our applications in containers.

Virtual machines may be more useful for longer-term installations, which also need flexibility. Containers work well for almost everything else.